A sprawling academic cheating network turbocharged by Google Ads that has generated nearly $25 million in revenue has curious ties to a Kremlin-connected oligarch whose Russian university builds drones for Russia’s war against Ukraine.

The Nerdify homepage.

The link between essay mills and Russian attack drones might seem improbable, but understanding it begins with a simple question: How does a human-intensive academic cheating service stay relevant in an era when students can simply ask AI to write their term papers? The answer – recasting the business as an AI company – is just the latest chapter in a story of many rebrands that link the operation to Russia’s largest private university.

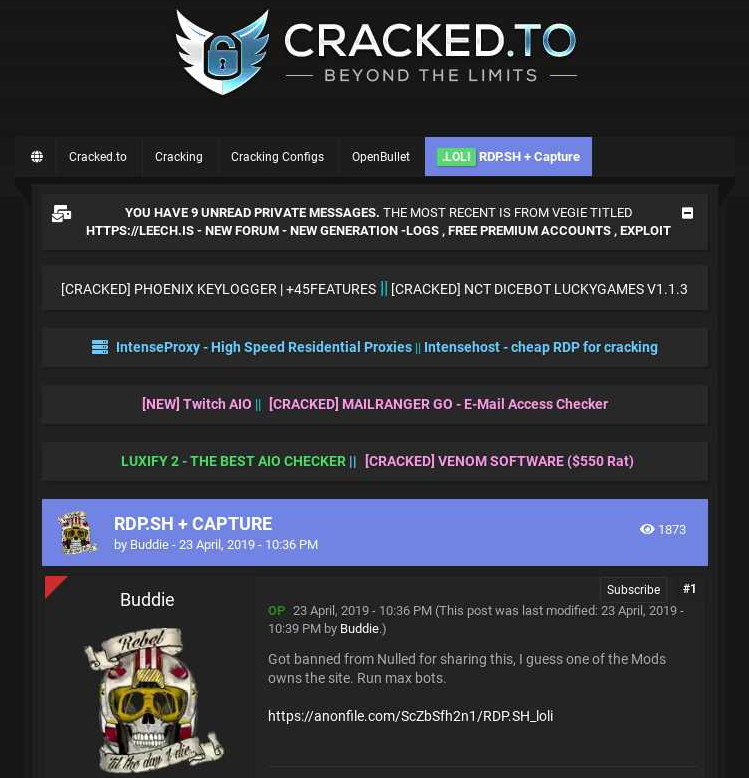

Search in Google for any terms related to academic cheating services — e.g., “help with exam online” or “term paper online” — and you’re likely to encounter websites with the words “nerd” or “geek” in them, such as thenerdify[.]com and geekly-hub[.]com. With a simple request sent via text message, you can hire their tutors to help with any assignment.

These nerdy and geeky-branded websites frequently cite their “honor code,” which emphasizes they do not condone academic cheating, will not write your term papers for you, and will only offer support and advice for customers. But according to This Isn’t Fine, a Substack blog about contract cheating and essay mills, the Nerdify brand of websites will happily ignore that mantra.

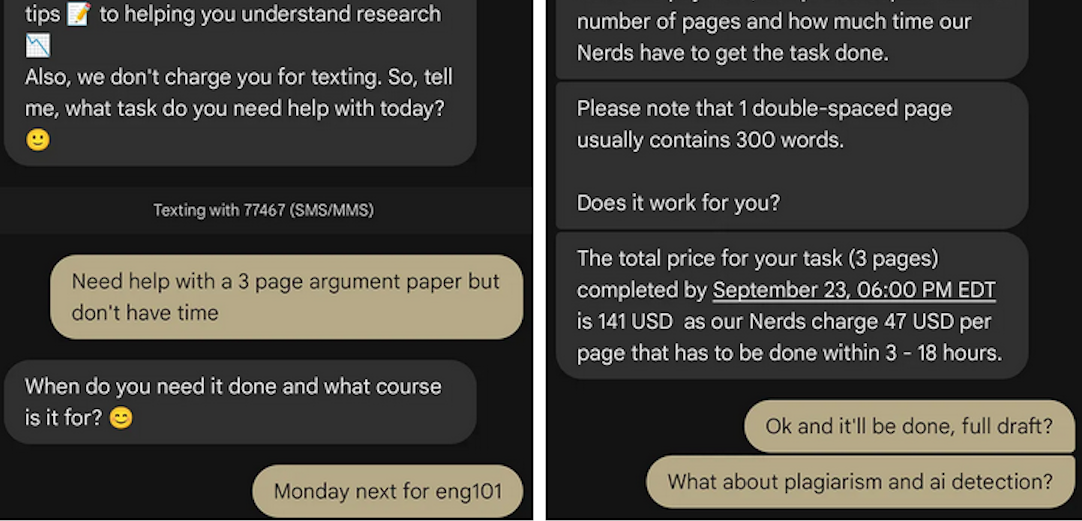

“We tested the quick SMS for a price quote,” wrote This Isn’t Fine author Joseph Thibault. “The honor code references and platitudes apparently stop at the website. Within three minutes, we confirmed that a full three-page, plagiarism- and AI-free MLA formatted Argumentative essay could be ours for the low price of $141.”

A screenshot from Joseph Thibault’s Substack post shows him purchasing a 3-page paper with the Nerdify service.

Google prohibits ads that “enable dishonest behavior.” Yet, a sprawling global essay and homework cheating network run under the Nerdy brands has quietly bought its way to the top of Google searches – booking revenues of almost $25 million through a maze of companies in Cyprus, Malta and Hong Kong, while pitching “tutoring” that delivers finished work that students can turn in.

When one Nerdy-related Google Ads account got shut down, the group behind the company would form a new entity with a front-person (typically a young Ukrainian woman), start a new ads account along with a new website and domain name (usually with “nerdy” in the brand), and resume running Google ads for the same set of keywords.

UK companies belonging to the group that have been shut down by Google Ads since Jan 2025 include:

–Proglobal Solutions LTD (advertised nerdifyit[.]com);

–AW Tech Limited (advertised thenerdify[.]com);

–Geekly Solutions Ltd (advertised geekly-hub[.]com).

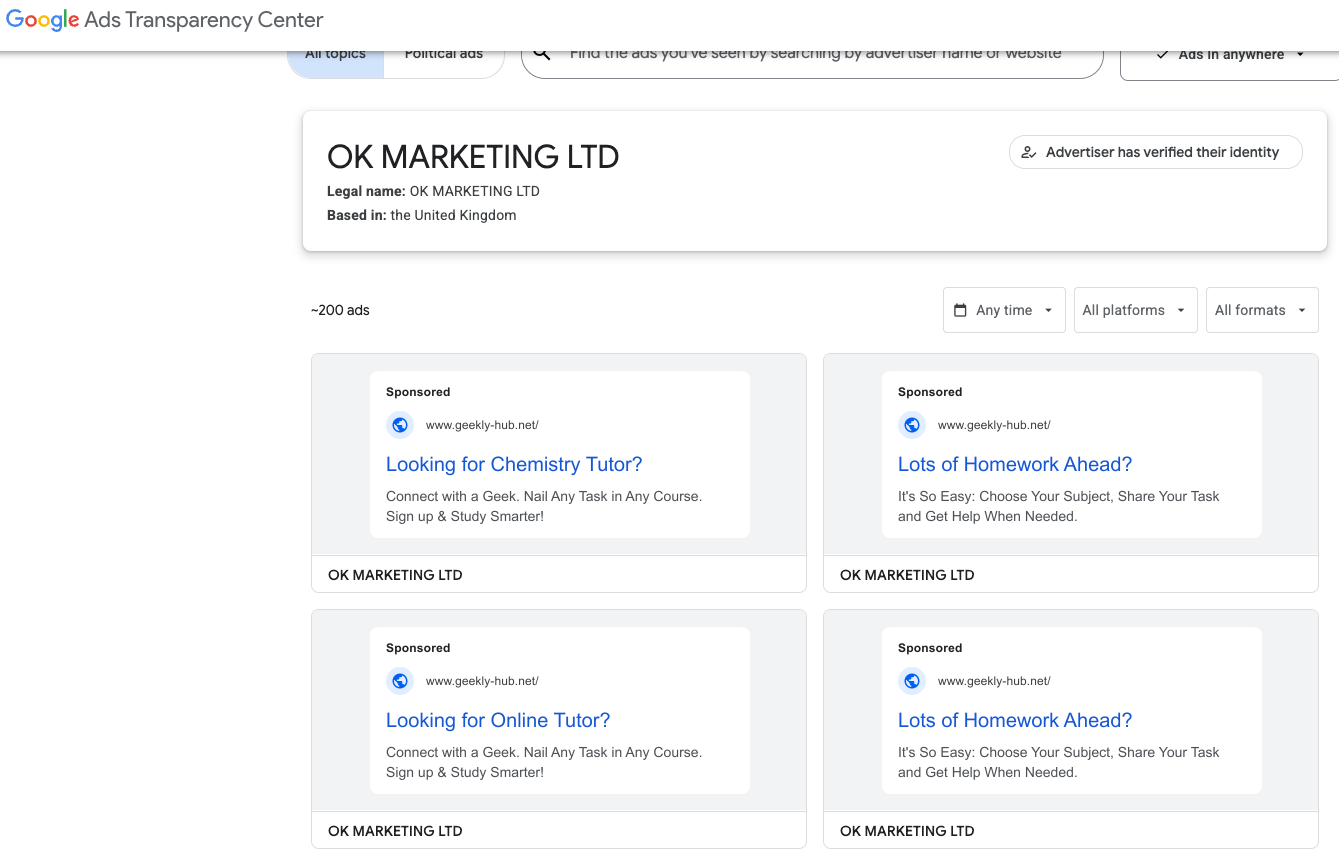

Currently active Google Ads accounts for the Nerdify brands include:

–OK Marketing LTD (advertising geekly-hub[.]net), formed in the name of Olha Karpenko, a young Ukrainian woman;

–Two Sigma Solutions LTD (advertising litero[.]ai), formed in the name of Olekszij (Alexey) Pokatilo.

Google’s Ads Transparency page for current Nerdify advertiser OK Marketing LTD.

Mr. Pokatilo has been in the essay-writing business since at least 2009, operating a paper-mill enterprise called Livingston Research alongside Alexander Korsukov, who is listed as an owner. According to a lengthy account from a former employee, Livingston Research mainly farmed its writing tasks out to low-cost workers from Kenya, Philippines, Pakistan, Russia and Ukraine.

Pokatilo moved from Ukraine to the United Kingdom in Sept. 2015 and co-founded a company called Awesome Technologies, which pitched itself as a way for people to outsource tasks by sending a text message to the service’s assistants.

The other co-founder of Awesome Technologies is 36-year-old Filip Perkon, a Swedish man living in London who touts himself as a serial entrepreneur and investor. Years before starting Awesome together, Perkon and Pokatilo co-founded a student group called Russian Business Week while the two were classmates at the London School of Economics. According to the Bulgarian investigative journalist Christo Grozev, Perkon’s birth certificate was issued by the Soviet Embassy in Sweden.

Alexey Pokatilo (left) and Filip Perkon at a Facebook event for startups in San Francisco in mid-2015.

Around the time Perkon and Pokatilo launched Awesome Technologies, Perkon was building a social media propaganda tool called the Russian Diplomatic Online Club, which Perkon said would “turbo-charge” Russian messaging online. The club’s newsletter urged subscribers to install in their Twitter accounts a third-party app called Tweetsquad that would retweet Kremlin messaging on the social media platform.

Perkon was praised by the Russian Embassy in London for his efforts: During the contentious Brexit vote that ultimately led to the United Kingdom leaving the European Union, the Russian embassy in London used this spam tweeting tool to auto-retweet the Russian ambassador’s posts from supporters’ accounts.

Neither Mr. Perkon nor Mr. Pokatilo replied to requests for comment.

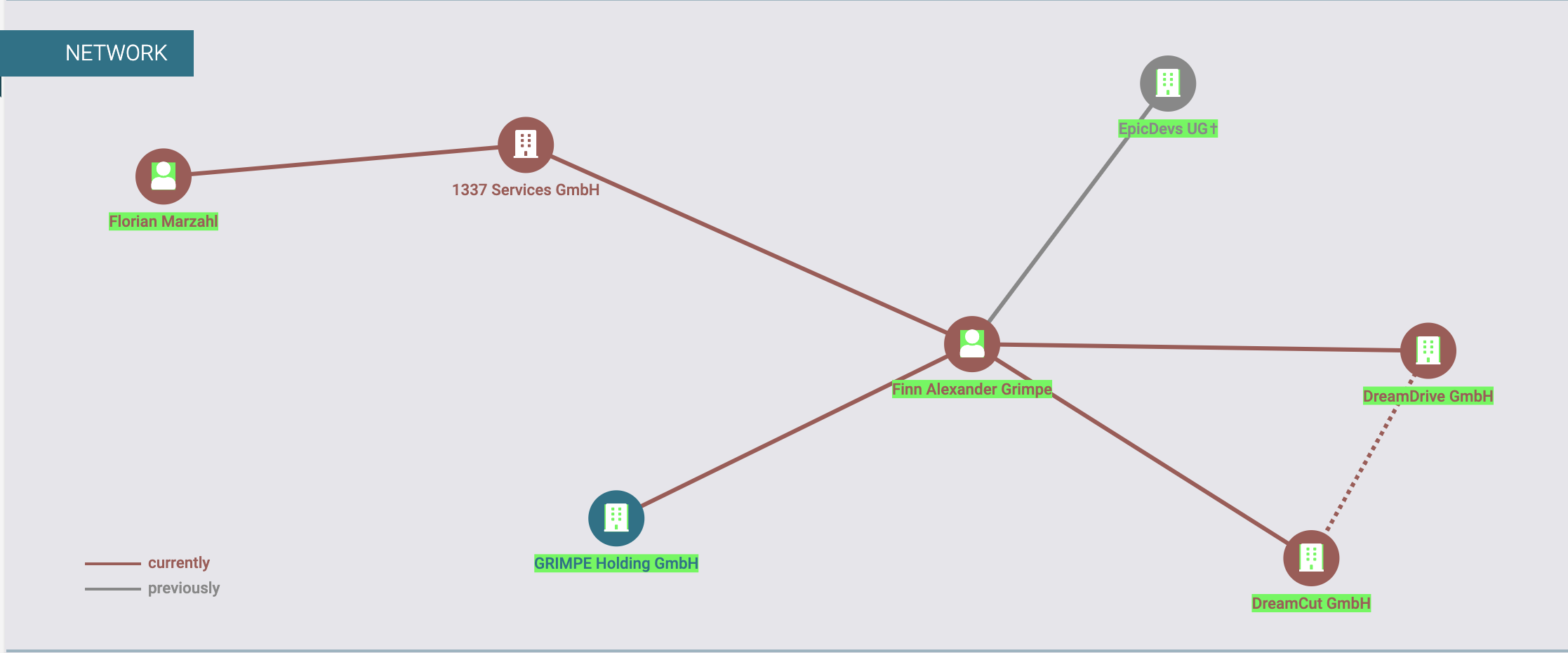

A review of corporations tied to Mr. Perkon as indexed by the business research service North Data finds he holds or held director positions in several U.K. subsidiaries of Synergy University, Russia’s largest private education provider. Synergy has more than 35,000 students, and sells T-shirts with patriotic slogans such as “Crimea is Ours,” and “The Russian Empire — Reloaded.”

The president of Synergy University is Vadim Lobov, a Kremlin insider whose headquarters on the outskirts of Moscow reportedly features a wall-sized portrait of Russian President Vladimir Putin in the pop-art style of Andy Warhol. For a number of years, Lobov and Perkon co-produced a cross-cultural event in the U.K. called Russian Film Week.

Synergy President Vadim Lobov and Filip Perkon, speaking at a press conference for Russian Film Week, a cross-cultural event in the U.K. co-produced by both men.

Mr. Lobov was one of 11 individuals reportedly hand-picked by the convicted Russian spy Marina Butina to attend the 2017 National Prayer Breakfast held in Washington D.C. just two weeks after President Trump’s first inauguration.

While Synergy University promotes itself as Russia’s largest private educational institution, hundreds of international students tell a different story. Online reviews from students paint a picture of unkept promises: Prospective students from Nigeria, Kenya, Ghana, and other nations paying thousands in advance fees for promised study visas to Russia, only to have their applications denied with no refunds offered.

“My experience with Synergy University has been nothing short of heartbreaking,” reads one such account. “When I first discovered the school, their representative was extremely responsive and eager to assist. He communicated frequently and made me believe I was in safe hands. However, after paying my hard-earned tuition fees, my visa was denied. It’s been over 9 months since that denial, and despite their promises, I have received no refund whatsoever. My messages are now ignored, and the same representative who once replied instantly no longer responds at all. Synergy University, how can an institution in Europe feel comfortable exploiting the hopes of Africans who trust you with their life savings? This is not just unethical — it’s predatory.”

This pattern repeats across reviews by multilingual students from Pakistan, Nepal, India, and various African nations — all describing the same scheme: Attractive online marketing, promises of easy visa approval, upfront payment requirements, and then silence after visa denials.

Reddit discussions in r/Moscow and r/AskARussian are filled with warnings. “It’s a scam, a diploma mill,” writes one user. “They literally sell exams. There was an investigation on Rossiya-1 television showing students paying to pass tests.”

The Nerdify website’s “About Us” page says the company was co-founded by Pokatilo and an American named Brian Mellor. The latter identity seems to have been fabricated, or at least there is no evidence that a person with this name ever worked at Nerdify.

Rather, it appears that the SMS assistance company co-founded by Messrs. Pokatilo and Perkon (Awesome Technologies) fizzled out shortly after its creation, and that Nerdify soon adopted the process of accepting assignment requests via text message and routing them to freelance writers.

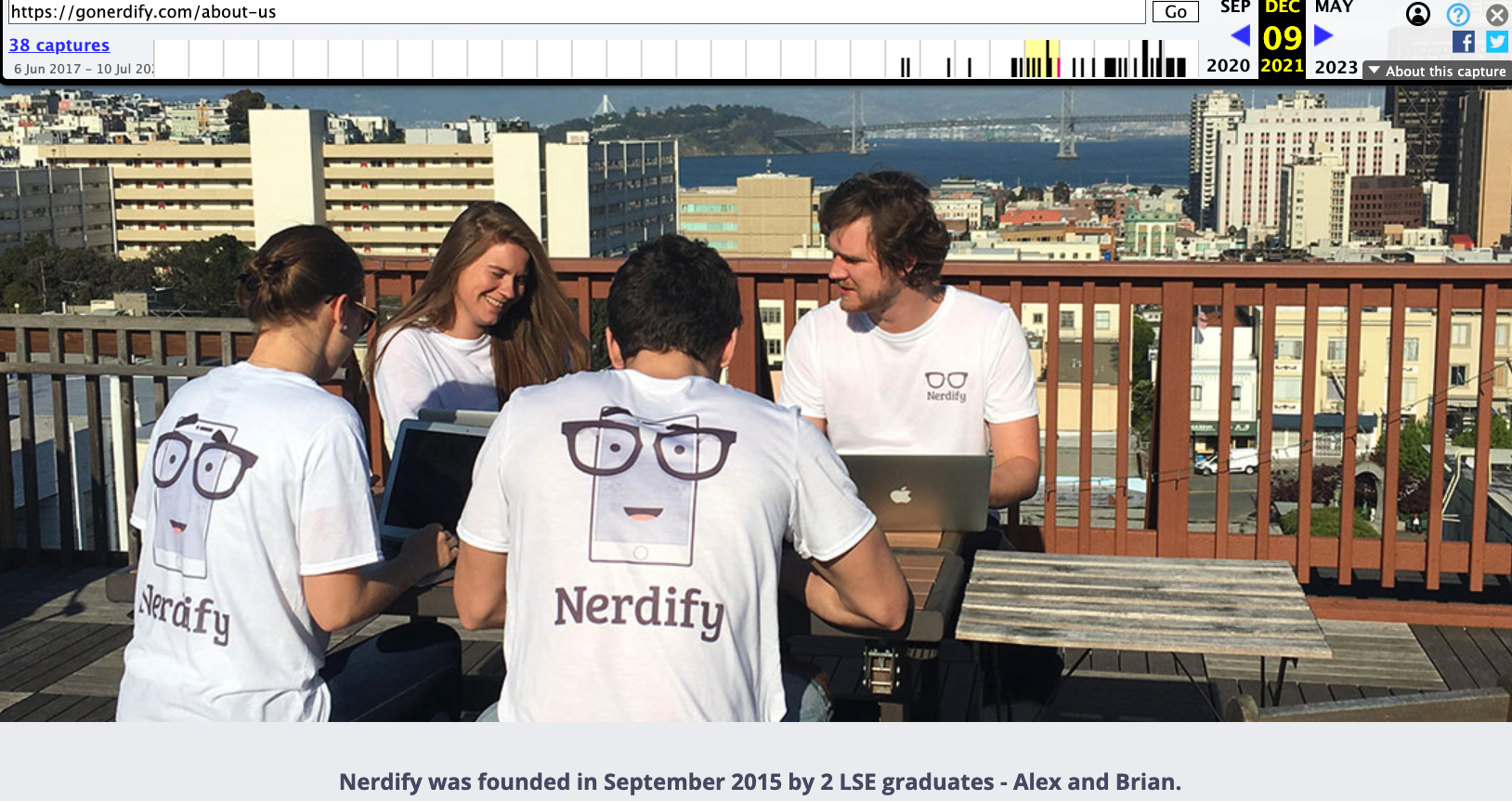

A closer look at an early “About Us” page for Nerdify in The Wayback Machine suggests that Mr. Perkon was the real co-founder of the company: The photo at the top of the page shows four people wearing Nerdify T-shirts seated around a table on a rooftop deck in San Francisco, and the man facing the camera is Perkon.

Filip Perkon, top right, is pictured wearing a Nerdify T-shirt in an archived copy of the company’s About Us page. Image: archive.org.

Where are they now? Pokatilo is currently running a startup called Litero.Ai, which appears to be an AI-based essay writing service. In July 2025, Mr. Pokatilo received pre-seed funding of $800,000 for Litero from an investment program backed by the venture capital firms AltaIR Capital, Yellow Rocks, Smart Partnership Capital, and I2BF Global Ventures.

Meanwhile, Filip Perkon is busy setting up toy rubber duck stores in Miami and in at least three locations in the United Kingdom. These “Duck World” shops market themselves as “the world’s largest duck store.”

This past week, Mr. Lobov was in India with Putin’s entourage on a charm tour with India’s Prime Minister Narendra Modi. Although Synergy is billed as an educational institution, a review of the company’s sprawling corporate footprint (via DNS) shows it also is assisting the Russian government in its war against Ukraine.

Synergy University President Vadim Lobov (right) pictured this week in India next to Natalia Popova, a Russian TV presenter known for her close ties to Putin’s family, particularly Putin’s daughter, who works with Popova at the education and culture-focused Innopraktika Foundation.

The website bpla.synergy[.]bot, for instance, says the company is involved in developing combat drones to aid Russian forces and to evade international sanctions on the supply and re-export of high-tech products.

A screenshot from the website of synergy[.]bot shows the company is actively engaged in building armed drones for the war in Ukraine.

KrebsOnSecurity would like to thank the anonymous researcher NatInfoSec for their assistance in this investigation.

Update, Dec. 8, 10:06 a.m. ET: Mr. Pokatilo responded to requests for comment after the publication of this story. Pokatilo said he has no relation to Synergy nor to Mr. Lobov, and that his work with Mr. Perkon ended with the dissolution of Awesome Technologies.

“I have had no involvement in any of his projects and business activities mentioned in the article and he has no involvement in Litero.ai,” Pokatilo said of Perkon.

Mr. Pokatilo said his new company Litero “does not provide contract cheating services and is built specifically to improve transparency and academic integrity in the age of universal use of AI by students.”

“I am Ukrainian,” he said in an email. “My close friends, colleagues, and some family members continue to live in Ukraine under the ongoing invasion. Any suggestion that I or my company may be connected in any way to Russia’s war efforts is deeply offensive on a personal level and harmful to the reputation of Litero.ai, a company where many team members are Ukrainian.”

Update, Dec. 11, 12:07 p.m. ET: Mr. Perkon responded to requests for comment after the publication of this story. Perkon said the photo of him in a Nerdify T-shirt (see screenshot above) was taken after a startup event in San Francisco, where he volunteered to act as a photo model to help friends with their project.

“I have no business or other relations to Nerdify or any other ventures in that space,” Mr. Perkon said in an email response. “As for Vadim Lobov, I worked for Venture Capital arm at Synergy until 2013 as well as his business school project in the UK, that didn’t get off the ground, so the company related to this was made dormant. Then Synergy kindly provided sponsorship for my Russian Film Week event that I created and ran until 2022 in the U.K., an event that became the biggest independent Russian film festival outside of Russia. Since the start of the Ukraine war in 2022 I closed the festival down.”

“I have had no business with Vadim Lobov since 2021 (the last film festival) and I don’t keep track of his endeavours,” Perkon continued. “As for Alexey Pokatilo, we are university friends. Our business relationship has ended after the concierge service Awesome Technologies didn’t work out, many years ago.”

Chances are, you have more personal information posted online than you think.

In 2024, the U.S. Federal Trade Commission (FTC) reported that 1.1 million identity theft complaints were filed, and that $12.5 billion was lost to identity theft and fraud overall, a 25% increase over the year prior.

What fuels all this theft and fraud? Easy access to personal information.

Here’s one way you can reduce your chances of identity theft: remove your personal information from the internet.

Scammers and thieves can get a hold of your personal information in several ways, such as information leaked in data breaches, phishing attacks that lure you into handing it over, malware that steals it from your devices, or by purchasing your information on dark web marketplaces, just to name a few.

However, scammers and thieves have other resources and connections to help them commit theft and fraud—data broker sites, places where personal information is posted online for practically anyone to see. This makes removing your info from these sites so important, from both an identity and privacy standpoint.

Data broker sites are massive repositories of personal information that also buy information from other data brokers. As a result, some data brokers have thousands of pieces of data on billions of individuals worldwide.

What kind of data could they have on you? A broker may know how much you paid for your home, your education level, where you’ve lived over the years, who you’ve lived with, your driving record, and possibly your political leanings. A broker could even know your favorite flavor of ice cream and your preferred over-the-counter allergy medicine thanks to information from loyalty cards. They may also have health-related information from fitness apps. The amount of personal information can run that broadly, and that deeply.

With information at this level of detail, it’s no wonder that data brokers rake in an estimated $200 billion worldwide every year.

Your personal information reaches the internet through six primary methods, most of which are initiated by activities you perform on a daily basis. Understanding these channels can help you make more informed choices about your digital footprint.

When you buy a home, register to vote, get married, or start a business, government agencies create public records that contain your personal details. These records, once stored in filing cabinets, are now digitized, accessible online, and searchable by anyone with an internet connection.

Every photo you post, location you tag, and profile detail you share contributes to your digital presence. Even with privacy settings enabled, social media platforms collect extensive data about your behavior, relationships, and preferences. You may not realize it, but every time you share details with your network, you are training algorithms that analyze and categorize your information.

You create accounts with retailers, healthcare providers, employers, and service companies, trusting them to protect your information. However, when hackers breach these systems, your personal information often ends up for sale on dark web marketplaces, where data brokers can purchase it. The Identity Theft Research Center Annual Data Breach Report revealed that 2024 saw the second-highest number of data compromises in the U.S. since the organization began recording incidents in 2005.

When you browse, shop, or use apps, your online behavior is recorded by tracking pixels, cookies, and software development kits. The data collected—such as your location, device usage, and interests—is packaged and sold to data brokers who combine it with other sources to build a profile of you.

Grocery store cards, coffee shop apps, and airline miles programs offer discounts in exchange for detailed purchasing information. Every transaction gets recorded, analyzed, and often shared with third-party data brokers, who then create detailed lifestyle profiles that are sold to marketing companies.

Data brokers act as the hubs that collect information from various sources to create comprehensive profiles that may include over 5,000 data points per person. Seemingly separate pieces of information become a detailed digital dossier that reveals intimate details about your life, relationships, health, and financial situation.

Legally, your aggregated information from data brokers is used by advertisers to create targeted ad campaigns. In addition, law enforcement, journalists, and employers may use data brokers because the time-consuming pre-work of assembling your data has largely been done.

Currently, the U.S. has no federal laws that regulate data brokers or require them to remove personal information if requested. Only a few states, such as Nevada, Vermont, and California, have legislation that protects consumers. In the European Union, the General Data Protection Regulation (GDPR) has stricter rules about what information can be collected and what can be done with it.

On the darker side, scammers and thieves use personal information for identity theft and other forms of fraud. With enough information, they can create a high-fidelity profile of their victims to open new accounts in their name. For this reason, cleaning up your personal information online makes a great deal of sense.

Understanding which data types pose the greatest threat can help you prioritize your removal efforts. Here are the high-risk personal details you should target first, ranked by their potential for harm.

When prioritizing your personal information removal efforts, focus on combinations of data rather than individual pieces. For example, your name alone poses minimal risk, but when combined with your address, phone number, and date of birth, it creates a comprehensive profile that criminals can exploit. Tools such as McAfee Personal Data Cleanup can help you identify and systematically remove these high-risk combinations from data broker sites.
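As a rough illustration of the "combinations over individual pieces" idea, the sketch below flags broker listings by which identifying fields appear alongside a name. The field names, the three risk labels, and the listing data structure are all assumptions made for this example; this is not how McAfee Personal Data Cleanup or any data broker actually scores exposure.

```python
# Illustrative sketch only: field names and the risk labels are assumptions.
IDENTIFYING_FIELDS = {"address", "phone", "date_of_birth"}


def exposure_level(listing_fields):
    """Classify a listing by which identifying fields appear alongside a name."""
    fields = set(listing_fields)
    if "name" not in fields:
        return "low"
    combined = fields & IDENTIFYING_FIELDS
    if combined == IDENTIFYING_FIELDS:
        return "high"    # name + address + phone + date of birth
    if combined:
        return "medium"  # name plus at least one identifying field
    return "low"         # name alone


def prioritize(listings):
    """Order sites (site -> exposed fields) so the riskiest combinations come first."""
    order = {"high": 0, "medium": 1, "low": 2}
    return sorted(listings, key=lambda site: order[exposure_level(listings[site])])
```

For instance, a hypothetical listing that exposes only a name would be ranked after one that exposes a name together with an address, phone number, and date of birth.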

This process takes time and persistence, but services such as McAfee Personal Data Cleanup can continuously monitor for new exposures and manage opt-out requests on your behalf. The key is to first understand the full scope of your online presence before beginning the removal process.

Let’s review some ways you can remove your personal information from data brokers and other sources on the internet.

Once you have found the sites that have your information, the next step is to request that it be removed. You can do this yourself or employ services such as McAfee’s Personal Data Cleanup, which can help manage the removal for you depending on your subscription. It also monitors those sites, so if your info gets posted again, you can request its removal again.

You can request to remove your name from Google search to keep your information from turning up in searches. You can also enable “Auto Delete” in your privacy settings to ensure your data is regularly deleted. Deleting your cookies from time to time or browsing in incognito mode also makes it harder for websites to track you. If Google denies your initial request, you can appeal using the same tool, providing more context, documentation, or legal grounds for removal. Google’s troubleshooter tool may explain why your request was denied, for example because of legitimate public interest or newsworthiness, and how to improve your appeal.

It’s important to know that the original content remains on the source website. You’ll still need to contact website owners directly to have your actual content removed. Additionally, the information may still appear in other search engines.

If you have old, inactive accounts that have become obsolete, such as Myspace or Tumblr, you may want to deactivate or delete them entirely. For social media platforms that you use regularly, such as Facebook and Instagram, consider adjusting your privacy settings to keep your personal information to the bare minimum.

If you’ve ever published articles, written blogs, or created any content online, it is a good time to consider taking them down if they no longer serve a purpose. If you were mentioned or tagged by other people, it is worth requesting them to take down posts with sensitive information.

Another way to tidy up your digital footprint is to delete phone apps you no longer use, as hackers are able to track personal information on these and sell it. As a rule, share as little information with apps as possible using your phone’s settings.

After sending your removal request, give the search engine or source website 7 to 10 business days to respond initially, then follow up weekly if needed. If a website owner doesn’t respond within 30 days or refuses your request, you have several escalation options:

For comprehensive guidance on website takedown procedures and your legal rights, visit the FTC’s privacy and security guidance for the most current information on consumer data protection. Direct website contact can be time-consuming, but it’s often effective for removing information from smaller sites that don’t appear on major data broker opt-out lists. Stay persistent, document everything, and remember that you have legal rights to protect your privacy online.
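The follow-up timeline described above (an initial wait of 7 to 10 business days, weekly follow-ups, and escalation after 30 days) can be sketched as a small date calculator. The function names and the returned dictionary are hypothetical, and the sketch assumes a Monday-to-Friday business week with no public holidays.

```python
from datetime import date, timedelta


def add_business_days(start, days):
    """Return the date `days` business days (Mon-Fri) after `start`."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday through Friday
            remaining -= 1
    return current


def follow_up_plan(sent_on, initial_wait_business_days=10, escalate_after_days=30):
    """Build a reminder schedule for one removal request."""
    first_check = add_business_days(sent_on, initial_wait_business_days)
    escalate_on = sent_on + timedelta(days=escalate_after_days)
    follow_ups = []
    next_follow_up = first_check + timedelta(days=7)
    while next_follow_up < escalate_on:  # weekly reminders until escalation
        follow_ups.append(next_follow_up)
        next_follow_up += timedelta(days=7)
    return {
        "first_check": first_check,
        "follow_ups": follow_ups,
        "escalate_on": escalate_on,
    }
```

Calling `follow_up_plan(date(2025, 1, 6))` yields a first check date, a list of weekly follow-up dates, and the date on which to consider escalating. Keeping these dates next to a copy of each request also helps with the "document everything" advice.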

After you’ve cleaned up your data from websites and social platforms, your web browsers may still save personal information, such as your browsing history, cookies, autofill data, saved passwords, and even payment methods. Clearing this information and adjusting your privacy settings helps prevent tracking, reduces targeted ads, and limits the amount of personal data websites can collect about you.

When your home address is publicly available, it can expose you to risks like identity theft, stalking, or targeted scams. Taking steps to remove or mask your address across data broker sites, public records, and even old social media profiles helps protect your privacy, reduce unwanted contact, and keep your personal life more secure.

The cost to remove your personal information from the internet varies, depending on whether you do it yourself or use a professional service. Read the guide below to help you make an informed decision:

Removing your information on your own primarily requires time investment. Expect to spend 20 to 40 hours looking for your information online and submitting removal requests. In terms of financial costs, most data brokers may not charge for opting out; however, other expenses could include certified mail fees for formal removal requests, which range from $3 to $8 per letter, and possibly notarization fees for legal documents. In total, this effort can be substantial when dealing with dozens of sites.

Depending on which paid removal and monitoring service you employ, basic plans typically range from $8 to $25 monthly, while annual plans, which often provide better value, range from $100 to $600. Premium services that monitor hundreds of data broker sites and provide ongoing removal can cost $1,200-$2,400 annually.
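To compare the two routes, here is a minimal back-of-the-envelope sketch using only the ranges quoted above. The `hourly_value` parameter (what an hour of your time is worth to you) and the number of certified letters are assumptions you supply; notarization fees are left out because no figure is given for them.

```python
def diy_cost_range(letters, hourly_value=0.0):
    """Low/high DIY estimate: 20-40 hours plus $3-$8 per certified letter."""
    low = 20 * hourly_value + 3 * letters
    high = 40 * hourly_value + 8 * letters
    return low, high


def monthly_plan_yearly_range():
    """Basic monthly plans ($8-$25 per month) expressed as a yearly cost."""
    return 8 * 12, 25 * 12


def annual_plan_range():
    """Annual plans as quoted: $100-$600 per year."""
    return 100, 600


# Example: 12 certified letters, valuing your time at a hypothetical $20/hour.
print("DIY:", diy_cost_range(12, hourly_value=20))
print("Monthly plan, per year:", monthly_plan_yearly_range())
print("Annual plan:", annual_plan_range())
```

With the time valued at zero, the do-it-yourself route costs only postage; once any value is placed on those 20 to 40 hours, the comparison shifts quickly, which is the trade-off this section describes.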

The difference in pricing is driven by several factors. This includes the number of data broker sites to be monitored, which could cover more than 200 sites, and the scope of removal requests, which may include basic personal information or comprehensive family protection. The monitoring frequency and additional features, such as dark web monitoring, credit protection, identity restoration support, and insurance coverage, typically command higher prices.

The upfront cost may seem significant, but continuous monitoring provides essential value. A McAfee survey revealed that 95% of consumers’ personal information ends up on data broker sites without their consent. Even after a successful removal, your information may reappear on data broker sites if no one is monitoring them. This makes continuous protection far more cost-effective than repeated one-time cleanups.

Services such as McAfee Personal Data Cleanup can prove invaluable, as they handle the initial removal process as well as ongoing monitoring to catch when your information resurfaces, saving you time and effort while offering long-term privacy protection.

Aside from the services above, comprehensive protection software can help safeguard your privacy and minimize your exposure to cybercrime with offerings such as:

So while it may seem like all this rampant collecting and selling of personal information is out of your hands, there’s plenty you can do to take control. With the steps outlined above and strong online protection software in place, you can keep your personal information more private and secure.

Unlike legitimate data broker sites, the dark web operates outside legal boundaries where takedown requests don’t apply. Rather than trying to remove information that’s already circulating, you can take immediate steps to reduce the potential harm and focus on preventing future exposure. A more effective approach is to treat data breaches as ongoing security issues rather than one-time events.

Both the FTC and Cybersecurity and Infrastructure Security Agency have released guidelines on proactive controls and continuous monitoring. Here are the key steps of those recommendations:

As you go about removing your information from the internet, it is important to set realistic expectations. Several factors may limit how completely you can remove personal data from internet sources:

While some states like California have stronger consumer privacy rights, most data removal still depends on voluntary compliance from companies.

Removing your personal information from the internet takes effort, but it’s one of the most effective ways to protect yourself from identity theft and privacy violations. The steps outlined above provide you with a clear roadmap to systematically reduce your online exposure, from opting out of data brokers to tightening your social media privacy settings.

This isn’t a one-time task but an ongoing process that requires regular attention, as new data appears online constantly. Rather than attempting to completely erase your digital presence, focus on reducing your exposure to the most harmful uses of your personal information. Services like McAfee Personal Data Cleanup can help automate the most time-consuming parts of this process, monitoring high-risk data broker sites and managing removal requests for you.

The post How to Remove Your Personal Information From the Internet appeared first on McAfee Blog.

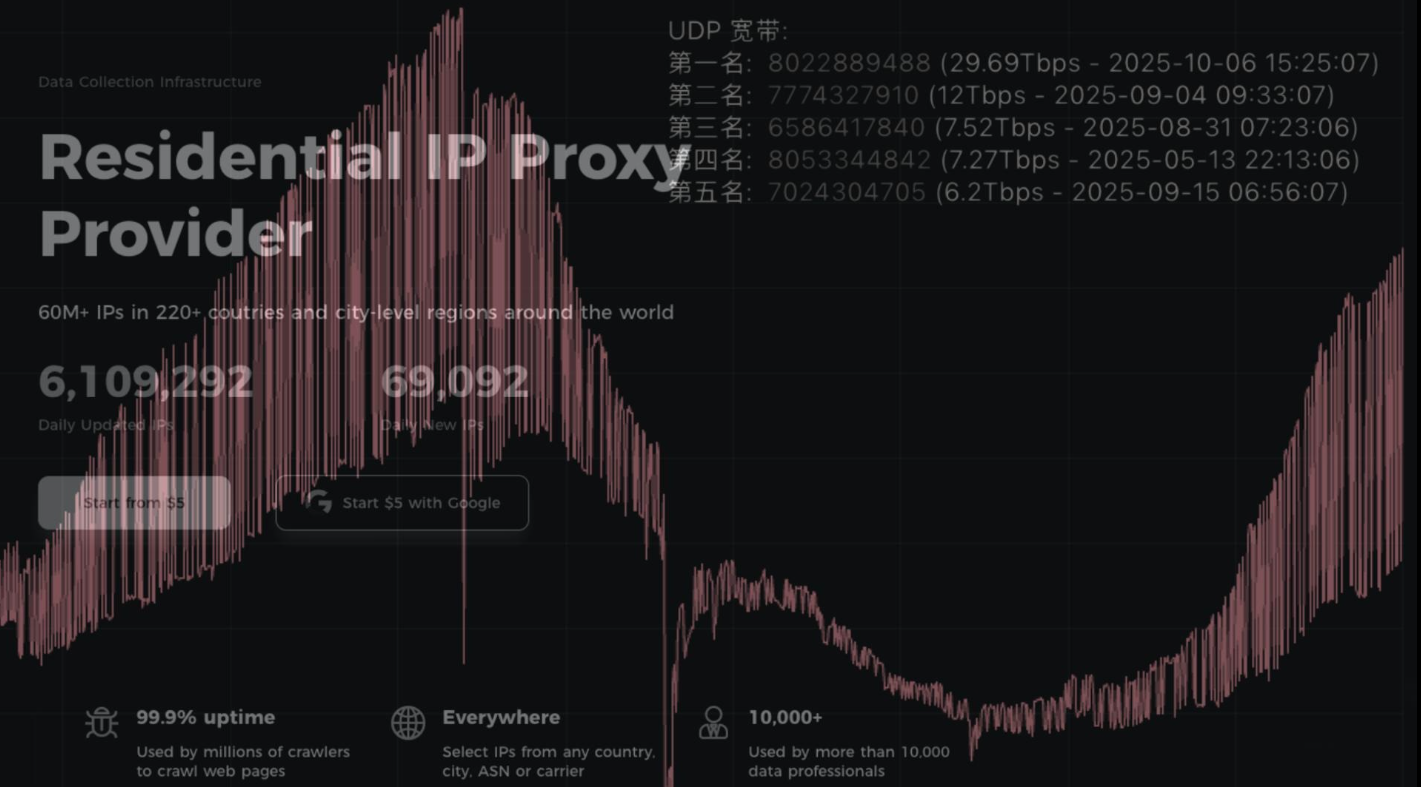

Aisuru, the botnet responsible for a series of record-smashing distributed denial-of-service (DDoS) attacks this year, recently was overhauled to support a more low-key, lucrative and sustainable business: Renting hundreds of thousands of infected Internet of Things (IoT) devices to proxy services that help cybercriminals anonymize their traffic. Experts say a glut of proxies from Aisuru and other sources is fueling large-scale data harvesting efforts tied to various artificial intelligence (AI) projects, helping content scrapers evade detection by routing their traffic through residential connections that appear to be regular Internet users.

First identified in August 2024, Aisuru has spread to at least 700,000 IoT systems, such as poorly secured Internet routers and security cameras. Aisuru’s overlords have used their massive botnet to clobber targets with headline-grabbing DDoS attacks, flooding targeted hosts with blasts of junk requests from all infected systems simultaneously.

In June, Aisuru hit KrebsOnSecurity.com with a DDoS clocking at 6.3 terabits per second — the biggest attack that Google had ever mitigated at the time. In the weeks and months that followed, Aisuru’s operators demonstrated DDoS capabilities of nearly 30 terabits of data per second — well beyond the attack mitigation capabilities of most Internet destinations.

These digital sieges have been particularly disruptive this year for U.S.-based Internet service providers (ISPs), in part because Aisuru recently succeeded in taking over a large number of IoT devices in the United States. And when Aisuru launches attacks, the volume of outgoing traffic from infected systems on these ISPs is often so high that it can disrupt or degrade Internet service for adjacent (non-botted) customers of the ISPs.

“Multiple broadband access network operators have experienced significant operational impact due to outbound DDoS attacks in excess of 1.5Tb/sec launched from Aisuru botnet nodes residing on end-customer premises,” wrote Roland Dobbins, principal engineer at Netscout, in a recent executive summary on Aisuru. “Outbound/crossbound attack traffic exceeding 1Tb/sec from compromised customer premise equipment (CPE) devices has caused significant disruption to wireline and wireless broadband access networks. High-throughput attacks have caused chassis-based router line card failures.”

The incessant attacks from Aisuru have caught the attention of federal authorities in the United States and Europe (many of Aisuru’s victims are customers of ISPs and hosting providers based in Europe). Quite recently, some of the world’s largest ISPs have started informally sharing block lists identifying the rapidly shifting locations of the servers that the attackers use to control the activities of the botnet.

Experts say the Aisuru botmasters recently updated their malware so that compromised devices can more easily be rented to so-called “residential proxy” providers. These proxy services allow paying customers to route their Internet communications through someone else’s device, providing anonymity and the ability to appear as a regular Internet user in almost any major city worldwide.

From a website’s perspective, the IP traffic of a residential proxy network user appears to originate from the rented residential IP address, not from the proxy service customer. Proxy services can be used in a legitimate manner for several business purposes — such as price comparisons or sales intelligence. But they are massively abused for hiding cybercrime activity (think advertising fraud, credential stuffing) because they can make it difficult to trace malicious traffic to its original source.

And as we’ll see in a moment, this entire shadowy industry appears to be shifting its focus toward enabling aggressive content scraping activity that continuously feeds raw data into large language models (LLMs) built to support various AI projects.

Riley Kilmer is co-founder of spur.us, a service that tracks proxy networks. Kilmer said all of the top proxy services have grown substantially over the past six months.

“I just checked, and in the last 90 days we’ve seen 250 million unique residential proxy IPs,” Kilmer said. “That is insane. That is so high of a number, it’s unheard of. These proxies are absolutely everywhere now.”

Today, Spur says it is tracking an unprecedented spike in available proxies across all providers, including:

LUMINATI_PROXY 11,856,421

NETNUT_PROXY 10,982,458

ABCPROXY_PROXY 9,294,419

OXYLABS_PROXY 6,754,790

IPIDEA_PROXY 3,209,313

EARNFM_PROXY 2,659,913

NODEMAVEN_PROXY 2,627,851

INFATICA_PROXY 2,335,194

IPROYAL_PROXY 2,032,027

YILU_PROXY 1,549,155

Reached for comment about the apparent rapid growth in their proxy network, Oxylabs (#4 on Spur’s list) said while their proxy pool did grow recently, it did so at nowhere near the rate cited by Spur.

“We don’t systematically track other providers’ figures, and we’re not aware of any instances of 10× or 100× growth, especially when it comes to a few bigger companies that are legitimate businesses,” the company said in a written statement.

Bright Data was formerly known as Luminati Networks, the name that is currently at the top of Spur’s list of the biggest residential proxy networks. Bright Data likewise told KrebsOnSecurity that Spur’s current estimates of its proxy network are dramatically overstated and inaccurate.

“We did not actively initiate nor do we see any 10x or 100x expansion of our network, which leads me to believe that someone might be presenting these IPs as Bright Data’s in some way,” said Rony Shalit, Bright Data’s chief compliance and ethics officer. “In many cases in the past, due to us being the leading data collection proxy provider, IPs were falsely tagged as being part of our network, or while being used by other proxy providers for malicious activity.”

“Our network is only sourced from verified IP providers and a robust opt-in only residential peers, which we work hard and in complete transparency to obtain,” Shalit continued. “Every DC, ISP or SDK partner is reviewed and approved, and every residential peer must actively opt in to be part of our network.”

Even Spur acknowledges that Luminati and Oxylabs are unlike most other proxy services on their top proxy providers list, in that these providers actually adhere to “know-your-customer” policies, such as requiring video calls with all customers, and strictly blocking customers from reselling access.

Benjamin Brundage is founder of Synthient, a startup that helps companies detect proxy networks. Brundage said if there is increasing confusion around which proxy networks are the most worrisome, it’s because nearly all of these lesser-known proxy services have evolved into highly incestuous bandwidth resellers. What’s more, he said, some proxy providers do not appreciate being tracked and have been known to take aggressive steps to confuse systems that scan the Internet for residential proxy nodes.

Brundage said most proxy services today have created their own software development kit or SDK that other app developers can bundle with their code to earn revenue. These SDKs quietly modify the user’s device so that some portion of their bandwidth can be used to forward traffic from proxy service customers.

“Proxy providers have pools of constantly churning IP addresses,” he said. “These IP addresses are sourced through various means, such as bandwidth-sharing apps, botnets, Android SDKs, and more. These providers will often either directly approach resellers or offer a reseller program that allows users to resell bandwidth through their platform.”

Many SDK providers say they require full consent before allowing their software to be installed on end-user devices. Still, those opt-in agreements and consent checkboxes may be little more than a formality for cybercriminals like the Aisuru botmasters, who can earn a commission each time one of their infected devices is forced to install some SDK that enables one or more of these proxy services.

Depending on its structure, a single provider may operate hundreds of different proxy pools at a time — all maintained through other means, Brundage said.

“Often, you’ll see resellers maintaining their own proxy pool in addition to an upstream provider,” he said. “It allows them to market a proxy pool to high-value clients and offer an unlimited bandwidth plan for cheap [to] reduce their own costs.”

Some proxy providers appear to be directly in league with botmasters. Brundage identified one proxy seller that was aggressively advertising cheap and plentiful bandwidth to content scraping companies. After scanning that provider’s pool of available proxies, Brundage said he found a one-to-one match with IP addresses he’d previously mapped to the Aisuru botnet.

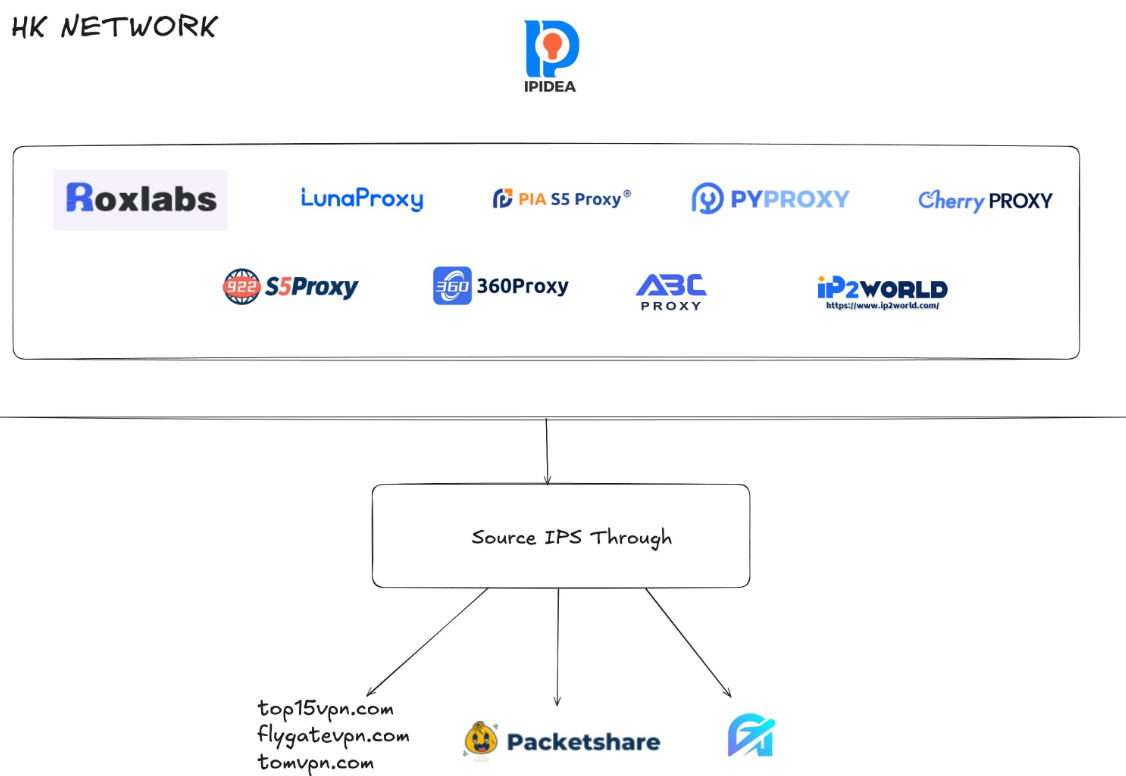

Brundage says that by almost any measurement, the world’s largest residential proxy service is IPidea, a China-based proxy network. IPidea is #5 on Spur’s Top 10, and Brundage said its brands include ABCProxy (#3), Roxlabs, LunaProxy, PIA S5 Proxy, PyProxy, 922Proxy, 360Proxy, IP2World, and Cherry Proxy. Spur’s Kilmer said they also track Yilu Proxy (#10) as IPidea.

Brundage said all of these providers operate under a corporate umbrella known on the cybercrime forums as “HK Network.”
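As a rough check on the claim that IPidea is the largest operator, the Spur figures listed earlier can be regrouped by the attributions Brundage and Kilmer describe. The sketch below copies the counts from Spur's list; treating the three tags as a single operator is an assumption drawn from those statements, and because the same IP address may appear under more than one tag, the combined figure should be read as an upper bound rather than a measured total.

```python
# Counts copied from Spur's list of proxy tags earlier in this story.
spur_counts = {
    "LUMINATI_PROXY": 11_856_421,
    "NETNUT_PROXY": 10_982_458,
    "ABCPROXY_PROXY": 9_294_419,
    "OXYLABS_PROXY": 6_754_790,
    "IPIDEA_PROXY": 3_209_313,
    "EARNFM_PROXY": 2_659_913,
    "NODEMAVEN_PROXY": 2_627_851,
    "INFATICA_PROXY": 2_335_194,
    "IPROYAL_PROXY": 2_032_027,
    "YILU_PROXY": 1_549_155,
}

# Tags the story's sources attribute to IPidea (assumption: one operator).
ipidea_tags = {"IPIDEA_PROXY", "ABCPROXY_PROXY", "YILU_PROXY"}

ipidea_total = sum(spur_counts[tag] for tag in ipidea_tags)

# Largest single tag that is not attributed to IPidea.
largest_other_tag, largest_other = max(
    ((tag, count) for tag, count in spur_counts.items() if tag not in ipidea_tags),
    key=lambda item: item[1],
)

print(f"IPidea-linked tags combined: {ipidea_total:,}")
print(f"Largest remaining tag: {largest_other_tag} ({largest_other:,})")
```

Under that grouping, the IPidea-linked tags together exceed the largest remaining entry on the list, which is consistent with Brundage's assessment.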

“The way it works is there’s this whole reseller ecosystem, where IPidea will be incredibly aggressive and approach all these proxy providers with the offer, ‘Hey, if you guys buy bandwidth from us, we’ll give you these amazing reseller prices,'” Brundage explained. “But they’re also very aggressive in recruiting resellers for their apps.”

A graphic depicting the relationship between proxy providers that Synthient found are white labeling IPidea proxies. Image: Synthient.com.

Those apps include a range of low-cost and “free” virtual private networking (VPN) services that indeed allow users to enjoy a free VPN, but which also turn the user’s device into a traffic relay that can be rented to cybercriminals, or else parceled out to countless other proxy networks.

“They have all this bandwidth to offload,” Brundage said of IPidea and its sister networks. “And they can do it through their own platforms, or they go get resellers to do it for them by advertising on sketchy hacker forums to reach more people.”

One of IPidea’s core brands is 922S5Proxy, which is a not-so-subtle nod to the 911S5Proxy service that was hugely popular between 2015 and 2022. In July 2022, KrebsOnSecurity published a deep dive into 911S5Proxy’s origins and apparent owners in China. Less than a week later, 911S5Proxy announced it was closing down after the company’s servers were massively hacked.

That 2022 story named Yunhe Wang from Beijing as the apparent owner and/or manager of the 911S5 proxy service. In May 2024, the U.S. Department of Justice arrested Mr. Wang, alleging that his network was used to steal billions of dollars from financial institutions, credit card issuers, and federal lending programs. At the same time, the U.S. Treasury Department announced sanctions against Wang and two other Chinese nationals for operating 911S5Proxy.

The website for 922Proxy.

In recent months, multiple experts who track botnet and proxy activity have shared that a great deal of content scraping which ultimately benefits AI companies is now leveraging these proxy networks to further obfuscate their aggressive data-slurping activity. That’s because by routing it through residential IP addresses, content scraping firms can make their traffic far trickier to filter out.

“It’s really difficult to block, because there’s a risk of blocking real people,” Spur’s Kilmer said of the LLM scraping activity that is fed through individual residential IP addresses, which are often shared by multiple customers at once.

Kilmer says the AI industry has brought a veneer of legitimacy to the residential proxy business, which has heretofore mostly been associated with sketchy affiliate money-making programs, automated abuse, and unwanted Internet traffic.

“Web crawling and scraping has always been a thing, but AI made it like a commodity, data that had to be collected,” Kilmer said. “Everybody wanted to monetize their own data pots, and how they monetize that is different across the board.”

Kilmer said many LLM-related scrapers rely on residential proxies in cases where the content provider has restricted access to their platform in some way, such as forcing interaction through an app, or keeping all content behind a login page with multi-factor authentication.

“Where the cost of data is out of reach — there is some exclusivity or reason they can’t access the data — they’ll turn to residential proxies so they look like a real person accessing that data,” Kilmer said of the content scraping efforts.

Aggressive AI crawlers increasingly are overloading community-maintained infrastructure, causing what amounts to persistent DDoS attacks on vital public resources. A report earlier this year from LibreNews found some open-source projects now see as much as 97 percent of their traffic originating from AI company bots, dramatically increasing bandwidth costs and service instability, and burdening already stretched-thin maintainers.

Cloudflare is now experimenting with tools that will allow content creators to charge a fee to AI crawlers to scrape their websites. The company’s “pay-per-crawl” feature is currently in a private beta, and it lets publishers set their own prices that bots must pay before scraping content.

On October 22, the social media and news network Reddit sued Oxylabs (PDF) and several other proxy providers, alleging that their systems enabled the mass-scraping of Reddit user content even though Reddit had taken steps to block such activity.

“Recognizing that Reddit denies scrapers like them access to its site, Defendants scrape the data from Google’s search results instead,” the lawsuit alleges. “They do so by masking their identities, hiding their locations, and disguising their web scrapers as regular people (among other techniques) to circumvent or bypass the security restrictions meant to stop them.”

Denas Grybauskas, chief governance and strategy officer at Oxylabs, said the company was shocked and disappointed by the lawsuit.

“Reddit has made no attempt to speak with us directly or communicate any potential concerns,” Grybauskas said in a written statement. “Oxylabs has always been and will continue to be a pioneer and an industry leader in public data collection, and it will not hesitate to defend itself against these allegations. Oxylabs’ position is that no company should claim ownership of public data that does not belong to them. It is possible that it is just an attempt to sell the same public data at an inflated price.”

As big and powerful as Aisuru may be, it is hardly the only botnet that is contributing to the overall broad availability of residential proxies. For example, on June 5 the FBI’s Internet Crime Complaint Center warned that an IoT malware threat dubbed BADBOX 2.0 had compromised millions of smart-TV boxes, digital projectors, vehicle infotainment units, picture frames, and other IoT devices.

In July, Google filed a lawsuit in New York federal court against the Badbox botnet’s alleged perpetrators. Google said the Badbox 2.0 botnet “compromised more than 10 million uncertified devices running Android’s open-source software, which lacks Google’s security protections. Cybercriminals infected these devices with pre-installed malware and exploited them to conduct large-scale ad fraud and other digital crimes.”

Brundage said the Aisuru botmasters have their own SDK, and for some reason part of its code tells many newly-infected systems to query the domain name fuckbriankrebs[.]com. This may be little more than an elaborate “screw you” to this site’s author: One of the botnet’s alleged partners goes by the handle “Forky,” and was identified in June by KrebsOnSecurity as a young man from Sao Paulo, Brazil.

Brundage noted that only systems infected with Aisuru’s Android SDK will be forced to resolve the domain. Initially, there was some discussion about whether the domain might have some utility as a “kill switch” capable of disrupting the botnet’s operations, although Brundage and others interviewed for this story say that is unlikely.

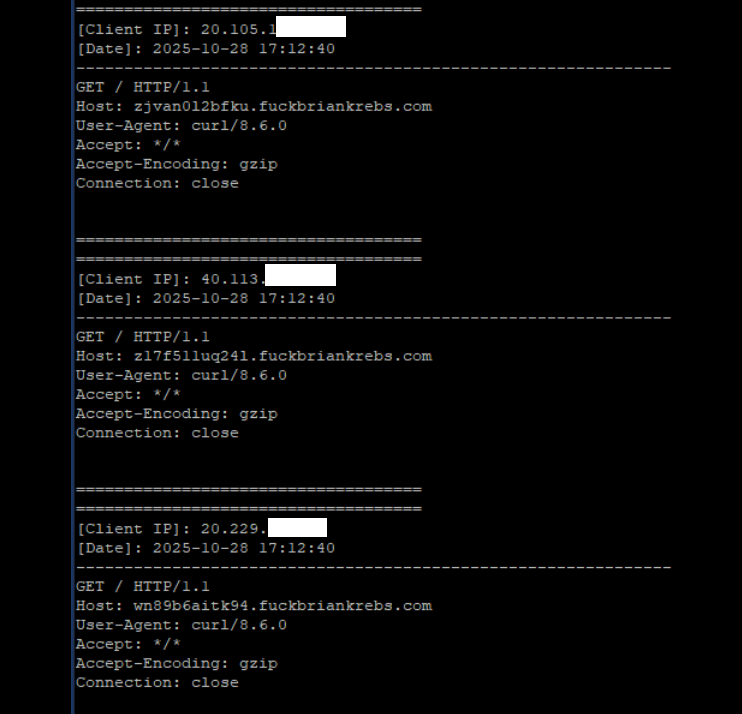

A tiny sample of the traffic after a DNS server was enabled on the newly registered domain fuckbriankrebs[.]com. Each unique IP address requested its own unique subdomain. Image: Seralys.

For one thing, they said, if the domain was somehow critical to the operation of the botnet, why was it still unregistered and actively for sale? Why indeed, we asked. Happily, the domain name was deftly snatched up last week by Philippe Caturegli, “chief hacking officer” for the security intelligence company Seralys.

Caturegli enabled a passive DNS server on that domain and within a few hours received more than 700,000 requests for unique subdomains on fuckbriankrebs[.]com.

But even with that visibility into Aisuru, it is difficult to use this domain check-in feature to measure its true size, Brundage said. After all, he said, the systems that are phoning home to the domain are only a small portion of the overall botnet.

“The bots are hardcoded to just spam lookups on the subdomains,” he said. “So anytime an infection occurs or it runs in the background, it will do one of those DNS queries.”
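The kind of tally described here can be sketched in a few lines. This is an illustration under stated assumptions, not Seralys's tooling: the log format (one `source_ip queried_name` pair per line), the `tally_checkins` function, the sample entries, and the `example.com` placeholder domain are all hypothetical.

```python
def tally_checkins(log_lines, domain):
    """Count unique subdomains and unique source IPs seen under `domain`."""
    suffix = "." + domain.lower().rstrip(".")
    subdomains, source_ips = set(), set()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines
        source_ip, name = parts
        name = name.lower().rstrip(".")  # normalize case and trailing dot
        if not name.endswith(suffix):
            continue  # query for an unrelated zone
        subdomains.add(name[: -len(suffix)])
        source_ips.add(source_ip)
    return len(subdomains), len(source_ips)


# Hypothetical sample entries; addresses come from documentation ranges.
sample = [
    "203.0.113.5 a1b2c3.example.com.",
    "203.0.113.5 a1b2c3.example.com.",  # repeated lookup from the same device
    "198.51.100.9 d4e5f6.example.com",
    "192.0.2.77 www.example.org",  # unrelated zone, ignored
]

unique_subdomains, unique_ips = tally_checkins(sample, "example.com")
print(unique_subdomains, unique_ips)
```

Deduplicating matters because, as Brundage notes, bots repeat these lookups; and since only part of the botnet checks in at all, even a clean count of unique names or addresses would understate Aisuru's true size.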

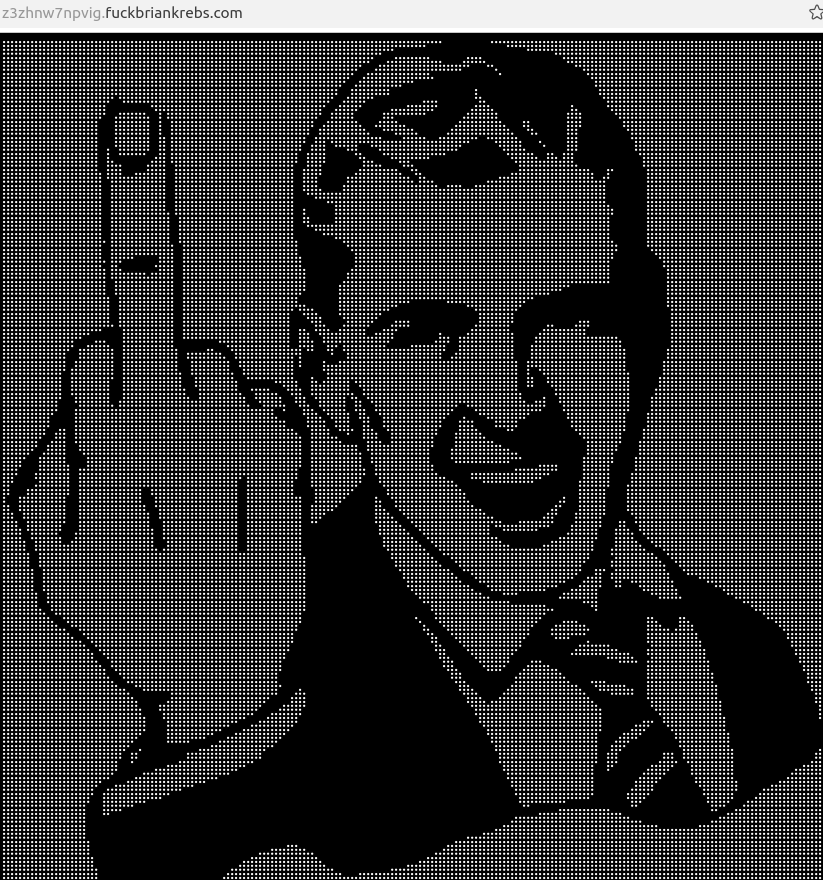

Caturegli briefly configured all subdomains on fuckbriankrebs[.]com to display this ASCII art image to visiting systems today.

The domain fuckbriankrebs[.]com has a storied history. On its initial launch in 2009, it was used to spread malicious software by the Cutwail spam botnet. In 2011, the domain was involved in a notable DDoS against this website from a botnet powered by Russkill (a.k.a. “Dirt Jumper”).

Domaintools.com finds that in 2015, fuckbriankrebs[.]com was registered to an email address attributed to David “Abdilo” Crees, a 27-year-old Australian man sentenced in May 2025 to time served for cybercrime convictions related to the Lizard Squad hacking group.

Update, Nov. 1, 2025, 10:25 a.m. ET: An earlier version of this story erroneously cited Spur’s proxy numbers from earlier this year; Spur said those numbers conflated residential proxies — which are rotating and attached to real end-user devices — with “ISP proxies” located at AT&T. ISP proxies, Spur said, involve tricking an ISP into routing a large number of IP addresses that are resold as far more static datacenter proxies.

U.S. prosecutors last week levied criminal hacking charges against 19-year-old U.K. national Thalha Jubair for allegedly being a core member of Scattered Spider, a prolific cybercrime group blamed for extorting at least $115 million in ransom payments from victims. The charges came as Jubair and an alleged co-conspirator appeared in a London court to face accusations of hacking into and extorting several large U.K. retailers, the London transit system, and healthcare providers in the United States.

At a court hearing last week, U.K. prosecutors laid out a litany of charges against Jubair and 18-year-old Owen Flowers, accusing the teens of involvement in an August 2024 cyberattack that crippled Transport for London, the entity responsible for the public transport network in the Greater London area.

A court artist sketch of Owen Flowers (left) and Thalha Jubair appearing at Westminster Magistrates’ Court last week. Credit: Elizabeth Cook, PA Wire.

On July 10, 2025, KrebsOnSecurity reported that Flowers and Jubair had been arrested in the United Kingdom in connection with recent Scattered Spider ransom attacks against the retailers Marks & Spencer and Harrods, and the British food retailer Co-op Group.

That story cited sources close to the investigation saying Flowers was the Scattered Spider member who anonymously gave interviews to the media in the days after the group’s September 2023 ransomware attacks disrupted operations at Las Vegas casinos operated by MGM Resorts and Caesars Entertainment.

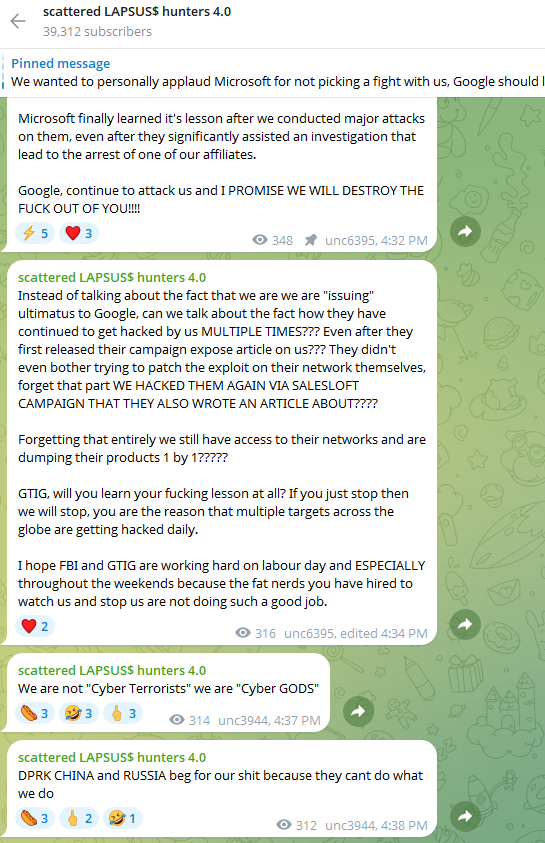

The story also noted that Jubair’s alleged handles on cybercrime-focused Telegram channels had far lengthier rap sheets involving some of the more consequential and headline-grabbing data breaches over the past four years. What follows is an account of cybercrime activities that prosecutors have attributed to Jubair’s alleged hacker handles, as told by those accounts in posts to public Telegram channels that are closely monitored by multiple cyber intelligence firms.

Jubair is alleged to have been a core member of the LAPSUS$ cybercrime group that broke into dozens of technology companies beginning in late 2021, stealing source code and other internal data from tech giants including Microsoft, Nvidia, Okta, Rockstar Games, Samsung, T-Mobile, and Uber.

That is, according to the former leader of the now-defunct LAPSUS$. In April 2022, KrebsOnSecurity published internal chat records taken from a server that LAPSUS$ used, and those chats indicate Jubair was working with the group using the nicknames Amtrak and Asyntax. In the middle of the gang’s cybercrime spree, Asyntax told the LAPSUS$ leader not to share T-Mobile’s logo in images sent to the group because he’d been previously busted for SIM-swapping and his parents would suspect he was back at it again.

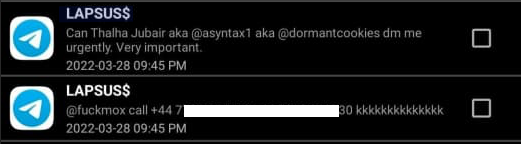

The leader of LAPSUS$ responded by gleefully posting Asyntax’s real name, phone number, and other hacker handles into a public chat room on Telegram:

In March 2022, the leader of the LAPSUS$ data extortion group exposed Thalha Jubair’s name and hacker handles in a public chat room on Telegram.

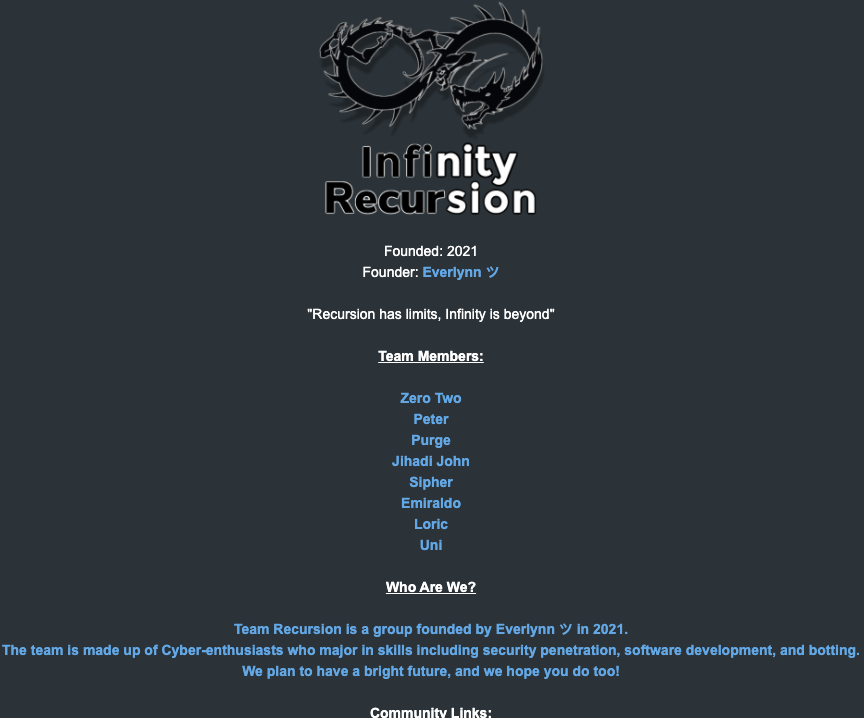

That story about the leaked LAPSUS$ chats also connected Amtrak/Asyntax to several previous hacker identities, including “Everlynn,” who in April 2021 began offering a cybercriminal service that sold fraudulent “emergency data requests” targeting the major social media and email providers.

In these so-called “fake EDR” schemes, the hackers compromise email accounts tied to police departments and government agencies, and then send unauthorized demands for subscriber data (e.g. username, IP/email address), while claiming the information being requested can’t wait for a court order because it relates to an urgent matter of life and death.

The roster of the now-defunct “Infinity Recursion” hacking team, which sold fake EDRs between 2021 and 2022. The founder “Everlynn” has been tied to Jubair. The member listed as “Peter” became the leader of LAPSUS$ who would later post Jubair’s name, phone number and hacker handles into LAPSUS$’s chat channel.

Prosecutors in New Jersey last week alleged Jubair was part of a threat group variously known as Scattered Spider, 0ktapus, and UNC3944, and that he used the nicknames EarthtoStar, Brad, Austin, and Austistic.

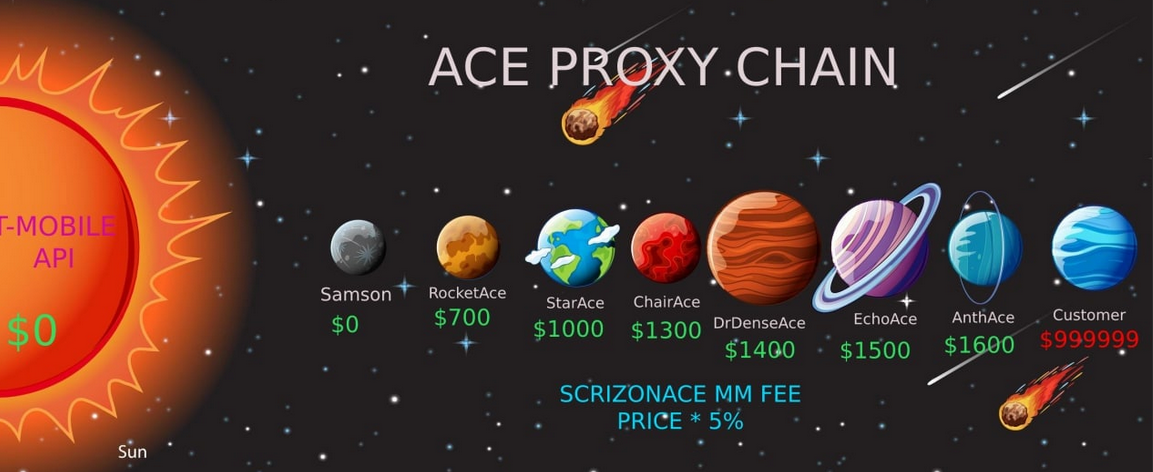

Beginning in 2022, EarthtoStar co-ran a bustling Telegram channel called Star Chat, which was home to a prolific SIM-swapping group that relentlessly used voice- and SMS-based phishing attacks to steal credentials from employees at the major wireless providers in the U.S. and U.K.

Jubair allegedly used the handle “Earth2Star” as a core member of a prolific SIM-swapping group operating in 2022. This ad produced by the group lists various prices for SIM swaps.

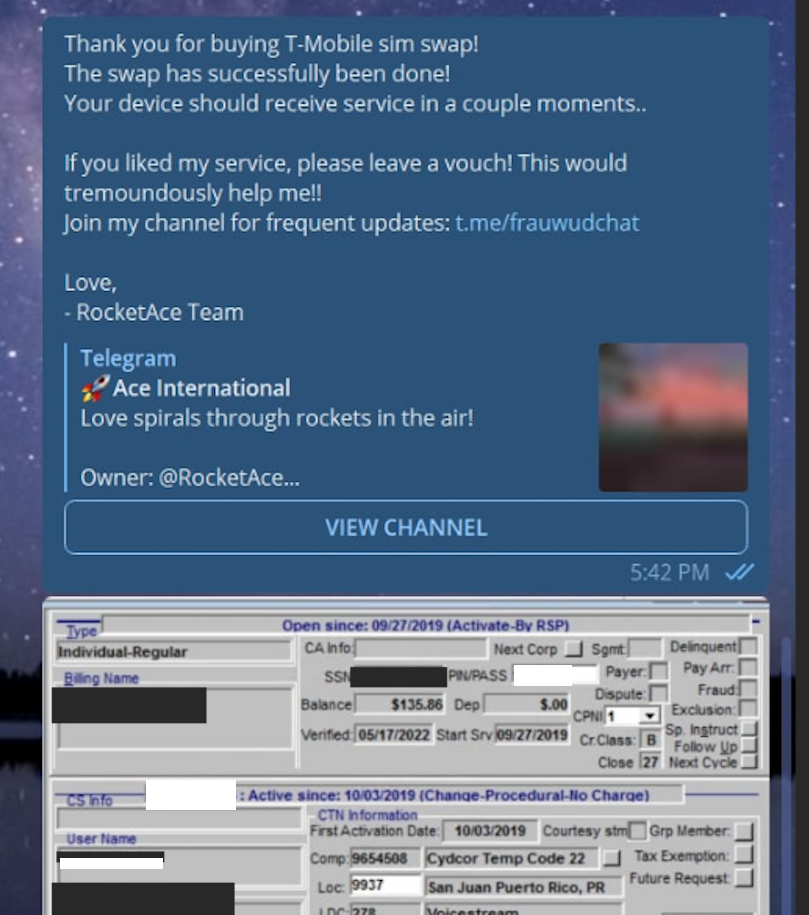

The group would then use that access to sell a SIM-swapping service that could redirect a target’s phone number to a device the attackers controlled, allowing them to intercept the victim’s phone calls and text messages (including one-time codes). Members of Star Chat targeted multiple wireless carriers with SIM-swapping attacks, but they focused mainly on phishing T-Mobile employees.

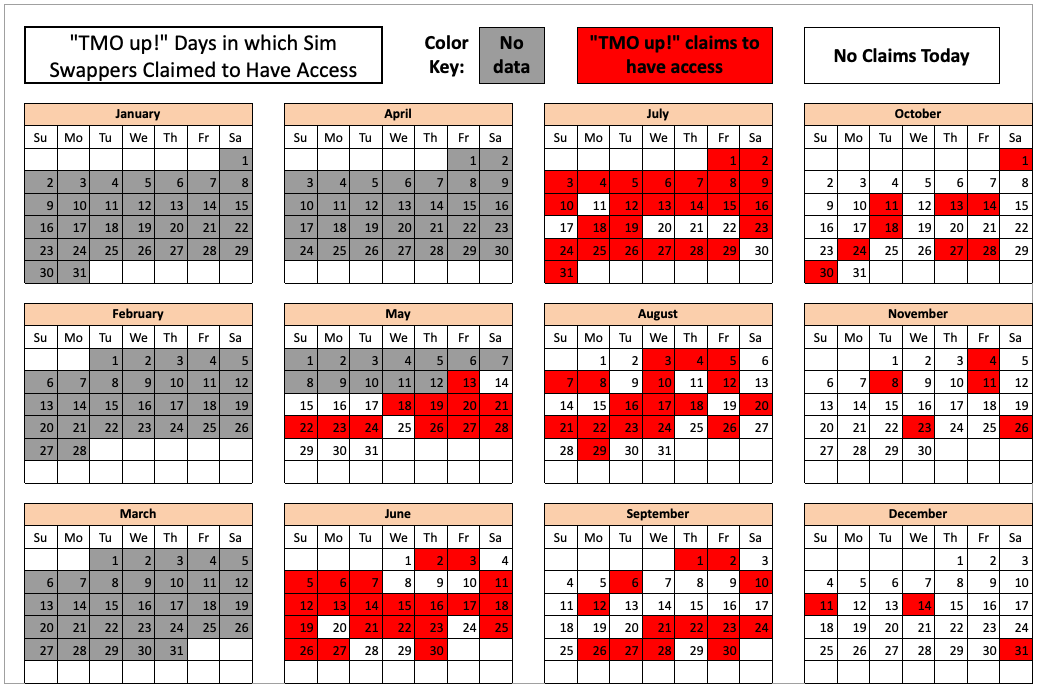

In February 2023, KrebsOnSecurity scrutinized more than seven months of these SIM-swapping solicitations on Star Chat, which almost daily peppered the public channel with “Tmo up!” and “Tmo down!” notices indicating periods wherein the group claimed to have active access to T-Mobile’s network.

A redacted receipt from Star Chat’s SIM-swapping service targeting a T-Mobile customer after the group gained access to internal T-Mobile employee tools.

The data showed that Star Chat — along with two other SIM-swapping groups operating at the same time — collectively broke into T-Mobile over a hundred times in the last seven months of 2022. However, Star Chat was by far the most prolific of the three, responsible for at least 70 of those incidents.

The 104 days in the latter half of 2022 in which different known SIM-swapping groups claimed access to T-Mobile employee tools. Star Chat was responsible for a majority of these incidents. Image: krebsonsecurity.com.

A review of EarthtoStar’s messages on Star Chat as indexed by the threat intelligence firm Flashpoint shows this person also sold “AT&T email resets” and AT&T call forwarding services for up to $1,200 per line. EarthtoStar explained the purpose of this service in a post on Telegram:

“Ok people are confused, so you know when u login to chase and it says ‘2fa required’ or whatever the fuck, well it gives you two options, SMS or Call. If you press call, and I forward the line to you then who do you think will get said call?”

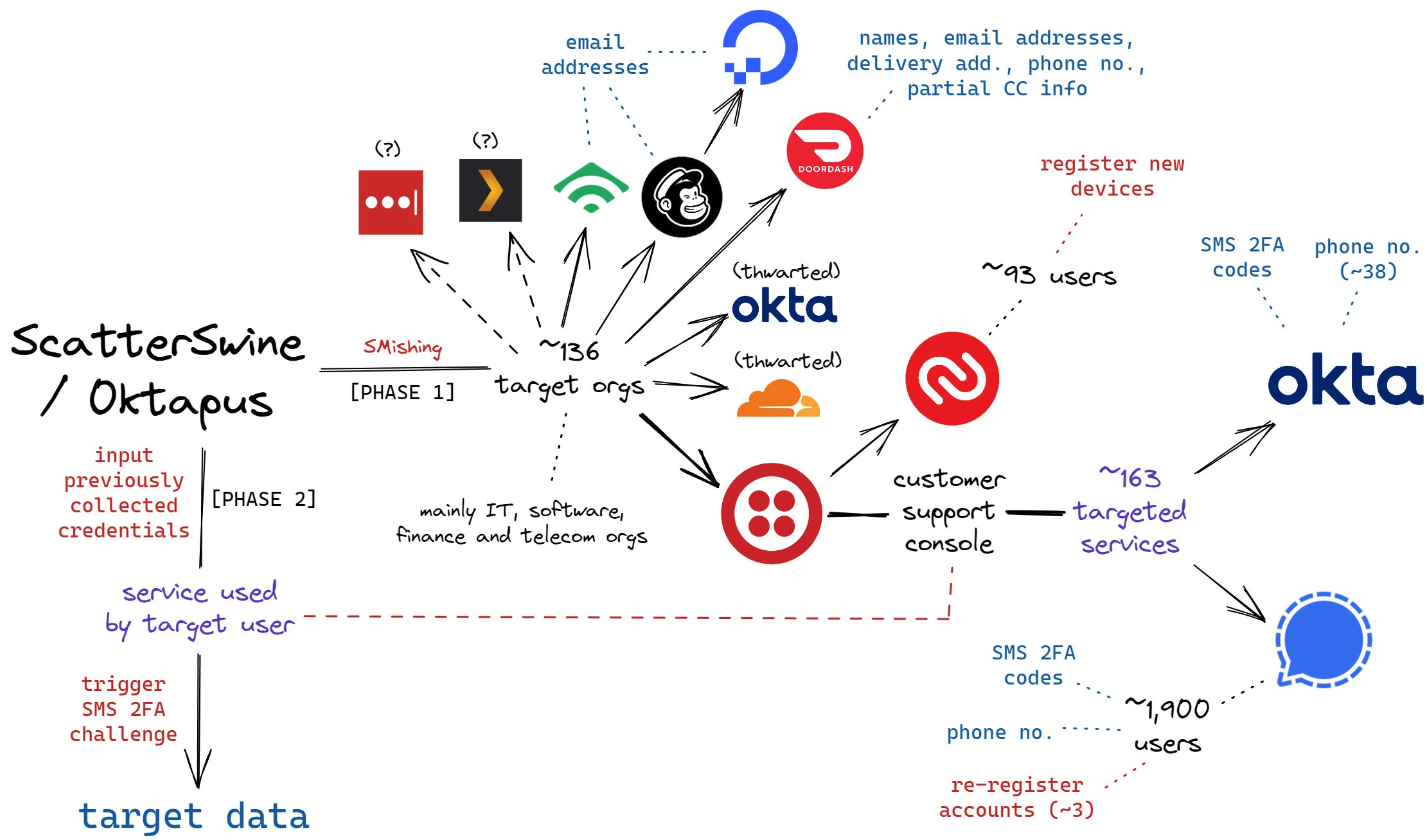

New Jersey prosecutors allege Jubair also was involved in a mass SMS phishing campaign during the summer of 2022 that stole single sign-on credentials from employees at hundreds of companies. The text messages asked users to click a link and log in at a phishing page that mimicked their employer’s Okta authentication page, saying recipients needed to review pending changes to their upcoming work schedules.

The phishing websites used a Telegram instant message bot to forward any submitted credentials in real-time, allowing the attackers to use the phished username, password and one-time code to log in as that employee at the real employer website.

That weeks-long SMS phishing campaign led to intrusions and data thefts at more than 130 organizations, including LastPass, DoorDash, Mailchimp, Plex and Signal.

A visual depiction of the attacks by the SMS phishing group known as 0ktapus, ScatterSwine, and Scattered Spider. Image: Amitai Cohen twitter.com/amitaico.

EarthtoStar’s group Star Chat specialized in phishing their way into business process outsourcing (BPO) companies that provide customer support for a range of multinational companies, including a number of the world’s largest telecommunications providers. In May 2022, EarthtoStar posted to the Telegram channel “Frauwudchat”:

“Hi, I am looking for partners in order to exfiltrate data from large telecommunications companies/call centers/alike, I have major experience in this field, [including] a massive call center which houses 200,000+ employees where I have dumped all user credentials and gained access to the [domain controller] + obtained global administrator I also have experience with REST API’s and programming. I have extensive experience with VPN, Citrix, cisco anyconnect, social engineering + privilege escalation. If you have any Citrix/Cisco VPN or any other useful things please message me and lets work.”

At around the same time in the summer of 2022, at least two different accounts tied to Star Chat (“RocketAce” and “Lopiu”) introduced the group’s services to denizens of the Russian-language cybercrime forum Exploit, including:

- SIM-swapping services targeting Verizon and T-Mobile customers;
- Dynamic phishing pages targeting customers of single sign-on providers like Okta;
- Malware development services;
- The sale of extended validation (EV) code signing certificates.

The user “Lopiu” on the Russian cybercrime forum Exploit advertised many of the same unique services offered by EarthtoStar and other Star Chat members. Image source: ke-la.com.

These two accounts on Exploit created multiple sales threads in which they claimed administrative access to U.S. telecommunications providers and asked other Exploit members for help in monetizing that access. In June 2022, RocketAce, which appears to have been just one of EarthtoStar’s many aliases, posted to Exploit:

Hello. I have access to a telecommunications company’s citrix and vpn. I would like someone to help me break out of the system and potentially attack the domain controller so all logins can be extracted we can discuss payment and things leave your telegram in the comments or private message me ! Looking for someone with knowledge in citrix/privilege escalation

On Nov. 15, 2022, EarthtoStar posted to their Star Sanctuary Telegram channel that they were hiring malware developers with a minimum of three years of experience and the ability to develop rootkits, backdoors and malware loaders.

“Optional: Endorsed by advanced APT Groups (e.g. Conti, Ryuk),” the ad concluded, referencing two of Russia’s most rapacious and destructive ransomware affiliate operations. “Part of a nation-state / ex-3l (3 letter-agency).”

The Telegram and Discord chat channels wherein Flowers and Jubair allegedly planned and executed their extortion attacks are part of a loose-knit network known as the Com, an English-speaking cybercrime community consisting mostly of individuals living in the United States, the United Kingdom, Canada and Australia.

Many of these Com chat servers have hundreds to thousands of members each, and some of the more interesting solicitations on these communities are job offers for in-person assignments and tasks that can be found if one searches for posts titled, “If you live near,” or “IRL job” — short for “in real life” job.

These “violence-as-a-service” solicitations typically involve “brickings,” where someone is hired to toss a brick through the window at a specified address. Other IRL jobs for hire include tire-stabbings, molotov cocktail hurlings, drive-by shootings, and even home invasions. The people targeted by these services are typically other criminals within the community, but it’s not unusual to see Com members asking others for help in harassing or intimidating security researchers and even the very law enforcement officers who are investigating their alleged crimes.

It remains unclear what precipitated this incident or what followed directly after, but on January 13, 2023, a Star Sanctuary account used by EarthtoStar solicited the home invasion of a sitting U.S. federal prosecutor from New York. That post included a photo of the prosecutor taken from the Justice Department’s website, along with the message:

“Need irl niggas, in home hostage shit no fucking pussies no skinny glock holding 100 pound niggas either”

Throughout late 2022 and early 2023, EarthtoStar’s alias “Brad” (a.k.a. “Brad_banned”) frequently advertised Star Chat’s malware development services, including custom malicious software designed to hide the attacker’s presence on a victim machine:

We can develop KERNEL malware which will achieve persistence for a long time, bypass firewalls and have reverse shell access.

This shit is literally like STAGE 4 CANCER FOR COMPUTERS!!!

Kernel meaning the highest level of authority on a machine.

This can range to simple shells to Bootkits.

Bypass all major EDR’s (SentinelOne, CrowdStrike, etc)

Patch EDR’s scanning functionality so it’s rendered useless!

Once implanted, extremely difficult to remove (basically impossible to even find)

Development Experience of several years and in multiple APT Groups.

Be one step ahead of the game. Prices start from $5,000+. Message @brad_banned to get a quote

In September 2023, both MGM Resorts and Caesars Entertainment suffered ransomware attacks at the hands of a Russian ransomware affiliate program known as ALPHV and BlackCat. Caesars reportedly paid a $15 million ransom in that incident.

Within hours of MGM publicly acknowledging the 2023 breach, members of Scattered Spider were claiming credit and telling reporters they’d broken in by social engineering a third-party IT vendor. At a hearing in London last week, U.K. prosecutors told the court Jubair was found in possession of more than $50 million in ill-gotten cryptocurrency, including funds that were linked to the Las Vegas casino hacks.

The Star Chat channel was finally banned by Telegram on March 9, 2025. But U.S. prosecutors say Jubair and fellow Scattered Spider members continued their hacking, phishing and extortion activities up until September 2025.

In April 2025, the Com was buzzing about the publication of “The Com Cast,” a lengthy screed detailing Jubair’s alleged cybercriminal activities and nicknames over the years. This account included photos and voice recordings allegedly of Jubair, and asserted that in his early days on the Com Jubair used the nicknames Clark and Miku (these are both aliases used by Everlynn in connection with their fake EDR services).

Thalha Jubair (right), without his large-rimmed glasses, in an undated photo posted in The Com Cast.

More recently, the anonymous Com Cast author(s) claimed, Jubair had used the nickname “Operator,” which corresponds to a Com member who ran an automated Telegram-based doxing service that pulled consumer records from hacked data broker accounts. That public outing came after Operator allegedly seized control over the Doxbin, a long-running and highly toxic community that is used to “dox” or post deeply personal information on people.

“Operator/Clark/Miku: A key member of the ransomware group Scattered Spider, which consists of a diverse mix of individuals involved in SIM swapping and phishing,” the Com Cast account stated. “The group is an amalgamation of several key organizations, including Infinity Recursion (owned by Operator), True Alcorians (owned by earth2star), and Lapsus, which have come together to form a single collective.”

The New Jersey complaint (PDF) alleges Jubair and other Scattered Spider members committed computer fraud, wire fraud, and money laundering in relation to at least 120 computer network intrusions involving 47 U.S. entities between May 2022 and September 2025. The complaint alleges the group’s victims paid at least $115 million in ransom payments.

U.S. authorities say they traced some of those payments to Scattered Spider to an Internet server controlled by Jubair. The complaint states that a cryptocurrency wallet discovered on that server was used to purchase several gift cards, one of which was used at a food delivery company to send food to his apartment. Another gift card purchased with cryptocurrency from the same server was allegedly used to fund online gaming accounts under Jubair’s name. U.S. prosecutors said that when they seized that server they also seized $36 million in cryptocurrency.

The complaint also charges Jubair with involvement in a hacking incident in January 2025 against the U.S. courts system that targeted a U.S. magistrate judge overseeing a related Scattered Spider investigation. That other investigation appears to have been the prosecution of Noah Michael Urban, a 20-year-old Florida man charged in November 2024 by prosecutors in Los Angeles as one of five alleged Scattered Spider members.

Urban pleaded guilty in April 2025 to wire fraud and conspiracy charges, and in August he was sentenced to 10 years in federal prison. Speaking with KrebsOnSecurity from jail after his sentencing, Urban asserted that the judge gave him more time than prosecutors requested because the judge was mad that Scattered Spider had hacked the judge’s own email account.

Noah “Kingbob” Urban, posting to Twitter/X around the time of his sentencing on Aug. 20.

A court transcript (PDF) from a status hearing in February 2025 shows Urban was telling the truth about the hacking incident that happened while he was in federal custody. The judge told attorneys for both sides that a co-defendant in the California case was trying to find out about Mr. Urban’s activity in the Florida case, and that the hacker accessed the account by impersonating a judge over the phone and requesting a password reset.

Allison Nixon is chief research officer at the New York-based security firm Unit 221B, and easily one of the world’s leading experts on Com-based cybercrime activity. Nixon said the core problem with legally prosecuting well-known cybercriminals from the Com has traditionally been that the top offenders tend to be under the age of 18, and thus difficult to charge under federal hacking statutes.

In the United States, prosecutors typically wait until an underage cybercrime suspect becomes an adult to charge them. But until that day comes, she said, Com actors often feel emboldened to continue committing — and very often bragging about — serious cybercrime offenses.

“Here we have a special category of Com offenders that effectively enjoy legal immunity,” Nixon told KrebsOnSecurity. “Most get recruited to Com groups when they are older, but of those that join very young, such as 12 or 13, they seem to be the most dangerous because at that age they have no grounding in reality and so much longevity before they exit their legal immunity.”

Nixon said U.K. authorities face the same challenge when they briefly detain and search the homes of underage Com suspects: Namely, the teen suspects simply go right back to their respective cliques in the Com and start robbing and hurting people again the minute they’re released.

Indeed, the U.K. court heard from prosecutors last week that both Scattered Spider suspects were detained and/or searched by local law enforcement on multiple occasions, only to return to the Com less than 24 hours after being released each time.

“What we see is these young Com members become vectors for perpetrators to commit enormously harmful acts and even child abuse,” Nixon said. “The members of this special category of people who enjoy legal immunity are meeting up with foreign nationals and conducting these sometimes heinous acts at their behest.”

Nixon said many of these individuals have few friends in real life because they spend virtually all of their waking hours on Com channels, and so their entire sense of identity, community and self-worth gets wrapped up in their involvement with these online gangs. She said if the law was such that prosecutors could treat these people commensurate with the amount of harm they cause society, that would probably clear up a lot of this problem.

“If law enforcement was allowed to keep them in jail, they would quit reoffending,” she said.

The Times of London reports that Flowers is facing three charges under the Computer Misuse Act: two of conspiracy to commit an unauthorized act in relation to a computer causing/creating risk of serious damage to human welfare/national security and one of attempting to commit the same act. Maximum sentences for these offenses can range from 14 years to life in prison, depending on the impact of the crime.

Jubair is reportedly facing two charges in the U.K.: One of conspiracy to commit an unauthorized act in relation to a computer causing/creating risk of serious damage to human welfare/national security and one of failing to comply with a section 49 notice to disclose the key to protected information.

In the United States, Jubair is charged with computer fraud conspiracy, two counts of computer fraud, wire fraud conspiracy, two counts of wire fraud, and money laundering conspiracy. If extradited to the U.S., tried and convicted on all charges, he faces a maximum penalty of 95 years in prison.

In July 2025, the United Kingdom barred victims of hacking from paying ransoms to cybercriminal groups unless approved by officials. U.K. organizations that are considered part of critical infrastructure reportedly will face a complete ban, as will the entire public sector. U.K. victims of a hack are now required to notify officials to better inform policymakers on the scale of Britain’s ransomware problem.

For further reading (bless you), check out Bloomberg’s poignant story last week based on a year’s worth of jailhouse interviews with convicted Scattered Spider member Noah Urban.

At least 18 popular JavaScript code packages that are collectively downloaded more than two billion times each week were briefly compromised with malicious software today, after a developer involved in maintaining the projects was phished. The attack appears to have been quickly contained and was narrowly focused on stealing cryptocurrency. But experts warn that a similar attack with a slightly more nefarious payload could lead to a disruptive malware outbreak that is far more difficult to detect and restrain.

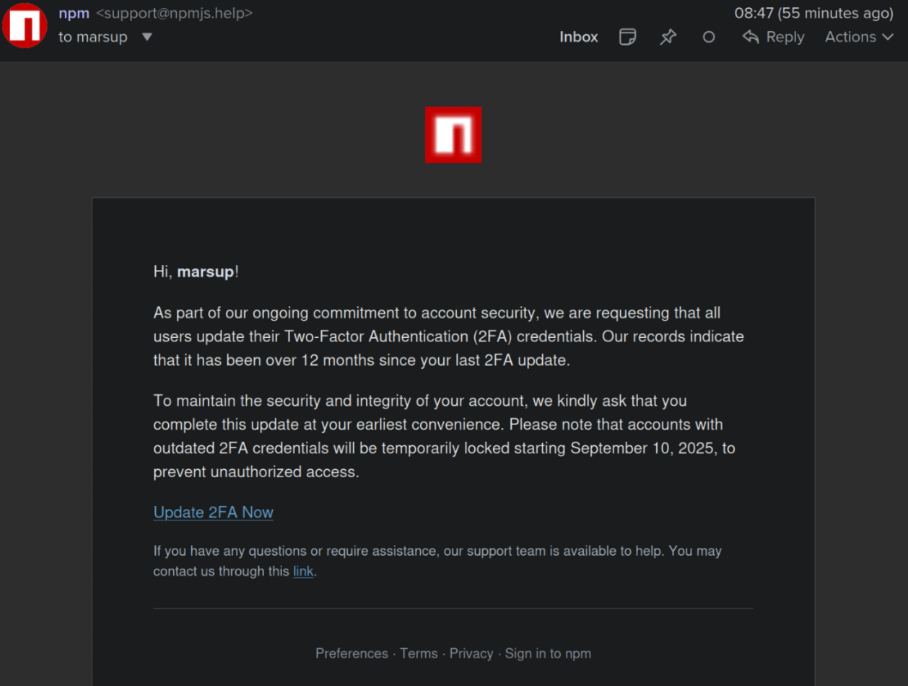

This phishing email lured a developer into logging in at a fake NPM website and supplying a one-time token for two-factor authentication. The phishers then used that developer’s NPM account to add malicious code to at least 18 popular JavaScript code packages.

Aikido is a security firm in Belgium that monitors new code updates to major open-source code repositories, scanning any code updates for suspicious and malicious code. In a blog post published today, Aikido said its systems found malicious code had been added to at least 18 widely-used code libraries available on NPM (short for “Node Package Manager”), which acts as a central hub for JavaScript development and the latest updates to widely-used JavaScript components.

JavaScript is a powerful web-based scripting language used by countless websites to build a more interactive experience with users, such as entering data into a form. But there’s no need for each website developer to build a program from scratch for entering data into a form when they can just reuse already existing packages of code at NPM that are specifically designed for that purpose.

Unfortunately, if cybercriminals manage to phish NPM credentials from developers, they can introduce malicious code that allows attackers to fundamentally control what people see in their web browser when they visit a website that uses one of the affected code libraries.

According to Aikido, the attackers injected a piece of code that silently intercepts cryptocurrency activity in the browser, “manipulates wallet interactions, and rewrites payment destinations so that funds and approvals are redirected to attacker-controlled accounts without any obvious signs to the user.”

“This malware is essentially a browser-based interceptor that hijacks both network traffic and application APIs,” Aikido researcher Charlie Eriksen wrote. “What makes it dangerous is that it operates at multiple layers: Altering content shown on websites, tampering with API calls, and manipulating what users’ apps believe they are signing. Even if the interface looks correct, the underlying transaction can be redirected in the background.”

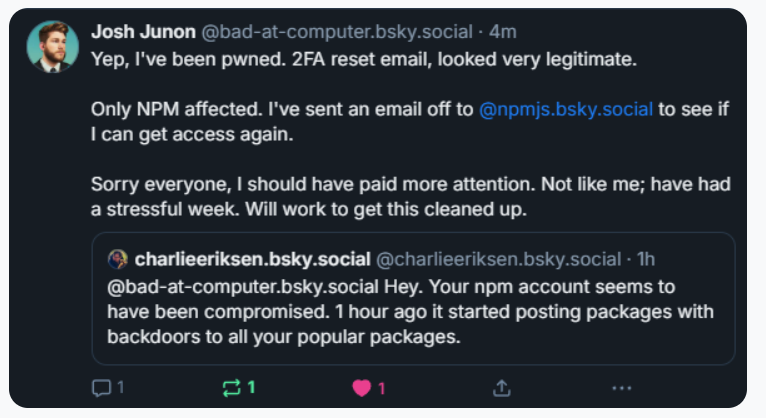

Aikido said it used the social network Bluesky to notify the affected developer, Josh Junon, who quickly replied that he was aware of having just been phished. The phishing email that Junon fell for was part of a larger campaign that spoofed NPM and told recipients they were required to update their two-factor authentication (2FA) credentials. The phishing site mimicked NPM’s login page, and intercepted Junon’s credentials and 2FA token. Once logged in, the phishers then changed the email address on file for Junon’s NPM account, temporarily locking him out.

Aikido notified the maintainer on Bluesky, who replied at 15:15 UTC that he was aware of being compromised, and starting to clean up the compromised packages.

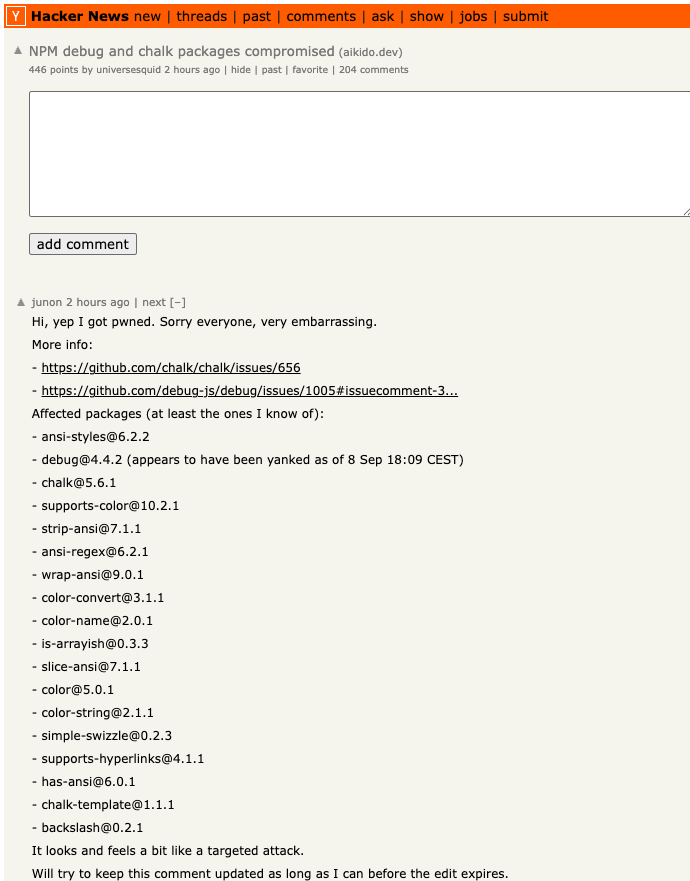

Junon also issued a mea culpa on HackerNews, telling the community’s coder-heavy readership, “Hi, yep I got pwned.”

“It looks and feels a bit like a targeted attack,” Junon wrote. “Sorry everyone, very embarrassing.”

Philippe Caturegli, “chief hacking officer” at the security consultancy Seralys, observed that the attackers appear to have registered their spoofed website — npmjs[.]help — just two days before sending the phishing email. The spoofed website used services from dnsexit[.]com, a “dynamic DNS” company that also offers “100% free” domain names that can instantly be pointed at any IP address controlled by the user.
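The spoofed domain worked because it looks plausible at a glance. As a rough illustration of the check a mail filter or a cautious developer might apply, the Python sketch below accepts a link only if its hostname is a trusted domain or a subdomain of one. The allowlist containing only npmjs.com and the function name `is_trusted_link` are assumptions made for this example, not anything NPM or the researchers published.

```python
from urllib.parse import urlsplit

# Assumption for this sketch: npmjs.com is the only domain trusted for NPM logins.
TRUSTED_DOMAINS = {"npmjs.com"}


def is_trusted_link(url: str) -> bool:
    """Return True only if the URL's host is a trusted domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


# The spoofed domain is stored defanged and re-fanged only for the comparison.
spoofed = "https://npmjs[.]help/".replace("[.]", ".")

print(is_trusted_link("https://www.npmjs.com/login"))     # True
print(is_trusted_link(spoofed))                           # False
print(is_trusted_link("https://npmjs.com.example.net/"))  # False
```

Matching on the end of the hostname, rather than searching for the string "npmjs" anywhere in the link, is what rejects both the lookalike top-level domain and the trick of prepending the real name to an unrelated domain.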

Junon’s mea culpa on HackerNews today listed the affected packages.

Caturegli said it’s remarkable that the attackers in this case were not more ambitious or malicious with their code modifications.

“The crazy part is they compromised billions of websites and apps just to target a couple of cryptocurrency things,” he said. “This was a supply chain attack, and it could easily have been something much worse than crypto harvesting.”

Aikido’s Eriksen agreed, saying countless websites dodged a bullet because this incident was handled in a matter of hours. As an example of how these supply-chain attacks can escalate quickly, Eriksen pointed to another compromise of an NPM developer in late August that added malware to “nx,” an open-source code development toolkit with as many as six million weekly downloads.

In the nx compromise, the attackers introduced code that scoured the user’s device for authentication tokens from programmer destinations like GitHub and NPM, as well as SSH and API keys. But instead of sending those stolen credentials to a central server controlled by the attackers, the malicious code created a new public repository in the victim’s GitHub account, and published the stolen data there for all the world to see and download.

Eriksen said coding platforms like GitHub and NPM should be doing more to ensure that any new code commits for broadly-used packages require a higher level of attestation that confirms the code in question was in fact submitted by the person who owns the account, and not just by that person’s account.

“More popular packages should require attestation that it came through trusted provenance and not just randomly from some location on the Internet,” Eriksen said. “Where does the package get uploaded from, by GitHub in response to a new pull request into the main branch, or somewhere else? In this case, they didn’t compromise the target’s GitHub account. They didn’t touch that. They just uploaded a modified version that didn’t come where it’s expected to come from.”

Eriksen said code repository compromises can be devastating for developers, many of whom end up abandoning their projects entirely after such an incident.

“It’s unfortunate because one thing we’ve seen is people have their projects get compromised and they say, ‘You know what, I don’t have the energy for this and I’m just going to deprecate the whole package,’” Eriksen said.

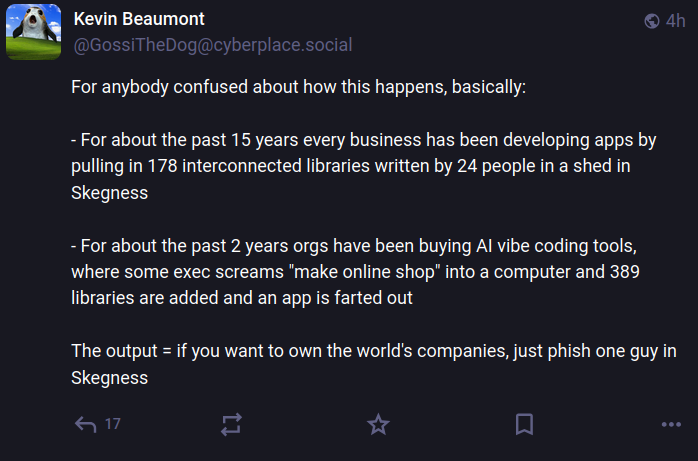

Kevin Beaumont, a frequently quoted security expert who writes about security incidents at the blog doublepulsar.com, has been following this story closely today in frequent updates to his account on Mastodon. Beaumont said the incident is a reminder that much of the planet still depends on code that is ultimately maintained by an exceedingly small number of people who are mostly overburdened and under-resourced.

“For about the past 15 years every business has been developing apps by pulling in 178 interconnected libraries written by 24 people in a shed in Skegness,” Beaumont wrote on Mastodon. “For about the past 2 years orgs have been buying AI vibe coding tools, where some exec screams ‘make online shop’ into a computer and 389 libraries are added and an app is farted out. The output = if you want to own the world’s companies, just phish one guy in Skegness.”

Image: https://infosec.exchange/@GossiTheDog@cyberplace.social.

Aikido recently launched a product that aims to help development teams ensure that every code library used is checked for malware before it can be used or installed. Nicholas Weaver, a researcher with the International Computer Science Institute, a nonprofit in Berkeley, Calif., said Aikido’s new offering exists because many organizations are still one successful phishing attack away from a supply-chain nightmare.
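To give a sense of what checking libraries before install can mean in practice, here is a minimal Python sketch that compares the packages pinned in an npm lockfile against a list of known-bad versions. It is not how Aikido’s product works. The blocklist entry `example-color-lib` at version 9.9.9 is a placeholder invented for this example, and the code assumes the lockfile uses the `packages` layout found in lockfile versions 2 and 3.

```python
import json

# Hypothetical blocklist: package name -> versions known to be malicious.
# These are placeholders, not the packages or versions from this incident.
BLOCKLIST = {"example-color-lib": {"9.9.9"}}


def find_blocked(lockfile_text: str, blocklist: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return sorted (name, version) pairs from an npm lockfile that are on the blocklist."""
    lock = json.loads(lockfile_text)
    hits = []
    # Lockfile v2/v3 lists installed packages under "packages", keyed by install path.
    for path, meta in lock.get("packages", {}).items():
        if not path:
            continue  # the empty key describes the root project itself
        # "node_modules/a/node_modules/b" -> "b"; scoped names like "@s/pkg" are kept whole.
        name = path.split("node_modules/")[-1]
        version = meta.get("version", "")
        if version in blocklist.get(name, set()):
            hits.append((name, version))
    return sorted(hits)


sample = json.dumps({
    "lockfileVersion": 3,
    "packages": {
        "": {"name": "demo-app"},
        "node_modules/example-color-lib": {"version": "9.9.9"},
        "node_modules/some-other-lib": {"version": "1.0.0"},
    },
})
print(find_blocked(sample, BLOCKLIST))  # [('example-color-lib', '9.9.9')]
```

A check like this only catches versions that someone has already flagged, so it complements rather than replaces the stronger account protections discussed here.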

Weaver said these types of supply-chain compromises will continue as long as people responsible for maintaining widely-used code continue to rely on phishable forms of 2FA.

“NPM should only support phish-proof authentication,” Weaver said, referring to physical security keys that are phish-proof — meaning that even if phishers manage to steal your username and password, they still can’t log in to your account without also possessing that physical key.

“All critical infrastructure needs to use phish-proof 2FA, and given the dependencies in modern software, archives such as NPM are absolutely critical infrastructure,” Weaver said. “That NPM does not require that all contributor accounts use security keys or similar 2FA methods should be considered negligence.”

The recent mass-theft of authentication tokens from Salesloft, whose AI chatbot is used by a broad swath of corporate America to convert customer interaction into Salesforce leads, has left many companies racing to invalidate the stolen credentials before hackers can exploit them. Now Google warns the breach goes far beyond access to Salesforce data, noting the hackers responsible also stole valid authentication tokens for hundreds of online services that customers can integrate with Salesloft, including Slack, Google Workspace, Amazon S3, Microsoft Azure, and OpenAI.

Salesloft says its products are trusted by 5,000+ customers. Some of the bigger names are visible on the company’s homepage.

Salesloft disclosed on August 20 that, “Today, we detected a security issue in the Drift application,” referring to the technology that powers an AI chatbot used by so many corporate websites. The alert urged customers to re-authenticate the connection between the Drift and Salesforce apps to invalidate their existing authentication tokens, but it said nothing then to indicate those tokens had already been stolen.

On August 26, the Google Threat Intelligence Group (GTIG) warned that unidentified hackers tracked as UNC6395 used the access tokens stolen from Salesloft to siphon large amounts of data from numerous corporate Salesforce instances. Google said the data theft began as early as Aug. 8, 2025 and lasted through at least Aug. 18, 2025, and that the incident did not involve any vulnerability in the Salesforce platform.

Google said the attackers have been sifting through the massive data haul for credential materials such as AWS keys, VPN credentials, and credentials to the cloud storage provider Snowflake.

“If successful, the right credentials could allow them to further compromise victim and client environments, as well as pivot to the victim’s clients or partner environments,” the GTIG report stated.
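Organizations wondering whether their own Salesforce records contain this kind of material could run a similar search themselves. The Python sketch below is a rough illustration, not GTIG’s tooling: it flags lines of free text that contain something shaped like an AWS access key ID (the well-known `AKIA` prefix followed by 16 uppercase letters or digits) or a simple `password = value` assignment. The pattern names, the function `scan_for_secrets`, and the sample text are assumptions made for this example, and the password heuristic in particular is crude.

```python
import re

# Illustrative patterns only; real secret scanners use far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for each line that looks like it holds a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                # Report where the match is, not the secret itself.
                findings.append((lineno, label))
    return findings


# Made-up support case text; the key is AWS's documented example value.
sample_case_notes = """\
Customer cannot log in to the VPN portal.
They pasted their config: password = hunter2
Also shared key AKIAIOSFODNN7EXAMPLE for the S3 bucket.
"""
print(scan_for_secrets(sample_case_notes))
# [(2, 'password_assignment'), (3, 'aws_access_key_id')]
```

Anything such a scan turns up should be treated as exposed and rotated, since the attackers have had the same data to search.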

The GTIG updated its advisory on August 28 to acknowledge the attackers used the stolen tokens to access email from “a very small number of Google Workspace accounts” that were specially configured to integrate with Salesloft. More importantly, it warned organizations to immediately invalidate all tokens stored in or connected to their Salesloft integrations — regardless of the third-party service in question.

“Given GTIG’s observations of data exfiltration associated with the campaign, organizations using Salesloft Drift to integrate with third-party platforms (including but not limited to Salesforce) should consider their data compromised and are urged to take immediate remediation steps,” Google advised.