# Pegasus Pentest Arsenal

A comprehensive web application security testing toolkit that combines 10 powerful penetration testing features into one tool.

Identifies potential security misconfigurations

JWT Token Inspector

Detects common JWT vulnerabilities

Parameter Pollution Finder

Detects server-side parameter handling issues

CORS Misconfiguration Scanner

Detects credential exposure risks

Upload Bypass Tester

Identifies dangerous file type handling

Exposed .git Directory Finder

Tests for sensitive information disclosure

SSRF (Server Side Request Forgery) Detector

Includes cloud metadata endpoint tests

Blind SQL Injection Time Delay Detector

Identifies injectable parameters

Local File Inclusion (LFI) Mapper

Supports various encoding bypasses

Web Application Firewall (WAF) Fingerprinter
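To make one of the listed checks concrete, here is a minimal Python sketch of the kind of test a JWT inspector might run. It is not taken from the tool's source: the function names (`inspect_jwt`, `make_unsigned_token`) and the three checks chosen are assumptions made for illustration only.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip off."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def inspect_jwt(token: str) -> list[str]:
    """Return warnings for a few well-known JWT weaknesses (illustrative only)."""
    parts = token.split(".")
    if len(parts) != 3:
        return ["not a three-part JWT"]
    header = json.loads(b64url_decode(parts[0]))
    payload = json.loads(b64url_decode(parts[1]))
    warnings = []
    if str(header.get("alg", "")).lower() == "none":
        warnings.append("alg is 'none': token is unsigned")
    if parts[2] == "":
        warnings.append("empty signature segment")
    if "exp" not in payload:
        warnings.append("no 'exp' claim: token has no expiry")
    return warnings


def make_unsigned_token(header: dict, payload: dict) -> str:
    """Build a demo token with an empty signature, for local testing only."""
    def enc(obj: dict) -> str:
        raw = json.dumps(obj, separators=(",", ":")).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{enc(header)}.{enc(payload)}."


# Hypothetical demo token; no real service issued it.
demo = make_unsigned_token({"alg": "none", "typ": "JWT"}, {"sub": "demo"})
findings = inspect_jwt(demo)
```

The sketch only reads the token; it never verifies a signature, so it needs no key material and can run offline.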

git clone https://github.com/sobri3195/pegasus-pentest-arsenal.git

cd pegasus-pentest-arsenal

python -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

pip install -r requirements.txt

python pegasus_pentest.py

This project is licensed under the MIT License - see the LICENSE file for details.

This tool is provided for educational and authorized testing purposes only. Users are responsible for obtaining proper authorization before testing any target. The authors are not responsible for any misuse or damage caused by this tool.

Thank you for following me! https://cybdetective.com

| Name | Link | Description | Price |

|---|---|---|---|

| Shodan | https://developer.shodan.io | Search engine for Internet-connected hosts and devices | from $59/month |

| Netlas.io | https://netlas-api.readthedocs.io/en/latest/ | Search engine for Internet-connected hosts and devices. Read more at Netlas CookBook | Partly FREE |

| Fofa.so | https://fofa.so/static_pages/api_help | Search engine for Internet-connected hosts and devices | ??? |

| Censys.io | https://censys.io/api | Search engine for Internet-connected hosts and devices | Partly FREE |

| Hunter.how | https://hunter.how/search-api | Search engine for Internet-connected hosts and devices | Partly FREE |

| Fullhunt.io | https://api-docs.fullhunt.io/#introduction | Search engine for Internet-connected hosts and devices | Partly FREE |

| IPQuery.io | https://ipquery.io | API for IP information such as IP risk, geolocation data, and ASN details | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Social Links | https://sociallinks.io/products/sl-api | Email info lookup, phone info lookup, individual and company profiling, social media tracking, dark web monitoring and more. Code example of using this API for face search in this repo | PAID. Price per request |

| Name | Link | Description | Price |

|---|---|---|---|

| Numverify | https://numverify.com | Global Phone Number Validation & Lookup JSON API. Supports 232 countries. | 250 requests FREE |

| Twilio | https://www.twilio.com/docs/lookup/api | Provides a way to retrieve additional information about a phone number | Free or $0.01 per request (for caller lookup) |

| Plivo | https://www.plivo.com/lookup/ | Determine carrier, number type, format, and country for any phone number worldwide | from $0.04 per request |

| GetContact | https://github.com/kovinevmv/getcontact | Find info about a user by phone number | from $6.89 per month/100 requests |

| Veriphone | https://veriphone.io/ | Phone number validation & carrier lookup | 1000 requests/month FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Global Address | https://rapidapi.com/adminMelissa/api/global-address/ | Easily verify, check or lookup address | FREE |

| US Street Address | https://smartystreets.com/docs/cloud/us-street-api | Validate and append data for any US postal address | FREE |

| Google Maps Geocoding API | https://developers.google.com/maps/documentation/geocoding/overview | convert addresses (like "1600 Amphitheatre Parkway, Mountain View, CA") into geographic coordinates | 0.005 USD per request |

| Postcoder | https://postcoder.com/address-lookup | Find address by postcode | £130/5000 requests |

| Zipcodebase | https://zipcodebase.com | Lookup postal codes, calculate distances and much more | 5000 requests FREE |

| Openweathermap geocoding API | https://openweathermap.org/api/geocoding-api | get geographical coordinates (lat, lon) by using name of the location (city name or area name) | 60 calls/minute 1,000,000 calls/month |

| DistanceMatrix | https://distancematrix.ai/product | Calculate, evaluate and plan your routes | $1.25-$2 per 1000 elements |

| Geotagging API | https://geotagging.ai/ | Predict geolocations by texts | Freemium |

| Name | Link | Description | Price |

|---|---|---|---|

| Appruve.co | https://appruve.co | Allows you to verify the identities of individuals, businesses, and connect to financial account data across Africa | Paid |

| Onfido.com | https://onfido.com | Onfido Document Verification lets your users scan a photo ID from any device, before checking it's genuine. Combined with Biometric Verification, it's a seamless way to anchor an account to the real identity of a customer. India | Paid |

| Surepass.io | https://surepass.io/passport-id-verification-api/ | Passport, Photo ID and Driver License Verification in India | Paid |

| Name | Link | Description | Price |

|---|---|---|---|

| Open corporates | https://api.opencorporates.com | Companies information | Paid, price upon request |

| Linkedin company search API | https://docs.microsoft.com/en-us/linkedin/marketing/integrations/community-management/organizations/company-search?context=linkedin%2Fcompliance%2Fcontext&tabs=http | Find companies using keywords, industry, location, and other criteria | FREE |

| Mattermark | https://rapidapi.com/raygorodskij/api/Mattermark/ | Get companies and investor information | free 14-day trial, from $49 per month |

| Name | Link | Description | Price |

|---|---|---|---|

| API OSINT DS | https://github.com/davidonzo/apiosintDS | Collect info about IPv4/FQDN/URLs and file hashes in md5, sha1 or sha256 | FREE |

| IPInfoDB API | https://www.ipinfodb.com/api | The API returns the location of an IP address (country, region, city, zipcode, latitude, longitude) and the associated timezone in XML, JSON or plain text format | FREE |

| Domainsdb.info | https://domainsdb.info | Registered Domain Names Search | FREE |

| BGPView | https://bgpview.docs.apiary.io/# | Lets consumers view all sorts of analytics data about the current state and structure of the internet | FREE |

| DNSCheck | https://www.dnscheck.co/api | monitor the status of both individual DNS records and groups of related DNS records | up to 10 DNS records/FREE |

| Cloudflare Trace | https://github.com/fawazahmed0/cloudflare-trace-api | Get IP Address, Timestamp, User Agent, Country Code, IATA, HTTP Version, TLS/SSL Version & More | FREE |

| Host.io | https://host.io/ | Get info about domain | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| BeVigil OSINT API | https://bevigil.com/osint-api | provides access to millions of asset footprint data points including domain intel, cloud services, API information, and third party assets extracted from millions of mobile apps being continuously uploaded and scanned by users on bevigil.com | 50 credits free/1000 credits/$50 |

| Name | Link | Description | Price |

|---|---|---|---|

| WebScraping.AI | https://webscraping.ai/ | Web Scraping API with built-in proxies and JS rendering | FREE |

| ZenRows | https://www.zenrows.com/ | Web Scraping API that bypasses anti-bot solutions while offering JS rendering and rotating proxies | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Whois freaks | https://whoisfreaks.com/ | Well-parsed and structured domain WHOIS data for all domain names, registrars, countries and TLDs since the birth of the internet | $19/5000 requests |

| WhoisXMLApi | https://whois.whoisxmlapi.com | Gathers a variety of domain ownership and registration data points from a comprehensive WHOIS database | 500 requests per month/FREE |

| IP2WHOIS | https://www.ip2whois.com/developers-api | Get detailed info about a domain | 500 requests/month FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Ipstack | https://ipstack.com | Detect country, region, city and zip code | FREE |

| Ipgeolocation.io | https://ipgeolocation.io | provides country, city, state, province, local currency, latitude and longitude, company detail, ISP lookup, language, zip code, country calling code, time zone, current time, sunset and sunrise time, moonset and moonrise | 30 000 requests per month/FREE |

| IPInfoDB | https://ipinfodb.com/api | Free Geolocation tools and APIs for country, region, city and time zone lookup by IP address | FREE |

| IP API | https://ip-api.com/ | Free domain/IP geolocation info | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Mylnikov API | https://www.mylnikov.org | public API implementation of Wi-Fi Geo-Location database | FREE |

| Wigle | https://api.wigle.net/ | get location and other information by SSID | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| PeeringDB | https://www.peeringdb.com/apidocs/ | Database of networks, and the go-to location for interconnection data | FREE |

| PacketTotal | https://packettotal.com/api.html | Analyze .pcap files | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Binlist.net | https://binlist.net/ | get information about bank by BIN | FREE |

| FDIC Bank Data API | https://banks.data.fdic.gov/docs/ | institutions, locations and history events | FREE |

| Amdoren | https://www.amdoren.com/currency-api/ | Free currency API with over 150 currencies | FREE |

| VATComply.com | https://www.vatcomply.com/documentation | Exchange rates, geolocation and VAT number validation | FREE |

| Alpaca | https://alpaca.markets/docs/api-documentation/api-v2/market-data/alpaca-data-api-v2/ | Realtime and historical market data on all US equities and ETFs | FREE |

| Swiftcodesapi | https://swiftcodesapi.com | Verifying the validity of a bank SWIFT code or IBAN account number | $39 per month/4000 swift lookups |

| IBANAPI | https://ibanapi.com | Validate IBAN number and get bank account information from it | Freemium/10$ Starter plan |

| Name | Link | Description | Price |

|---|---|---|---|

| EVA | https://eva.pingutil.com/ | Measuring email deliverability & quality | FREE |

| Mailboxlayer | https://mailboxlayer.com/ | Simple REST API measuring email deliverability & quality | 100 requests FREE, 5000 requests per month for $14.49 |

| EmailCrawlr | https://emailcrawlr.com/ | Get key information about company websites. Find all email addresses associated with a domain. Get social accounts associated with an email. Verify email address deliverability. | 200 requests FREE, 5000 requests for $40 |

| Voila Norbert | https://www.voilanorbert.com/api/ | Find anyone's email address and ensure your emails reach real people | from $49 per month |

| Kickbox | https://open.kickbox.com/ | Email verification API | FREE |

| FachaAPI | https://api.facha.dev/ | Allows checking if an email domain is a temporary email domain | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Genderize.io | https://genderize.io | Instantly answers the question of how likely a certain name is to be male or female and shows the popularity of the name. | 1000 names/day free |

| Agify.io | https://agify.io | Predicts the age of a person given their name | 1000 names/day free |

| Nationalize.io | https://nationalize.io | Predicts the nationality of a person given their name | 1000 names/day free |

| Name | Link | Description | Price |

|---|---|---|---|

| HaveIBeenPwned | https://haveibeenpwned.com/API/v3 | Lists pwned accounts (email addresses and usernames) | $3.50 per month |

| Psbdmp.ws | https://psbdmp.ws/api | Search in Pastebin | $9.95 per 10000 requests |

| LeakPeek | https://psbdmp.ws/api | Search in leak databases | $9.99 per 4 weeks unlimited access |

| BreachDirectory.com | https://breachdirectory.com/api_documentation | search domain in data breaches databases | FREE |

| Leak-Lookup | https://leak-lookup.com/api | Search domain, email_address, fullname, ip address, phone, password, username in leak databases | 10 requests FREE |

| BreachDirectory.org | https://rapidapi.com/rohan-patra/api/breachdirectory/pricing | Search domain, email_address, fullname, ip address, phone, password, username in leak databases (possible to view password hashes) | 50 requests per month/FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Wayback Machine API (Memento API, CDX Server API, Wayback Availability JSON API) | https://archive.org/help/wayback_api.php | Retrieve information about Wayback capture data | FREE |

| TROVE (Australian Web Archive) API | https://trove.nla.gov.au/about/create-something/using-api | Retrieve information about TROVE capture data | FREE |

| Archive-it API | https://support.archive-it.org/hc/en-us/articles/115001790023-Access-Archive-It-s-Wayback-index-with-the-CDX-C-API | Retrieve information about archive-it capture data | FREE |

| UK Web Archive API | https://ukwa-manage.readthedocs.io/en/latest/#api-reference | Retrieve information about UK Web Archive capture data | FREE |

| Arquivo.pt API | https://github.com/arquivo/pwa-technologies/wiki/Arquivo.pt-API | Allows full-text search and access preserved web content and related metadata. It is also possible to search by URL, accessing all versions of preserved web content. API returns a JSON object. | FREE |

| Library Of Congress archive API | https://www.loc.gov/apis/ | Provides structured data about Library of Congress collections | FREE |

| BotsArchive | https://botsarchive.com/docs.html | JSON formatted details about Telegram Bots available in database | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| MD5 Decrypt | https://md5decrypt.net/en/Api/ | Search for decrypted hashes in the database | 1.99 EURO/day |

| Name | Link | Description | Price |

|---|---|---|---|

| BTC.com | https://btc.com/btc/adapter?type=api-doc | Get information about addresses and transactions | FREE |

| Blockchair | https://blockchair.com | Explore data stored on 17 blockchains (BTC, ETH, Cardano, Ripple etc) | $0.33 - $1 per 1000 calls |

| Bitcoinabuse | https://www.bitcoinabuse.com/api-docs | Lookup bitcoin addresses that have been linked to criminal activity | FREE |

| Bitcoinwhoswho | https://www.bitcoinwhoswho.com/api | Scam reports on the Bitcoin Address | FREE |

| Etherscan | https://etherscan.io/apis | Ethereum explorer API | FREE |

| apilayer coinlayer | https://coinlayer.com | Real-time Crypto Currency Exchange Rates | FREE |

| BlockFacts | https://blockfacts.io/ | Real-time crypto data from multiple exchanges via a single unified API, and much more | FREE |

| Brave NewCoin | https://bravenewcoin.com/developers | Real-time and historic crypto data from more than 200+ exchanges | FREE |

| WorldCoinIndex | https://www.worldcoinindex.com/apiservice | Cryptocurrencies Prices | FREE |

| WalletLabels | https://www.walletlabels.xyz/docs | Labels for 7.5 million Ethereum wallets | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| VirusTotal | https://developers.virustotal.com/reference | Analyze files and URLs | Public API is FREE |

| AbuseIPDB | https://docs.abuseipdb.com/#introduction | IP/domain/URL reputation | FREE |

| AlienVault Open Threat Exchange (OTX) | https://otx.alienvault.com/api | IP/domain/URL reputation | FREE |

| Phisherman | https://phisherman.gg | IP/domain/URL reputation | FREE |

| URLScan.io | https://urlscan.io/about-api/ | Scan and Analyse URLs | FREE |

| Web of Trust | https://support.mywot.com/hc/en-us/sections/360004477734-API- | IP/domain/URL reputation | FREE |

| Threat Jammer | https://threatjammer.com/docs/introduction-threat-jammer-user-api | IP/domain/URL reputation | ??? |

| Name | Link | Description | Price |

|---|---|---|---|

| Search4faces | https://search4faces.com/api.html | Search for people in social networks by facial image | $21 per 1000 requests |

## Face Detection

| Name | Link | Description | Price |

|---|---|---|---|

| Face++ | https://www.faceplusplus.com/face-detection/ | Detects and locates human faces within an image and returns high-precision face bounding boxes. Face⁺⁺ also allows you to store metadata of each detected face for future use. | from 0.03 per call |

| BetaFace | https://www.betafaceapi.com/wpa/ | Can scan uploaded image files or image URLs, find faces and analyze them. API also provides verification (faces comparison) and identification (faces search) services, as well able to maintain multiple user-defined recognition databases (namespaces) | 50 image per day FREE/from 0.15 EUR per request |

## Reverse Image Search

| Name | Link | Description | Price |

|---|---|---|---|

| Google Reverse images search API | https://github.com/SOME-1HING/google-reverse-image-api/ | This is a simple API built using Node.js and Express.js that allows you to perform Google Reverse Image Search by providing an image URL. | FREE (UNOFFICIAL) |

| TinEyeAPI | https://services.tineye.com/TinEyeAPI | Verify images, Moderate user-generated content, Track images and brands, Check copyright compliance, Deploy fraud detection solutions, Identify stock photos, Confirm the uniqueness of an image | Start from $200/5000 searches |

| Bing Images Search API | https://www.microsoft.com/en-us/bing/apis/bing-image-search-api | With Bing Image Search API v7, help users scour the web for images. Results include thumbnails, full image URLs, publishing website info, image metadata, and more. | 1,000 requests per month FREE |

| MRISA | https://github.com/vivithemage/mrisa | MRISA (Meta Reverse Image Search API) is a RESTful API which takes an image URL, does a reverse Google image search, and returns a JSON array with the search results | FREE? (unofficial) |

| PicImageSearch | https://github.com/kitUIN/PicImageSearch | Aggregator for different Reverse Image Search APIs | FREE? (unofficial) |

## AI Geolocation

| Name | Link | Description | Price |

|---|---|---|---|

| Geospy | https://api.geospy.ai/ | Estimates the location of an uploaded photo | Access by request |

| Picarta | https://picarta.ai/api | Estimates the location of an uploaded photo | 100 requests/day FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Twitch | https://dev.twitch.tv/docs/v5/reference | | |

| YouTube Data API | https://developers.google.com/youtube/v3 | | |

| Reddit | https://www.reddit.com/dev/api/ | | |

| Vkontakte | https://vk.com/dev/methods | | |

| Twitter API | https://developer.twitter.com/en | | |

| Linkedin API | https://docs.microsoft.com/en-us/linkedin/ | | |

| All Facebook and Instagram API | https://developers.facebook.com/docs/ | | |

| Whatsapp Business API | https://www.whatsapp.com/business/api | | |

| Telegram and Telegram Bot API | https://core.telegram.org | | |

| Weibo API | https://open.weibo.com/wiki/API文档/en | | |

| Xing | https://dev.xing.com/partners/job_integration/api_docs | | |

| Viber | https://developers.viber.com/docs/api/rest-bot-api/ | | |

| Discord | https://discord.com/developers/docs | | |

| Odnoklassniki | https://ok.ru/apiok | | |

| Blogger | https://developers.google.com/blogger/ | The Blogger APIs allows client applications to view and update Blogger content | FREE |

| Disqus | https://disqus.com/api/docs/auth/ | Communicate with Disqus data | FREE |

| Foursquare | https://developer.foursquare.com/ | Interact with Foursquare users and places (geolocation-based checkins, photos, tips, events, etc) | FREE |

| HackerNews | https://github.com/HackerNews/API | Social news for CS and entrepreneurship | FREE |

| Kakao | https://developers.kakao.com/ | Kakao Login, Share on KakaoTalk, Social Plugins and more | FREE |

| Line | https://developers.line.biz/ | Line Login, Share on Line, Social Plugins and more | FREE |

| TikTok | https://developers.tiktok.com/doc/login-kit-web | Fetches user info and user's video posts on TikTok platform | FREE |

| Tumblr | https://www.tumblr.com/docs/en/api/v2 | Read and write Tumblr Data | FREE |

WARNING: Use with caution! Accounts may be permanently blocked for using unofficial APIs.

| Name | Link | Description | Price |

|---|---|---|---|

| TikTok | https://github.com/davidteather/TikTok-Api | The Unofficial TikTok API Wrapper In Python | FREE |

| Google Trends | https://github.com/suryasev/unofficial-google-trends-api | Unofficial Google Trends API | FREE |

| YouTube Music | https://github.com/sigma67/ytmusicapi | Unofficial API for YouTube Music | FREE |

| Duolingo | https://github.com/KartikTalwar/Duolingo | Duolingo unofficial API (can gather info about users) | FREE |

| Steam | https://github.com/smiley/steamapi | An unofficial object-oriented Python library for accessing the Steam Web API. | FREE |

| Instagram | https://github.com/ping/instagram_private_api | Instagram Private API | FREE |

| Discord | https://github.com/discordjs/discord.js | JavaScript library for interacting with the Discord API | FREE |

| Zhihu | https://github.com/syaning/zhihu-api | Unofficial API for Zhihu | FREE |

| Quora | https://github.com/csu/quora-api | Unofficial API for Quora | FREE |

| DNSDumpster | https://github.com/PaulSec/API-dnsdumpster.com | (Unofficial) Python API for DNSDumpster | FREE |

| PornHub | https://github.com/sskender/pornhub-api | Unofficial API for PornHub in Python | FREE |

| Skype | https://github.com/ShyykoSerhiy/skyweb | Unofficial Skype API for nodejs via 'Skype (HTTP)' protocol. | FREE |

| Google Search | https://github.com/aviaryan/python-gsearch | Google Search unofficial API for Python with no external dependencies | FREE |

| Airbnb | https://github.com/nderkach/airbnb-python | Python wrapper around the Airbnb API (unofficial) | FREE |

| Medium | https://github.com/enginebai/PyMedium | Unofficial Medium Python Flask API and SDK | FREE |

| Facebook | https://github.com/davidyen1124/Facebot | Powerful unofficial Facebook API | FREE |

| LinkedIn | https://github.com/tomquirk/linkedin-api | Unofficial Linkedin API for Python | FREE |

| Y2mate | https://github.com/Simatwa/y2mate-api | Unofficial Y2mate API for Python | FREE |

| Livescore | https://github.com/Simatwa/livescore-api | Unofficial Livescore API for Python | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Google Custom Search JSON API | https://developers.google.com/custom-search/v1/overview | Search in Google | 100 requests FREE |

| Serpstack | https://serpstack.com/ | Google search results to JSON | FREE |

| Serpapi | https://serpapi.com | Google, Baidu, Yandex, Yahoo, DuckDuckGo, Bing and many other search engines' results | $50/5000 searches/month |

| Bing Web Search API | https://www.microsoft.com/en-us/bing/apis/bing-web-search-api | Search in Bing (+instant answers and location) | 1000 transactions per month FREE |

| WolframAlpha API | https://products.wolframalpha.com/api/pricing/ | Short answers, conversations, calculators and many more | from $25 per 1000 queries |

| DuckDuckGo Instant Answers API | https://duckduckgo.com/api | An API for some of our Instant Answers, not for full search results. | FREE |

| Memex Marginalia | https://memex.marginalia.nu/projects/edge/api.gmi | An API for a new privacy-focused search engine | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| MediaStack | https://mediastack.com/ | News articles search results in JSON | 500 requests/month FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Darksearch.io | https://darksearch.io/apidoc | Search websites in the .onion zone | FREE |

| Onion Lookup | https://onion.ail-project.org/ | onion-lookup is a service for checking the existence of Tor hidden services and retrieving their associated metadata. onion-lookup relies on a private AIL instance to obtain the metadata | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Jackett | https://github.com/Jackett/Jackett | API for automating searches across different torrent trackers | FREE |

| Torrents API PY | https://github.com/Jackett/Jackett | Unofficial API for 1337x, Piratebay, Nyaasi, Torlock, Torrent Galaxy, Zooqle, Kickass, Bitsearch, MagnetDL,Libgen, YTS, Limetorrent, TorrentFunk, Glodls, Torre | FREE |

| Torrent Search API | https://github.com/Jackett/Jackett | API for Torrent Search Engine with Extratorrents, Piratebay, and ISOhunt | 500 queries/day FREE |

| Torrent search api | https://github.com/JimmyLaurent/torrent-search-api | Yet another node torrent scraper (supports iptorrents, torrentleech, torrent9, torrentz2, 1337x, thepiratebay, Yggtorrent, TorrentProject, Eztv, Yts, LimeTorrents) | FREE |

| Torrentinim | https://github.com/sergiotapia/torrentinim | Very low memory-footprint, self hosted API-only torrent search engine. Sonarr + Radarr Compatible, native support for Linux, Mac and Windows. | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| National Vulnerability Database CVE Search API | https://nvd.nist.gov/developers/vulnerabilities | Get basic information about CVE and CVE history | FREE |

| OpenCVE API | https://docs.opencve.io/api/cve/ | Get basic information about CVE | FREE |

| CVEDetails API | https://www.cvedetails.com/documentation/apis | Get basic information about CVE | partly FREE (?) |

| CVESearch API | https://docs.cvesearch.com/ | Get basic information about CVE | by request |

| KEVin API | https://kevin.gtfkd.com/ | API for accessing CISA's Known Exploited Vulnerabilities Catalog (KEV) and CVE Data | FREE |

| Vulners.com API | https://vulners.com | Get basic information about CVE | FREE for personal use |

| Name | Link | Description | Price |

|---|---|---|---|

| Aviation Stack | https://aviationstack.com | Get information about flights, aircraft and airlines | FREE |

| OpenSky Network | https://opensky-network.org/apidoc/index.html | Free real-time ADS-B aviation data | FREE |

| AviationAPI | https://docs.aviationapi.com/ | FAA Aeronautical Charts and Publications, Airport Information, and Airport Weather | FREE |

| FachaAPI | https://api.facha.dev | Aircraft details and live positioning API | FREE |

| Name | Link | Description | Price |

|---|---|---|---|

| Windy Webcams API | https://api.windy.com/webcams/docs | Get a list of available webcams for a country, city or geographical coordinates | FREE with limits or 9990 euro without limits |

## Regex

| Name | Link | Description | Price |

|---|---|---|---|

| Autoregex | https://autoregex.notion.site/AutoRegex-API-Documentation-97256bad2c114a6db0c5822860214d3a | Convert English phrase to regular expression | from $3.49/month |

| Name | Link |

|---|---|

| API Guessr (detect API by auth key or by token) | https://api-guesser.netlify.app/ |

| REQBIN Online REST & SOAP API Testing Tool | https://reqbin.com |

| ExtendsClass Online REST Client | https://extendsclass.com/rest-client-online.html |

| Codebeautify.org Online API Test | https://codebeautify.org/api-test |

| SyncWith Google Sheet add-on. Link more than 1000 APIs with Spreadsheet | https://workspace.google.com/u/0/marketplace/app/syncwith_crypto_binance_coingecko_airbox/449644239211?hl=ru&pann=sheets_addon_widget |

| Talend API Tester Google Chrome Extension | https://workspace.google.com/u/0/marketplace/app/syncwith_crypto_binance_coingecko_airbox/449644239211?hl=ru&pann=sheets_addon_widget |

| Michael Bazzell APIs search tools | https://inteltechniques.com/tools/API.html |

| Name | Link |

|---|---|

| Convert curl commands to Python, JavaScript, PHP, R, Go, C#, Ruby, Rust, Elixir, Java, MATLAB, Dart, CFML, Ansible URI or JSON | https://curlconverter.com |

| Curl-to-PHP. Instantly convert curl commands to PHP code | https://incarnate.github.io/curl-to-php/ |

| Curl to PHP online (Codebeautify) | https://codebeautify.org/curl-to-php-online |

| Curl to JavaScript fetch | https://kigiri.github.io/fetch/ |

| Curl to JavaScript fetch (Scrapingbee) | https://www.scrapingbee.com/curl-converter/javascript-fetch/ |

| Curl to C# converter | https://curl.olsh.me |

| Name | Link |

|---|---|

| Sheety. Create an API from a Google Sheet | https://sheety.co/ |

| Postman. Platform for creating your own API | https://www.postman.com |

| Retool. Rest API Generator | https://retool.com/api-generator/ |

| Beeceptor. Rest API mocking and intercepting in seconds (no coding). | https://beeceptor.com |

| Name | Link |

|---|---|

| RapidAPI. Market your API for millions of developers | https://rapidapi.com/solution/api-provider/ |

| Apilayer. API Marketplace | https://apilayer.com |

| Name | Link | Description |

|---|---|---|

| Keyhacks | https://github.com/streaak/keyhacks | Keyhacks is a repository which shows quick ways in which API keys leaked by a bug bounty program can be checked to see if they're valid. |

| All about APIKey | https://github.com/daffainfo/all-about-apikey | Detailed information about API key / OAuth token for different services (Description, Request, Response, Regex, Example) |

| API Guessr | https://api-guesser.netlify.app/ | Enter an API key and find out which service it belongs to |

| Name | Link | Description |

|---|---|---|

| APIDOG ApiHub | https://apidog.com/apihub/ | |

| Rapid APIs collection | https://rapidapi.com/collections | |

| API Ninjas | https://api-ninjas.com/api | |

| APIs Guru | https://apis.guru/ | |

| APIs List | https://apislist.com/ | |

| API Context Directory | https://apicontext.com/api-directory/ | |

| Any API | https://any-api.com/ | |

| Public APIs Github repo | https://github.com/public-apis/public-apis | |

If you don't know how to work with REST APIs, I recommend checking out the Netlas API guide I wrote for Netlas.io.

It explains briefly and accessibly how to automate requests in different programming languages (with a focus on Python and Bash) and how to process the resulting JSON data.
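As a rough illustration of that request-and-parse workflow, the Python sketch below builds a query URL and extracts fields from a JSON body. The endpoint, the parameter names (`q`, `page`, `key`) and the response layout (`items`, `ip`, `port`) are all hypothetical; every real API in the tables above defines its own, so check its documentation first.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://api.example.com/v1/search"


def build_url(query: str, api_key: str, page: int = 1) -> str:
    """Assemble a GET URL with properly escaped query parameters."""
    return f"{BASE_URL}?{urlencode({'q': query, 'page': page, 'key': api_key})}"


def extract_hosts(body: str) -> list[tuple[str, int]]:
    """Pull (ip, port) pairs out of a JSON response body."""
    data = json.loads(body)
    return [(item["ip"], item["port"]) for item in data.get("items", [])]


# A made-up response body standing in for what a real API might return.
sample_body = (
    '{"items": [{"ip": "192.0.2.10", "port": 443},'
    ' {"ip": "192.0.2.11", "port": 80}]}'
)

url = build_url("port:443 nginx", "YOUR_API_KEY")
hosts = extract_hosts(sample_body)
```

To query a live service, the URL would be passed to `urllib.request.urlopen` (or a similar HTTP client) and the decoded response text handed to `extract_hosts`.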


VulnKnox is a powerful command-line tool written in Go that interfaces with the KNOXSS API. It automates the process of testing URLs for Cross-Site Scripting (XSS) vulnerabilities using the advanced capabilities of the KNOXSS engine.

go install github.com/iqzer0/vulnknox@latest

Before using the tool, you need to set up your configuration:

API Key

Obtain your KNOXSS API key from knoxss.me.

On the first run, a default configuration file will be created at:

Linux/macOS: ~/.config/vulnknox/config.json

Windows: %APPDATA%\VulnKnox\config.json

Edit the config.json file and replace YOUR_API_KEY_HERE with your actual API key.

Discord Webhook (Optional)

If you want to receive notifications on Discord, add your webhook URL to the config.json file or use the -dw flag.
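The setup steps above can be checked with a small Python sketch that resolves the documented config location and reports whether the placeholder key is still present. The helper names (`config_path`, `key_is_unset`) are hypothetical, and the sketch deliberately makes no assumption about the field names inside config.json.

```python
import ntpath
import posixpath

PLACEHOLDER = "YOUR_API_KEY_HERE"


def config_path(system: str, home: str, appdata: str = "") -> str:
    """Return the documented config.json location for the given platform."""
    if system == "Windows":
        # %APPDATA%\VulnKnox\config.json
        return ntpath.join(appdata, "VulnKnox", "config.json")
    # ~/.config/vulnknox/config.json on Linux and macOS
    return posixpath.join(home, ".config", "vulnknox", "config.json")


def key_is_unset(config_text: str) -> bool:
    """True while the file still contains the first-run placeholder."""
    return PLACEHOLDER in config_text


linux_path = config_path("Linux", "/home/alice")
windows_path = config_path("Windows", "", r"C:\Users\alice\AppData\Roaming")
```

In practice the arguments would come from `platform.system()`, `os.path.expanduser("~")` and `os.environ.get("APPDATA", "")`.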

Usage of vulnknox:

-u Input URL to send to KNOXSS API

-i Input file containing URLs to send to KNOXSS API

-X GET HTTP method to use: GET, POST, or BOTH

-pd POST data in format 'param1=value&param2=value'

-headers Custom headers in format 'Header1:value1,Header2:value2'

-afb Use Advanced Filter Bypass

-checkpoc Enable CheckPoC feature

-flash Enable Flash Mode

-o The file to save the results to

-ow Overwrite output file if it exists

-oa Output all results to file, not just successful ones

-s Only show successful XSS payloads in output

-p 3 Number of parallel processes (1-5)

-t 600 Timeout for API requests in seconds

-dw Discord Webhook URL (overrides config file)

-r 3 Number of retries for failed requests

-ri 30 Interval between retries in seconds

-sb 0 Skip domains after this many 403 responses

-proxy Proxy URL (e.g., http://127.0.0.1:8080)

-v Verbose output

-version Show version number

-no-banner Suppress the banner

-api-key KNOXSS API Key (overrides config file)

Test a single URL using GET method:

vulnknox -u "https://example.com/page?param=value"

Test a URL with POST data:

vulnknox -u "https://example.com/submit" -X POST -pd "param1=value1&param2=value2"

Enable Advanced Filter Bypass and Flash Mode:

vulnknox -u "https://example.com/page?param=value" -afb -flash

Use custom headers (e.g., for authentication):

vulnknox -u "https://example.com/secure" -headers "Cookie:sessionid=abc123"

Process URLs from a file with 5 concurrent processes:

vulnknox -i urls.txt -p 5

Send notifications to Discord on successful XSS findings:

vulnknox -u "https://example.com/page?param=value" -dw "https://discord.com/api/webhooks/your/webhook/url"

Test both GET and POST methods with CheckPoC enabled:

vulnknox -u "https://example.com/page" -X BOTH -checkpoc

Use a proxy and increase the number of retries:

vulnknox -u "https://example.com/page?param=value" -proxy "http://127.0.0.1:8080" -r 5

Suppress the banner and only show successful XSS payloads:

vulnknox -u "https://example.com/page?param=value" -no-banner -s

[ XSS! ]: Indicates a successful XSS payload was found.

[ SAFE ]: No XSS vulnerability was found in the target.

[ ERR! ]: An error occurred during the request.

[ SKIP ]: The domain or URL was skipped due to multiple failed attempts (e.g., after receiving too many 403 Forbidden responses as specified by the -sb option).

[BALANCE]: Indicates your current API usage with KNOXSS, showing how many API calls you've used out of your total allowance.

The tool also provides a summary at the end of execution, including the number of requests made, successful XSS findings, safe responses, errors, and any skipped domains.
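If the output is saved to a file, the status tags listed above can also be tallied independently. The sketch below is a minimal example that assumes each result line contains one of those bracketed tags; the exact layout of the rest of the line is an assumption, and the function name `tally_results` is hypothetical.

```python
import re
from collections import Counter

# Status tags as described in the output section above.
TAG_PATTERN = re.compile(r"\[\s*(XSS!|SAFE|ERR!|SKIP|BALANCE)\s*\]")


def tally_results(lines):
    """Count how many lines carry each status tag; untagged lines are ignored."""
    counts = Counter()
    for line in lines:
        match = TAG_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Usage (the file name is a placeholder):
#   with open("results.txt", encoding="utf-8") as handle:
#       print(tally_results(handle))
```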

Contributions are welcome! If you have suggestions for improvements or encounter any issues, please open an issue or submit a pull request.

This project is licensed under the MIT License.

A critical resource that cybersecurity professionals worldwide rely on to identify, mitigate and fix security vulnerabilities in software and hardware is in danger of breaking down. The federally funded, non-profit research and development organization MITRE warned today that its contract to maintain the Common Vulnerabilities and Exposures (CVE) program — which is traditionally funded each year by the Department of Homeland Security — expires on April 16.

A letter from MITRE vice president Yosry Barsoum, warning that the funding for the CVE program will expire on April 16, 2025.

Tens of thousands of security flaws in software are found and reported every year, and these vulnerabilities are eventually assigned their own unique CVE tracking number (e.g. CVE-2024-43573, which is a Microsoft Windows bug that Redmond patched last year).

There are hundreds of organizations — known as CVE Numbering Authorities (CNAs) — that are authorized by MITRE to bestow these CVE numbers on newly reported flaws. Many of these CNAs are country and government-specific, or tied to individual software vendors or vulnerability disclosure platforms (a.k.a. bug bounty programs).

Put simply, MITRE is a critical, widely-used resource for centralizing and standardizing information on software vulnerabilities. That means the pipeline of information it supplies is plugged into an array of cybersecurity tools and services that help organizations identify and patch security holes — ideally before malware or malcontents can wriggle through them.

“What the CVE lists really provide is a standardized way to describe the severity of that defect, and a centralized repository listing which versions of which products are defective and need to be updated,” said Matt Tait, chief operating officer of Corellium, a cybersecurity firm that sells phone-virtualization software for finding security flaws.

In a letter sent today to the CVE board, MITRE Vice President Yosry Barsoum warned that on April 16, 2025, “the current contracting pathway for MITRE to develop, operate and modernize CVE and several other related programs will expire.”

“If a break in service were to occur, we anticipate multiple impacts to CVE, including deterioration of national vulnerability databases and advisories, tool vendors, incident response operations, and all manner of critical infrastructure,” Barsoum wrote.

MITRE told KrebsOnSecurity the CVE website listing vulnerabilities will remain up after the funding expires, but that new CVEs won’t be added after April 16.

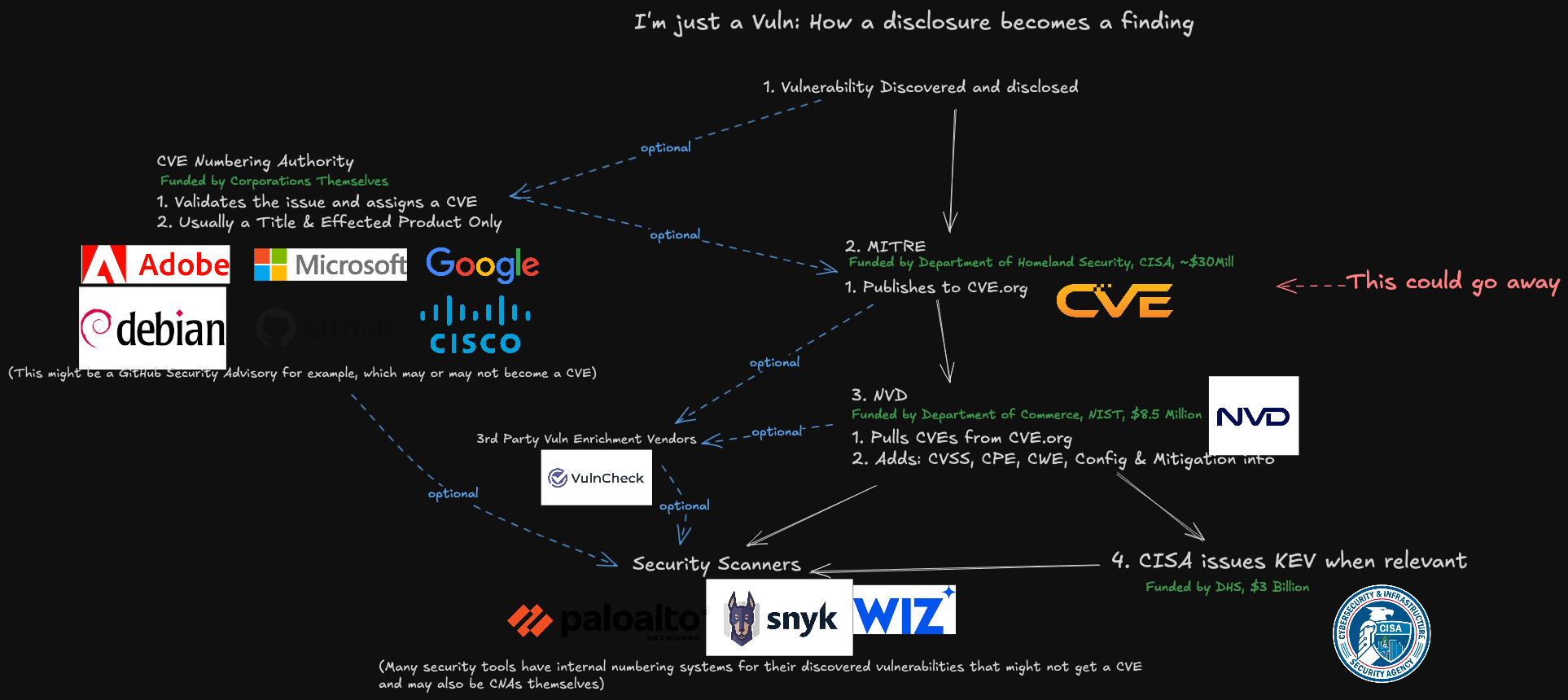

A representation of how a vulnerability becomes a CVE, and how that information is consumed. Image: James Berthoty, Latio Tech, via LinkedIn.

DHS officials did not immediately respond to a request for comment. The program is funded through DHS’s Cybersecurity & Infrastructure Security Agency (CISA), which is currently facing deep budget and staffing cuts by the Trump administration. The CVE contract available at USAspending.gov says the project was awarded approximately $40 million last year.

Former CISA Director Jen Easterly said the CVE program is a bit like the Dewey Decimal System, but for cybersecurity.

“It’s the global catalog that helps everyone—security teams, software vendors, researchers, governments—organize and talk about vulnerabilities using the same reference system,” Easterly said in a post on LinkedIn. “Without it, everyone is using a different catalog or no catalog at all, no one knows if they’re talking about the same problem, defenders waste precious time figuring out what’s wrong, and worst of all, threat actors take advantage of the confusion.”

John Hammond, principal security researcher at the managed security firm Huntress, told Reuters he swore out loud when he heard the news that CVE’s funding was in jeopardy, and that losing the CVE program would be like losing “the language and lingo we used to address problems in cybersecurity.”

“I really can’t help but think this is just going to hurt,” said Hammond, who posted a YouTube video to vent about the situation and alert others.

Several people close to the matter told KrebsOnSecurity this is not the first time the CVE program’s budget has been left in funding limbo until the last minute. Barsoum’s letter, which was apparently leaked, sounded a hopeful note, saying the government is making “considerable efforts to continue MITRE’s role in support of the program.”

Tait said that without the CVE program, risk managers inside companies would need to continuously monitor many other places for information about new vulnerabilities that may jeopardize the security of their IT networks. Meaning, it may become more common that software updates get mis-prioritized, with companies having hackable software deployed for longer than they otherwise would, he said.

“Hopefully they will resolve this, but otherwise the list will rapidly fall out of date and stop being useful,” he said.

Update, April 16, 11:00 a.m. ET: The CVE board today announced the creation of a non-profit entity called The CVE Foundation that will continue the program’s work under a new, unspecified funding mechanism and organizational structure.

“Since its inception, the CVE Program has operated as a U.S. government-funded initiative, with oversight and management provided under contract,” the press release reads. “While this structure has supported the program’s growth, it has also raised longstanding concerns among members of the CVE Board about the sustainability and neutrality of a globally relied-upon resource being tied to a single government sponsor.”

The organization’s website, thecvefoundation.org, is less than a day old and currently hosts no content other than the press release heralding its creation. The announcement said the foundation would release more information about its structure and transition planning in the coming days.

Update, April 16, 4:26 p.m. ET: MITRE issued a statement today saying it “identified incremental funding to keep the programs operational. We appreciate the overwhelming support for these programs that have been expressed by the global cyber community, industry and government over the last 24 hours. The government continues to make considerable efforts to support MITRE’s role in the program and MITRE remains committed to CVE and CWE as global resources.”

Mass Assigner is a powerful tool designed to identify and exploit mass assignment vulnerabilities in web applications. It achieves this by first retrieving data from a specified request, such as fetching user profile data. Then, it systematically attempts to apply each parameter extracted from the response to a second request provided, one parameter at a time. This approach allows for the automated testing and exploitation of potential mass assignment vulnerabilities.
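The one-parameter-at-a-time idea can be sketched roughly as follows. This is not the tool's actual code: the function name `single_parameter_payloads` and its arguments are hypothetical, and the sketch only builds the request bodies without sending anything.

```python
def single_parameter_payloads(fetched, base_body=None, ignore=()):
    """Yield (name, body) pairs, one request body per fetched parameter.

    fetched   -- dict parsed from the first (fetch) response
    base_body -- fields that every probe request should carry
    ignore    -- parameter names that must never be sent
    """
    base_body = dict(base_body or {})
    for name, value in fetched.items():
        if name in ignore:
            continue
        body = dict(base_body)
        body[name] = value  # add exactly one candidate parameter
        yield name, body
```

Each yielded body would then be sent to the second request, and the response compared against a baseline to see whether the extra parameter was accepted.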

This tool actively modifies server-side data. Please ensure you have proper authorization before use. Any unauthorized or illegal activity using this tool is entirely at your own risk.

Install requirements

pip3 install -r requirements.txt

Run the script

python3 mass_assigner.py --fetch-from "http://example.com/path-to-fetch-data" --target-req "http://example.com/path-to-probe-the-data"

Mass Assigner accepts the following arguments:

-h, --help show this help message and exit

--fetch-from FETCH_FROM

URL to fetch data from

--target-req TARGET_REQ

URL to send modified data to

-H HEADER, --header HEADER

Add a custom header. Format: 'Key: Value'

-p PROXY, --proxy PROXY

Use Proxy, Usage i.e: http://127.0.0.1:8080.

-d DATA, --data DATA Add data to the request body. JSON is supported with escaping.

--rate-limit RATE_LIMIT

Number of requests per second

--source-method SOURCE_METHOD

HTTP method for the initial request. Default is GET.

--target-method TARGET_METHOD

HTTP method for the modified request. Default is PUT.

--ignore-params IGNORE_PARAMS

Parameters to ignore during modification, separated by comma.

Example Usage:

python3 mass_assigner.py --fetch-from "http://example.com/api/v1/me" --target-req "http://example.com/api/v1/me" --header "Authorization: Bearer XXX" --proxy "http://proxy.example.com" --data '{\"param1\": \"test\", \"param2\":true}'

DockerSpy searches for images on Docker Hub and extracts sensitive information such as authentication secrets, private keys, and more.

Docker is an open-source platform that automates the deployment, scaling, and management of applications using containerization technology. Containers allow developers to package an application and its dependencies into a single, portable unit that can run consistently across various computing environments. Docker simplifies the development and deployment process by ensuring that applications run the same way regardless of where they are deployed.

Docker Hub is a cloud-based repository where developers can store, share, and distribute container images. It serves as the largest library of container images, providing access to both official images created by Docker and community-contributed images. Docker Hub enables developers to easily find, download, and deploy pre-built images, facilitating rapid application development and deployment.

Open Source Intelligence (OSINT) on Docker Hub involves using publicly available information to gather insights and data from container images and repositories hosted on Docker Hub. This is particularly important for identifying exposed secrets for several reasons:

Security Audits: By analyzing Docker images, organizations can uncover exposed secrets such as API keys, authentication tokens, and private keys that might have been inadvertently included. This helps in mitigating potential security risks.

Incident Prevention: Proactively searching for exposed secrets in Docker images can prevent security breaches before they happen, protecting sensitive information and maintaining the integrity of applications.

Compliance: Ensuring that container images do not expose secrets is crucial for meeting regulatory and organizational security standards. OSINT helps verify that no sensitive information is unintentionally disclosed.

Vulnerability Assessment: Identifying exposed secrets as part of regular security assessments allows organizations to address these vulnerabilities promptly, reducing the risk of exploitation by malicious actors.

Enhanced Security Posture: Continuously monitoring Docker Hub for exposed secrets strengthens an organization's overall security posture, making it more resilient against potential threats.

Utilizing OSINT on Docker Hub to find exposed secrets enables organizations to enhance their security measures, prevent data breaches, and ensure the confidentiality of sensitive information within their containerized applications.

DockerSpy obtains information from Docker Hub and uses regular expressions to inspect the content for sensitive information, such as secrets.
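As a minimal sketch of regex-based secret detection in general (not DockerSpy's actual rule set), the Python example below checks text for two well-known patterns: an AWS access key ID prefix and a PEM private key header. The pattern names and the `find_secrets` helper are illustrative assumptions.

```python
import re

# Illustrative patterns only; DockerSpy defines its own rules in its configuration.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
}


def find_secrets(text):
    """Return (rule_name, matched_text) pairs for every pattern hit in text."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.group(0)))
    return hits
```

Pattern matching of this kind produces false positives (for example, documentation placeholders), so hits normally need manual review.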

To use DockerSpy, follow these steps:

git clone https://github.com/UndeadSec/DockerSpy.git && cd DockerSpy && make

dockerspy

To customize DockerSpy configurations, edit the following files:

- Regular Expressions
- Ignored File Extensions

DockerSpy is intended for educational and research purposes only. Users are responsible for ensuring that their use of this tool complies with applicable laws and regulations.

Contributions to DockerSpy are welcome! Feel free to submit issues, feature requests, or pull requests to help improve this tool.

DockerSpy is developed and maintained by Alisson Moretto (UndeadSec)

I'm a passionate cyber threat intelligence pro who loves sharing insights and crafting cybersecurity tools.


Special thanks to @akaclandestine

A vulnerable application made using node.js, express server and ejs template engine. This application is meant for educational purposes only.

git clone https://github.com/4auvar/VulnNodeApp.git

npm install

CREATE USER 'vulnnodeapp'@'localhost' IDENTIFIED BY 'password';

create database vuln_node_app_db;

GRANT ALL PRIVILEGES ON vuln_node_app_db.* TO 'vulnnodeapp'@'localhost';

USE vuln_node_app_db;

create table users (id int AUTO_INCREMENT PRIMARY KEY, fullname varchar(255), username varchar(255),password varchar(255), email varchar(255), phone varchar(255), profilepic varchar(255));

insert into users(fullname,username,password,email,phone) values("test1","test1","test1","test1@test.com","976543210");

insert into users(fullname,username,password,email,phone) values("test2","test2","test2","test2@test.com","9887987541");

insert into users(fullname,username,password,email,phone) values("test3","test3","test3","test3@test.com","9876987611");

insert into users(fullname,username,password,email,phone) values("test4","test4","test4","test4@test.com","9123459876");

insert into users(fullname,username,password,email,phone) values("test5","test5","test 5","test5@test.com","7893451230");

npm start

You can reach me out at @4auvar

ROPDump is a tool for analyzing binary executables to identify potential Return-Oriented Programming (ROP) gadgets, as well as detecting potential buffer overflow and memory leak vulnerabilities.

<binary>: Path to the binary file for analysis.

-s, --search SEARCH: Optional. Search for specific instruction patterns.

-f, --functions: Optional. Print function names and addresses.

python3 ropdump.py /path/to/binary

python3 ropdump.py /path/to/binary -s "pop eax"

python3 ropdump.py /path/to/binary -f
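To give a feel for what a ROP gadget search looks for, here is a deliberately simplified Python sketch. It is not ROPDump's implementation: it scans raw bytes for the x86 `ret` opcode (0xC3) and returns the short byte windows that end there, without disassembling them. The function name `candidate_gadgets` is hypothetical.

```python
RET_OPCODE = 0xC3  # x86 near return


def candidate_gadgets(code, max_len=4):
    """Return (offset, bytes) windows that end in a RET opcode byte.

    Simplification: works on raw bytes without disassembling, so some
    windows will not decode to valid instruction sequences.
    """
    gadgets = []
    for index, byte in enumerate(code):
        if byte != RET_OPCODE:
            continue
        for length in range(1, max_len + 1):
            start = index - length
            if start < 0:
                break
            gadgets.append((start, code[start:index + 1]))
    return gadgets
```

For the bytes `90 58 C3` (`nop; pop eax; ret`), the window starting at offset 1 corresponds to the `pop eax; ret` gadget that the `-s "pop eax"` search above would be looking for. A real tool would pass each window through a disassembler to discard invalid sequences.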

Presented at CODE BLUE 2023, this project titled Enhanced Vulnerability Hunting in WDM Drivers with Symbolic Execution and Taint Analysis introduces IOCTLance, a tool that enhances its capacity to detect various vulnerability types in Windows Driver Model (WDM) drivers. In a comprehensive evaluation involving 104 known vulnerable WDM drivers and 328 unknown ones, IOCTLance successfully unveiled 117 previously unidentified vulnerabilities within 26 distinct drivers. As a result, 41 CVEs were reported, encompassing 25 cases of denial of service, 5 instances of insufficient access control, and 11 examples of elevation of privilege.

docker build .

dpkg --add-architecture i386

apt-get update

apt-get install git build-essential python3 python3-pip python3-dev htop vim sudo \

openjdk-8-jdk zlib1g:i386 libtinfo5:i386 libstdc++6:i386 libgcc1:i386 \

libc6:i386 libssl-dev nasm binutils-multiarch qtdeclarative5-dev libpixman-1-dev \

libglib2.0-dev debian-archive-keyring debootstrap libtool libreadline-dev cmake \

libffi-dev libxslt1-dev libxml2-dev

pip install angr==9.2.18 ipython==8.5.0 ipdb==0.13.9

# python3 analysis/ioctlance.py -h

usage: ioctlance.py [-h] [-i IOCTLCODE] [-T TOTAL_TIMEOUT] [-t TIMEOUT] [-l LENGTH] [-b BOUND]

[-g GLOBAL_VAR] [-a ADDRESS] [-e EXCLUDE] [-o] [-r] [-c] [-d]

path

positional arguments:

path dir (including subdirectory) or file path to the driver(s) to analyze

optional arguments:

-h, --help show this help message and exit

-i IOCTLCODE, --ioctlcode IOCTLCODE

analyze specified IoControlCode (e.g. 22201c)

-T TOTAL_TIMEOUT, --total_timeout TOTAL_TIMEOUT

total timeout for the whole symbolic execution (default 1200, 0 to unlimited)

-t TIMEOUT, --timeout TIMEOUT

timeout for analyze each IoControlCode (default 40, 0 to unlimited)

-l LENGTH, --length LENGTH

the limit of number of instructions for technique LengthLimiter (default 0, 0

to unlimited)

-b BOUND, --bound BOUND

the bound for technique LoopSeer (default 0, 0 to unlimited)

-g GLOBAL_VAR, --global_var GLOBAL_VAR

symbolize how many bytes in .data section (default 0 hex)

-a ADDRESS, --address ADDRESS

address of ioctl handler to directly start hunting with blank state (e.g.

140005c20)

-e EXCLUDE, --exclude EXCLUDE

exclude function address split with , (e.g. 140005c20,140006c20)

-o, --overwrite overwrite x.sys.json if x.sys has been analyzed (default False)

-r, --recursion do not kill state if detecting recursion (default False)

-c, --complete get complete base state (default False)

-d, --debug print debug info while analyzing (default False)

# python3 evaluation/statistics.py -h

usage: statistics.py [-h] [-w] path

positional arguments:

path target dir or file path

optional arguments:

-h, --help show this help message and exit

-w, --wdm copy the wdm drivers into <path>/wdm
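The `-i` option of ioctlance.py takes an IoControlCode in hexadecimal, such as 22201c. As a small aid for reading such values, the Python sketch below splits a code into the four fields defined by the Windows `CTL_CODE` macro layout (device type, required access, function, transfer method). The helper name `decode_ioctl` is hypothetical and is not part of IOCTLance.

```python
def decode_ioctl(code):
    """Split a Windows IoControlCode into its CTL_CODE fields."""
    return {
        "device_type": (code >> 16) & 0xFFFF,  # bits 31..16
        "access": (code >> 14) & 0x3,          # bits 15..14
        "function": (code >> 2) & 0xFFF,       # bits 13..2
        "method": code & 0x3,                  # bits 1..0
    }


# Usage: the help text's example value, parsed from hex.
#   decode_ioctl(int("22201c", 16))
```

For 22201c this gives device type 0x22, access 0, function 0x807, and method 0 (buffered I/O).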

APKDeepLens is a Python-based tool designed to scan Android applications (APK files) for security vulnerabilities. It specifically targets the OWASP Top 10 mobile vulnerabilities, providing an easy and efficient way for developers, penetration testers, and security researchers to assess the security posture of Android apps.

APKDeepLens is a Python-based tool that performs various operations on APK files. Its main features include:

To use APKDeepLens, you'll need to have Python 3.8 or higher installed on your system. You can then install APKDeepLens using the following command:

git clone https://github.com/d78ui98/APKDeepLens.git

cd APKDeepLens

python3 -m venv venv

source venv/bin/activate

pip install -r requirements.txt

python APKDeepLens.py --help

git clone https://github.com/d78ui98/APKDeepLens.git

cd APKDeepLens

python3 -m venv venv

.\venv\Scripts\activate

pip install -r .\requirements.txt

python APKDeepLens.py --help

To simply scan an APK, use the below command. Mention the apk file with -apk argument. Once the scan is complete, a detailed report will be displayed in the console.

python3 APKDeepLens.py -apk file.apk

If you've already extracted the source code and want to provide its path for a faster scan you can use the below command. Mention the source code of the android application with -source parameter.

python3 APKDeepLens.py -apk file.apk -source <source-code-path>

To generate detailed PDF and HTML reports after the scan you can pass -report argument as mentioned below.

python3 APKDeepLens.py -apk file.apk -report

We welcome contributions to the APKDeepLens project. If you have a feature request, bug report, or proposal, please open a new issue here.

For those interested in contributing code, please follow the standard GitHub process. We'll review your contributions as quickly as possible :)

drozer (formerly Mercury) is the leading security testing framework for Android.

drozer allows you to search for security vulnerabilities in apps and devices by assuming the role of an app and interacting with the Dalvik VM, other apps' IPC endpoints and the underlying OS.

drozer provides tools to help you use, share and understand public Android exploits. It helps you to deploy a drozer Agent to a device through exploitation or social engineering. Using weasel (WithSecure's advanced exploitation payload) drozer is able to maximise the permissions available to it by installing a full agent, injecting a limited agent into a running process, or connecting a reverse shell to act as a Remote Access Tool (RAT).

drozer is a good tool for simulating a rogue application. A penetration tester does not have to develop an app with custom code to interface with a specific content provider. Instead, drozer can be used with little to no programming experience required to show the impact of letting certain components be exported on a device.

drozer is open source software, maintained by WithSecure, and can be downloaded from: https://labs.withsecure.com/tools/drozer/

To help with making sure drozer can be run on modern systems, a Docker container was created that has a working build of Drozer. This is currently the recommended method of using Drozer on modern systems.

Note: On Windows please ensure that the path to the Python installation and the Scripts folder under the Python installation are added to the PATH environment variable.

Note: On Windows please ensure that the path to javac.exe is added to the PATH environment variable.

git clone https://github.com/WithSecureLabs/drozer.git

cd drozer

python setup.py bdist_wheel

sudo pip install dist/drozer-2.x.x-py2-none-any.whl

git clone https://github.com/WithSecureLabs/drozer.git

cd drozer

make deb

sudo dpkg -i drozer-2.x.x.deb

git clone https://github.com/WithSecureLabs/drozer.git

cd drozer

make rpm

sudo rpm -i drozer-2.x.x-1.noarch.rpm

NOTE: Windows Defender and other Antivirus software will flag drozer as malware (an exploitation tool without exploit code wouldn't be much fun!). In order to run drozer you would have to add an exception to Windows Defender and any antivirus software. Alternatively, we recommend running drozer in a Windows/Linux VM.

git clone https://github.com/WithSecureLabs/drozer.git

cd drozer

python.exe setup.py bdist_msi

Run dist/drozer-2.x.x.win-x.msi

The drozer Agent can be installed on the device using Android Debug Bridge (adb).

Download the latest Drozer Agent here.

$ adb install drozer-agent-2.x.x.apk

You should now have the drozer Console installed on your PC, and the Agent running on your test device. Now, you need to connect the two and you're ready to start exploring.

We will use the server embedded in the drozer Agent to do this.

If using the Android emulator, you need to set up a suitable port forward so that your PC can connect to a TCP socket opened by the Agent inside the emulator, or on the device. By default, drozer uses port 31415:

$ adb forward tcp:31415 tcp:31415

Now, launch the Agent, select the "Embedded Server" option and tap "Enable" to start the server. You should see a notification that the server has started.

Then, on your PC, connect using the drozer Console:

On Linux:

$ drozer console connect

On Windows:

> drozer.bat console connect

If using a real device, the IP address of the device on the network must be specified:

On Linux:

$ drozer console connect --server 192.168.0.10

On Windows:

> drozer.bat console connect --server 192.168.0.10
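If the console fails to connect, it can help to first confirm that the Agent's embedded server is reachable over TCP. The Python sketch below is a generic reachability check, not part of drozer; it defaults to the port 31415 mentioned above, and the function name `port_is_open` is hypothetical.

```python
import socket


def port_is_open(host="127.0.0.1", port=31415, timeout=2.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage:
#   port_is_open()                      # emulator, after `adb forward`
#   port_is_open("192.168.0.10")        # real device on the network
```

A `False` result for the emulator case usually points at a missing port forward or at the embedded server not being enabled in the Agent.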

You should be presented with a drozer command prompt:

selecting f75640f67144d9a3 (unknown sdk 4.1.1)

dz>

The prompt confirms the Android ID of the device you have connected to, along with the manufacturer, model and Android software version.

You are now ready to start exploring the device.

| Command | Description |

|---|---|

| run | Executes a drozer module |

| list | Show a list of all drozer modules that can be executed in the current session. This hides modules that you do not have suitable permissions to run. |

| shell | Start an interactive Linux shell on the device, in the context of the Agent process. |

| cd | Mounts a particular namespace as the root of session, to avoid having to repeatedly type the full name of a module. |

| clean | Remove temporary files stored by drozer on the Android device. |

| contributors | Displays a list of people who have contributed to the drozer framework and modules in use on your system. |

| echo | Print text to the console. |

| exit | Terminate the drozer session. |

| help | Display help about a particular command or module. |

| load | Load a file containing drozer commands, and execute them in sequence. |

| module | Find and install additional drozer modules from the Internet. |

| permissions | Display a list of the permissions granted to the drozer Agent. |

| set | Store a value in a variable that will be passed as an environment variable to any Linux shells spawned by drozer. |

| unset | Remove a named variable that drozer passes to any Linux shells that it spawns. |

drozer is released under a 3-clause BSD License. See LICENSE for full details.

drozer is Open Source software, made great by contributions from the community.

Bug reports, feature requests, comments and questions can be submitted here.

There’s little rest for your hard-working smartphone. If you’re like many professionals today, you use it for work, play, and a mix of personal business in between. Now, what if something went wrong with that phone, like loss or theft? Worse yet, what if your smartphone got hacked?

Globally, plenty of people pull double duty with their smartphones. One survey found that 87% of companies have policies that integrate personal devices in the workplace. Therein lies the higher potential for security risks such as data breaches, malware infection, and difficulties in maintaining data privacy and compliance. You see, a smartphone loaded with both business and personal data makes it a desirable, high-value target. It only takes one dedicated hacker—and there are plenty—to infiltrate an unprotected smartphone and access the treasure trove of both your personal and company information in a single effort.

Let’s try to keep that from happening to you. This guide will walk you through exactly how to keep your digital life secure.

Smartphone hacking is when someone gains unauthorized access to your phone and the vast amount of personal data it contains. As you can imagine, this type of digital break-in can have serious real-world consequences, including financial loss from compromised banking apps, identity theft using your private information, and a complete invasion of your privacy through access to your emails, photos, and messages. This isn’t a distant threat; mobile malware is consistently on the rise, with cybercriminals developing more sophisticated methods to target unsuspecting users. The good news is that you have the power to stop them. Understanding how to protect your phone from hackers is the first step.

If you notice one or more of these signs, don’t panic. Investigate further and follow the recovery steps below. Sometimes, these issues can be caused by a legitimate but buggy app or an aging battery.

Once you’ve resolved an attack, the next step is to prevent phone hacking from happening again. Think of it as strengthening your digital front door. As both a parent and professional, I have put together a few things you can do to protect your smartphone from future hacks, so that you can keep your personal and work life safe:

Locking your phone with facial ID, a fingerprint, a pattern, or a PIN is your most basic form of protection, particularly in the event of loss or theft. (Your options will vary depending on the device, operating system, and manufacturer.) Take it a step further for even more protection. Secure the accounts on your phone with strong passwords and use two-factor authentication on the apps that offer it.

Don’t hop onto public Wi-Fi networks without protection. A virtual private network (VPN) masks your connection from hackers, allowing you to browse privately on unsecure public networks at airports, cafes, hotels, and the like. With a VPN connection, your sensitive data, documents, and activities are protected from snooping. It’s definitely a great feeling given the amount of personal and professional business we manage with our smartphones.

Both Google Play and Apple’s App Store have measures in place to help prevent potentially dangerous apps from making it into their stores. Malicious apps are often found outside of the app stores, which can run in the background and compromise your personal data such as passwords, credit card numbers, and more—practically everything that you keep on your phone. Further, when you are in the app stores, look closely at the descriptions and reviews for apps before you download them as malicious apps and counterfeits can still find their way into stores.

Backing up your phone is always a good idea for two reasons:

Both iPhones and Android phones have straightforward ways of backing up your phone regularly.

Worst case scenario—your phone is gone. Really gone. Either it’s hopelessly lost or got stolen. What now? Lock it remotely or even wipe its data entirely. While it seems like a drastic move, your data is secure in the cloud ready to be restored IF you maintain regular backups as mentioned above. This means hackers won’t be able to access your or your company’s sensitive information, keeping you and your professional business safe. Apple and Google provide their users with a step-by-step guide for remotely wiping devices.

Needless to say, smartphone updates should always start with the operating system (OS). In addition, you also need to conduct app updates as soon as they’re available, as they contain critical security patches. Take a few moments to swipe through your screen, see which apps you’re truly done with, and delete them along with their data. Every extra app is another app that needs updating or that may come with a security issue. Along with deleting an app, also delete the account associated with it. As for the ones you keep, update them regularly and turn on auto-updates if that’s an option.

With so much of your life on your phone, getting security software installed on it can protect you and the things you keep. Whether you’re an Android owner or iOS owner, McAfee+ conducts regular security scans to help you keep your personal, financial, and even company data secure.

While it’s convenient to have everything at your fingertips, storing too much sensitive information on your smartphone makes you vulnerable if your device is lost, stolen, or compromised. Here are some tips to limit the data on your phone and reduce your risk of identity theft, financial fraud, and privacy breaches.

At a deeper level, there are several lesser-known settings you can adjust to protect your phone from being hacked. These advanced steps add extra layers of security to your device.

Yes, malware or spyware can give a hacker access to your camera and microphone, allowing them to see and hear you without your knowledge. To prevent this, be cautious about app permissions and consider using a physical camera cover for peace of mind.

It’s possible. Some malicious websites can attempt to automatically download malware or exploit browser vulnerabilities to compromise your device. Using a secure browser and comprehensive security software that warns you of risky sites is your best defense.

For the vast majority of users, a phone that is completely powered off cannot be hacked remotely. Hacking requires the device’s operating system and network connections to be active, so turning it off effectively cuts that connection.

Simply answering a call from an unknown number is highly unlikely to hack your phone. The real danger lies in social engineering, where the scammer on the other end tries to trick you into revealing personal information, visiting a malicious website, or dialing a specific code.

Your smartphone is central to your life, and understanding how to keep your phone safe from hackers is not about being fearful, but about being prepared. By taking proactive and consistent steps, you create powerful layers of defense that make you a much harder target for cybercriminals. Combining smart habits with the advanced protection offered by security solutions like McAfee+ ensures your data, privacy, and peace of mind are always safeguarded. Stay informed about new threats, keep your security software current, and enjoy all the good your connected life has to offer, safely and securely.

The post 7 Tips to Protect Your Smartphone from Getting Hacked appeared first on McAfee Blog.

Exploitation and scanning tool specifically designed for Jenkins versions <= 2.441 & <= LTS 2.426.2. It leverages CVE-2024-23897 to assess and exploit vulnerabilities in Jenkins instances.

Ensure you have the necessary permissions to scan and exploit the target systems. Use this tool responsibly and ethically.

python CVE-2024-23897.py -t <target> -p <port> -f <file>

or

python CVE-2024-23897.py -i <input_file> -f <file>

Parameters:

- -t or --target: Specify the target IP(s). Supports single IP, IP range, comma-separated list, or CIDR block.
- -i or --input-file: Path to input file containing hosts in the format of http://1.2.3.4:8080/ (one per line).
- -o or --output-file: Export results to file (optional).
- -p or --port: Specify the port number. Default is 8080 (optional).
- -f or --file: Specify the file to read on the target system.
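The target formats accepted by -t can be illustrated with a short sketch. This is not the tool's own parsing code: the function name `expand_targets` and the `start-end` range syntax are assumptions made for illustration, and only Python's standard `ipaddress` module is used.

```python
import ipaddress


def expand_targets(spec: str) -> list[str]:
    """Expand a target spec into individual IP address strings.

    Accepts a single IP, a hypothetical "start-end" range, a CIDR block,
    or a comma-separated mix of these.
    """
    hosts: list[str] = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "/" in part:
            # CIDR block: hosts() omits the network and broadcast addresses
            # for ordinary prefixes such as /24 or /30.
            network = ipaddress.ip_network(part, strict=False)
            hosts.extend(str(addr) for addr in network.hosts())
        elif "-" in part:
            # Assumed range syntax: "10.0.0.1-10.0.0.3" (inclusive).
            start_text, end_text = part.split("-", 1)
            start = ipaddress.ip_address(start_text.strip())
            end = ipaddress.ip_address(end_text.strip())
            if int(end) < int(start):
                raise ValueError(f"range end precedes start: {part}")
            for offset in range(int(end) - int(start) + 1):
                hosts.append(str(start + offset))
        else:
            # Single address; ip_address() raises ValueError if malformed.
            hosts.append(str(ipaddress.ip_address(part)))
    return hosts
```

Validating every entry up front means a typo in the target list fails early instead of producing requests to unintended hosts.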

Contributions are welcome. Please feel free to fork, modify, and make pull requests or report issues.

Alexander Hagenah - URL - Twitter

This tool is meant for educational and professional purposes only. Unauthorized scanning and exploiting of systems is illegal and unethical. Always ensure you have explicit permission to test and exploit any systems you target.

SploitScan is a powerful and user-friendly tool designed to streamline the process of identifying exploits for known vulnerabilities and their respective exploitation probability. It gives cybersecurity professionals the capability to swiftly identify and apply known and test exploits, and it is particularly valuable for professionals seeking to enhance their security measures or develop robust detection strategies against emerging threats.

Regular:

python sploitscan.py CVE-YYYY-NNNNN

Enter one or more CVE IDs to fetch data. Separate multiple CVE IDs with spaces.

python sploitscan.py CVE-YYYY-NNNNN CVE-YYYY-NNNNN

Optional: Export the results to a JSON or CSV file. Specify the format: 'json' or 'csv'.

python sploitscan.py CVE-YYYY-NNNNN -e JSON

The Patching Prioritization System in SploitScan provides a strategic approach to prioritizing security patches based on the severity and exploitability of vulnerabilities. It's influenced by the model from CVE Prioritizer, with enhancements for handling publicly available exploits. Here's how it works:

This system assists users in making informed decisions on which vulnerabilities to patch first, considering both their potential impact and the likelihood of exploitation. Thresholds can be changed to your business needs.
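A severity-plus-exploitability ranking of this kind can be sketched in a few lines. The text above does not give the actual rules, so everything specific here is an assumption for illustration: the use of CVSS and EPSS scores, the CISA KEV flag, the thresholds 6.0 and 0.2, and the labels A+ through D follow the general shape of the CVE Prioritizer model rather than SploitScan's documented behavior.

```python
# Assumed thresholds; adjust them to your business needs.
CVSS_THRESHOLD = 6.0
EPSS_THRESHOLD = 0.2


def patch_priority(cvss: float, epss: float,
                   in_kev: bool = False,
                   has_public_exploit: bool = False) -> str:
    """Return a hypothetical priority label from 'A+' (highest) to 'D'."""
    # Known exploitation or a public exploit outranks any score.
    if in_kev or has_public_exploit:
        return "A+"
    high_severity = cvss >= CVSS_THRESHOLD
    high_likelihood = epss >= EPSS_THRESHOLD
    if high_severity and high_likelihood:
        return "A"
    if high_severity:
        return "B"
    if high_likelihood:
        return "C"
    return "D"
```

Keeping the thresholds as module-level constants mirrors the point that they can be tuned without touching the ranking logic.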

Contributions are welcome. Please feel free to fork, modify, and make pull requests or report issues.

Alexander Hagenah - URL - Twitter

SqliSniper is a robust Python tool designed to detect time-based blind SQL injections in HTTP request headers. It enhances the security assessment process by rapidly scanning for potential vulnerabilities using multi-threading, ensuring speed and efficiency. Unlike other scanners, SqliSniper is designed to eliminate false positives and to send alerts upon detection through its built-in Discord notification functionality.

git clone https://github.com/danialhalo/SqliSniper.git

cd SqliSniper

chmod +x sqlisniper.py

pip3 install -r requirements.txt

This will display help for the tool. Here are all the options it supports.

ubuntu:~/sqlisniper$ ./sqlisniper.py -h

███████╗ ██████╗ ██╗ ██╗ ███████╗███╗ ██╗██╗██████╗ ███████╗██████╗

██╔════╝██╔═══██╗██║ ██║ ██╔════╝████╗ ██║██║██╔══██╗██╔════╝██╔══██╗

██████╗██║ ██║██║ ██║ ███████╗██╔██╗ ██║██║██████╔╝█████╗ ██████╔╝

╚════██║██║▄▄ ██║██║ ██║ ╚════██║██║╚██╗██║██║██╔═══╝ ██╔══╝ ██╔══██╗

███████║╚██ ███╔╝███████╗██║ ███████║██║ ╚████║██║██║ ███████╗██║ ██║

╚══════╝ ╚══▀▀═╝ ╚══════╝╚═╝ ╚══════╝╚═╝ ╚═══╝╚═╝╚═╝ ╚══════╝╚═╝ ╚═╝

-: By Muhammad Danial :-

usage: sqlisniper.py [-h] [-u URL] [-r URLS_FILE] [-p] [--proxy PROXY] [--payload PAYLOAD] [--single-payload SINGLE_PAYLOAD] [--discord DISCORD] [--headers HEADERS]

[--threads THREADS]

Detect SQL injection by sending malicious queries

options:

-h, --help show this help message and exit

-u URL, --url URL Single URL for the target

-r URLS_FILE, --urls_file URLS_FILE

File containing a list of URLs

-p, --pipeline Read from pipeline

--proxy PROXY Proxy for intercepting requests (e.g., http://127.0.0.1:8080)

--payload PAYLOAD File containing malicious payloads (default is payloads.txt)

--single-payload SINGLE_PAYLOAD

Single payload for testing

--discord DISCORD Discord Webhook URL

--headers HEADERS File containing headers (default is headers.txt)

--threads THREADS Number of threads

The URL can be provided with the -u flag for a single-site scan.

./sqlisniper.py -u http://example.com

The -r flag allows SqliSniper to read a file containing multiple URLs for simultaneous scanning.

./sqlisniper.py -r url.txt

SqliSniper can also work with pipeline input using the -p flag.

cat url.txt | ./sqlisniper.py -p

The pipeline feature facilitates seamless integration with other tools. For instance, you can utilize tools like subfinder and httpx, and then pipe their output to SqliSniper for mass scanning.

subfinder -silent -d google.com | sort -u | httpx -silent | ./sqlisniper.py -p

By default, SqliSniper uses the payloads.txt file. However, the --payload flag can be used to provide a custom payloads file.

./sqlisniper.py -u http://example.com --payload mssql_payloads.txt

While using the custom payloads file, ensure that you substitute the sleep time with %__TIME_OUT__%. SqliSniper dynamically adjusts the sleep time iteratively to mitigate potential false positives. The payloads file should look like this.

ubuntu:~/sqlisniper$ cat payloads.txt

0\"XOR(if(now()=sysdate(),sleep(%__TIME_OUT__%),0))XOR\"Z

"0"XOR(if(now()=sysdate()%2Csleep(%__TIME_OUT__%)%2C0))XOR"Z"

0'XOR(if(now()=sysdate(),sleep(%__TIME_OUT__%),0))XOR'Z
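The placeholder substitution and the idea of re-testing with several sleep times can be sketched as follows. This is an illustrative outline, not SqliSniper's implementation: the function names, the sleep values (3, 6, 9), the tolerance, and the `send` callable (which stands in for "send the payload in a header and return the elapsed seconds") are all assumptions. The fake senders exist only so the sketch runs without a network.

```python
import re
from typing import Callable, Sequence

PLACEHOLDER = "%__TIME_OUT__%"


def render_payload(template: str, sleep_seconds: int) -> str:
    """Substitute the sleep-time placeholder with a concrete value."""
    return template.replace(PLACEHOLDER, str(sleep_seconds))


def looks_time_injectable(template: str,
                          send: Callable[[str], float],
                          sleep_values: Sequence[int] = (3, 6, 9),
                          tolerance: float = 2.0) -> bool:
    """Report a hit only if every response delay tracks the requested sleep.

    A server that is merely slow will match one sleep value at most,
    so repeating the test with different values filters it out.
    """
    for seconds in sleep_values:
        elapsed = send(render_payload(template, seconds))
        if not (seconds <= elapsed <= seconds + tolerance):
            return False
    return True


def fake_vulnerable_send(payload: str) -> float:
    """Stand-in target that 'sleeps' for the injected duration."""
    match = re.search(r"sleep\((\d+)\)", payload)
    return (float(match.group(1)) if match else 0.0) + 0.2


def fake_slow_send(payload: str) -> float:
    """Stand-in target that is always slow, regardless of payload."""
    return 5.0
```

With the third payload above as the template, `fake_vulnerable_send` passes all three rounds, while `fake_slow_send` passes the 3-second round by coincidence and fails the 6-second one.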

If you want to test with only a single payload, the --single-payload flag can be used. Make sure to replace the sleep time with %__TIME_OUT__%.

./sqlisniper.py -r url.txt --single-payload "0'XOR(if(now()=sysdate(),sleep(%__TIME_OUT__%),0))XOR'Z"

Headers are saved in the file headers.txt. To scan a custom header, save the custom HTTP request header in the headers.txt file.

ubuntu:~/sqlisniper$ cat headers.txt

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)

X-Forwarded-For: 127.0.0.1

SqliSniper also offers Discord alert notifications, enhancing its functionality by providing real-time alerts through Discord webhooks. This feature proves invaluable during large-scale scans, allowing prompt notifications upon detection.

./sqlisniper.py -r url.txt --discord <web_hookurl>

The number of threads can be set with the --threads flag.

./sqlisniper.py -r url.txt --threads 10

Note: A higher number of threads can lead to false positives or cause valid issues to be overlooked. Due to the nature of time-based SQL injection, it is recommended to use a lower thread count for more accurate detection.

SqliSniper is made in Python with lots of <3 by @Muhammad Danial.

This repo contains the code for our USENIX Security '23 paper "ARGUS: A Framework for Staged Static Taint Analysis of GitHub Workflows and Actions". Argus is a comprehensive security analysis tool specifically designed for GitHub Actions. Built with an aim to enhance the security of CI/CD workflows, Argus utilizes taint-tracking techniques and an impact classifier to detect potential vulnerabilities in GitHub Action workflows.

Visit our website - secureci.org for more information.

Taint-Tracking: Argus uses sophisticated algorithms to track the flow of potentially untrusted data from specific sources to security-critical sinks within GitHub Actions workflows. This enables the identification of vulnerabilities that could lead to code injection attacks.

Impact Classifier: Argus classifies identified vulnerabilities into High, Medium, and Low severity classes, providing a clearer understanding of the potential impact of each identified vulnerability. This is crucial in prioritizing mitigation efforts.
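The source-to-sink idea behind taint tracking can be shown with a deliberately naive sketch. It is not Argus's staged analysis: it only checks, within a single step, whether an attacker-influenced GitHub context expression is interpolated directly into a `run` script. The function name and the short source list are assumptions for illustration, and the workflow is passed as an already-parsed dictionary to avoid a YAML dependency.

```python
import re

# Non-exhaustive, illustrative list of contexts an outside user can influence.
UNTRUSTED_SOURCES = (
    "github.event.issue.title",
    "github.event.issue.body",
    "github.event.pull_request.title",
    "github.event.pull_request.body",
    "github.event.comment.body",
    "github.head_ref",
)

# Matches ${{ ... }} expressions and captures the inner text.
EXPRESSION = re.compile(r"\$\{\{\s*(.*?)\s*\}\}")


def find_tainted_run_steps(workflow: dict) -> list[tuple[str, int, str]]:
    """Return (job name, step index, expression) for each risky interpolation."""
    findings = []
    for job_name, job in workflow.get("jobs", {}).items():
        for index, step in enumerate(job.get("steps", [])):
            script = step.get("run")  # the sink: a shell script
            if not script:
                continue
            for expression in EXPRESSION.findall(script):
                if any(source in expression for source in UNTRUSTED_SOURCES):
                    findings.append((job_name, index, expression))
    return findings


example_workflow = {
    "jobs": {
        "greet": {
            "steps": [
                {"run": 'echo "${{ github.event.issue.title }}"'},
                {"run": "echo ${{ github.sha }}"},
                {"uses": "actions/checkout@v4"},
            ]
        }
    }
}
```

Calling `find_tainted_run_steps(example_workflow)` flags only the first step. A real taint tracker also has to follow data through environment variables, step outputs, and third-party actions, which this single-step check does not attempt.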

This Python script provides a command line interface for interacting with GitHub repositories and GitHub actions.

python argus.py --mode [mode] --url [url] [--output-folder path_to_output] [--config path_to_config] [--verbose] [--branch branch_name] [--commit commit_hash] [--tag tag_name] [--action-path path_to_action] [--workflow-path path_to_workflow]

--mode: The mode of operation. Choose either 'repo' or 'action'. This parameter is required.
--url: The GitHub URL. Use USERNAME:TOKEN@URL for private repos. This parameter is required.
--output-folder: The output folder. The default value is '/tmp'. This parameter is optional.
--config: The config file. This parameter is optional.
--verbose: Verbose mode. If this option is provided, the logging level is set to DEBUG. Otherwise, it is set to INFO. This parameter is optional.
--branch: The branch name. You must provide exactly one of: --branch, --commit, --tag. This parameter is optional.
--commit: The commit hash. You must provide exactly one of: --branch, --commit, --tag. This parameter is optional.
--tag: The tag. You must provide exactly one of: --branch, --commit, --tag. This parameter is optional.
--action-path: The (relative) path to the action. You cannot provide --action-path in repo mode. This parameter is optional.
--workflow-path: The (relative) path to the workflow. You cannot provide --workflow-path in action mode. This parameter is optional.

To use this script to interact with a GitHub repo, you might run a command like the following:

python argus.py --mode repo --url https://github.com/username/repo.git --branch master

This would run the script in repo mode on the master branch of the specified repository.
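The constraints between these options (exactly one of --branch, --commit, --tag, and the mode-specific path options) can be expressed with the standard `argparse` module. The following is a sketch of one way to do so under those stated rules; it is not taken from the Argus source, and the function names `build_parser` and `parse_args` are hypothetical.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="argus.py")
    parser.add_argument("--mode", choices=["repo", "action"], required=True)
    parser.add_argument("--url", required=True)
    parser.add_argument("--output-folder", default="/tmp")
    parser.add_argument("--config")
    parser.add_argument("--verbose", action="store_true")
    # Exactly one of --branch, --commit, --tag must be given.
    ref = parser.add_mutually_exclusive_group(required=True)
    ref.add_argument("--branch")
    ref.add_argument("--commit")
    ref.add_argument("--tag")
    parser.add_argument("--action-path")
    parser.add_argument("--workflow-path")
    return parser


def parse_args(argv: list[str]) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    # Cross-option rules that argparse cannot express declaratively.
    if args.mode == "repo" and args.action_path:
        parser.error("--action-path cannot be used in repo mode")
    if args.mode == "action" and args.workflow_path:
        parser.error("--workflow-path cannot be used in action mode")
    return args
```

With this sketch, the example command above parses cleanly, while passing both --branch and --tag exits with a usage error.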

Argus can be run inside a docker container. To do so, follow the steps:

You can view SARIF results either through an online viewer or with a Visual Studio Code (VSCode) extension.

Online Viewer: The SARIF Web Viewer is an online tool that allows you to visualize SARIF files. You can upload your SARIF file (argus_report.sarif) directly to the website to view the results.

VSCode Extension: If you prefer to use VSCode, you can install the SARIF Viewer extension. After installing the extension, you can open your SARIF file (argus_report.sarif) in VSCode. The results will appear in the SARIF Explorer pane, which provides a detailed and navigable view of the results.

Remember to handle the SARIF file with care, especially if it contains sensitive information from your codebase.
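For a quick look at a report without a viewer, a SARIF file can also be read as plain JSON. The sketch below assumes the standard SARIF 2.1.0 layout (`runs`, `results`, `ruleId`, `level`, `message.text`, `locations`); which of these fields Argus actually populates is not stated here, so each lookup falls back to a default. The helper names are hypothetical.

```python
import json
from collections import Counter


def load_sarif(path: str) -> dict:
    """Read a SARIF report from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def iter_results(sarif: dict):
    """Yield one flat dictionary per result across all runs."""
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            locations = result.get("locations") or [{}]
            uri = (locations[0]
                   .get("physicalLocation", {})
                   .get("artifactLocation", {})
                   .get("uri", "?"))
            yield {
                "rule": result.get("ruleId", "?"),
                # Fall back to "warning" when a result carries no level.
                "level": result.get("level", "warning"),
                "message": result.get("message", {}).get("text", ""),
                "uri": uri,
            }


def count_by_level(sarif: dict) -> Counter:
    """Count results per severity level."""
    return Counter(item["level"] for item in iter_results(sarif))
```

A typical use would be `count_by_level(load_sarif("argus_report.sarif"))`, followed by printing each item from `iter_results` for the levels of interest.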