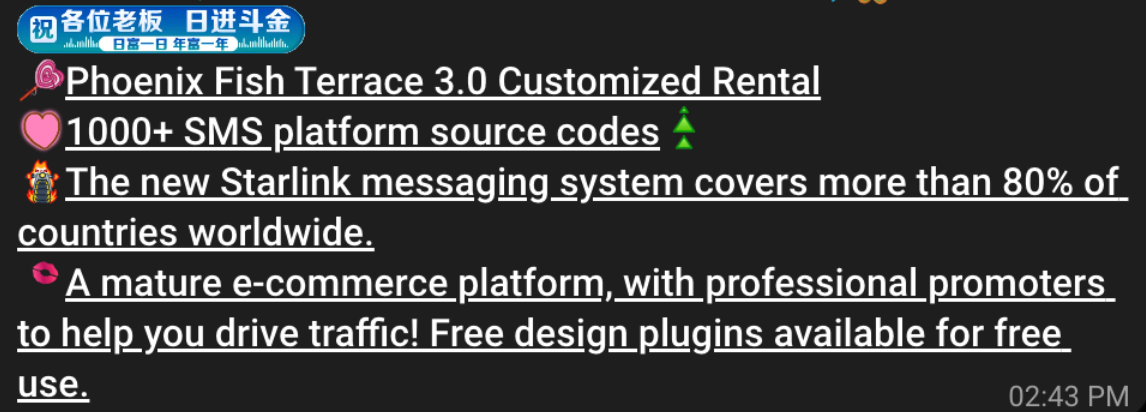

China-based phishing groups blamed for non-stop scam SMS messages about a supposed wayward package or unpaid toll fee are promoting a new offering, just in time for the holiday shopping season: Phishing kits for mass-creating fake but convincing e-commerce websites that convert customer payment card data into mobile wallets from Apple and Google. Experts say these same phishing groups also are now using SMS lures that promise unclaimed tax refunds and mobile rewards points.

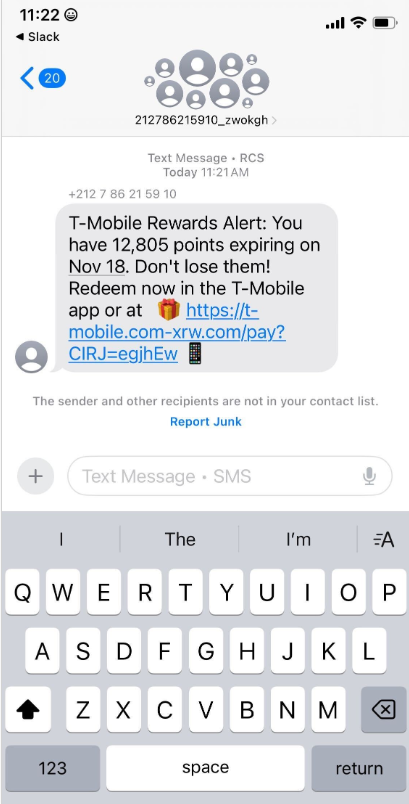

Over the past week, thousands of domain names were registered for scam websites that purport to offer T-Mobile customers the opportunity to claim a large number of rewards points. The phishing domains are being promoted by scam messages sent via Apple’s iMessage service or the functionally equivalent RCS messaging service built into Google phones.

An instant message spoofing T-Mobile says the recipient is eligible to claim thousands of rewards points.

The website scanning service urlscan.io shows thousands of these phishing domains have been deployed in just the past few days alone. The phishing websites will only load if the recipient visits with a mobile device, and they ask for the visitor’s name, address, phone number and payment card data to claim the points.

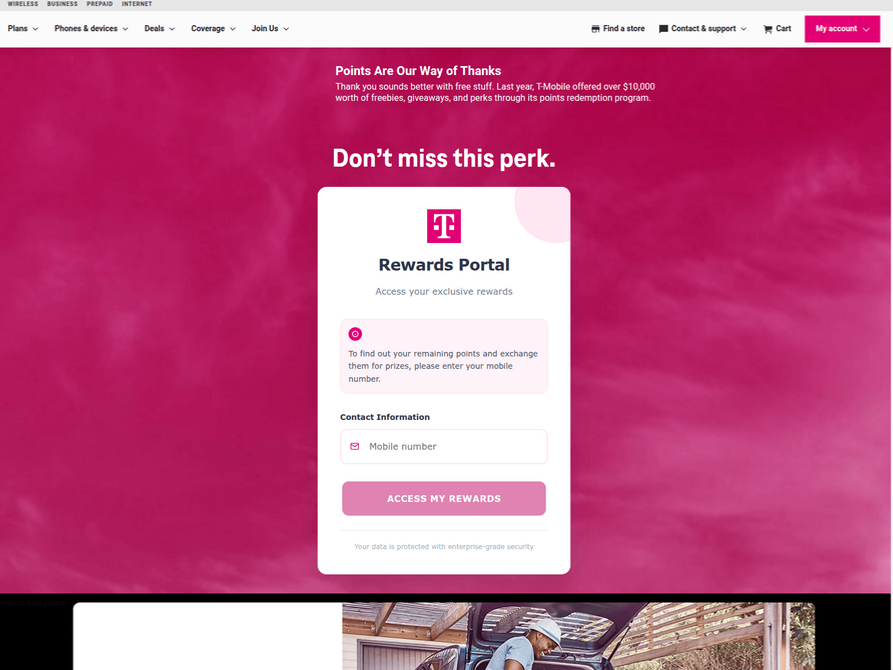

A phishing website registered this week that spoofs T-Mobile.

If card data is submitted, the site will then prompt the user to share a one-time code sent via SMS by their financial institution. In reality, the bank is sending the code because the fraudsters have just attempted to enroll the victim’s phished card details in a mobile wallet from Apple or Google. If the victim also provides that one-time code, the phishers can then link the victim’s card to a mobile device that they physically control.

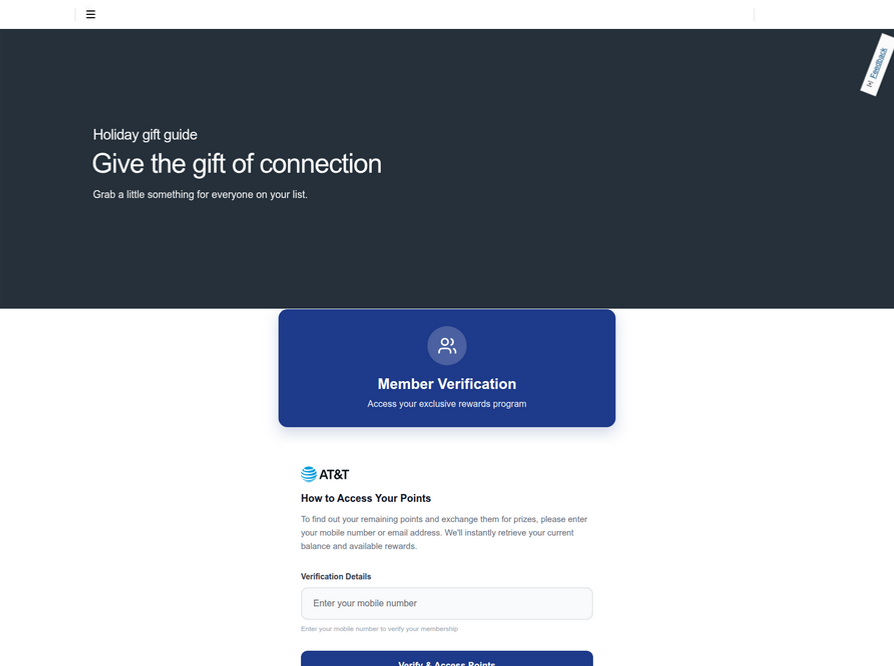

Pivoting off these T-Mobile phishing domains in urlscan.io reveals a similar scam targeting AT&T customers:

An SMS phishing or “smishing” website targeting AT&T users.

Ford Merrill works in security research at SecAlliance, a CSIS Security Group company. Merrill said multiple China-based cybercriminal groups that sell phishing-as-a-service platforms have been using the mobile points lure for some time, but the scam has only recently been pointed at consumers in the United States.

“These points redemption schemes have not been very popular in the U.S., but have been in other geographies like EU and Asia for a while now,” Merrill said.

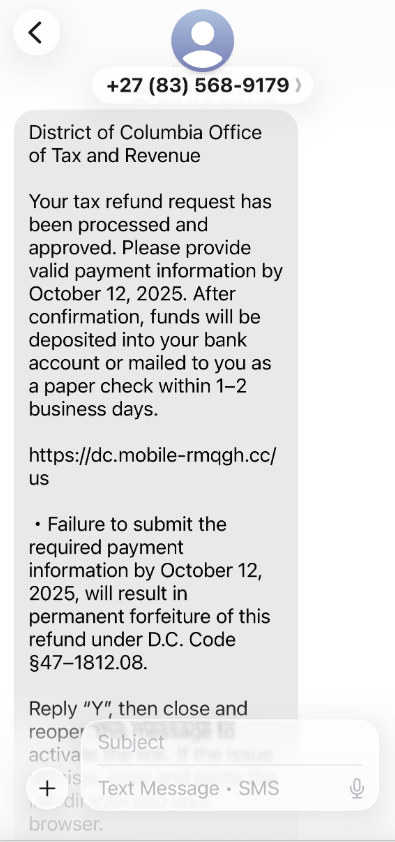

A review of other domains flagged by urlscan.io as tied to this Chinese SMS phishing syndicate shows they are also spoofing U.S. state tax authorities, telling recipients they have an unclaimed tax refund. Again, the goal is to phish the user’s payment card information and one-time code.

A text message that spoofs the District of Columbia’s Office of Tax and Revenue.

Many SMS phishing or “smishing” domains are quickly flagged by browser makers as malicious. But Merrill said one burgeoning area of growth for these phishing kits — fake e-commerce shops — can be far harder to spot because they do not call attention to themselves by spamming the entire world.

Merrill said the same Chinese phishing kits used to blast out package redelivery message scams are equipped with modules that make it simple to quickly deploy a fleet of fake but convincing e-commerce storefronts. Those phony stores are typically advertised on Google and Facebook, and consumers usually end up at them by searching online for deals on specific products.

A machine-translated screenshot of an ad from a China-based phishing group promoting their fake e-commerce shop templates.

With these fake e-commerce stores, the customer supplies their payment card and personal information as part of the normal check-out process, which is then punctuated by a request for a one-time code sent by their financial institution. The fake shopping site claims the code is required by the user’s bank to verify the transaction, but in reality it is sent because the scammers have immediately attempted to enroll the supplied card data in a mobile wallet.

According to Merrill, it is only during the check-out process that these fake shops will fetch the malicious code that gives them away as fraudulent, which tends to make it difficult to locate these stores simply by mass-scanning the web. Also, most customers who pay for products through these sites don’t realize they’ve been snookered until weeks later when the purchased item fails to arrive.

“The fake e-commerce sites are tough because a lot of them can fly under the radar,” Merrill said. “They can go months without being shut down, they’re hard to discover, and they generally don’t get flagged by safe browsing tools.”

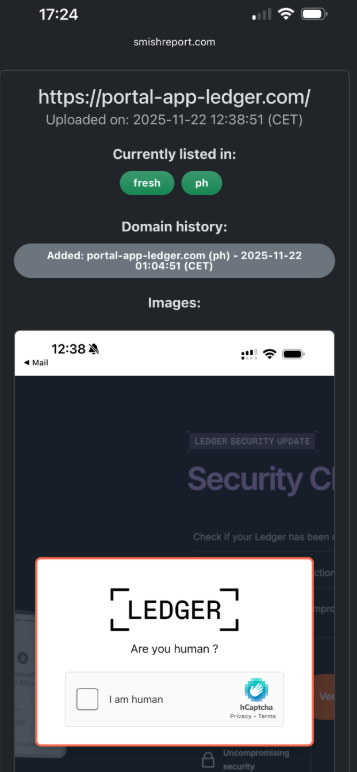

Happily, reporting these SMS phishing lures and websites is one of the fastest ways to get them properly identified and shut down. Raymond Dijkxhoorn is the CEO and a founding member of SURBL, a widely-used blocklist that flags domains and IP addresses known to be used in unsolicited messages, phishing and malware distribution. SURBL has created a website called smishreport.com that asks users to forward a screenshot of any smishing message(s) received.

“If [a domain is] unlisted, we can find and add the new pattern and kill the rest” of the matching domains, Dijkxhoorn said. “Just make a screenshot and upload. The tool does the rest.”

The SMS phishing reporting site smishreport.com.

Merrill said the last few weeks of the calendar year typically see a big uptick in smishing — particularly package redelivery schemes that spoof the U.S. Postal Service or commercial shipping companies.

“Every holiday season there is an explosion in smishing activity,” he said. “Everyone is in a bigger hurry, frantically shopping online, paying less attention than they should, and they’re just in a better mindset to get phished.”

As we can see, adopting a shopping strategy of simply buying from the online merchant with the lowest advertised prices can be a bit like playing Russian Roulette with your wallet. Even people who shop mainly at big-name online stores can get scammed if they’re not wary of too-good-to-be-true offers (think third-party sellers on these platforms).

If you don’t know much about the online merchant that has the item you wish to buy, take a few minutes to investigate its reputation. If you’re buying from an online store that is brand new, the risk that you will get scammed increases significantly. How do you know the lifespan of a site selling that must-have gadget at the lowest price? One easy way to get a quick idea is to run a basic WHOIS search on the site’s domain name. The more recent the site’s “created” date, the more likely it is a phantom store.
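The WHOIS check described above can be sketched in a few lines of Python. This is a minimal illustration, not a complete WHOIS client: it assumes you already have the raw text of a WHOIS response, and that the registry labels the field "Creation Date" or "created" with an ISO-style date (field names vary by registry). The sample record and the function names are hypothetical.

```python
import re
from datetime import date, datetime
from typing import Optional

# Matches lines such as "Creation Date: 2025-11-20T08:15:00Z" or "created: 2025-11-20".
# Assumption: the date starts in YYYY-MM-DD form; other registries may differ.
CREATED_PATTERN = re.compile(
    r"^\s*(?:Creation Date|created)\s*:\s*(\d{4}-\d{2}-\d{2})",
    re.IGNORECASE | re.MULTILINE,
)


def creation_date(whois_text: str) -> Optional[date]:
    """Return the first creation date found in raw WHOIS text, or None."""
    match = CREATED_PATTERN.search(whois_text)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%d").date()


def domain_age_days(whois_text: str, today: date) -> Optional[int]:
    """Return how many days old the domain is, or None if no date was found."""
    created = creation_date(whois_text)
    if created is None:
        return None
    return (today - created).days


# Hypothetical WHOIS record used only for illustration.
SAMPLE_RECORD = """Domain Name: EXAMPLE-SHOP.TEST
Creation Date: 2025-11-20T08:15:00Z
Registrar: Hypothetical Registrar LLC
"""

if __name__ == "__main__":
    print(domain_age_days(SAMPLE_RECORD, date(2025, 12, 1)))
```

A store whose domain is only days or weeks old is not proof of fraud, but it is a reason to look harder before entering card details.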

If you receive a message warning about a problem with an order or shipment, visit the e-commerce or shipping site directly, and avoid clicking on links or attachments — particularly missives that warn of some dire consequences unless you act quickly. Phishers and malware purveyors typically seize upon some kind of emergency to create a false alarm that often causes recipients to temporarily let their guard down.

But it’s not just outright scammers who can trip up your holiday shopping: Oftentimes, stores that advertise items at steeper discounts than their competitors make up for it by charging far more than normal for shipping and handling.

So be careful what you agree to: Check to make sure you know how long the item will take to be shipped, and that you understand the store’s return policies. Also, keep an eye out for hidden surcharges, and be wary of blithely clicking “ok” during the checkout process.

Most importantly, keep a close eye on your monthly statements. If I were a fraudster, I’d most definitely wait until the holidays to cram through a bunch of unauthorized charges on stolen cards, so that the bogus purchases would get buried amid a flurry of other legitimate transactions. That’s why it’s key to closely review your credit card bill and to quickly dispute any charges you didn’t authorize.

New online threats emerge every day, putting our personal information, money and devices at risk. In its 2024 Internet Crime Report, the Federal Bureau of Investigation reports that 859,532 complaints of suspected internet crime—including ransomware, viruses and malware, data breaches, denials of service, and other forms of cyberattack—resulted in losses of over $16 billion—a 33% increase from 2023.

That’s why it is essential to stay ahead of these threats. One way to combat these is by conducting virus scans using proven software tools that constantly monitor and check your devices while safeguarding your sensitive information. In this article, we’ll go through everything you need to know to run a scan effectively to keep your computers, phones and tablets in tip-top shape.

Whether you think you might have a virus on your computer or devices or just want to keep them running smoothly, it’s easy to do a virus scan.

Each antivirus program works a little differently, but in general the software will look for known malware with specific characteristics, as well as their variants that have a similar code base. Some antivirus software even checks for suspicious behavior. If the software comes across a dangerous program or piece of code, the antivirus software removes it. In some cases, a dangerous program can be replaced with a clean one from the manufacturer.

Before doing a virus scan, it is useful to know the telltale signs of viral presence in your device. Is your device acting sluggish or having a hard time booting up? Have you noticed missing files or a lack of storage space? Have you noticed emails or messages sent from your account that you did not write? Perhaps you’ve noticed changes to your browser homepage or settings? Maybe you’re seeing unexpected pop-up windows, or experiencing crashes and other program errors. These are just some signs that your device may have a virus, but don’t get too worried yet because many of these issues can be resolved with a virus scan.

Free virus scanner tools, both in web-based and downloadable formats, offer a convenient way to perform a one-time check for malware. They are most useful when you need a second opinion or are asking yourself, “do I have a virus?” after noticing something suspect.

However, it’s critical to be cautious. For one, cybercriminals often create fake “free” virus checker tools that are actually malware in disguise. If you opt for free scanning tools, it is best to lean on highly reputable cybersecurity brands. On your app store or browser, navigate to a proven online scanning tool with good reviews, and check that the website’s URL starts with “https”. That prefix confirms the connection is encrypted, not that the site is trustworthy, so the brand’s reputation still matters most.

Secondly, free tools are frequently quite basic and perform only the minimum required service. If you choose to go this path, look for free trial versions that offer access to the full suite of premium features, including real-time protection, a firewall, and a VPN. This will give you a glimpse of a solution’s comprehensive, multi-layered security capability before you commit to a subscription.

If safeguarding all your computers and mobile devices individually sounds overwhelming, you can opt for comprehensive security products that protect computers, smartphones and tablets from a central, cloud-based hub, making virus prevention a breeze. Many of these modern antivirus solutions are powered by both local and cloud-based technologies to reduce the strain on your computer’s resources.

This guide will walk you through the simple steps to safely scan your computer using reliable online tools, helping you detect potential threats, and protect your personal data.

When selecting the right antivirus software, look beyond a basic virus scan and consider these key features:

The process of checking for viruses depends on the device type and its operating system. Generally, however, the virus scanner will display a “Scan” button to start the process of checking your system’s files and apps.

Here are more specific tips to help you scan your computers, phones and tablets:

If you use Windows 11, go into “Settings” and drill down to the “Privacy & Security > Windows Security > Virus & Threat Protection” tab, which will indicate if there are actions needed. This hands-off function is Microsoft’s own basic antivirus solution called Windows Defender. Built directly into the operating system and enabled by default, this solution provides a baseline of protection at no extra cost for casual Windows users. However, Microsoft is the first to admit that it lags behind specialized paid products in detecting the very latest zero-day threats.

Mac computers don’t have a built-in antivirus program, so you will have to download security software to do a virus scan. As mentioned, free antivirus applications are available online, but we recommend investing in trusted software that is proven to protect you from cyberthreats.

If you decide to invest in more robust antivirus software, running a scan is usually straightforward and intuitive. For more detailed instructions, we suggest searching the software’s help menu or going online and following their step-by-step instructions.

Smartphones and tablets are powerful devices that you likely use for nearly every online operation in your daily life, from banking and emailing to messaging, connecting, and storing personal information. This exposes your mobile device to infection through malicious apps, especially those downloaded from unofficial stores, phishing links sent via text or email, or compromised Wi-Fi networks.

Regular virus scans with mobile security software are crucial for protecting your devices. Be aware, however, that the Android and iOS operating systems call for distinct solutions.

Antivirus products for Android devices abound due to the system’s open-source foundation. On iPhones and iPads, by contrast, traditional viruses are rare thanks to Apple’s strong security model, which includes app sandboxing. These devices are not immune to all threats, though. You can still fall victim to phishing scams, insecure Wi-Fi networks, and malicious configuration profiles. Signs of a compromise can include unusual calendar events, frequent browser redirects, or unexpected pop-ups.

Apple’s closed platform does not easily accommodate third-party applications, especially unvetted ones. You will most likely find robust and verified antivirus scanning tools on Apple’s official App Store.

Before you open any downloaded file or email attachment, it’s wise to check it for threats. To perform a targeted virus scan on a single file, simply right-click the file in Windows Explorer or macOS Finder and select the “Scan” option from the context menu to run the integrated virus checker on a suspicious item.

For an added layer of security, especially involving files from unknown sources, you can use a web-based file-checking service that scans for malware. These websites let you upload a file, which is then analyzed by multiple antivirus engines. Many security-conscious email clients also automatically scan incoming attachments, but a manual scan provides crucial, final-line defense before execution.

Once the scan is complete, the tool will display a report of any threats it found, including the name of the malware and the location of the infected file. If your antivirus software alerts you to a threat, don’t panic—it means the program is doing its job.

The first and most critical step is to follow the software’s instructions. It might direct you to quarantine the malicious file to isolate the file in a secure vault where it can no longer cause harm. You can then review the details of the threat provided by your virus scanner and choose to delete the file permanently, which is usually the safest option.

After the threat is handled, ensure your antivirus software and operating system are fully updated. Finally, run a new, full system virus scan to confirm that all traces of the infection have been eliminated. Regularly backing up your important data to an external drive or cloud service can also be a lifesaver in the event of a serious infection.

The most effective way to maintain your device’s security is to automate your defenses. A quality antivirus suite allows you to easily schedule a regular virus scan so you’re always protected without having to do it manually. A daily quick scan is a great habit for any user; it’s fast and checks the most vulnerable parts of your system. Most antivirus products also scan your computer or device in the background, so a manual scan is only needed if you notice something dubious, like crashes or excessive pop-ups. You can schedule full scans at whatever interval suits you, though a weekly full scan is ideal.

These days, it is essential to stay ahead of the wide variety of continuously evolving cyberthreats. Your first line of defense against these threats is to regularly conduct a virus scan. You can choose among the many free yet limited-time products or comprehensive, cloud-based solutions.

While many free versions legitimately perform their intended function, it’s critical to be cautious as these are more often baseline solutions while some are malware in disguise. They also lack the continuous, real-time protection necessary to block threats proactively.

A better option is to invest in verified, trustworthy, and all-in-one antivirus products like McAfee+ that, aside from an accurate virus scanning tool, also offer a firewall, a virtual private network, and identity protection. For complete peace of mind, upgrading to a paid solution like McAfee Total Protection is essential for proactively safeguarding your devices and data in real-time, 24/7.

The post How to Scan for Viruses and Confirm Your Device Is Safe appeared first on McAfee Blog.

PEGASUS PENTEST ARSENAL

A comprehensive web application security testing toolkit that combines 10 powerful penetration testing features into one tool.

Identifies potential security misconfigurations

JWT Token Inspector

Detects common JWT vulnerabilities

Parameter Pollution Finder

Detects server-side parameter handling issues

CORS Misconfiguration Scanner

Detects credential exposure risks

Upload Bypass Tester

Identifies dangerous file type handling

Exposed .git Directory Finder

Tests for sensitive information disclosure

SSRF (Server Side Request Forgery) Detector

Includes cloud metadata endpoint tests

Blind SQL Injection Time Delay Detector

Identifies injectable parameters

Local File Inclusion (LFI) Mapper

Supports various encoding bypasses

Web Application Firewall (WAF) Fingerprinter
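As one illustration of what a JWT inspection can look for, the sketch below decodes a token's header and flags the well-known `alg: none` weakness, where a token claims to need no signature. This is a hypothetical example of one common check, not the toolkit's actual implementation; the function names are assumptions made for illustration.

```python
import base64
import json


def decode_jwt_header(token: str) -> dict:
    """Decode the first (header) segment of a JWT without verifying anything."""
    header_segment = token.split(".")[0]
    # JWTs strip base64url padding, so restore it before decoding.
    padded = header_segment + "=" * (-len(header_segment) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def uses_none_algorithm(token: str) -> bool:
    """Return True if the header declares the unsigned 'none' algorithm."""
    alg = decode_jwt_header(token).get("alg", "")
    return str(alg).lower() == "none"
```

A token that passes this check is not necessarily safe; it only rules out one class of problem.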

git clone https://github.com/sobri3195/pegasus-pentest-arsenal.git

cd pegasus-pentest-arsenal

python -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

pip install -r requirements.txt

python pegasus_pentest.py

This project is licensed under the MIT License - see the LICENSE file for details.

This tool is provided for educational and authorized testing purposes only. Users are responsible for obtaining proper authorization before testing any target. The authors are not responsible for any misuse or damage caused by this tool.

SubGPT looks at subdomains you have already discovered for a domain and uses BingGPT to find more. Best part? It's free!

The following subdomains were found by this tool with these 30 subdomains as input.

call-prompts-staging.example.com

dclb02-dca1.prod.example.com

activedirectory-sjc1.example.com

iadm-staging.example.com

elevatenetwork-c.example.com

If you like my work, you can support me with as little as $1, here :)

pip install subgpt

git clone https://github.com/s0md3v/SubGPT && cd SubGPT && python setup.py install

cookies.json

Note: Any issues regarding BingGPT itself should be reported to EdgeGPT, not here.

It is supposed to be used after you have discovered some subdomains using all other methods. The standard way to run SubGPT is as follows:

subgpt -i input.txt -o output.txt -c /path/to/cookies.json

If you don't specify an output file, the output will be shown in your terminal (stdout) instead.

To generate subdomains and not resolve them, use the --dont-resolve option. It's a great way to see all subdomains generated by SubGPT and/or use your own resolver on them.
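If you take the unresolved output and want to run your own resolver over it, something like the following could work. It is a minimal sketch that assumes the candidates are plain hostnames, one per entry; `filter_resolving` and `system_resolver` are hypothetical names, and the resolver is injectable so that a faster or custom DNS client can be swapped in.

```python
import socket
from typing import Callable, Iterable, List


def system_resolver(hostname: str) -> bool:
    """Return True if the operating system's resolver finds an address."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def filter_resolving(
    candidates: Iterable[str],
    resolver: Callable[[str], bool] = system_resolver,
) -> List[str]:
    """Deduplicate candidate hostnames and keep only those that resolve."""
    seen = set()
    live = []
    for raw in candidates:
        host = raw.strip().lower()
        if not host or host in seen:
            continue  # skip blank lines and repeats
        seen.add(host)
        if resolver(host):
            live.append(host)
    return live
```

Reading the candidates from a file is then a matter of passing the open file object, since it iterates line by line.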

PEGASUS-NEO

PEGASUS-NEO is a comprehensive penetration testing framework designed for security professionals and ethical hackers. It combines multiple security tools and custom modules for reconnaissance, exploitation, wireless attacks, web hacking, and more.

This tool is provided for educational and ethical testing purposes only. Usage of PEGASUS-NEO for attacking targets without prior mutual consent is illegal. It is the end user's responsibility to obey all applicable local, state, and federal laws.

Developers assume no liability and are not responsible for any misuse or damage caused by this program.

PEGASUS-NEO - Advanced Penetration Testing Framework

Copyright (C) 2024 Letda Kes dr. Sobri. All rights reserved.

This software is proprietary and confidential. Unauthorized copying, transfer, or

reproduction of this software, via any medium is strictly prohibited.

Written by Letda Kes dr. Sobri <muhammadsobrimaulana31@gmail.com>, January 2024

Password: Sobri

Social media tracking

Exploitation & Pentesting

Custom payload generation

Wireless Attacks

WPS exploitation

Web Attacks

CMS scanning

Social Engineering

Credential harvesting

Tracking & Analysis

# Clone the repository

git clone https://github.com/sobri3195/pegasus-neo.git

# Change directory

cd pegasus-neo

# Install dependencies

sudo python3 -m pip install -r requirements.txt

# Run the tool

sudo python3 pegasus_neo.py

This is a proprietary project and contributions are not accepted at this time.

For support, please email muhammadsobrimaulana31@gmail.com or visit https://lynk.id/muhsobrimaulana

This project is protected under proprietary license. See the LICENSE file for details.

Made with ❤️ by Letda Kes dr. Sobri

A Python script to check Next.js sites for corrupt middleware vulnerability (CVE-2025-29927).

The corrupt middleware vulnerability allows an attacker to bypass authentication and access protected routes by sending a custom x-middleware-subrequest header.

Next.js versions affected: 11.1.4 and up

[!WARNING] This tool is for educational purposes only. Do not use it on websites or systems you do not own or have explicit permission to test. Unauthorized testing may be illegal and unethical.

Clone the repo

git clone https://github.com/takumade/ghost-route.git

cd ghost-route

Create and activate virtual environment

python -m venv .venv

source .venv/bin/activate

Install dependencies

pip install -r requirements.txt

python ghost-route.py <url> <path> <show_headers>

<url>: Base URL of the Next.js site (e.g., https://example.com)

<path>: Protected path to test (default: /admin)

<show_headers>: Show response headers (default: False)

Basic Example

python ghost-route.py https://example.com /admin

Show Response Headers

python ghost-route.py https://example.com /admin True
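One way to reason about the result of such a check is to compare two responses for the same protected path: a baseline request, and a probe request that carries the x-middleware-subrequest header. The sketch below only classifies two status codes you have already collected on a system you are authorized to test. It is a hypothetical illustration, not the script's actual logic, and the set of "protected" status codes is an assumption.

```python
# Assumption: a protected route normally answers with a redirect to a login
# page or with an explicit authentication/authorization error.
PROTECTED_STATUSES = {301, 302, 303, 307, 308, 401, 403}


def looks_bypassed(baseline_status: int, probe_status: int) -> bool:
    """Return True when the route was blocked normally but served with the header.

    baseline_status: HTTP status of the request sent without the extra header.
    probe_status: HTTP status of the same request sent with the header.
    """
    return baseline_status in PROTECTED_STATUSES and probe_status == 200
```

A True result is a signal to investigate further, not proof: a route that returns 200 for other reasons would need manual review.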

MIT License

Welcome to TruffleHog Explorer, a user-friendly web-based tool to visualize and analyze data extracted using TruffleHog. TruffleHog is one of the most powerful open source tools for secrets discovery, classification, validation, and analysis. In this context, a secret refers to a credential a machine uses to authenticate itself to another machine. This includes API keys, database passwords, private encryption keys, and more.

With an improved UI/UX, powerful filtering options, and export capabilities, this tool helps security professionals efficiently review potential secrets and credentials found in their repositories.

⚠️ This dashboard has been tested only with GitHub TruffleHog JSON outputs. Expect updates soon to support additional formats and platforms.

You can use the online version here: TruffleHog Explorer

$ git clone https://github.com/yourusername/trufflehog-explorer.git

$ cd trufflehog-explorer

index.html

Simply open the index.html file in your preferred web browser.

$ open index.html

.json files from TruffleHog output.

Happy Securing! 🔒

Lobo Guará is a platform aimed at cybersecurity professionals, with various features focused on Cyber Threat Intelligence (CTI). It offers tools that make it easier to identify threats, monitor data leaks, analyze suspicious domains and URLs, and much more.

Allows identifying domains and subdomains that may pose a threat to organizations. SSL certificates issued by trusted authorities are indexed in real-time, and users can search using keywords of 4 or more characters.

Note: The current database contains certificates issued from September 5, 2024.

Allows the insertion of keywords for monitoring. When a certificate is issued and the common name contains the keyword (minimum of 5 characters), it will be displayed to the user.
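The keyword rule described above (a case-insensitive substring match on the certificate's common name, with a five-character minimum) can be sketched as follows. This is an assumption about how such matching might work, not the platform's code; `matching_keywords` is a hypothetical helper.

```python
from typing import Iterable, List

MIN_KEYWORD_LENGTH = 5  # minimum stated for monitored keywords


def matching_keywords(common_name: str, keywords: Iterable[str]) -> List[str]:
    """Return the monitored keywords that appear in a certificate common name.

    Keywords shorter than the minimum length are ignored, and matching is
    case-insensitive (an assumption made for this sketch).
    """
    name = common_name.lower()
    return [
        kw
        for kw in keywords
        if len(kw) >= MIN_KEYWORD_LENGTH and kw.lower() in name
    ]
```

For example, a watch list containing a brand name would flag a newly issued certificate for a lookalike login domain that embeds that name.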

Generates a link to capture device information from attackers. Useful when the security professional can contact the attacker in some way.

Performs a scan on a domain, displaying whois information and subdomains associated with that domain.

Allows performing a scan on a URL to identify URIs (web paths) related to that URL.

Performs a scan on a URL, generating a screenshot and a mirror of the page. The result can be made public to assist in taking down malicious websites.

Monitors a URL with no active application until it returns an HTTP 200 code. At that moment, it automatically initiates a URL scan, providing evidence for actions against malicious sites.
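The monitoring behaviour described above amounts to a polling loop: keep checking the URL, and hand off to the scan step the first time it answers with HTTP 200. The sketch below shows that loop in isolation. It assumes the caller supplies a `fetch_status` function that returns the current status code (or None when nothing is listening); that function, like `wait_for_http_200` itself, is hypothetical and not part of Lobo Guará.

```python
import time
from typing import Callable, Optional


def wait_for_http_200(
    fetch_status: Callable[[], Optional[int]],
    max_attempts: int,
    delay_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[int]:
    """Poll until fetch_status() returns 200.

    Returns the attempt number (starting at 1) on which 200 was first seen,
    or None if max_attempts was reached without a 200 response.
    """
    for attempt in range(1, max_attempts + 1):
        if fetch_status() == 200:
            return attempt
        if attempt < max_attempts:
            sleep(delay_seconds)  # wait before the next check
    return None
```

Passing `sleep` as a parameter keeps the loop easy to test and lets a caller substitute a scheduler instead of blocking.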

Centralizes intelligence news from various channels, keeping users updated on the latest threats.

The application installation has been validated on Ubuntu 24.04 Server and Red Hat 9.4 distributions. Installation guides for each are linked below:

Lobo Guará Implementation on Ubuntu 24.04

Lobo Guará Implementation on Red Hat 9.4

There is a Dockerfile and a docker-compose version of Lobo Guará too. Just clone the repo and do:

docker compose up

Then, go to your web browser at localhost:7405.

Before proceeding with the installation, ensure the following dependencies are installed:

git clone https://github.com/olivsec/loboguara.git

cd loboguara/

nano server/app/config.py

Fill in the required parameters in the config.py file:

class Config:
    SECRET_KEY = 'YOUR_SECRET_KEY_HERE'
    SQLALCHEMY_DATABASE_URI = 'postgresql://guarauser:YOUR_PASSWORD_HERE@localhost/guaradb?sslmode=disable'
    SQLALCHEMY_TRACK_MODIFICATIONS = False
    MAIL_SERVER = 'smtp.example.com'
    MAIL_PORT = 587
    MAIL_USE_TLS = True
    MAIL_USERNAME = 'no-reply@example.com'
    MAIL_PASSWORD = 'YOUR_SMTP_PASSWORD_HERE'
    MAIL_DEFAULT_SENDER = 'no-reply@example.com'
    ALLOWED_DOMAINS = ['yourdomain1.my.id', 'yourdomain2.com', 'yourdomain3.net']
    API_ACCESS_TOKEN = 'YOUR_LOBOGUARA_API_TOKEN_HERE'
    API_URL = 'https://loboguara.olivsec.com.br/api'
    CHROME_DRIVER_PATH = '/opt/loboguara/bin/chromedriver'
    GOOGLE_CHROME_PATH = '/opt/loboguara/bin/google-chrome'
    FFUF_PATH = '/opt/loboguara/bin/ffuf'
    SUBFINDER_PATH = '/opt/loboguara/bin/subfinder'
    LOG_LEVEL = 'ERROR'
    LOG_FILE = '/opt/loboguara/logs/loboguara.log'
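The SECRET_KEY placeholder should be replaced with a long random value rather than a memorable phrase. One way to produce one, sketched here with Python's standard `secrets` module, is shown below. The 32-byte length is an assumption chosen for illustration, and `generate_secret_key` is a hypothetical helper, not part of the Lobo Guará installer.

```python
import secrets


def generate_secret_key(num_bytes: int = 32) -> str:
    """Return a random hex string suitable for pasting into SECRET_KEY."""
    # token_hex returns two hex characters per byte of randomness.
    return secrets.token_hex(num_bytes)


if __name__ == "__main__":
    print(generate_secret_key())
```

Run it once, paste the printed value into config.py, and keep that file out of version control.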

sudo chmod +x ./install.sh

sudo ./install.sh

sudo -u loboguara /opt/loboguara/start.sh

Access the URL below to register the Lobo Guará Super Admin

http://your_address:7405/admin

Access the Lobo Guará platform online: https://loboguara.olivsec.com.br/

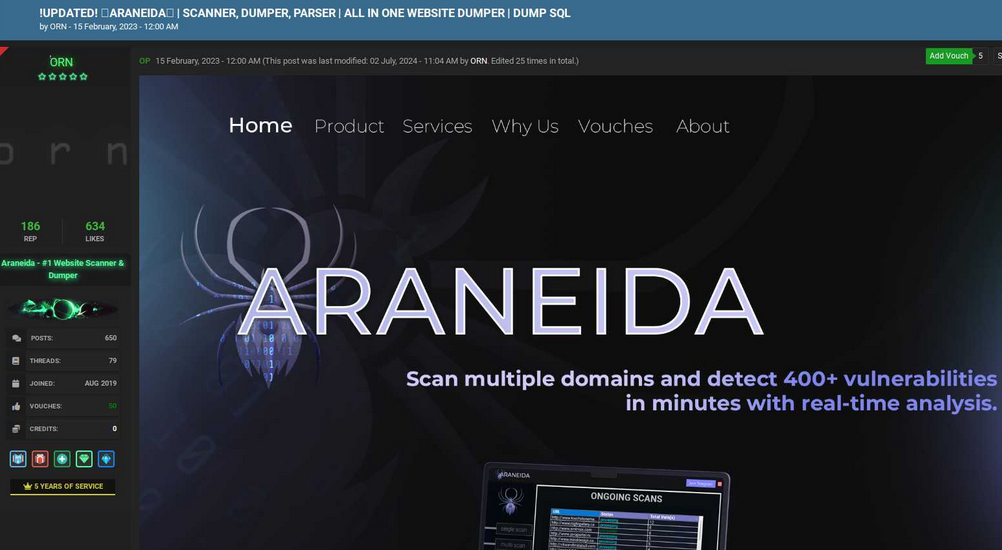

Cybercriminals are selling hundreds of thousands of credential sets stolen with the help of a cracked version of Acunetix, a powerful commercial web app vulnerability scanner, new research finds. The cracked software is being resold as a cloud-based attack tool by at least two different services, one of which KrebsOnSecurity traced to an information technology firm based in Turkey.

Araneida Scanner.

Cyber threat analysts at Silent Push said they recently received reports from a partner organization that identified an aggressive scanning effort against their website using an Internet address previously associated with a campaign by FIN7, a notorious Russia-based hacking group.

But on closer inspection they discovered the address contained an HTML title of “Araneida Customer Panel,” and found they could search on that text string to find dozens of unique addresses hosting the same service.

It soon became apparent that Araneida was being resold as a cloud-based service using a cracked version of Acunetix, allowing paying customers to conduct offensive reconnaissance on potential target websites, scrape user data, and find vulnerabilities for exploitation.

Silent Push also learned Araneida bundles its service with a robust proxy offering, so that customer scans appear to come from Internet addresses that are randomly selected from a large pool of available traffic relays.

The makers of Acunetix, Texas-based application security vendor Invicti Security, confirmed Silent Push’s findings, saying someone had figured out how to crack the free trial version of the software so that it runs without a valid license key.

“We have been playing cat and mouse for a while with these guys,” said Matt Sciberras, chief information security officer at Invicti.

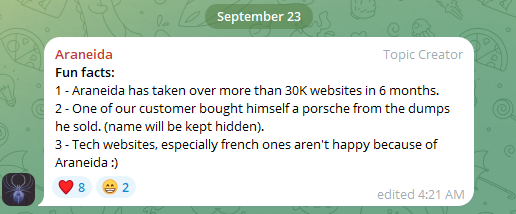

Silent Push said Araneida is being advertised by an eponymous user on multiple cybercrime forums. The service’s Telegram channel boasts nearly 500 subscribers and explains how to use the tool for malicious purposes.

In a “Fun Facts” list posted to the channel in late September, Araneida said their service was used to take over more than 30,000 websites in just six months, and that one customer used it to buy a Porsche with the payment card data (“dumps”) they sold.

Araneida Scanner’s Telegram channel bragging about how customers are using the service for cybercrime.

“They are constantly bragging with their community about the crimes that are being committed, how it’s making criminals money,” said Zach Edwards, a senior threat researcher at Silent Push. “They are also selling bulk data and dumps which appear to have been acquired with this tool or due to vulnerabilities found with the tool.”

Silent Push also found a cracked version of Acunetix was powering at least 20 instances of a similar cloud-based vulnerability testing service catering to Mandarin speakers, but they were unable to find any apparently related sales threads about them on the dark web.

Rumors of a cracked version of Acunetix being used by attackers surfaced in June 2023 on Twitter/X, when researchers first posited a connection between observed scanning activity and Araneida.

According to an August 2023 report (PDF) from the U.S. Department of Health and Human Services (HHS), Acunetix (presumably a cracked version) is among several tools used by APT 41, a prolific Chinese state-sponsored hacking group.

Silent Push notes that the website where Araneida is being sold — araneida[.]co — first came online in February 2023. But a review of this Araneida nickname on the cybercrime forums shows they have been active in the criminal hacking scene since at least 2018.

A search in the threat intelligence platform Intel 471 shows a user by the name Araneida promoted the scanner on two cybercrime forums since 2022, including Breached and Nulled. In 2022, Araneida told fellow Breached members they could be reached on Discord at the username “Ornie#9811.”

According to Intel 471, this same Discord account was advertised in 2019 by a person on the cybercrime forum Cracked who used the monikers “ORN” and “ori0n.” The user “ori0n” mentioned in several posts that they could be reached on Telegram at the username “@sirorny.”

Orn advertising Araneida Scanner in Feb. 2023 on the forum Cracked. Image: Ke-la.com.

The Sirorny Telegram identity also was referenced as a point of contact for a current user on the cybercrime forum Nulled who is selling website development services, and who references araneida[.]co as one of their projects. That user, “Exorn,” has posts dating back to August 2018.

In early 2020, Exorn promoted a website called “orndorks[.]com,” which they described as a service for automating the scanning for web-based vulnerabilities. A passive DNS lookup on this domain at DomainTools.com shows that its email records pointed to the address ori0nbusiness@protonmail.com.

Constella Intelligence, a company that tracks information exposed in data breaches, finds this email address was used to register an account at Breachforums in July 2024 under the nickname “Ornie.” Constella also finds the same email registered at the website netguard[.]codes in 2021 using the password “ceza2003” [full disclosure: Constella is currently an advertiser on KrebsOnSecurity].

A search on the password ceza2003 in Constella finds roughly a dozen email addresses that used it in an exposed data breach, most of them featuring some variation on the name “altugsara,” including altugsara321@gmail.com. Constella further finds altugsara321@gmail.com was used to create an account at the cybercrime community RaidForums under the username “ori0n,” from an Internet address in Istanbul.

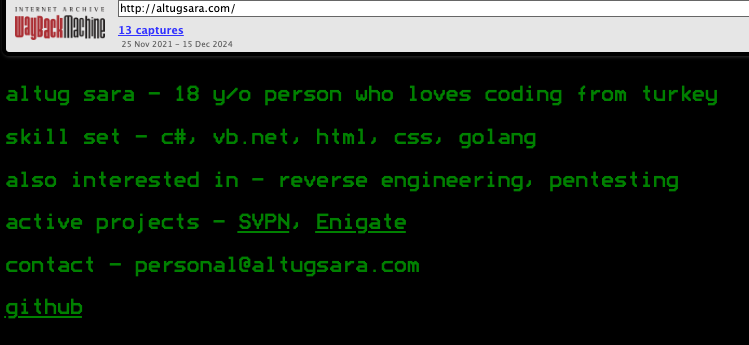

According to DomainTools, altugsara321@gmail.com was used in 2020 to register the domain name altugsara[.]com. Archive.org’s history for that domain shows that in 2021 it featured a website for a then 18-year-old Altuğ Şara from Ankara, Turkey.

Archive.org’s recollection of what altugsara dot com looked like in 2021.

LinkedIn finds this same altugsara[.]com domain listed in the “contact info” section of a profile for an Altug Sara from Ankara, who says he has worked the past two years as a senior software developer for a Turkish IT firm called Bilitro Yazilim.

Neither Altug Sara nor Bilitro Yazilim responded to requests for comment.

Invicti’s website states that it has offices in Ankara, but the company’s CEO said none of their employees recognized either name.

“We do have a small team in Ankara, but as far as I know we have no connection to the individual other than the fact that they are also in Ankara,” Invicti CEO Neil Roseman told KrebsOnSecurity.

Researchers at Silent Push say despite Araneida using a seemingly endless supply of proxies to mask the true location of its users, it is a fairly “noisy” scanner that will kick off a large volume of requests to various API endpoints, and make requests to random URLs associated with different content management systems.

What’s more, the cracked version of Acunetix being resold to cybercriminals invokes legacy Acunetix SSL certificates on active control panels, which Silent Push says provides a solid pivot for finding some of this infrastructure, particularly from the Chinese threat actors.
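For defenders, the "noisy" behavior described above can be approximated with a simple log heuristic. The sketch below is illustrative only: the CMS path markers, the window size and the threshold are assumptions chosen for the example, not indicators published by Silent Push. It groups requests by time window rather than by client IP, since the scanner is said to rotate through many proxies.

```python
from collections import defaultdict

# Hypothetical path markers for a few content management systems.
CMS_MARKERS = {
    "wordpress": ("/wp-login.php", "/wp-admin", "/xmlrpc.php"),
    "joomla": ("/administrator/",),
    "drupal": ("/user/login", "/core/install.php"),
    "magento": ("/downloader/", "/index.php/admin"),
}


def cms_families_probed(paths):
    """Return the set of CMS families whose marker paths appear in `paths`."""
    hit = set()
    for path in paths:
        for family, markers in CMS_MARKERS.items():
            if any(path.startswith(marker) for marker in markers):
                hit.add(family)
    return hit


def noisy_windows(requests, window_seconds=60, min_families=3):
    """Flag time windows in which requests touch several unrelated CMSs.

    `requests` is an iterable of (unix_timestamp, url_path) pairs.
    Returns a list of (window_start, sorted_family_names) tuples.
    """
    buckets = defaultdict(list)
    for ts, path in requests:
        buckets[int(ts // window_seconds)].append(path)

    flagged = []
    for bucket, paths in sorted(buckets.items()):
        families = cms_families_probed(paths)
        if len(families) >= min_families:
            flagged.append((bucket * window_seconds, sorted(families)))
    return flagged
```

A single site normally runs one CMS, so a burst of requests for login paths belonging to three or more different systems is a reasonable signal to investigate, though it will not distinguish this scanner from other automated tools.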

Further reading: Silent Push’s research on Araneida Scanner.

secator is a task and workflow runner used for security assessments. It supports dozens of well-known security tools and it is designed to improve productivity for pentesters and security researchers.

- Curated list of commands
- Unified input options
- Unified output schema
- CLI and library usage
- Distributed options with Celery
- Complexity from simple tasks to complex workflows

secator integrates the following tools:

| Name | Description | Category |
|---|---|---|
| httpx | Fast HTTP prober. | http |
| cariddi | Fast crawler and endpoint secrets / api keys / tokens matcher. | http/crawler |
| gau | Offline URL crawler (Alien Vault, The Wayback Machine, Common Crawl, URLScan). | http/crawler |
| gospider | Fast web spider written in Go. | http/crawler |
| katana | Next-generation crawling and spidering framework. | http/crawler |
| dirsearch | Web path discovery. | http/fuzzer |
| feroxbuster | Simple, fast, recursive content discovery tool written in Rust. | http/fuzzer |
| ffuf | Fast web fuzzer written in Go. | http/fuzzer |
| h8mail | Email OSINT and breach hunting tool. | osint |
| dnsx | Fast and multi-purpose DNS toolkit designed for running DNS queries. | recon/dns |
| dnsxbrute | Fast and multi-purpose DNS toolkit designed for running DNS queries (bruteforce mode). | recon/dns |
| subfinder | Fast subdomain finder. | recon/dns |
| fping | Find alive hosts on local networks. | recon/ip |
| mapcidr | Expand CIDR ranges into IPs. | recon/ip |
| naabu | Fast port discovery tool. | recon/port |
| maigret | Hunt for user accounts across many websites. | recon/user |
| gf | A wrapper around grep to avoid typing common patterns. | tagger |
| grype | A vulnerability scanner for container images and filesystems. | vuln/code |
| dalfox | Powerful XSS scanning tool and parameter analyzer. | vuln/http |
| msfconsole | CLI to access and work with the Metasploit Framework. | vuln/http |
| wpscan | WordPress Security Scanner. | vuln/multi |
| nmap | Vulnerability scanner using NSE scripts. | vuln/multi |
| nuclei | Fast and customisable vulnerability scanner based on simple YAML based DSL. | vuln/multi |
| searchsploit | Exploit searcher. | exploit/search |

Feel free to request new tools by opening an issue, but please check that the tool complies with our selection criteria before doing so. If it doesn't but you still want to integrate it into secator, you can plug it in (see the dev guide).

pipx install secator

pip install secator

wget -O - https://raw.githubusercontent.com/freelabz/secator/main/scripts/install.sh | sh

docker run -it --rm --net=host -v ~/.secator:/root/.secator freelabz/secator --help

alias secator="docker run -it --rm --net=host -v ~/.secator:/root/.secator freelabz/secator"

secator --help

git clone https://github.com/freelabz/secator

cd secator

docker-compose up -d

docker-compose exec secator secator --help

Note: If you chose the Bash, Docker or Docker Compose installation methods, you can skip the next sections and go straight to Usage.

secator uses external tools, so you might need to install languages used by those tools assuming they are not already installed on your system.

We provide utilities to install required languages if you don't manage them externally:

secator install langs go

secator install langs ruby

secator does not install any of the external tools it supports by default.

We provide utilities to install or update each supported tool which should work on all systems supporting apt:

secator install tools

secator install tools <TOOL_NAME>

secator install tools httpx

Please make sure you are using the latest available versions for each tool before you run secator or you might run into parsing / formatting issues.

secator comes installed with the minimum amount of dependencies.

There are several addons available for secator:

secator install addons worker

secator install addons google

secator install addons mongodb

secator install addons redis

secator install addons dev

secator install addons trace

secator install addons build

secator makes remote API calls to https://cve.circl.lu/ to get in-depth information about the CVEs it encounters. We provide a subcommand to download all known CVEs locally so that future lookups are made from disk instead:

secator install cves

To figure out which languages or tools are installed on your system (along with their version):

secator health

secator --help

Run a fuzzing task (ffuf):

secator x ffuf http://testphp.vulnweb.com/FUZZ

Run a url crawl workflow:

secator w url_crawl http://testphp.vulnweb.com

Run a host scan:

secator s host mydomain.com

and more... to list all tasks / workflows / scans that you can use:

secator x --help

secator w --help

secator s --help

To go deeper with secator, check out:

* Our complete documentation
* Our getting started tutorial video
* Our Medium post
* Follow us on social media: @freelabz on Twitter and @FreeLabz on YouTube

Makes an LKM rootkit visible again.

It involves getting the memory address of a rootkit's "show_module" function, for example, and using that to call it, adding it back to lsmod, making it possible to remove an LKM rootkit.

We can obtain the function address in very simple kernels using /sys/kernel/tracing/available_filter_functions_addrs, however, it is only available from kernel 6.5x onwards.

An alternative to this is to scan the kernel memory, and later add it to lsmod again, so it can be removed.

In summary, this LKM abuses the ability of some LKM rootkits to make themselves visible again.

Note: There is another trick for removing/defusing an LKM rootkit, but it will be covered in research that has yet to be published.

Evade EDRs the simple way, by not touching any of the APIs they hook.

I've noticed that most EDRs fail to scan scripting files, treating them merely as text files. While this might be unfortunate for them, it's an opportunity for us to profit.

Flashy methods like residing in memory or thread injection are heavily monitored. Without a binary signed by a valid Certificate Authority, execution is nearly impossible.

Enter BYOSI (Bring Your Own Scripting Interpreter). Every scripting interpreter is signed by its creator, with each certificate being valid. Testing in a live environment revealed surprising results: a highly signatured PHP script from this repository not only ran on systems monitored by CrowdStrike and Trellix but also established an external connection without triggering any EDR detections. EDRs typically overlook script files, focusing instead on binaries for implant delivery. They're configured to detect high entropy or suspicious sections in binaries, not simple scripts.

This attack method capitalizes on that oversight for significant profit. The PowerShell script's steps mirror what a developer might do when first entering an environment. Remarkably, just four lines of PowerShell code completely evade EDR detection, with Defender/AMSI also blind to it. Adding to the effectiveness, GitHub serves as a trusted deployer.

The PowerShell script achieves EDR/AV evasion through four simple steps (technically 3):

1.) It fetches the PHP archive for Windows and extracts it into a new directory named 'php' within 'C:\Temp'.

2.) The script then proceeds to acquire the implant PHP script or shell, saving it in the same 'C:\Temp\php' directory.

3.) Following this, it executes the implant or shell, utilizing the whitelisted PHP binary (which exempts the binary from most restrictions in place that would prevent the binary from running to begin with.)

With these actions completed, congratulations: you now have an active shell on a Crowdstrike-monitored system. What's particularly amusing is that, if my memory serves me correctly, Sentinel One is unable to scan PHP file types. So, feel free to let your imagination run wild.

I am in no way responsible for the misuse of this. This issue is a major blind spot in EDR protection; I am only bringing it to everyone's attention.

A big thanks to @im4x5yn74x for affectionately giving it the name BYOSI, and helping with the env to test in bringing this attack method to life.

It appears as though MS Defender is now flagging the PHP script as malicious, but still allowing the PowerShell script full execution. So, modify the PHP script.

hello sentinel one :) might want to make sure that you are making links not embed.

The Russia-based cybercrime group dubbed “Fin7,” known for phishing and malware attacks that have cost victim organizations an estimated $3 billion in losses since 2013, was declared dead last year by U.S. authorities. But experts say Fin7 has roared back to life in 2024 — setting up thousands of websites mimicking a range of media and technology companies — with the help of Stark Industries Solutions, a sprawling hosting provider that is a persistent source of cyberattacks against enemies of Russia.

In May 2023, the U.S. attorney for Washington state declared “Fin7 is an entity no more,” after prosecutors secured convictions and prison sentences against three men found to be high-level Fin7 hackers or managers. This was a bold declaration against a group that the U.S. Department of Justice described as a criminal enterprise with more than 70 people organized into distinct business units and teams.

The first signs of Fin7’s revival came in April 2024, when Blackberry wrote about an intrusion at a large automotive firm that began with malware served by a typosquatting attack targeting people searching for a popular free network scanning tool.

Now, researchers at security firm Silent Push say they have devised a way to map out Fin7’s rapidly regrowing cybercrime infrastructure, which includes more than 4,000 hosts that employ a range of exploits, from typosquatting and booby-trapped ads to malicious browser extensions and spearphishing domains.

Silent Push said it found Fin7 domains targeting or spoofing brands including American Express, Affinity Energy, Airtable, Alliant, Android Developer, Asana, Bitwarden, Bloomberg, Cisco (Webex), CNN, Costco, Dropbox, Grammarly, Google, Goto.com, Harvard, Lexis Nexis, Meta, Microsoft 365, Midjourney, Netflix, Paycor, Quickbooks, Quicken, Reuters, Regions Bank Onepass, RuPay, SAP (Ariba), Trezor, Twitter/X, Wall Street Journal, Westlaw, and Zoom, among others.

Zach Edwards, senior threat analyst at Silent Push, said many of the Fin7 domains are innocuous-looking websites for generic businesses that sometimes include text from default website templates (the content on these sites often has nothing to do with the entity’s stated business or mission).

Edwards said Fin7 does this to “age” the domains and to give them a positive or at least benign reputation before they’re eventually converted for use in hosting brand-specific phishing pages.

“It took them six to nine months to ramp up, but ever since January of this year they have been humming, building a giant phishing infrastructure and aging domains,” Edwards said of the cybercrime group.

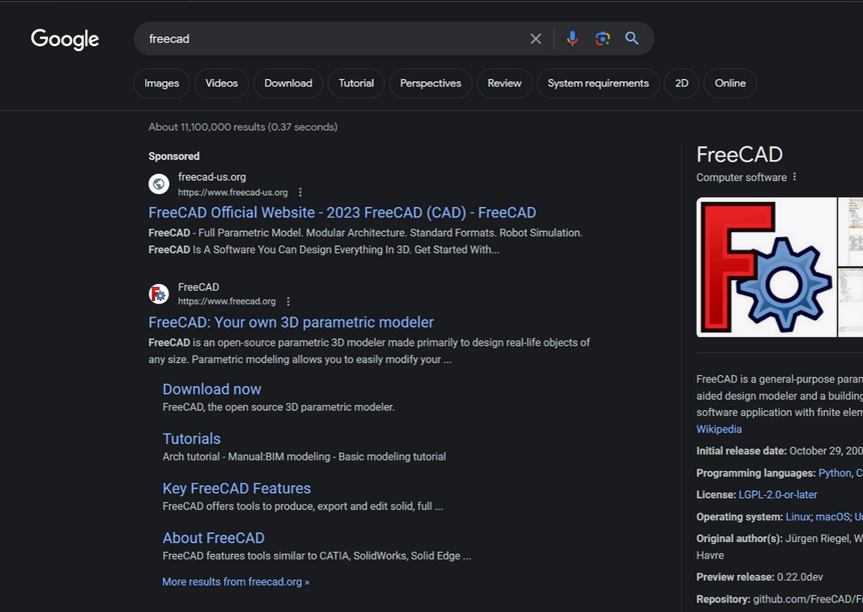

In typosquatting attacks, Fin7 registers domains that are similar to those for popular free software tools. Those look-alike domains are then advertised on Google so that sponsored links to them show up prominently in search results, which is usually above the legitimate source of the software in question.

A malicious site spoofing FreeCAD showed up prominently as a sponsored result in Google search results earlier this year.

According to Silent Push, the software currently being targeted by Fin7 includes 7-zip, PuTTY, ProtectedPDFViewer, AIMP, Notepad++, Advanced IP Scanner, AnyDesk, pgAdmin, AutoDesk, Bitwarden, Rest Proxy, Python, Sublime Text, and Node.js.
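One way to reason about look-alike domains of this kind is string edit distance. The following is a minimal sketch, not Silent Push's method: it compares a candidate domain against a small allowlist and reports near-misses. The allowlist entries and the distance threshold are illustrative assumptions, and the misspelled domains in the usage note are made up.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings (row-by-row dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


# Illustrative allowlist; a real deployment would maintain its own.
LEGIT_DOMAINS = ["7-zip.org", "anydesk.com", "python.org", "nodejs.org"]


def lookalikes(candidate, legit=LEGIT_DOMAINS, max_distance=2):
    """Return allowlisted domains that `candidate` nearly, but not exactly, matches."""
    candidate = candidate.lower()
    return [d for d in legit if 0 < edit_distance(candidate, d) <= max_distance]
```

For example, `lookalikes("anydeskk.com")` returns `["anydesk.com"]`, while the genuine `anydesk.com` returns an empty list. Edit distance alone misses homoglyphs and added words such as "download-", so it is best treated as one signal among several.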

In May 2024, security firm eSentire warned that Fin7 was spotted using sponsored Google ads to serve pop-ups prompting people to download phony browser extensions that install malware. Malwarebytes blogged about a similar campaign in April, but did not attribute the activity to any particular group.

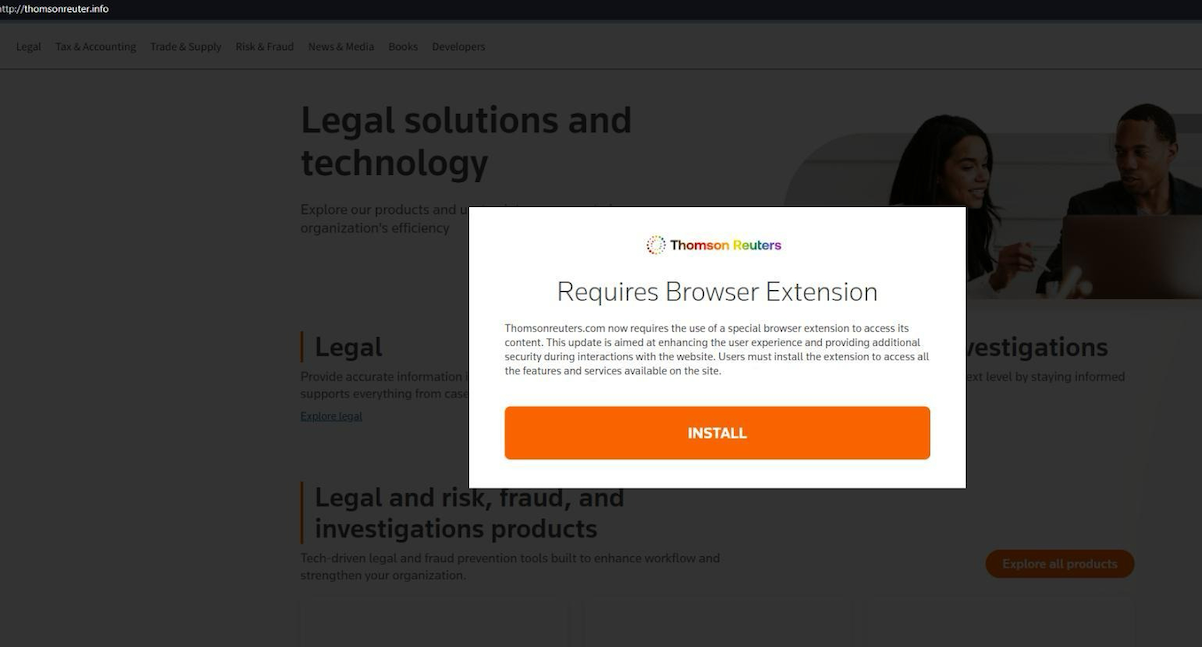

A pop-up at a Thomson Reuters typosquatting domain telling visitors they need to install a browser extension to view the news content.

Edwards said Silent Push discovered the new Fin7 domains after hearing from an organization that was targeted by Fin7 in years past and suspected the group was once again active. Searching for hosts that matched Fin7’s known profile revealed just one active site. But Edwards said that one site pointed to many other Fin7 properties at Stark Industries Solutions, a large hosting provider that materialized just two weeks before Russia invaded Ukraine.

As KrebsOnSecurity wrote in May, Stark Industries Solutions is being used as a staging ground for wave after wave of cyberattacks against Ukraine that have been tied to Russian military and intelligence agencies.

“FIN7 rents a large amount of dedicated IP on Stark Industries,” Edwards said. “Our analysts have discovered numerous Stark Industries IPs that are solely dedicated to hosting FIN7 infrastructure.”

Fin7 once famously operated behind fake cybersecurity companies — with names like Combi Security and Bastion Secure — which they used for hiring security experts to aid in ransomware attacks. One of the new Fin7 domains identified by Silent Push is cybercloudsec[.]com, which promises to “grow your business with our IT, cyber security and cloud solutions.”

The fake Fin7 security firm Cybercloudsec.

Like other phishing groups, Fin7 seizes on current events, and at the moment it is targeting tourists visiting France for the Summer Olympics later this month. Among the new Fin7 domains Silent Push found are several sites phishing people seeking tickets at the Louvre.

“We believe this research makes it clear that Fin7 is back and scaling up quickly,” Edwards said. “It’s our hope that the law enforcement community takes notice of this and puts Fin7 back on their radar for additional enforcement actions, and that quite a few of our competitors will be able to take this pool and expand into all or a good chunk of their infrastructure.”

Further reading:

Stark Industries Solutions: An Iron Hammer in the Cloud.

A 2022 deep dive on Fin7 from the Swiss threat intelligence firm Prodaft (PDF).

Reconnaissance is the first phase of penetration testing, which means gathering information before any real attacks are planned. Ashok is an incredibly fast recon tool for penetration testers, specially designed for the reconnaissance phase. In Ashok-v1.1 you can find the advanced Google dorker and Wayback crawling machine.

- Wayback Crawler Machine

- Google Dorking without limits

- Github Information Grabbing

- Subdomain Identifier

- Cms/Technology Detector With Custom Headers

~> git clone https://github.com/ankitdobhal/Ashok

~> cd Ashok

~> python3.7 -m pip install -r requirements.txt

A detailed usage guide is available in the Usage section of the Wiki.

An index of options is given below:

Ashok can be launched using a lightweight Python3.8-Alpine Docker image.

$ docker pull powerexploit/ashok-v1.2

$ docker container run -it powerexploit/ashok-v1.2 --help

Retrieves relevant subdomains for the target website and consolidates them into a whitelist. These subdomains can be utilized during the scraping process.

Site-wide Link Discovery:

Collects all links throughout the website based on the provided whitelist and the specified max_depth.

Form and Input Extraction:

Identifies all forms and inputs found within the extracted links, generating a JSON output. This JSON output serves as a foundation for leveraging the XSS scanning capability of the tool.
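To make the form and input extraction step concrete, here is a minimal sketch using only Python's standard library. It is not X-Recon's implementation; the class name, function name and the JSON field names (`action`, `method`, `inputs`) are assumptions chosen for illustration.

```python
import json
from html.parser import HTMLParser


class FormExtractor(HTMLParser):
    """Collect every <form> and the input-like elements nested inside it."""

    def __init__(self):
        super().__init__()
        self.forms = []
        self._current = None  # the form currently being parsed, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self._current = {
                "action": attrs.get("action") or "",
                "method": (attrs.get("method") or "get").lower(),
                "inputs": [],
            }
            self.forms.append(self._current)
        elif tag in ("input", "textarea", "select") and self._current is not None:
            self._current["inputs"].append({
                "name": attrs.get("name") or "",
                "type": attrs.get("type") or tag,
            })

    def handle_endtag(self, tag):
        if tag == "form":
            self._current = None


def extract_forms(html: str):
    """Return a JSON-serialisable list describing the forms found in `html`."""
    parser = FormExtractor()
    parser.feed(html)
    return parser.forms


if __name__ == "__main__":
    page = '<form action="/search" method="POST"><input type="text" name="q"></form>'
    print(json.dumps(extract_forms(page), indent=2))
```

Each entry records where the form submits and which named fields it exposes, which is the information a later scanning stage would need in order to decide what to test.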

XSS Scanning:

Note:

The scanning functionality does not currently work on SPA (Single Page Application) web applications, and we have only tested it on websites developed with PHP, where it yielded remarkable results. We plan to add SPA support to the tool in the future.

Note:

This tool maintains an up-to-date list of file extensions that it skips during the exploration process. The default list includes common file types such as images, stylesheets, and scripts (".css",".js",".mp4",".zip","png",".svg",".jpeg",".webp",".jpg",".gif"). You can customize this list to better suit your needs by editing the setting.json file.
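As a rough illustration of how such a skip list can be applied while crawling, the sketch below checks a URL's path extension against a set. This is an assumption about the approach rather than X-Recon's actual code; the function name is hypothetical, and the set normalises the `"png"` entry to `".png"` so that every entry carries a leading dot.

```python
import posixpath
from urllib.parse import urlparse

# Mirrors the default list above, with "png" normalised to ".png" (an assumption).
SKIPPED_EXTENSIONS = {
    ".css", ".js", ".mp4", ".zip", ".png",
    ".svg", ".jpeg", ".webp", ".jpg", ".gif",
}


def should_skip(url: str, skipped=SKIPPED_EXTENSIONS) -> bool:
    """Return True when the URL path ends in one of the skipped extensions."""
    path = urlparse(url).path                      # drops query string and fragment
    extension = posixpath.splitext(path)[1].lower()
    return extension in skipped
```

Parsing the URL first means a query string such as `?v=2` does not hide the extension, and lower-casing catches paths like `/IMG/LOGO.PNG`.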

$ git clone https://github.com/joshkar/X-Recon

$ cd X-Recon

$ python3 -m pip install -r requirements.txt

$ python3 xr.py

You can use this address in the Get URL section

http://testphp.vulnweb.com

SherlockChain is a powerful smart contract analysis framework that combines the capabilities of the renowned Slither tool with advanced AI-powered features. Developed by a team of security experts and AI researchers, SherlockChain offers unparalleled insights and vulnerability detection for Solidity, Vyper and Plutus smart contracts.

To install SherlockChain, follow these steps:

git clone https://github.com/0xQuantumCoder/SherlockChain.git

cd SherlockChain

pip install .

SherlockChain's AI integration brings several advanced capabilities to the table:

Natural Language Interaction: Users can interact with SherlockChain using natural language, allowing them to query the tool, request specific analyses, and receive detailed responses.

The --help command in the SherlockChain framework provides a comprehensive overview of all the available options and features. It includes information on:

- Vulnerability Detection: The --detect and --exclude-detectors options allow users to specify which vulnerability detectors to run, including both built-in and AI-powered detectors.
- The --report-format, --report-output, and various --report-* options control how the analysis results are reported, including the ability to generate reports in different formats (JSON, Markdown, SARIF, etc.).
- The --filter-* options enable users to filter the reported issues based on severity, impact, confidence, and other criteria.
- The --ai-* options allow users to configure and control the AI-powered features of SherlockChain, such as prioritizing high-impact vulnerabilities, enabling specific AI detectors, and managing AI model configurations.
- The --truffle and --truffle-build-directory options facilitate the integration of SherlockChain into popular development frameworks like Truffle.

The --help command provides a detailed explanation of each option, its purpose, and how to use it, making it a valuable resource for users to quickly understand and leverage the full capabilities of the SherlockChain framework.

Example usage:

sherlockchain --help

This will display the comprehensive usage guide for the SherlockChain framework, including all available options and their descriptions.

usage: sherlockchain [-h] [--version] [--solc-remaps SOLC_REMAPS] [--solc-settings SOLC_SETTINGS]

[--solc-version SOLC_VERSION] [--truffle] [--truffle-build-directory TRUFFLE_BUILD_DIRECTORY]

[--truffle-config-file TRUFFLE_CONFIG_FILE] [--compile] [--list-detectors]

[--list-detectors-info] [--detect DETECTORS] [--exclude-detectors EXCLUDE_DETECTORS]

[--print-issues] [--json] [--markdown] [--sarif] [--text] [--zip] [--output OUTPUT]

[--filter-paths FILTER_PATHS] [--filter-paths-exclude FILTER_PATHS_EXCLUDE]

[--filter-contracts FILTER_CONTRACTS] [--filter-contracts-exclude FILTER_CONTRACTS_EXCLUDE]

[--filter-severity FILTER_SEVERITY] [--filter-impact FILTER_IMPACT]

[--filter-confidence FILTER_CONFIDENCE] [--filter-check-suicidal]

[--filter-check-upgradeable] [--filter-check-erc20] [--filter-check-erc721]

[--filter-check-reentrancy] [--filter-check-gas-optimization] [--filter-check-code-quality]

[--filter-check-best-practices] [--filter-check-ai-detectors] [--filter-check-all]

[--filter-check-none] [--check-all] [--check-suicidal] [--check-upgradeable]

[--check-erc20] [--check-erc721] [--check-reentrancy] [--check-gas-optimization]

[--check-code-quality] [--check-best-practices] [--check-ai-detectors] [--check-none]

[--check-all-detectors] [--check-all-severity] [--check-all-impact] [--check-all-confidence]

[--check-all-categories] [--check-all-filters] [--check-all-options] [--check-all]

[--check-none] [--report-format {json,markdown,sarif,text,zip}] [--report-output OUTPUT]

[--report-severity REPORT_SEVERITY] [--report-impact REPORT_IMPACT]

[--report-confidence REPORT_CONFIDENCE] [--report-check-suicidal]

[--report-check-upgradeable] [--report-check-erc20] [--report-check-erc721]

[--report-check-reentrancy] [--report-check-gas-optimization] [--report-check-code-quality]

[--report-check-best-practices] [--report-check-ai-detectors] [--report-check-all]

[--report-check-none] [--report-all] [--report-suicidal] [--report-upgradeable]

[--report-erc20] [--report-erc721] [--report-reentrancy] [--report-gas-optimization]

[--report-code-quality] [--report-best-practices] [--report-ai-detectors] [--report-none]

[--report-all-detectors] [--report-all-severity] [--report-all-impact]

[--report-all-confidence] [--report-all-categories] [--report-all-filters]

[--report-all-options] [--report-all] [--report-none] [--ai-enabled] [--ai-disabled]

[--ai-priority-high] [--ai-priority-medium] [--ai-priority-low] [--ai-priority-all]

[--ai-priority-none] [--ai-confidence-high] [--ai-confidence-medium] [--ai-confidence-low]

[--ai-confidence-all] [--ai-confidence-none] [--ai-detectors-all] [--ai-detectors-none]

[--ai-detectors-specific AI_DETECTORS_SPECIFIC] [--ai-detectors-exclude AI_DETECTORS_EXCLUDE]

[--ai-models-path AI_MODELS_PATH] [--ai-models-update] [--ai-models-download]

[--ai-models-list] [--ai-models-info] [--ai-models-version] [--ai-models-check]

[--ai-models-upgrade] [--ai-models-remove] [--ai-models-clean] [--ai-models-reset]

[--ai-models-backup] [--ai-models-restore] [--ai-models-export] [--ai-models-import]

[--ai-models-config AI_MODELS_CONFIG] [--ai-models-config-update] [--ai-models-config-reset]

[--ai-models-config-export] [--ai-models-config-import] [--ai-models-config-list]

[--ai-models-config-info] [--ai-models-config-version] [--ai-models-config-check]

[--ai-models-config-upgrade] [--ai-models-config-remove] [--ai-models-config-clean]

[--ai-models-config-reset] [--ai-models-config-backup] [--ai-models-config-restore]

[--ai-models-config-export] [--ai-models-config-import] [--ai-models-config-path AI_MODELS_CONFIG_PATH]

[--ai-models-config-file AI_MODELS_CONFIG_FILE] [--ai-models-config-url AI_MODELS_CONFIG_URL]

[--ai-models-config-name AI_MODELS_CONFIG_NAME] [--ai-models-config-description AI_MODELS_CONFIG_DESCRIPTION]

[--ai-models-config-version-major AI_MODELS_CONFIG_VERSION_MAJOR]

[--ai-models-config-version-minor AI_MODELS_CONFIG_VERSION_MINOR]

[--ai-models-config-version-patch AI_MODELS_CONFIG_VERSION_PATCH]

[--ai-models-config-author AI_MODELS_CONFIG_AUTHOR]

[--ai-models-config-license AI_MODELS_CONFIG_LICENSE]

[--ai-models-config-url-documentation AI_MODELS_CONFIG_URL_DOCUMENTATION]

[--ai-models-config-url-source AI_MODELS_CONFIG_URL_SOURCE]

[--ai-models-config-url-issues AI_MODELS_CONFIG_URL_ISSUES]

[--ai-models-config-url-changelog AI_MODELS_CONFIG_URL_CHANGELOG]

[--ai-models-config-url-support AI_MODELS_CONFIG_URL_SUPPORT]

[--ai-models-config-url-website AI_MODELS_CONFIG_URL_WEBSITE]

[--ai-models-config-url-logo AI_MODELS_CONFIG_URL_LOGO]

[--ai-models-config-url-icon AI_MODELS_CONFIG_URL_ICON]

[--ai-models-config-url-banner AI_MODELS_CONFIG_URL_BANNER]

[--ai-models-config-url-screenshot AI_MODELS_CONFIG_URL_SCREENSHOT]

[--ai-models-config-url-video AI_MODELS_CONFIG_URL_VIDEO]

[--ai-models-config-url-demo AI_MODELS_CONFIG_URL_DEMO]

[--ai-models-config-url-documentation-api AI_MODELS_CONFIG_URL_DOCUMENTATION_API]

[--ai-models-config-url-documentation-user AI_MODELS_CONFIG_URL_DOCUMENTATION_USER]

[--ai-models-config-url-documentation-developer AI_MODELS_CONFIG_URL_DOCUMENTATION_DEVELOPER]

[--ai-models-config-url-documentation-faq AI_MODELS_CONFIG_URL_DOCUMENTATION_FAQ]

[--ai-models-config-url-documentation-tutorial AI_MODELS_CONFIG_URL_DOCUMENTATION_TUTORIAL]

[--ai-models-config-url-documentation-guide AI_MODELS_CONFIG_URL_DOCUMENTATION_GUIDE]

[--ai-models-config-url-documentation-whitepaper AI_MODELS_CONFIG_URL_DOCUMENTATION_WHITEPAPER]

[--ai-models-config-url-documentation-roadmap AI_MODELS_CONFIG_URL_DOCUMENTATION_ROADMAP]

[--ai-models-config-url-documentation-blog AI_MODELS_CONFIG_URL_DOCUMENTATION_BLOG]

[--ai-models-config-url-documentation-community AI_MODELS_CONFIG_URL_DOCUMENTATION_COMMUNITY]

This comprehensive usage guide provides information on all the available options and features of the SherlockChain framework, including:

--detect, --exclude-detectors

--report-format, --report-output, --report-*

--filter-*

--ai-*

--truffle, --truffle-build-directory

--compile, --list-detectors, --list-detectors-info

By reviewing this comprehensive usage guide, you can quickly understand how to leverage the full capabilities of the SherlockChain framework to analyze your smart contracts and identify potential vulnerabilities. This will help you ensure the security and reliability of your DeFi protocol before deployment.

## Detectors

| Num | Detector | What it Detects | Impact | Confidence |
|---|---|---|---|---|
| 1 | ai-anomaly-detection | Detect anomalous code patterns using advanced AI models | High | High |
| 2 | ai-vulnerability-prediction | Predict potential vulnerabilities using machine learning | High | High |
| 3 | ai-code-optimization | Suggest code optimizations based on AI-driven analysis | Medium | High |
| 4 | ai-contract-complexity | Assess contract complexity and maintainability using AI | Medium | High |
| 5 | ai-gas-optimization | Identify gas-optimizing opportunities with AI | Medium | Medium |

It can be difficult for security teams to continuously monitor all on-premises servers due to budget and resource constraints. Signature-based antivirus alone is insufficient, as modern malware uses various obfuscation techniques. Server admins may lack historical visibility into security events across all servers. Determining which systems are compromised and which backups are safe to restore from during incidents is challenging without centralized monitoring and alerting. It is onerous for server admins to set up and maintain additional security tools for advanced threat detection. A rapid mean time to detect and remediate infections is critical but difficult to achieve without the right automated solution.

Determining which backup image is safe to restore from during incidents without comprehensive threat intelligence is another hard problem. Even if backups are available, without knowing when exactly a system got compromised, it is risky to blindly restore from backups. This increases the chance of restoring malware and losing even more valuable data and systems during incident response. There is a need for an automated solution that can pinpoint the timeline of infiltration and recommend safe backups for restoration.
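The selection logic described here can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the solution's actual code: it assumes each recovery point has already been scanned and reduced to a (timestamp, infected) pair, and the function name is hypothetical.

```python
from datetime import datetime


def latest_safe_recovery_point(scan_results):
    """Pick the newest clean recovery point taken before the first infected one.

    `scan_results` is an iterable of (taken_at, infected) pairs, where
    `taken_at` is a datetime and `infected` is a bool. Returns a datetime,
    or None if no clean recovery point precedes the first infection.
    """
    last_clean = None
    for taken_at, infected in sorted(scan_results, key=lambda r: r[0]):
        if infected:
            # Anything at or after this point is treated as untrustworthy,
            # even if a later scan happens to come back clean.
            return last_clean
        last_clean = taken_at
    return last_clean


if __name__ == "__main__":
    results = [
        (datetime(2024, 1, 1), False),
        (datetime(2024, 1, 2), False),
        (datetime(2024, 1, 3), True),
        (datetime(2024, 1, 4), False),
    ]
    print(latest_safe_recovery_point(results))
```

Stopping at the first infected recovery point is a deliberately conservative choice: a clean result after a known compromise may simply mean the malware was not detected in that snapshot.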

The solution leverages AWS Elastic Disaster Recovery (AWS DRS), Amazon GuardDuty and AWS Security Hub to address the challenges of malware detection for on-premises servers.

This combination of services provides a cost-effective way to continuously monitor on-premises servers for malware without impacting performance. It also helps determine safe point-in-time backups for restoration by identifying the timeline of compromise through centralized threat analytics.

AWS Elastic Disaster Recovery (AWS DRS) minimizes downtime and data loss with fast, reliable recovery of on-premises and cloud-based applications using affordable storage, minimal compute, and point-in-time recovery.

Amazon GuardDuty is a threat detection service that continuously monitors your AWS accounts and workloads for malicious activity and delivers detailed security findings for visibility and remediation.

AWS Security Hub is a cloud security posture management (CSPM) service that performs security best practice checks, aggregates alerts, and enables automated remediation.

The Malware Scan solution assumes on-premises servers are already being replicated with AWS DRS, and that Amazon GuardDuty and AWS Security Hub are enabled. The CDK stack in this repository will only deploy the boxes labelled as DRS Malware Scan in the architecture diagram.

AWS Security Hub enabled. If not, please check this documentation.

Warning

Currently, Amazon GuardDuty Malware scan does not support EBS volumes encrypted with EBS-managed keys. If you want to use this solution to scan your on-prem (or other-cloud) servers replicated with DRS, you need to set up DRS replication with your own encryption key in KMS. If you are currently using EBS-managed keys with your replicating servers, you can change the encryption settings to use your own KMS key in the DRS console.

Create a Cloud9 environment with an Ubuntu image (at least t3.small for better performance) in your AWS account. Open your Cloud9 environment and clone the code in this repository. Note: Amazon Linux 2 has node v16, which has not been supported since 2023-09-11.

git clone https://github.com/aws-samples/drs-malware-scan

cd drs-malware-scan

sh check_loggroup.sh

Deploy the CDK stack by running the following commands in the Cloud9 terminal and confirm the deployment:

npm install

cdk bootstrap

cdk deploy --all

Note

The solution is made of 2 stacks:

* DrsMalwareScanStack: it deploys all the resources needed for the malware scanning feature. This stack is mandatory. If you want to deploy only this stack, you can run cdk deploy DrsMalwareScanStack

* ScanReportStack: it deploys the resources needed for reporting (AWS Lambda and Amazon S3). This stack is optional. If you want to deploy only this stack, you can run cdk deploy ScanReportStack

If you want to deploy both stacks you can run cdk deploy --all

All Lambda functions route logs to Amazon CloudWatch. You can verify the execution of each function by inspecting its CloudWatch log group; look for the /aws/lambda/DrsMalwareScanStack-* pattern.

The duration of the malware scan operation depends on the number and size of the servers/volumes to scan. When Amazon GuardDuty finds malware, it generates a Security Hub finding: the solution intercepts this event and runs the $StackName-SecurityHubAnnotations Lambda function to augment the Security Hub finding with a note containing the name(s) of the DRS source server(s) with malware.
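The annotation step can be pictured as building a note and attaching it to the finding. The sketch below is not the code of the SecurityHubAnnotations function; it only assembles a request in the shape accepted by the Security Hub `BatchUpdateFindings` API, and the `UpdatedBy` value, note wording, and sample identifiers are assumptions.

```python
from typing import Any, Dict, List


def build_note_request(
    finding_id: str, product_arn: str, server_names: List[str]
) -> Dict[str, Any]:
    """Assemble keyword arguments for a Security Hub BatchUpdateFindings call.

    The note lists the DRS source servers associated with the finding.
    """
    note_text = "DRS source server(s) with malware: " + ", ".join(sorted(server_names))
    return {
        "FindingIdentifiers": [{"Id": finding_id, "ProductArn": product_arn}],
        # "drs-malware-scan" is a placeholder author label (assumption).
        "Note": {"Text": note_text, "UpdatedBy": "drs-malware-scan"},
    }


if __name__ == "__main__":
    request = build_note_request(
        "example-finding-id",
        "arn:aws:securityhub:us-east-1::product/aws/guardduty",
        ["web02", "web01"],
    )
    print(request["Note"]["Text"])
    # With credentials configured, the request could be sent with:
    #   import boto3
    #   boto3.client("securityhub").batch_update_findings(**request)
```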

The SQS FIFO queues can be monitored using the Messages available and Messages in flight metrics from the Amazon SQS console.
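The same two numbers can also be read programmatically. This is a minimal sketch that assumes a boto3-style SQS client is passed in; it relies on the `ApproximateNumberOfMessages` and `ApproximateNumberOfMessagesNotVisible` queue attributes, which correspond to the available and in-flight counts. The queue URL in the usage comment is a placeholder.

```python
from typing import Any, Dict


def queue_depth(sqs_client: Any, queue_url: str) -> Dict[str, int]:
    """Return approximate counts of available and in-flight messages."""
    response = sqs_client.get_queue_attributes(
        QueueUrl=queue_url,
        AttributeNames=[
            "ApproximateNumberOfMessages",
            "ApproximateNumberOfMessagesNotVisible",
        ],
    )
    attrs = response.get("Attributes", {})
    return {
        "available": int(attrs.get("ApproximateNumberOfMessages", 0)),
        "in_flight": int(attrs.get("ApproximateNumberOfMessagesNotVisible", 0)),
    }


# Example usage (requires AWS credentials and a real queue URL):
#   import boto3
#   print(queue_depth(boto3.client("sqs"), "https://sqs.<region>.amazonaws.com/<account>/<queue>.fifo"))
```

Passing the client in as a parameter keeps the function easy to exercise with a stub when no AWS account is available.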

The DRS Volume Annotations DynamoDB table keeps track of the status of each malware scan operation.

Amazon GuardDuty has documented reasons to skip scan operations. For further information please check Reasons for skipping resource during malware scan

To analyze logs from Amazon GuardDuty Malware scan operations, check the /aws/guardduty/malware-scan-events Amazon CloudWatch log group. The default log retention period for this log group is 90 days, after which the log events are deleted automatically.

Run the following commands in your terminal:

cdk destroy --all

(Optional) Delete the CloudWatch log groups associated with Lambda Functions.

For the purpose of this analysis, we assume a fictitious example scenario. The following cost estimates are based on services located in the US East (N. Virginia) region (us-east-1).

| Monthly Cost | Total Cost for 12 Months |
|---|---|
| 171.22 USD | 2,054.74 USD |

| Service Name | Description | Monthly Cost (USD) |
|---|---|---|
| AWS Elastic Disaster Recovery | 2 Source Servers / 1 Replication Server / 4 disks / 100GB / 30 days of EBS Snapshot Retention Period | 71.41 |
| Amazon GuardDuty | 3 TB Malware Scanned/Month | 94.56 |
| Amazon DynamoDB | 100MB / 1 Read/Second / 1 Write/Second | 3.65 |
| AWS Security Hub | 1 Account / 100 Security Checks / 1000 Findings Ingested | 0.10 |
| Amazon EventBridge | 1M custom events | 1.00 |
| Amazon CloudWatch | 1GB ingested/month | 0.50 |
| AWS Lambda | 5 ARM Lambda Functions - 128MB / 10secs | 0.00 |
| Amazon SQS | 2 SQS FIFO | 0.00 |
| Total | | 171.22 |

Note: The figures presented here are estimates based on the assumptions described above, derived from the AWS Pricing Calculator. For further details, please check this pricing calculator as a reference. You can adjust the service configuration in the referenced calculator to make your own estimate. This estimate does not include potential taxes or additional charges that might be applicable. Actual fees can vary based on usage and on any additional services not covered in this analysis. For critical environments, it is advisable to include a Business Support Plan (not considered in the estimate).
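The arithmetic behind the estimate can be rechecked with a few lines of Python. The per-service values are copied from the table; everything else is illustrative. Summing them reproduces the 171.22 USD monthly total. Multiplying that rounded total by 12 gives 2,054.64 USD, slightly below the 2,054.74 USD shown in the summary, a gap that presumably comes from rounding of the per-service figures in the calculator.

```python
from decimal import Decimal

# Monthly cost per service in USD, as listed in the table.
MONTHLY_COSTS_USD = {
    "AWS Elastic Disaster Recovery": Decimal("71.41"),
    "Amazon GuardDuty": Decimal("94.56"),
    "Amazon DynamoDB": Decimal("3.65"),
    "AWS Security Hub": Decimal("0.10"),
    "Amazon EventBridge": Decimal("1.00"),
    "Amazon CloudWatch": Decimal("0.50"),
    "AWS Lambda": Decimal("0.00"),
    "Amazon SQS": Decimal("0.00"),
}

# Decimal avoids binary floating-point rounding when adding currency values.
monthly_total = sum(MONTHLY_COSTS_USD.values(), Decimal("0"))
annual_from_rounded = monthly_total * 12

if __name__ == "__main__":
    print(f"Monthly total: {monthly_total} USD")
    print(f"12 x monthly total: {annual_from_rounded} USD")
```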

See CONTRIBUTING for more information.

Retrieve and display information about active user sessions on remote computers. No admin privileges required.

The tool leverages the remote registry service to query the HKEY_USERS registry hive on the remote computers. It identifies and extracts Security Identifiers (SIDs) associated with active user sessions, and translates these into corresponding usernames, offering insights into who is currently logged in.

If the -CheckAsAdmin switch is provided, it will gather sessions by authenticating to targets where you have local admin access using Invoke-WMIRemoting (which will most likely retrieve more results).

It's important to note that the remote registry service needs to be running on the remote computer for the tool to work effectively. In my tests, if the service is stopped but its Startup type is configured to "Automatic" or "Manual", the service will start automatically on the target computer once queried (this is native behavior), and sessions information will be retrieved. If set to "Disabled" no session information can be retrieved from the target.
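To make the registry-based approach more concrete: the subkeys of HKEY_USERS include built-in entries (such as .DEFAULT and S-1-5-18) alongside the SIDs of logged-on accounts, so the interesting step is filtering. The Python sketch below illustrates only that filtering step and is not the tool's PowerShell code. It assumes that domain and local user SIDs follow the S-1-5-21-... form and that `_Classes` companion keys should be ignored. The sample key names are invented.

```python
import re
from typing import Iterable, List

# Account SIDs issued by a machine or domain: S-1-5-21-<a>-<b>-<c>-<RID>
USER_SID = re.compile(r"^S-1-5-21-\d+-\d+-\d+-\d+$")


def interactive_user_sids(subkey_names: Iterable[str]) -> List[str]:
    """Keep only HKEY_USERS subkeys that look like user account SIDs."""
    return [name for name in subkey_names if USER_SID.match(name)]


if __name__ == "__main__":
    sample_subkeys = [
        ".DEFAULT",
        "S-1-5-18",
        "S-1-5-19",
        "S-1-5-20",
        "S-1-5-21-1111111111-2222222222-3333333333-1104",
        "S-1-5-21-1111111111-2222222222-3333333333-1104_Classes",
    ]
    print(interactive_user_sids(sample_subkeys))
```

Translating the remaining SIDs into usernames requires a lookup against the domain or the local machine, which is outside the scope of this sketch.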

iex(new-object net.webclient).downloadstring('https://raw.githubusercontent.com/Leo4j/Invoke-SessionHunter/main/Invoke-SessionHunter.ps1')

If run without parameters or switches it will retrieve active sessions for all computers in the current domain by querying the registry

Invoke-SessionHunter

Gather sessions by authenticating to targets where you have local admin access

Invoke-SessionHunter -CheckAsAdmin

You can optionally provide credentials in the following format

Invoke-SessionHunter -CheckAsAdmin -UserName "ferrari\Administrator" -Password "P@ssw0rd!"

You can also use the -FailSafe switch, which will direct the tool to proceed if the target remote registry becomes unresponsive.

This works in combination with -Timeout (default: 2); increase the value for slower networks.

Invoke-SessionHunter -FailSafe

Invoke-SessionHunter -FailSafe -Timeout 5

Use the -Match switch to show only targets where you have admin access and a privileged user is logged in

Invoke-SessionHunter -Match

All switches can be combined

Invoke-SessionHunter -CheckAsAdmin -UserName "ferrari\Administrator" -Password "P@ssw0rd!" -FailSafe -Timeout 5 -Match

Invoke-SessionHunter -Domain contoso.local

Invoke-SessionHunter -Targets "DC01,Workstation01.contoso.local"

Invoke-SessionHunter -Targets c:\Users\Public\Documents\targets.txt

Invoke-SessionHunter -Servers

Invoke-SessionHunter -Workstations

Invoke-SessionHunter -Hunt "Administrator"

Invoke-SessionHunter -IncludeLocalHost

Invoke-SessionHunter -RawResults

Note: if a host is not reachable it will hang for a while

Invoke-SessionHunter -NoPortScan

HardeningMeter is an open-source Python tool designed to comprehensively assess the security hardening of binaries and systems. It checks a range of binary exploitation protection mechanisms, including Stack Canary, RELRO, randomizations (ASLR, PIC, PIE), Non-Executable Stack, Fortify, ASAN, and the NX bit. The tool is suitable for all types of binaries and reports the hardening status of each one, identifying those that deserve attention and those with robust security measures. HardeningMeter supports all Linux distributions and machine-readable output: the results can be printed to the screen in a table format or exported to a csv file. (For more information, see the Documentation.md file.)
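As an illustration of what one such check can look like, the sketch below decides whether a binary requests a non-executable stack by reading the GNU_STACK program header from the text output of `readelf -W -l`. This is not HardeningMeter's implementation. It assumes the wide output format (one header per line), and the function name is hypothetical.

```python
from typing import Optional


def stack_is_non_executable(readelf_program_headers: str) -> Optional[bool]:
    """Inspect `readelf -W -l` output for the GNU_STACK header.

    Returns True if the stack segment lacks the execute flag, False if it
    has it, and None if no GNU_STACK header is present.
    """
    for line in readelf_program_headers.splitlines():
        fields = line.split()
        if fields and fields[0] == "GNU_STACK":
            # Layout: type, five numeric columns, flags, alignment.
            # Flags may be split by spaces (e.g. "R E"), so join them.
            flags = "".join(fields[6:-1])
            return "E" not in flags
    return None


if __name__ == "__main__":
    sample = (
        "  GNU_STACK      0x000000 0x0000000000000000 "
        "0x0000000000000000 0x000000 0x000000 RW  0x10"
    )
    print(stack_is_non_executable(sample))
```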

Scan the '/usr/bin' directory, the '/usr/sbin/newusers' file, the system and export the results to a csv file.

python3 HardeningMeter.py -f /bin/cp -s

Before installing HardeningMeter, make sure your machine has the following:

1. readelf and file commands
2. Python 3
3. pip
4. tabulate

pip install tabulate

The very latest developments can be obtained via git.

Clone or download the project files (no compilation nor installation is required)

git clone https://github.com/OfriOuzan/HardeningMeter

Specify the files you want to scan. The argument accepts more than one file, separated by spaces.

Specify the directory you want to scan. The argument takes one directory and scans all ELF files in it recursively.

Specify whether you want to add external checks (False by default).

Prints, in order, only those files that are missing security hardening mechanisms and need extra attention.

Specify if you want to scan the system hardening methods.

Specify if you want to save the results to csv file (results are printed as a table to stdout by default).

HardeningMeter's results are printed as a table and consist of 3 different states:

- (X) - This state indicates that the binary hardening mechanism is disabled.
- (V) - This state indicates that the binary hardening mechanism is enabled.
- (-) - This state indicates that the binary hardening mechanism is not relevant in this particular case.

When the default language on Linux is not English, make sure to add "LC_ALL=C" before calling the script.
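When the script is launched from another Python program rather than from a shell, the same effect can be obtained by setting LC_ALL in the child process environment. The helper below is a hypothetical sketch; the command in the usage comment is the example shown earlier in this section.

```python
import os
from typing import Dict, Mapping, Optional


def c_locale_env(base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with LC_ALL forced to the C locale."""
    env = dict(os.environ if base is None else base)
    env["LC_ALL"] = "C"
    return env


# Example usage:
#   import subprocess
#   subprocess.run(
#       ["python3", "HardeningMeter.py", "-f", "/bin/cp", "-s"],
#       env=c_locale_env(),
#       check=True,
#   )
```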

CrimsonEDR is an open-source project engineered to identify specific malware patterns, offering a tool for honing skills in circumventing Endpoint Detection and Response (EDR). By leveraging diverse detection methods, it empowers users to deepen their understanding of security evasion tactics.

| Detection | Description |
|---|---|
| Direct Syscall | Detects the usage of direct system calls, often employed by malware to bypass traditional API hooks. |
| NTDLL Unhooking | Identifies attempts to unhook functions within the NTDLL library, a common evasion technique. |
| AMSI Patch | Detects modifications to the Anti-Malware Scan Interface (AMSI) through byte-level analysis. |
| ETW Patch | Detects byte-level alterations to Event Tracing for Windows (ETW), commonly manipulated by malware to evade detection. |
| PE Stomping | Identifies instances of PE (Portable Executable) stomping. |
| Reflective PE Loading | Detects the reflective loading of PE files, a technique employed by malware to avoid static analysis. |
| Unbacked Thread Origin | Identifies threads originating from unbacked memory regions, often indicative of malicious activity. |
| Unbacked Thread Start Address | Detects threads with start addresses pointing to unbacked memory, a potential sign of code injection. |
| API hooking | Places a hook on the NtWriteVirtualMemory function to monitor memory modifications. |
| Custom Pattern Search | Allows users to search for specific patterns provided in a JSON file, facilitating the identification of known malware signatures. |
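The "unbacked" detections in the table rest on one idea: a thread start address should normally fall inside a module that was loaded from a file on disk. The sketch below expresses that test in Python over a hypothetical list of module ranges. It is a conceptual illustration only, not CrimsonEDR's code, and the addresses are invented.

```python
from typing import Iterable, Tuple

# (base address, size in bytes) of a loaded, file-backed module
ModuleRange = Tuple[int, int]


def is_unbacked(address: int, modules: Iterable[ModuleRange]) -> bool:
    """Return True if the address lies outside every known module range."""
    return not any(base <= address < base + size for base, size in modules)


if __name__ == "__main__":
    loaded_modules = [
        (0x7FF600000000, 0x20000),   # hypothetical main executable
        (0x7FFB10000000, 0x1F0000),  # hypothetical system DLL
    ]
    print(is_unbacked(0x7FF600001000, loaded_modules))  # inside the executable
    print(is_unbacked(0x000001A2B3C40000, loaded_modules))  # private memory
```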

To get started with CrimsonEDR, follow these steps:

sudo apt-get install gcc-mingw-w64-x86-64

git clone https://github.com/Helixo32/CrimsonEDR

cd CrimsonEDR; chmod +x compile.sh; ./compile.sh

Windows Defender and other antivirus programs may flag the DLL as malicious because it contains the bytes used to verify whether AMSI has been patched. Please whitelist the DLL or temporarily disable your antivirus when using CrimsonEDR to avoid interruptions.

To use CrimsonEDR, follow these steps:

Make sure the ioc.json file is placed in the current directory from which the executable being monitored is launched. For example, if you launch the executable to monitor from C:\Users\admin\, the DLL will look for ioc.json in C:\Users\admin\ioc.json. Currently, ioc.json contains patterns related to msfvenom. You can easily add your own in the following format:

{
  "IOC": [
    ["0x03", "0x4c", "0x24", "0x08", "0x45", "0x39", "0xd1", "0x75"],
    ["0xf1", "0x4c", "0x03", "0x4c", "0x24", "0x08", "0x45", "0x39"],
    ["0x58", "0x44", "0x8b", "0x40", "0x24", "0x49", "0x01", "0xd0"],
    ["0x66", "0x41", "0x8b", "0x0c", "0x48", "0x44", "0x8b", "0x40"],
    ["0x8b", "0x0c", "0x48", "0x44", "0x8b", "0x40", "0x1c", "0x49"],
    ["0x01", "0xc1", "0x38", "0xe0", "0x75", "0xf1", "0x4c", "0x03"],
    ["0x24", "0x49", "0x01", "0xd0", "0x66", "0x41", "0x8b", "0x0c"],
    ["0xe8", "0xcc", "0x00", "0x00", "0x00", "0x41", "0x51", "0x41"]
  ]
}
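To show how patterns in this format can be used, here is a minimal Python sketch that converts each list of hex strings into a byte sequence and looks for it in a buffer. It mirrors the idea of a custom pattern search but is not CrimsonEDR's implementation; the function names and the sample buffer are hypothetical.

```python
import json
from typing import Dict, List


def load_patterns(ioc_json: str) -> List[bytes]:
    """Parse the "IOC" list of hex-string arrays into byte sequences."""
    data = json.loads(ioc_json)
    # int(..., 16) accepts strings with a "0x" prefix such as "0x4c".
    return [bytes(int(b, 16) for b in pattern) for pattern in data["IOC"]]


def find_patterns(buffer: bytes, patterns: List[bytes]) -> Dict[bytes, int]:
    """Map each pattern found in the buffer to the offset of its first match."""
    hits: Dict[bytes, int] = {}
    for pattern in patterns:
        offset = buffer.find(pattern)
        if offset != -1:
            hits[pattern] = offset
    return hits


if __name__ == "__main__":
    ioc_text = '{"IOC": [["0x03", "0x4c", "0x24", "0x08"], ["0xe8", "0xcc", "0x00", "0x00"]]}'
    patterns = load_patterns(ioc_text)
    sample_buffer = b"\x90\x90\x03\x4c\x24\x08\x90"
    for pattern, offset in find_patterns(sample_buffer, patterns).items():
        print(pattern.hex(), "found at offset", offset)
```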

Execute CrimsonEDRPanel.exe with the following arguments:

-d <path_to_dll>: Specifies the path to the CrimsonEDR.dll file.

-p <process_id>: Specifies the Process ID (PID) of the target process where you want to inject the DLL.