Mac users often say, “I don’t have to worry about viruses. I have a Mac!” But that sense of safety is outdated. Macs face real threats today, including scareware and fake antivirus pop‑ups designed specifically for macOS. One of the most infamous examples is the Mac Defender family, which appeared around 2011 under names like “Mac Defender,” “Mac Security,” and “Mac Protector,” luring users with fake security alerts and then installing malicious software.

These scams have long targeted Windows PCs and later expanded to Macs, using similar tactics: bogus scan results, alarming pop-ups, and fake security sites that push users to download “protection” software or pay to remove nonexistent threats. Once installed, these programs can bombard you with persistent warnings, redirect you to unwanted or explicit sites, and may even try to capture your credit card details or other sensitive information under the guise of an urgent upgrade.

In this blog, we’ll take a closer look at how you become a target for these fake antivirus pop‑up ads, how to remove them from your Mac, and practical steps you can take to block them going forward.

Fake antivirus software is malicious software that tricks you into believing your Mac is infected with viruses or security threats when, in fact, it isn’t. These deceptive programs, also known as rogue antivirus or scareware, masquerade as legitimate security tools to manipulate you into taking actions that benefit cybercriminals.

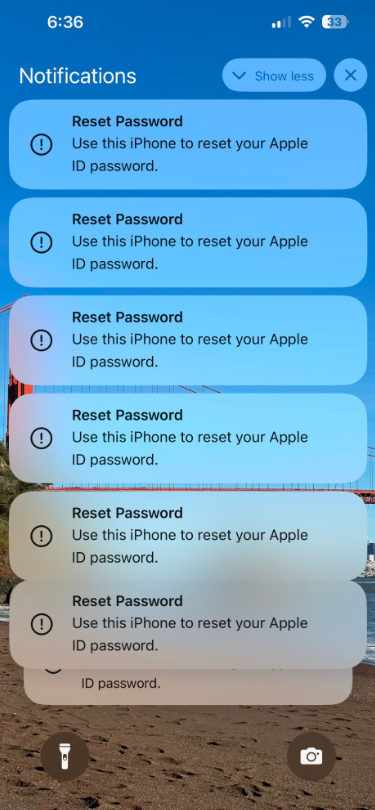

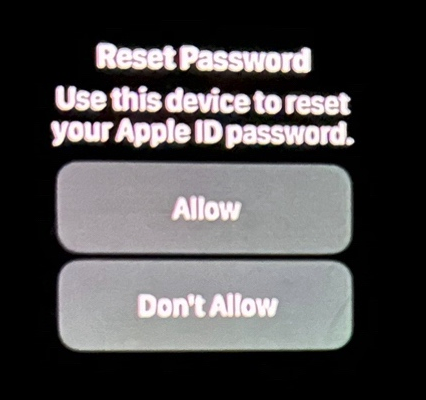

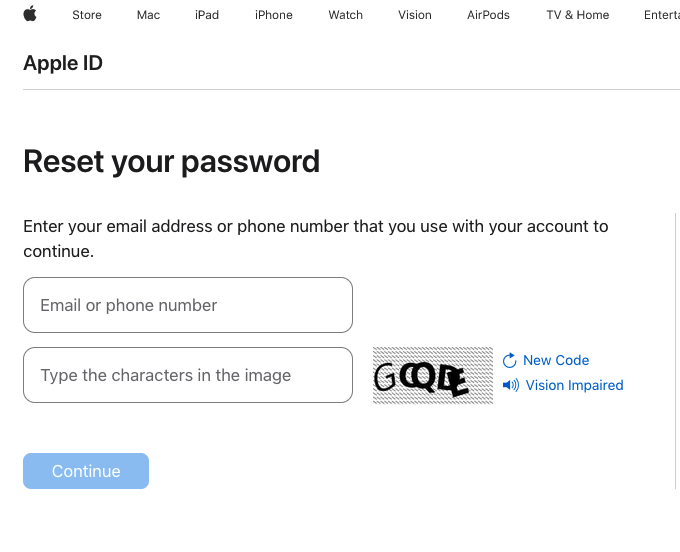

On your Mac, fake antivirus pop-up ads typically appear as urgent browser warnings or system alerts claiming to have detected multiple threats on your computer. These fraudulent notifications often use official-looking logos, technical language, and alarming messages like “Your Mac is infected with 5 viruses” or “Immediate action required” to create a sense of urgency and panic.

These scams manipulate you by:

Fake antivirus popups are almost always the result of a sneaky delivery method designed to catch you off guard. Scammers rely on ads, compromised websites, misleading downloads, and social engineering tricks to get their scareware onto your Mac without you realizing what’s happening. Let’s take a look at the common ways these scams spread so you can avoid them.

Fake virus alerts use a mix of visual tricks and psychological pressure to push you into clicking, calling, or paying before you have time to think. This section breaks down the common elements scammers use in these alerts so you can recognize a fake warning instantly and ignore it.

If you’re not sure whether an antivirus warning is real or just scareware, a quick verification is the safest next step. There are steps you can take and settings on your macOS you can check without putting your Mac at further risk.

The moment a fake virus warning pops up, scammers are hoping you’ll react fast, click a button, call a number, or download their “fix.” However, the safest approach is the opposite: take a moment to think, don’t interact with the alert, close the browser, and clear any files it may have tried to leave behind. Here’s exactly what to do right away to stay safe.

Your Mac experience should be enjoyable and secure. With the right awareness and tools, it absolutely can be, especially when you know what to look for and follow the right practices. By recognizing the warning signs of fake antivirus pop-ups, downloading software only from trusted sources, keeping your macOS and applications updated, and following the prevention tips outlined above, you can avoid falling victim to these fake antivirus scams.

Remember that legitimate security alerts from Apple come through System Settings (called System Preferences on older macOS versions) and official macOS notifications, not through alarming browser pop-ups demanding immediate payment or phone calls. Use reputable security tools from a trusted vendor, such as McAfee, that provide real-time protection and regular updates about emerging threats.

Share these tips with your family and friends, especially those who might be less tech-savvy and more vulnerable to these deceptive tactics. The more people understand how fake antivirus schemes operate, the safer our entire digital community is.

The post Stop Fake Antivirus Popups on Your Mac appeared first on McAfee Blog.

Google is suing more than two dozen unnamed individuals allegedly involved in peddling a popular China-based mobile phishing service that helps scammers impersonate hundreds of trusted brands, blast out text message lures, and convert phished payment card data into mobile wallets from Apple and Google.

In a lawsuit filed in the Southern District of New York on November 12, Google sued to unmask and disrupt 25 “John Doe” defendants allegedly linked to the sale of Lighthouse, a sophisticated phishing kit that makes it simple for even novices to steal payment card data from mobile users. Google said Lighthouse has harmed more than a million victims across 120 countries.

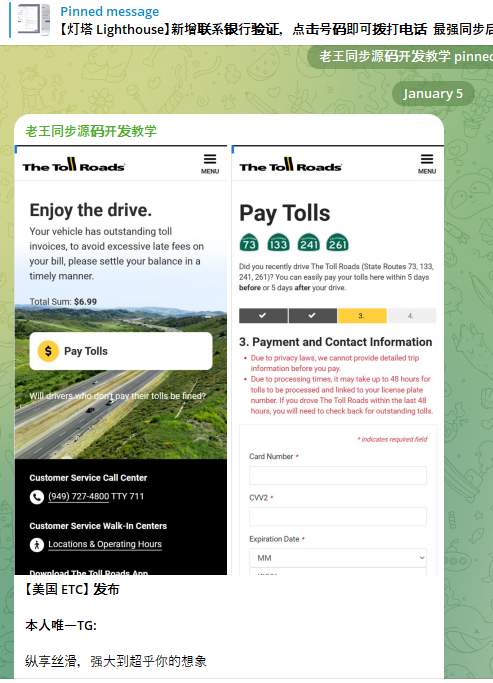

A component of the Chinese phishing kit Lighthouse made to target customers of The Toll Roads, which refers to several state routes through Orange County, Calif.

Lighthouse is one of several prolific phishing-as-a-service operations known as the “Smishing Triad,” and collectively they are responsible for sending millions of text messages that spoof the U.S. Postal Service to supposedly collect some outstanding delivery fee, or that pretend to be a local toll road operator warning of a delinquent toll fee. More recently, Lighthouse has been used to spoof e-commerce websites, financial institutions and brokerage firms.

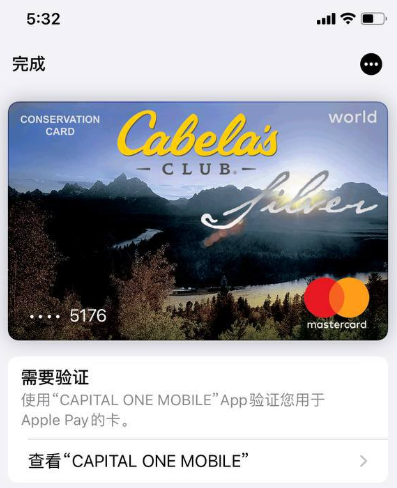

Regardless of the text message lure or brand used, the basic scam remains the same: After the visitor enters their payment information, the phishing site will automatically attempt to enroll the card as a mobile wallet from Apple or Google. The phishing site then tells the visitor that their bank is going to verify the transaction by sending a one-time code that needs to be entered into the payment page before the transaction can be completed.

If the recipient provides that one-time code, the scammers can link the victim’s card data to a mobile wallet on a device that they control. Researchers say the fraudsters usually load several stolen wallets onto each mobile device, and wait 7-10 days after that enrollment before selling the phones or using them for fraud.

Google called the scale of the Lighthouse phishing attacks “staggering.” A May 2025 report from Silent Push found the domains used by the Smishing Triad are rotated frequently, with approximately 25,000 phishing domains active during any 8-day period.

Google’s lawsuit alleges the purveyors of Lighthouse violated the company’s trademarks by including Google’s logos on countless phishing websites. The complaint says Lighthouse offers over 600 templates for phishing websites of more than 400 entities, and that Google’s logos were featured on at least a quarter of those templates.

Google is also pursuing Lighthouse under the Racketeer Influenced and Corrupt Organizations (RICO) Act, saying the Lighthouse phishing enterprise encompasses several connected threat actor groups that work together to design and implement complex criminal schemes targeting the general public.

According to Google, those threat actor teams include a “developer group” that supplies the phishing software and templates; a “data broker group” that provides a list of targets; a “spammer group” that provides the tools to send fraudulent text messages in volume; a “theft group,” in charge of monetizing the phished information; and an “administrative group,” which runs their Telegram support channels and discussion groups designed to facilitate collaboration and recruit new members.

“While different members of the Enterprise may play different roles in the Schemes, they all collaborate to execute phishing attacks that rely on the Lighthouse software,” Google’s complaint alleges. “None of the Enterprise’s Schemes can generate revenue without collaboration and cooperation among the members of the Enterprise. All of the threat actor groups are connected to one another through historical and current business ties, including through their use of Lighthouse and the online community supporting its use, which exists on both YouTube and Telegram channels.”

Silent Push’s May report observed that the Smishing Triad boasts it has “300+ front desk staff worldwide” involved in Lighthouse, staff that is mainly used to support various aspects of the group’s fraud and cash-out schemes.

An image shared by an SMS phishing group shows a panel of mobile phones responsible for mass-sending phishing messages. These panels require a live operator because the one-time codes being shared by phishing victims must be used quickly as they generally expire within a few minutes.

Google alleges that in addition to blasting out text messages spoofing known brands, Lighthouse makes it easy for customers to mass-create fake e-commerce websites that are advertised using Google Ads accounts (and paid for with stolen credit cards). These phony merchants collect payment card information at checkout, and then prompt the customer to expect and share a one-time code sent from their financial institution.

Once again, that one-time code is being sent by the bank because the fake e-commerce site has just attempted to enroll the victim’s payment card data in a mobile wallet. By the time a victim understands they will likely never receive the item they just purchased from the fake e-commerce shop, the scammers have already run through hundreds of dollars in fraudulent charges, often at high-end electronics stores or jewelers.

Ford Merrill works in security research at SecAlliance, a CSIS Security Group company, and he’s been tracking Chinese SMS phishing groups for several years. Merrill said many Lighthouse customers are now using the phishing kit to erect fake e-commerce websites that are advertised on Google and Meta platforms.

“You find this shop by searching for a particular product online or whatever, and you think you’re getting a good deal,” Merrill said. “But of course you never receive the product, and they will phish that one-time code at checkout.”

Merrill said some of the phishing templates include payment buttons for services like PayPal, and that victims who choose to pay through PayPal can also see their PayPal accounts hijacked.

A fake e-commerce site from the Smishing Triad spoofing PayPal on a mobile device.

“The main advantage of the fake e-commerce site is that it doesn’t require them to send out message lures,” Merrill said, noting that the fake vendor sites have more staying power than traditional phishing sites because it takes far longer for them to be flagged for fraud.

Merrill said Google’s legal action may temporarily disrupt the Lighthouse operators, and could make it easier for U.S. federal authorities to bring criminal charges against the group. But he said the Chinese mobile phishing market is so lucrative right now that it’s difficult to imagine a popular phishing service voluntarily turning out the lights.

Merrill said Google’s lawsuit also can help lay the groundwork for future disruptive actions against Lighthouse and other phishing-as-a-service entities that are operating almost entirely on Chinese networks. According to Silent Push, a majority of the phishing sites created with these kits are sitting at two Chinese hosting companies: Tencent (AS132203) and Alibaba (AS45102).

“Once Google has a default judgment against the Lighthouse guys in court, theoretically they could use that to go to Alibaba and Tencent and say, ‘These guys have been found guilty, here are their domains and IP addresses, we want you to shut these down or we’ll include you in the case.’”

If Google can bring that kind of legal pressure consistently over time, Merrill said, they might succeed in increasing costs for the phishers and more frequently disrupting their operations.

“If you take all of these Chinese phishing kit developers, I have to believe it’s tens of thousands of Chinese-speaking people involved,” he said. “The Lighthouse guys will probably burn down their Telegram channels and disappear for a while. They might call it something else or redevelop their service entirely. But I don’t believe for a minute they’re going to close up shop and leave forever.”

For the past week, domains associated with the massive Aisuru botnet have repeatedly usurped Amazon, Apple, Google and Microsoft in Cloudflare’s public ranking of the most frequently requested websites. Cloudflare responded by redacting Aisuru domain names from their top websites list. The chief executive at Cloudflare says Aisuru’s overlords are using the botnet to boost their malicious domain rankings, while simultaneously attacking the company’s domain name system (DNS) service.

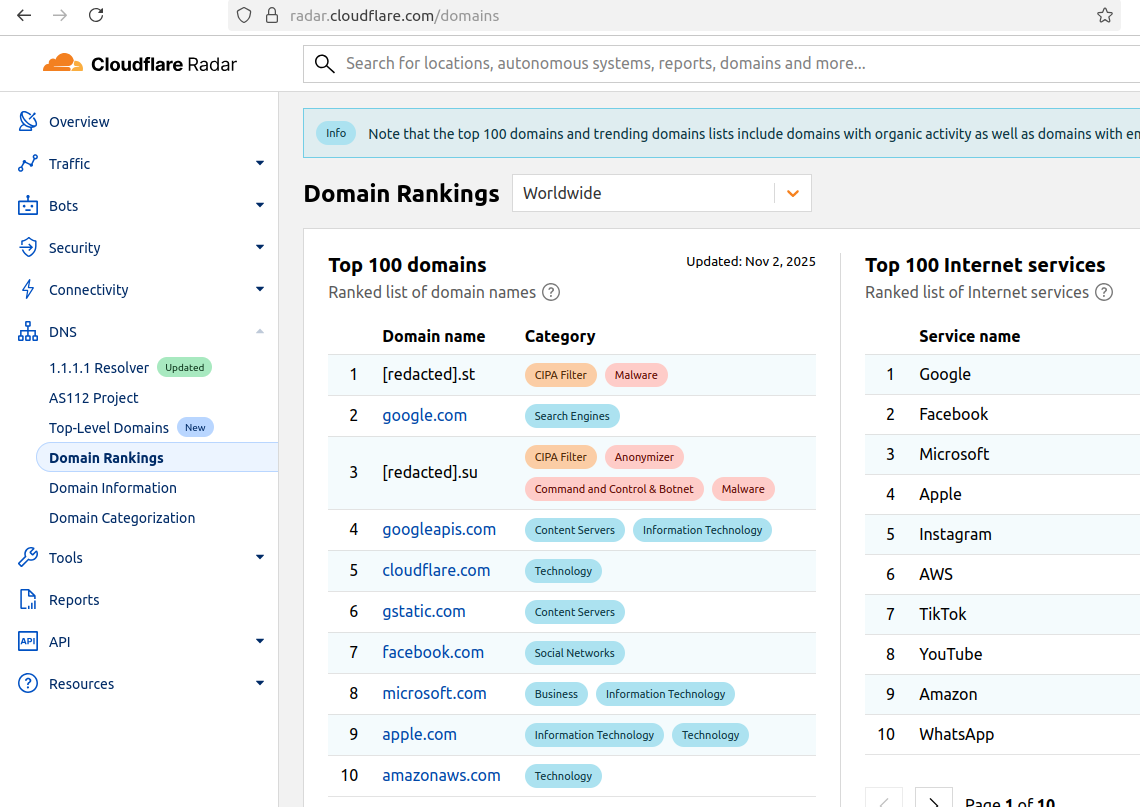

The #1 and #3 positions in this chart are Aisuru botnet controllers with their full domain names redacted. Source: radar.cloudflare.com.

Aisuru is a rapidly growing botnet comprising hundreds of thousands of hacked Internet of Things (IoT) devices, such as poorly secured Internet routers and security cameras. The botnet has increased in size and firepower significantly since its debut in 2024, demonstrating the ability to launch record distributed denial-of-service (DDoS) attacks nearing 30 terabits of data per second.

Until recently, Aisuru’s malicious code instructed all infected systems to use DNS servers from Google — specifically, the servers at 8.8.8.8. But in early October, Aisuru switched to invoking Cloudflare’s main DNS server — 1.1.1.1 — and over the past week domains used by Aisuru to control infected systems started populating Cloudflare’s top domain rankings.

As screenshots of Aisuru domains claiming two of the Top 10 positions ping-ponged across social media, many feared this was yet another sign that an already untamable botnet was running completely amok. One Aisuru botnet domain that sat prominently for days at #1 on the list was someone’s street address in Massachusetts followed by “.com”. Other Aisuru domains mimicked those belonging to major cloud providers.

Cloudflare tried to address these security, brand confusion and privacy concerns by partially redacting the malicious domains, and adding a warning at the top of its rankings:

“Note that the top 100 domains and trending domains lists include domains with organic activity as well as domains with emerging malicious behavior.”

Cloudflare CEO Matthew Prince told KrebsOnSecurity the company’s domain ranking system is fairly simplistic, and that it merely measures the volume of DNS queries to 1.1.1.1.

“The attacker is just generating a ton of requests, maybe to influence the ranking but also to attack our DNS service,” Prince said, adding that Cloudflare has heard reports of other large public DNS services seeing a similar uptick in attacks. “We’re fixing the ranking to make it smarter. And, in the meantime, redacting any sites we classify as malware.”

Renee Burton, vice president of threat intel at the DNS security firm Infoblox, said many people erroneously assumed that the skewed Cloudflare domain rankings meant there were more bot-infected devices than there were regular devices querying sites like Google and Apple and Microsoft.

“Cloudflare’s documentation is clear — they know that when it comes to ranking domains you have to make choices on how to normalize things,” Burton wrote on LinkedIn. “There are many aspects that are simply out of your control. Why is it hard? Because reasons. TTL values, caching, prefetching, architecture, load balancing. Things that have shared control between the domain owner and everything in between.”

Alex Greenland is CEO of the anti-phishing and security firm Epi. Greenland said he understands the technical reason why Aisuru botnet domains are showing up in Cloudflare’s rankings (those rankings are based on DNS query volume, not actual web visits). But he said they’re still not meant to be there.

“It’s a failure on Cloudflare’s part, and reveals a compromise of the trust and integrity of their rankings,” he said.

Greenland said Cloudflare planned for its Domain Rankings to list the most popular domains as used by human users, and it was never meant to be a raw calculation of query frequency or traffic volume going through their 1.1.1.1 DNS resolver.

“They spelled out how their popularity algorithm is designed to reflect real human use and exclude automated traffic (they said they’re good at this),” Greenland wrote on LinkedIn. “So something has evidently gone wrong internally. We should have two rankings: one representing trust and real human use, and another derived from raw DNS volume.”

Why might it be a good idea to wholly separate malicious domains from the list? Greenland notes that Cloudflare Domain Rankings see widespread use for trust and safety determination, by browsers, DNS resolvers, safe browsing APIs and things like TRANCO.

“TRANCO is a respected open source list of the top million domains, and Cloudflare Radar is one of their five data providers,” he continued. “So there can be serious knock-on effects when a malicious domain features in Cloudflare’s top 10/100/1000/million. To many people and systems, the top 10 and 100 are naively considered safe and trusted, even though algorithmically-defined top-N lists will always be somewhat crude.”

Over this past week, Cloudflare started redacting portions of the malicious Aisuru domains from its Top Domains list, leaving only their domain suffix visible. Sometime in the past 24 hours, Cloudflare appears to have begun hiding the malicious Aisuru domains entirely from the web version of that list. However, downloading a spreadsheet of the current Top 200 domains from Cloudflare Radar shows an Aisuru domain still at the very top.

According to Cloudflare’s website, the majority of DNS queries to the top Aisuru domains — nearly 52 percent — originated from the United States. This tracks with my reporting from early October, which found Aisuru was drawing most of its firepower from IoT devices hosted on U.S. Internet providers like AT&T, Comcast and Verizon.

Experts tracking Aisuru say the botnet relies on well more than a hundred control servers, and that for the moment at least most of those domains are registered in the .su top-level domain (TLD). Dot-su is the TLD assigned to the former Soviet Union (.su’s Wikipedia page says the TLD was created just 15 months before the Soviet Union was dissolved).

A Cloudflare blog post from October 27 found that .su had the highest “DNS magnitude” of any TLD, referring to a metric estimating the popularity of a TLD based on the number of unique networks querying Cloudflare’s 1.1.1.1 resolver. The report concluded that the top .su hostnames were associated with a popular online world-building game, and that more than half of the queries for that TLD came from the United States, Brazil and Germany [it’s worth noting that servers for the world-building game Minecraft were some of Aisuru’s most frequent targets].
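To make the difference between raw query volume and a unique-network measure concrete, here is a minimal Python sketch. It is not Cloudflare's actual "DNS magnitude" formula, which the post above does not spell out. The `(client_ip, hostname)` log shape, the function names, and the choice to group IPv4 clients into /24 networks are all assumptions made for illustration.

```python
from collections import Counter, defaultdict
import ipaddress


def tld_of(hostname: str) -> str:
    """Return the last label of a hostname, lowercased, ignoring a trailing dot."""
    name = hostname.lower().rstrip(".")
    return name.rsplit(".", 1)[-1]


def network_of(client_ip: str, prefix_len: int = 24) -> str:
    """Map a client IP to its enclosing network.

    Assumption: IPv4 clients grouped by /24; a real system would more likely
    group by autonomous system or some other notion of "network".
    """
    return str(ipaddress.ip_network(f"{client_ip}/{prefix_len}", strict=False))


def rank_tlds(queries):
    """Return (raw_counts, unique_network_counts) per TLD as two Counters.

    `queries` is an iterable of (client_ip, hostname) tuples, a hypothetical
    stand-in for resolver logs.
    """
    raw = Counter()
    networks = defaultdict(set)
    for client_ip, hostname in queries:
        tld = tld_of(hostname)
        raw[tld] += 1
        networks[tld].add(network_of(client_ip))
    unique = Counter({tld: len(nets) for tld, nets in networks.items()})
    return raw, unique


if __name__ == "__main__":
    # One noisy client hammering a .su name, three quiet clients on other networks.
    sample = [("198.51.100.7", "a.example.su")] * 5 + [
        ("192.0.2.1", "example.com"),
        ("203.0.113.9", "example.com"),
        ("198.51.100.200", "www.example.com"),
    ]
    raw, unique = rank_tlds(sample)
    print("by raw volume:     ", raw.most_common())
    print("by unique networks:", unique.most_common())
```

In the sample, a single chatty client pushes .su to the top by raw volume, while counting unique networks puts .com first. A botnet spread across many networks would score high on both measures, which is consistent with .su topping a unique-network metric.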

A simple and crude way to detect Aisuru bot activity on a network may be to set an alert on any systems attempting to contact domains ending in .su. This TLD is frequently abused for cybercrime and by cybercrime forums and services, and blocking access to it entirely is unlikely to raise any legitimate complaints.
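For readers who want to try the crude .su check described above, here is a minimal Python sketch. The input shape, an iterable of `(client_ip, hostname)` pairs, is a hypothetical stand-in for whatever your resolver or firewall logs actually provide, and the function names are invented for this example.

```python
def is_su_domain(hostname: str) -> bool:
    """Return True if hostname is in the .su top-level domain."""
    # DNS names are case-insensitive and may carry a trailing root dot.
    name = hostname.strip().lower().rstrip(".")
    return name == "su" or name.endswith(".su")


def flag_su_queries(queries):
    """Yield (client, hostname) pairs whose queried name ends in .su.

    `queries` is any iterable of (client_ip, hostname) tuples (an assumed
    log format, not one tied to any particular product).
    """
    for client, hostname in queries:
        if is_su_domain(hostname):
            yield client, hostname


if __name__ == "__main__":
    sample = [
        ("192.0.2.10", "www.example.com"),
        ("192.0.2.23", "c2.example.su."),
        ("192.0.2.23", "EXAMPLE.SU"),
        ("192.0.2.40", "tsunami.example.org"),
    ]
    for client, hostname in flag_su_queries(sample):
        print(f"ALERT {client} queried {hostname}")
```

Matching on the final label rather than on the substring "su" avoids false alarms on names like su.example.com or tsunami.example.org.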

While Apple goes to great lengths to keep all its devices safe, this doesn’t mean your Mac is immune to all computer viruses. What does Apple provide in terms of antivirus protection? In this article, we will discuss some signs that your Mac may be infected with a virus or malware, the built-in protections that Apple provides, and how you can protect your computer and yourself from threats beyond viruses.

A computer virus is a piece of code that inserts itself into an application or operating system and spreads when that program is run. While viruses exist, most modern threats to macOS come in the form of other malicious software, also known as malware. While technically different from viruses, malware impacts your Mac similarly: it compromises your device, data, and privacy.

While Apple’s macOS has robust security features, it’s not impenetrable. Cybercriminals can compromise a Mac through several methods that bypass traditional virus signatures. Common attack vectors include software vulnerabilities, phishing attacks that steal passwords, drive-by downloads from compromised websites, malicious browser extensions that seem harmless, or remote access Trojans disguised as legitimate software.

Understanding the common types of viruses and malware that target macOS can help you better protect your device and data. Here’s a closer look at the most prevalent forms of malware that Mac users should watch out for.

Whether hackers physically sneak it onto your device or trick you into installing it via a phony app, a sketchy website, or a phishing attack, viruses and malware can create problems for you in a couple of ways:

Is your device operating slower, are web pages and apps harder to load, or does your battery never seem to keep a charge? These are all signs that you could have a virus or malware running in the background, zapping your device’s resources.

Malware or mining apps running in the background can burn extra computing power and data, causing your computer to operate at a high temperature or overheat.

If you find unfamiliar apps you didn’t download, along with messages and emails that you didn’t send, that’s a red flag. A hacker may have hijacked your computer to send messages or to spread malware to your contacts. Similarly, if you see spikes in your data usage, that could be a sign of a hack as well.

Malware can also be behind spammy pop-ups, unauthorized changes to your home screen, or bookmarks to suspicious websites. In fact, if you see any configuration changes you didn’t personally make, this is another big clue that your computer has been hacked.

Your browser’s homepage or default search engine changes without your permission, and searches are redirected to unfamiliar sites. Check your browser’s settings and extensions for anything you don’t recognize.

Your antivirus software or macOS firewall is disabled without your action. Some viruses or malware are capable of turning off your security software to allow them to perform their criminal activities.

Fortunately, there are easy-to-use tools and key steps to help you check for viruses and malware so you can take action before any real damage is done.

Macs contain several built-in features that help protect them from viruses:

There are a couple of reasons why Mac users may want to consider additional protection on top of the built-in antivirus safeguards:

Macs are like any other connected device. They’re also susceptible to the wider world of threats and vulnerabilities on the internet. For this reason, Mac users should think about bolstering their defenses further with online protection software.

If you suspect your Mac has been infected with a virus or other malware, acting quickly is essential to protect your personal data and stop the threat from spreading. Fortunately, this can be effectively done with a combination of manual steps and trusted security software:

In the most extreme cases, erasing your hard drive and reinstalling a fresh copy of macOS is a very effective way to eliminate viruses and malware. This process wipes out all data, including the malicious software. This, however, is considered the last resort for deep-rooted infections that are difficult to remove manually.

As cyber threats grow more sophisticated, taking proactive steps now can protect your device, your data, and your identity in the long run. Here are simple but powerful ways to future-proof your Mac, and help ensure your device stays protected against tomorrow’s threats before they reach you:

Staying safe online isn’t just about having the right software—it’s about making smart choices every day. Adopting strong digital habits can drastically reduce your risk of falling victim to viruses, scams, or data breaches.

An important part of McAfee’s Protection Score involves protecting your identity and privacy beyond the antivirus solution. While online threats have evolved, McAfee has elevated its online protection software to thwart hackers, scammers, and cyberthieves who aim to steal your personal info, online banking accounts, financial info, and even your social media accounts to commit identity theft and fraud in your name. As you go about your day online, online protection suites help you do it more privately and safely. Comprehensive security solutions like McAfee+ include:

Yes. While Safari has built-in security features, you can still get a Mac virus by visiting a compromised website that initiates a drive-by download or by being tricked into downloading and running a malicious file.

Not necessarily. Many websites use aggressive pop-up advertising. However, if you see persistent pop-ups that are difficult to close, or fake virus warnings, it’s a strong sign of an adware infection.

Yes. While some consider it less harmful than a trojan, adware is a form of malware. It compromises your browsing experience, tracks your activity, slows down your computer, and can serve as a gateway for more dangerous infections.

If you have a security suite with real-time protection, your Mac is continuously monitored. It is still good practice to run a full system scan at least once a week for peace of mind.

Direct infection via a cable is extremely unlikely due to the security architecture of both operating systems. The greater risk comes from shared accounts. A malicious link or file opened on one device and synced via iCloud, or a compromised Apple ID, could affect your other devices.

Current trends show a rise in sophisticated adware and PUPs that are often bundled with legitimate-looking software. Cybercriminals are also focusing on malicious browser extensions that steal data and credentials, injecting malicious code into legitimate software updates, or devising clever ways to bypass Apple’s notarization process. Given these developments, Macs can and do get viruses and are subject to threats just like any other computer. While Apple provides a strong security foundation, its operating systems may not offer the full breadth of protection you need, particularly against online identity theft and the latest malware threats. Combining an updated system, smart online habits, and a comprehensive protection solution helps you stay well ahead of emerging threats. Regularly reviewing your Mac’s security posture and following the tips outlined here will also enable you to use your device with confidence and peace of mind.

The post Can Apple Macs get Viruses? appeared first on McAfee Blog.

Microsoft Corp. today issued security updates to fix more than 80 vulnerabilities in its Windows operating systems and software. There are no known “zero-day” or actively exploited vulnerabilities in this month’s bundle from Redmond, which nevertheless includes patches for 13 flaws that earned Microsoft’s most-dire “critical” label. Meanwhile, both Apple and Google recently released updates to fix zero-day bugs in their devices.

Microsoft assigns security flaws a “critical” rating when malware or miscreants can exploit them to gain remote access to a Windows system with little or no help from users. Among the more concerning critical bugs quashed this month is CVE-2025-54918. The problem here resides with Windows NTLM, or NT LAN Manager, a suite of code for managing authentication in a Windows network environment.

Redmond rates this flaw as “Exploitation More Likely,” and although it is listed as a privilege escalation vulnerability, Kev Breen at Immersive says this one is actually exploitable over the network or the Internet.

“From Microsoft’s limited description, it appears that if an attacker is able to send specially crafted packets over the network to the target device, they would have the ability to gain SYSTEM-level privileges on the target machine,” Breen said. “The patch notes for this vulnerability state that ‘Improper authentication in Windows NTLM allows an authorized attacker to elevate privileges over a network,’ suggesting an attacker may already need to have access to the NTLM hash or the user’s credentials.”

Breen said another patch, CVE-2025-55234 (an 8.8 CVSS-scored flaw affecting the Windows SMB client for sharing files across a network), also is listed as a privilege escalation bug but is likewise remotely exploitable. This vulnerability was publicly disclosed prior to this month.

“Microsoft says that an attacker with network access would be able to perform a replay attack against a target host, which could result in the attacker gaining additional privileges, which could lead to code execution,” Breen noted.

CVE-2025-54916 is an “important” vulnerability in Windows NTFS — the default filesystem for all modern versions of Windows — that can lead to remote code execution. Microsoft likewise thinks we are more than likely to see exploitation of this bug soon: The last time Microsoft patched an NTFS bug was in March 2025 and it was already being exploited in the wild as a zero-day.

“While the title of the CVE says ‘Remote Code Execution,’ this exploit is not remotely exploitable over the network, but instead needs an attacker to either have the ability to run code on the host or to convince a user to run a file that would trigger the exploit,” Breen said. “This is commonly seen in social engineering attacks, where they send the user a file to open as an attachment or a link to a file to download and run.”

Critical and remote code execution bugs tend to steal all the limelight, but Tenable Senior Staff Research Engineer Satnam Narang notes that nearly half of all vulnerabilities fixed by Microsoft this month are privilege escalation flaws that require an attacker to have gained access to a target system first before attempting to elevate privileges.

“For the third time this year, Microsoft patched more elevation of privilege vulnerabilities than remote code execution flaws,” Narang observed.

On Sept. 3, Google fixed two flaws that were detected as exploited in zero-day attacks, including CVE-2025-38352, an elevation of privilege in the Android kernel, and CVE-2025-48543, also an elevation of privilege problem in the Android Runtime component.

Also, Apple recently patched its seventh zero-day (CVE-2025-43300) of this year. It was part of an exploit chain used along with a vulnerability in the WhatsApp (CVE-2025-55177) instant messenger to hack Apple devices. Amnesty International reports that the two zero-days have been used in “an advanced spyware campaign” over the past 90 days. The issue is fixed in iOS 18.6.2, iPadOS 18.6.2, iPadOS 17.7.10, macOS Sequoia 15.6.1, macOS Sonoma 14.7.8, and macOS Ventura 13.7.8.
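For anyone checking a fleet of Macs against the fixed macOS releases listed above, a minimal Python sketch of the comparison might look like the following. The version numbers come from the paragraph above; the function names and the decision to return `None` for release lines not mentioned there are assumptions for illustration, not anything Apple publishes.

```python
# Fixed macOS releases for CVE-2025-43300, keyed by major version,
# taken from the list in the text above.
FIXED_MACOS = {
    15: (15, 6, 1),  # macOS Sequoia
    14: (14, 7, 8),  # macOS Sonoma
    13: (13, 7, 8),  # macOS Ventura
}


def parse_version(text: str) -> tuple:
    """Turn '15.6' or '15.6.1' into a 3-part integer tuple, padding with zeros."""
    parts = [int(p) for p in text.strip().split(".")]
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts[:3])


def has_fix(version_text: str):
    """Return True or False for a listed release line, None for any other.

    None means "not covered by the list above", not "safe".
    """
    version = parse_version(version_text)
    fixed = FIXED_MACOS.get(version[0])
    if fixed is None:
        return None
    # Tuples compare element by element, so (15, 7, 0) >= (15, 6, 1) is True.
    return version >= fixed


if __name__ == "__main__":
    for v in ("15.6.1", "15.6", "14.7.8", "13.7.7", "12.7.6"):
        print(v, has_fix(v))
```

On a Mac, Python's standard `platform.mac_ver()[0]` returns the installed macOS version string, which could be passed to `has_fix`; on other systems it returns an empty string, so guard against that before parsing.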

The SANS Internet Storm Center has a clickable breakdown of each individual fix from Microsoft, indexed by severity and CVSS score. Enterprise Windows admins involved in testing patches before rolling them out should keep an eye on askwoody.com, which often has the skinny on wonky updates.

AskWoody also reminds us that we’re now just two months out from Microsoft discontinuing free security updates for Windows 10 computers. For those interested in safely extending the lifespan and usefulness of these older machines, check out last month’s Patch Tuesday coverage for a few pointers.

As ever, please don’t neglect to back up your data (if not your entire system) at regular intervals, and feel free to sound off in the comments if you experience problems installing any of these fixes.

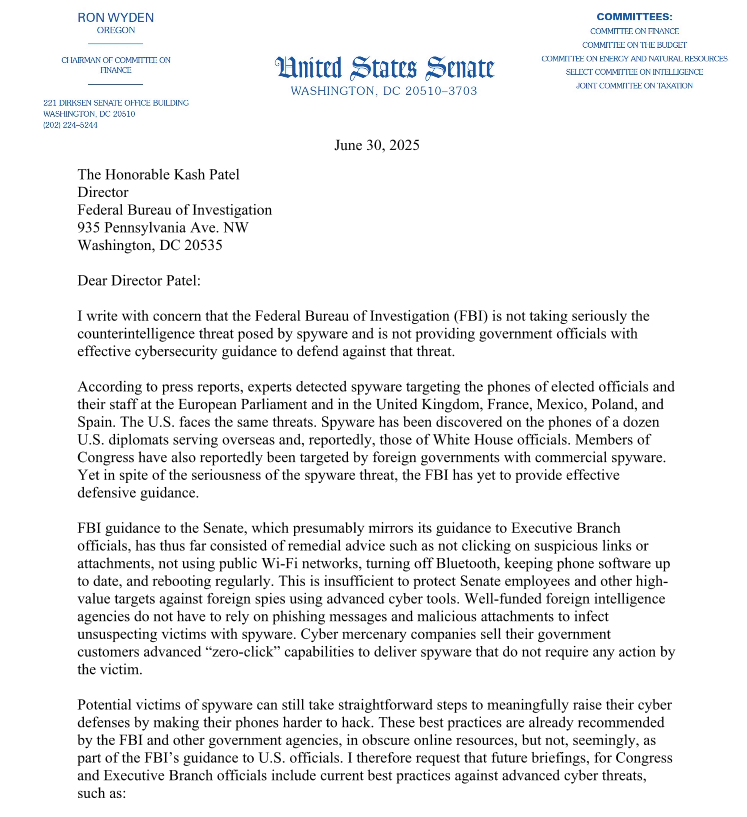

Agents with the Federal Bureau of Investigation (FBI) briefed Capitol Hill staff recently on hardening the security of their mobile devices, after a contacts list stolen from the personal phone of the White House Chief of Staff Susie Wiles was reportedly used to fuel a series of text messages and phone calls impersonating her to U.S. lawmakers. But in a letter this week to the FBI, one of the Senate’s most tech-savvy lawmakers says the feds aren’t doing enough to recommend more appropriate security protections that are already built into most consumer mobile devices.

A screenshot of the first page from Sen. Wyden’s letter to FBI Director Kash Patel.

On May 29, The Wall Street Journal reported that federal authorities were investigating a clandestine effort to impersonate Ms. Wiles via text messages and in phone calls that may have used AI to spoof her voice. According to The Journal, Wiles told associates her cellphone contacts were hacked, giving the impersonator access to the private phone numbers of some of the country’s most influential people.

The execution of this phishing and impersonation campaign — whatever its goals may have been — suggested the attackers were financially motivated, and not particularly sophisticated.

“It became clear to some of the lawmakers that the requests were suspicious when the impersonator began asking questions about Trump that Wiles should have known the answers to—and in one case, when the impersonator asked for a cash transfer, some of the people said,” the Journal wrote. “In many cases, the impersonator’s grammar was broken and the messages were more formal than the way Wiles typically communicates, people who have received the messages said. The calls and text messages also didn’t come from Wiles’s phone number.”

Sophisticated or not, the impersonation campaign was soon punctuated by the murder of Minnesota House of Representatives Speaker Emerita Melissa Hortman and her husband, and the shooting of Minnesota State Senator John Hoffman and his wife. So when FBI agents offered in mid-June to brief U.S. Senate staff on mobile threats, more than 140 staffers took them up on that invitation (a remarkably high number considering that no food was offered at the event).

But according to Sen. Ron Wyden (D-Ore.), the advice the FBI provided to Senate staffers was largely limited to remedial tips, such as not clicking on suspicious links or attachments, not using public Wi-Fi networks, turning off Bluetooth, keeping phone software up to date, and rebooting regularly.

“This is insufficient to protect Senate employees and other high-value targets against foreign spies using advanced cyber tools,” Wyden wrote in a letter sent today to FBI Director Kash Patel. “Well-funded foreign intelligence agencies do not have to rely on phishing messages and malicious attachments to infect unsuspecting victims with spyware. Cyber mercenary companies sell their government customers advanced ‘zero-click’ capabilities to deliver spyware that do not require any action by the victim.”

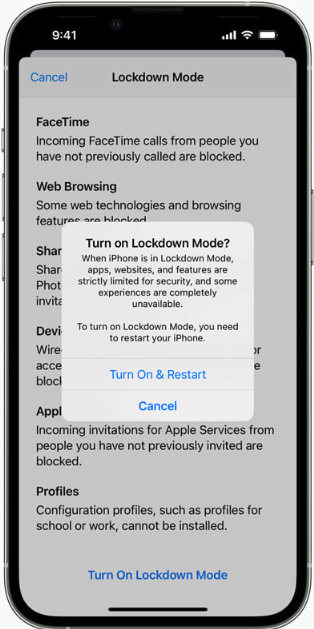

Wyden stressed that to help counter sophisticated attacks, the FBI should be encouraging lawmakers and their staff to enable anti-spyware defenses that are built into Apple’s iOS and Google’s Android phone software.

These include Apple’s Lockdown Mode, which is designed for users who are worried they may be subject to targeted attacks. Lockdown Mode restricts non-essential iOS features to reduce the device’s overall attack surface. Google Android devices carry a similar feature called Advanced Protection Mode.

Wyden also urged the FBI to update its training to recommend a number of other steps that people can take to make their mobile devices less trackable, including the use of ad blockers to guard against malicious advertisements, disabling ad tracking IDs in mobile devices, and opting out of commercial data brokers (the suspect charged in the Minnesota shootings reportedly used multiple people-search services to find the home addresses of his targets).

The senator’s letter notes that while the FBI has recommended all of the above precautions in various advisories issued over the years, the advice the agency is giving now to the nation’s leaders needs to be more comprehensive, actionable and urgent.

“In spite of the seriousness of the threat, the FBI has yet to provide effective defensive guidance,” Wyden said.

Nicholas Weaver is a researcher with the International Computer Science Institute, a nonprofit in Berkeley, Calif. Weaver said Lockdown Mode or Advanced Protection will mitigate many vulnerabilities, and should be the default setting for all members of Congress and their staff.

“Lawmakers are at exceptional risk and need to be exceptionally protected,” Weaver said. “Their computers should be locked down and well administered, etc. And the same applies to staffers.”

Weaver noted that Apple’s Lockdown Mode has a track record of blocking zero-day attacks on iOS applications; in September 2023, Citizen Lab documented how Lockdown Mode foiled a zero-click flaw capable of installing spyware on iOS devices without any interaction from the victim.

Earlier this month, Citizen Lab researchers documented a zero-click attack used to infect the iOS devices of two journalists with Paragon’s Graphite spyware. The vulnerability could be exploited merely by sending the target a booby-trapped media file delivered via iMessage. Apple also recently updated its advisory for the zero-click flaw (CVE-2025-43200), noting that it was mitigated as of iOS 18.3.1, which was released in February 2025.

Apple has not commented on whether CVE-2025-43200 could be exploited on devices with Lockdown Mode turned on. But HelpNetSecurity observed that at the same time Apple addressed CVE-2025-43200 back in February, the company fixed another vulnerability flagged by Citizen Lab researcher Bill Marczak: CVE-2025-24200, which Apple said was used in an extremely sophisticated physical attack against specific targeted individuals that allowed attackers to disable USB Restricted Mode on a locked device.

In other words, the flaw could apparently be exploited only if the attacker had physical access to the targeted vulnerable device. And as the old infosec industry adage goes, if an adversary has physical access to your device, it’s most likely not your device anymore.

I can’t speak to Google’s Advanced Protection Mode personally, because I don’t use Google or Android devices. But I have had Apple’s Lockdown Mode enabled on all of my Apple devices since it was first made available in September 2022. I can only think of a single occasion when one of my apps failed to work properly with Lockdown Mode turned on, and in that case I was able to add a temporary exception for that app in Lockdown Mode’s settings.

My main gripe with Lockdown Mode was captured in a March 2025 column by TechCrunch’s Lorenzo Franceschi-Bicchierai, who wrote about its penchant for periodically sending mystifying notifications that someone has been blocked from contacting you, even though nothing then prevents you from contacting that person directly. This has happened to me at least twice, and in both cases the person in question was already an approved contact, and said they had not attempted to reach out.

Although it would be nice if Apple’s Lockdown Mode sent fewer, less alarming and more informative alerts, the occasional baffling warning message is hardly enough to make me turn it off.

Authorities in at least two U.S. states last week independently announced arrests of Chinese nationals accused of perpetrating a novel form of tap-to-pay fraud using mobile devices. Details released by authorities so far indicate the mobile wallets being used by the scammers were created through online phishing scams, and that the accused were relying on a custom Android app to relay tap-to-pay transactions from mobile devices located in China.

Image: WLVT-8.

Authorities in Knoxville, Tennessee last week said they arrested 11 Chinese nationals accused of buying tens of thousands of dollars worth of gift cards at local retailers with mobile wallets created through online phishing scams. The Knox County Sheriff’s office said the arrests are considered the first in the nation for a new type of tap-to-pay fraud.

Responding to questions about what makes this scheme so remarkable, Knox County said that while it appears the fraudsters are simply buying gift cards, in fact they are using multiple transactions to purchase various gift cards and are plying their scam from state to state.

“These offenders have been traveling nationwide, using stolen credit card information to purchase gift cards and launder funds,” Knox County Chief Deputy Bernie Lyon wrote. “During Monday’s operation, we recovered gift cards valued at over $23,000, all bought with unsuspecting victims’ information.”

Asked for specifics about the mobile devices seized from the suspects, Lyon said “tap-to-pay fraud involves a group utilizing Android phones to conduct Apple Pay transactions utilizing stolen or compromised credit/debit card information.”

Lyon declined to offer additional specifics about the mechanics of the scam, citing an ongoing investigation.

Ford Merrill works in security research at SecAlliance, a CSIS Security Group company. Merrill said there aren’t many valid use cases for Android phones to transmit Apple Pay transactions. That is, he said, unless they are running a custom Android app that KrebsOnSecurity wrote about last month as part of a deep dive into the operations of China-based phishing cartels that are breathing new life into the payment card fraud industry (a.k.a. “carding”).

How are these China-based phishing groups obtaining stolen payment card data and then loading it onto Google and Apple phones? It all starts with phishing.

If you own a mobile phone, the chances are excellent that at some point in the past two years it has received at least one phishing message that spoofs the U.S. Postal Service to supposedly collect some outstanding delivery fee, or an SMS that pretends to be a local toll road operator warning of a delinquent toll fee.

These messages are being sent through sophisticated phishing kits sold by several cybercriminals based in mainland China. And they are not traditional SMS phishing or “smishing” messages, as they bypass the mobile networks entirely. Rather, the missives are sent through the Apple iMessage service and through RCS, the functionally equivalent technology on Google phones.

People who enter their payment card data at one of these sites will be told their financial institution needs to verify the small transaction by sending a one-time passcode to the customer’s mobile device. In reality, that code will be sent by the victim’s financial institution in response to a request by the fraudsters to link the phished card data to a mobile wallet.

If the victim then provides that one-time code, the phishers will link the card data to a new mobile wallet from Apple or Google, loading the wallet onto a mobile phone that the scammers control. These phones are then loaded with multiple stolen wallets (often between 5-10 per device) and sold in bulk to scammers on Telegram.

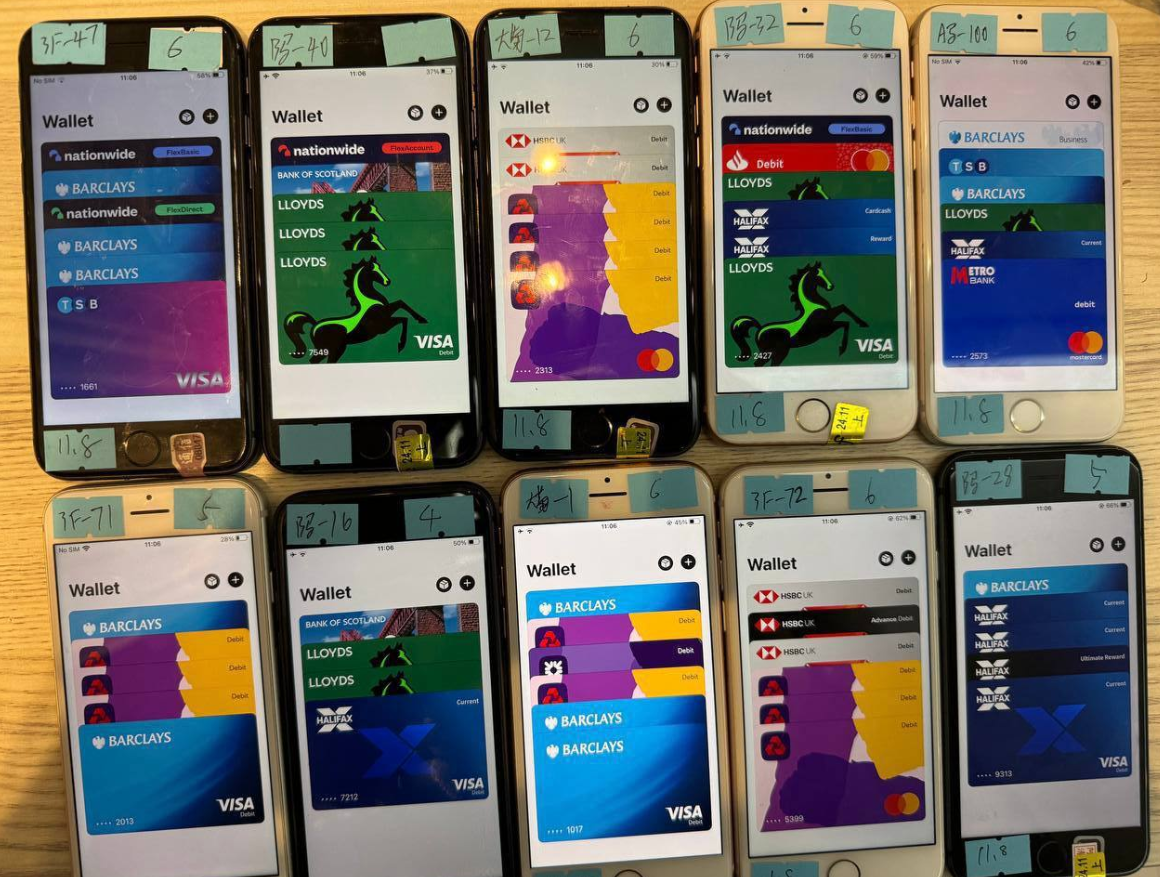

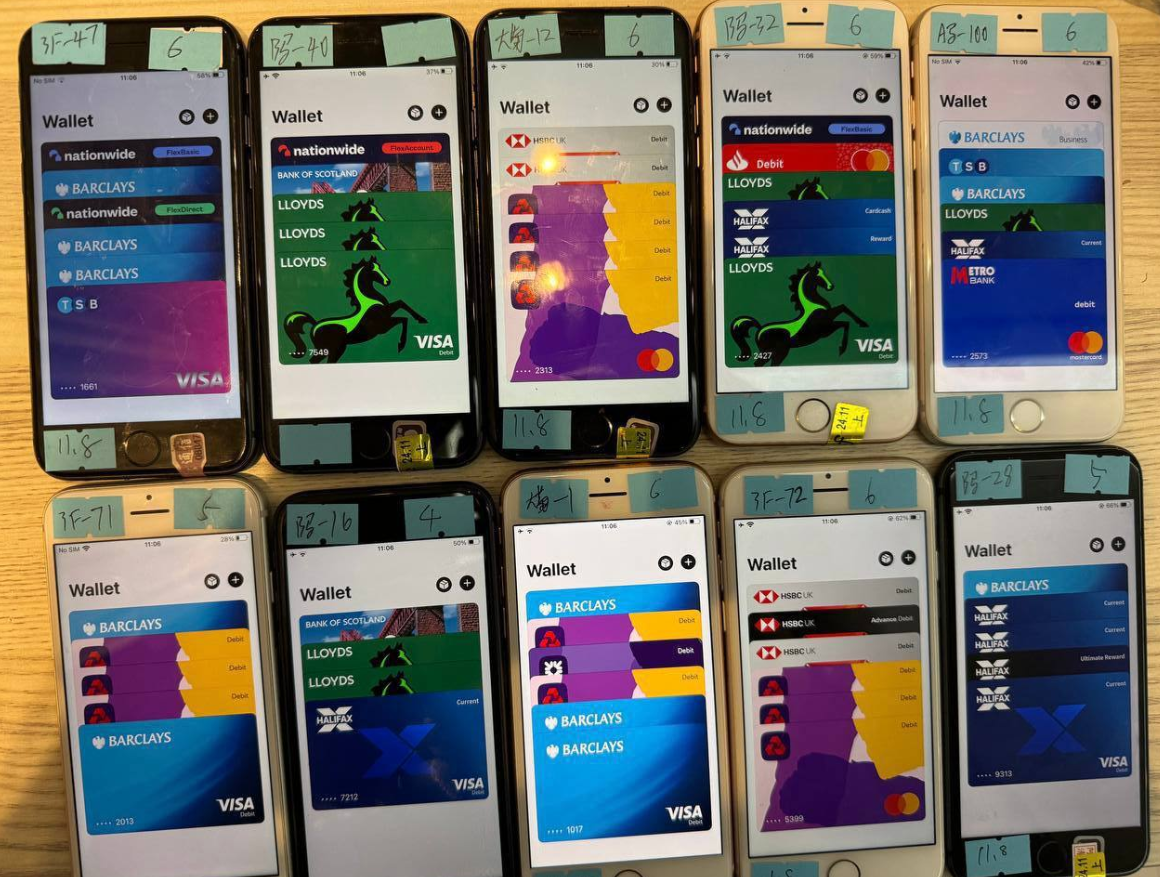

An image from the Telegram channel for a popular Chinese smishing kit vendor shows 10 mobile phones for sale, each loaded with 5-7 digital wallets from different financial institutions.

Merrill found that at least one of the Chinese phishing groups sells an Android app called “Z-NFC” that can relay a valid NFC transaction to anywhere in the world. The user simply waves their phone at a local payment terminal that accepts Apple or Google pay, and the app relays an NFC transaction over the Internet from a phone in China.

“I would be shocked if this wasn’t the NFC relay app,” Merrill said, concerning the arrested suspects in Tennessee.

Merrill said the Z-NFC software can work from anywhere in the world, and that one phishing gang offers the software for $500 a month.

“It can relay both NFC enabled tap-to-pay as well as any digital wallet,” Merrill said. “They even have 24-hour support.”

On March 16, the ABC affiliate in Sacramento, Calif. (ABC10) aired a segment about two Chinese nationals who were arrested after using an app to run stolen credit cards at a local Target store. The news story quoted investigators saying the men were trying to buy gift cards using a mobile app that cycled through more than 80 stolen payment cards.

ABC10 reported that while most of those transactions were declined, the suspects still made off with $1,400 worth of gift cards. After their arrests, both men reportedly admitted that they were being paid $250 a day to conduct the fraudulent transactions.

Merrill said it’s not unusual for fraud groups to advertise this kind of work on social media networks, including TikTok.

A CBS News story on the Sacramento arrests said one of the suspects tried to use 42 separate bank cards, but that 32 were declined. Even so, the man still was reportedly able to spend $855 in the transactions.

Likewise, the suspect’s alleged accomplice tried 48 transactions on separate cards, finding success 11 times and spending $633, CBS reported.
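To put the CBS figures side by side, here is a short Python sketch of the arithmetic. It assumes one attempted transaction per card for the first suspect, which the report implies but does not state outright; the `summarize` helper is purely illustrative.

```python
def summarize(attempts, approved, spent):
    """Compute decline count, decline rate, and average spend per approved attempt."""
    declined = attempts - approved
    return {
        "declined": declined,
        "decline_rate": declined / attempts,
        "avg_per_approved": spent / approved,
    }


# First suspect: 42 cards tried, 32 declined, $855 spent (assumes one attempt per card).
first = summarize(attempts=42, approved=42 - 32, spent=855)

# Alleged accomplice: 48 transactions, 11 successful, $633 spent.
second = summarize(attempts=48, approved=11, spent=633)

total_spent = 855 + 633

for label, stats in (("first", first), ("second", second)):
    print(f"{label}: {stats['declined']} declined "
          f"({stats['decline_rate']:.0%}), "
          f"${stats['avg_per_approved']:.2f} per approved attempt")
print(f"combined spend: ${total_spent}")
```

On these numbers, roughly three out of four attempts failed for each man, and the combined spend of $1,488 is in the same range as the $1,400 in gift cards that ABC10 reported.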

“It’s interesting that so many of the cards were declined,” Merrill said. “One reason this might be is that banks are getting better at detecting this type of fraud. The other could be that the cards were already used and so they were already flagged for fraud even before these guys had a chance to use them. So there could be some element of just sending these guys out to stores to see if it works, and if not they’re on their own.”

Merrill’s investigation into the Telegram sales channels for these China-based phishing gangs shows their phishing sites are actively manned by fraudsters who sit in front of giant racks of Apple and Google phones that are used to send the spam and respond to replies in real time.

In other words, the phishing websites are powered by real human operators as long as new messages are being sent. Merrill said the criminals appear to send only a few dozen messages at a time, likely because completing the scam takes manual work by the human operators in China. After all, most one-time codes used for mobile wallet provisioning are generally only good for a few minutes before they expire.

For more on how these China-based mobile phishing groups operate, check out How Phished Data Turns Into Apple and Google Wallets.

The ashtray says: You’ve been phishing all night.

Carding — the underground business of stealing, selling and swiping stolen payment card data — has long been the dominion of Russia-based hackers. Happily, the broad deployment of more secure chip-based payment cards in the United States has weakened the carding market. But a flurry of innovation from cybercrime groups in China is breathing new life into the carding industry, by turning phished card data into mobile wallets that can be used online and at main street stores.

An image from one Chinese phishing group’s Telegram channel shows various toll road phish kits available.

If you own a mobile phone, the chances are excellent that at some point in the past two years it has received at least one phishing message that spoofs the U.S. Postal Service to supposedly collect some outstanding delivery fee, or an SMS that pretends to be a local toll road operator warning of a delinquent toll fee.

These messages are being sent through sophisticated phishing kits sold by several cybercriminals based in mainland China. And they are not traditional SMS phishing or “smishing” messages, as they bypass the mobile networks entirely. Rather, the missives are sent through the Apple iMessage service and through RCS, the functionally equivalent technology on Google phones.

People who enter their payment card data at one of these sites will be told their financial institution needs to verify the small transaction by sending a one-time passcode to the customer’s mobile device. In reality, that code will be sent by the victim’s financial institution to verify that the user indeed wishes to link their card information to a mobile wallet.

If the victim then provides that one-time code, the phishers will link the card data to a new mobile wallet from Apple or Google, loading the wallet onto a mobile phone that the scammers control.

Ford Merrill works in security research at SecAlliance, a CSIS Security Group company. Merrill has been studying the evolution of several China-based smishing gangs, and found that most of them feature helpful and informative video tutorials in their sales accounts on Telegram. Those videos show the thieves are loading multiple stolen digital wallets on a single mobile device, and then selling those phones in bulk for hundreds of dollars apiece.

“Who says carding is dead?” said Merrill, who presented his findings at the M3AAWG security conference in Lisbon earlier today. “This is the best mag stripe cloning device ever. This threat actor is saying you need to buy at least 10 phones, and they’ll air ship them to you.”

One promotional video shows stacks of milk crates stuffed full of phones for sale. A closer inspection reveals that each phone is affixed with a handwritten notation that typically references the date its mobile wallets were added, the number of wallets on the device, and the initials of the seller.

An image from the Telegram channel for a popular Chinese smishing kit vendor shows 10 mobile phones for sale, each loaded with 4-6 digital wallets from different UK financial institutions.

Merrill said one common way criminal groups in China are cashing out with these stolen mobile wallets involves setting up fake e-commerce businesses on Stripe or Zelle and running transactions through those entities — often for amounts totaling between $100 and $500.

Merrill said that when these phishing groups first began operating in earnest two years ago, they would wait 60 to 90 days before selling the phones or using them for fraud. But these days that waiting period is more like seven to ten days, he said.

“When they first installed this, the actors were very patient,” he said. “Nowadays, they only wait like 10 days before [the wallets] are hit hard and fast.”

Criminals also can cash out mobile wallets by obtaining real point-of-sale terminals and using tap-to-pay on phone after phone. But they also offer a more cutting-edge mobile fraud technology: Merrill found that at least one of the Chinese phishing groups sells an Android app called “ZNFC” that can relay a valid NFC transaction to anywhere in the world. The user simply waves their phone at a local payment terminal that accepts Apple or Google pay, and the app relays an NFC transaction over the Internet from a phone in China.

“The software can work from anywhere in the world,” Merrill said. “These guys provide the software for $500 a month, and it can relay both NFC enabled tap-to-pay as well as any digital wallet. They even have 24-hour support.”

The rise of so-called “ghost tap” mobile software was first documented in November 2024 by security experts at ThreatFabric. Andy Chandler, the company’s chief commercial officer, said their researchers have since identified a number of criminal groups from different regions of the world latching on to this scheme.

Chandler said those include organized crime gangs in Europe that are using similar mobile wallet and NFC attacks to take money out of ATMs made to work with smartphones.

“No one is talking about it, but we’re now seeing ten different methodologies using the same modus operandi, and none of them are doing it the same,” Chandler said. “This is much bigger than the banks are prepared to say.”

A November 2024 story in the Singapore daily The Straits Times reported authorities there arrested three foreign men who were recruited in their home countries via social messaging platforms, and given ghost tap apps with which to purchase expensive items from retailers, including mobile phones, jewelry, and gold bars.

“Since Nov 4, at least 10 victims who had fallen for e-commerce scams have reported unauthorised transactions totaling more than $100,000 on their credit cards for purchases such as electronic products, like iPhones and chargers, and jewelry in Singapore,” The Straits Times wrote, noting that in another case with a similar modus operandi, the police arrested a Malaysian man and woman on Nov 8.

Three individuals charged with using ghost tap software at an electronics store in Singapore. Image: The Straits Times.

According to Merrill, the phishing pages that spoof the USPS and various toll road operators are powered by several innovations designed to maximize the extraction of victim data.

For example, a would-be smishing victim might enter their personal and financial information, but then decide the whole thing is a scam before actually submitting the data. In this case, anything typed into the data fields of the phishing page will be captured in real time, regardless of whether the visitor actually clicks the “submit” button.

Merrill said people who submit payment card data to these phishing sites often are then told their card can’t be processed, and urged to use a different card. This technique, he said, sometimes allows the phishers to steal more than one mobile wallet per victim.

Many phishing websites expose victim data by storing the stolen information directly on the phishing domain. But Merrill said these Chinese phishing kits will forward all victim data to a back-end database operated by the phishing kit vendors. That way, even when the smishing sites get taken down for fraud, the stolen data is still safe and secure.

Another important innovation is the use of mass-created Apple and Google user accounts through which these phishers send their spam messages. One of the Chinese phishing groups posted images on their Telegram sales channels showing how these robot Apple and Google accounts are loaded onto Apple and Google phones, and arranged snugly next to each other in an expansive, multi-tiered rack that sits directly in front of the phishing service operator.

The ashtray says: You’ve been phishing all night.

In other words, the smishing websites are powered by real human operators as long as new messages are being sent. Merrill said the criminals appear to send only a few dozen messages at a time, likely because completing the scam takes manual work by the human operators in China. After all, most one-time codes used for mobile wallet provisioning are generally only good for a few minutes before they expire.

Notably, none of the phishing sites spoofing the toll operators or postal services will load in a regular Web browser; they will only render if they detect that a visitor is coming from a mobile device.

“One of the reasons they want you to be on a mobile device is they want you to be on the same device that is going to receive the one-time code,” Merrill said. “They also want to minimize the chances you will leave. And if they want to get that mobile tokenization and grab your one-time code, they need a live operator.”

Merrill found the Chinese phishing kits feature another innovation that makes it simple for customers to turn stolen card details into a mobile wallet: They programmatically take the card data supplied by the phishing victim and convert it into a digital image of a real payment card that matches that victim’s financial institution. That way, attempting to enroll a stolen card into Apple Pay, for example, becomes as easy as scanning the fabricated card image with an iPhone.

An ad from a Chinese SMS phishing group’s Telegram channel showing how the service converts stolen card data into an image of the stolen card.

“The phone isn’t smart enough to know whether it’s a real card or just an image,” Merrill said. “So it scans the card into Apple Pay, which says okay we need to verify that you’re the owner of the card by sending a one-time code.”

How profitable are these mobile phishing kits? The best guess so far comes from data gathered by other security researchers who’ve been tracking these advanced Chinese phishing vendors.

In August 2023, the security firm Resecurity discovered a vulnerability in one popular Chinese phish kit vendor’s platform that exposed the personal and financial data of phishing victims. Resecurity dubbed the group the Smishing Triad, and found the gang had harvested 108,044 payment cards across 31 phishing domains (3,485 cards per domain).

In August 2024, security researcher Grant Smith gave a presentation at the DEFCON security conference about tracking down the Smishing Triad after scammers spoofing the U.S. Postal Service duped his wife. By identifying a different vulnerability in the gang’s phishing kit, Smith said he was able to see that people entered 438,669 unique credit cards in 1,133 phishing domains (387 cards per domain).

Based on his research, Merrill said it’s reasonable to expect between $100 and $500 in losses on each card that is turned into a mobile wallet. Merrill said they observed nearly 33,000 unique domains tied to these Chinese smishing groups during the year between the publication of Resecurity’s research and Smith’s DEFCON talk.

Using a median number of 1,935 cards per domain and a conservative loss of $250 per card, that comes out to about $15 billion in fraudulent charges over a year.
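The back-of-the-envelope estimate above can be reproduced in a few lines of Python. This is only a sketch of the arithmetic using the figures quoted in this story; the variable names are illustrative, and with just two data points the “median” is simply their midpoint.

```python
# Cards per domain from the two research efforts cited above.
resecurity_rate = 108_044 / 31      # Resecurity, August 2023
smith_rate = 438_669 / 1_133        # Grant Smith, DEFCON 2024

# With only two observations, the median is the midpoint of the pair.
cards_per_domain = (resecurity_rate + smith_rate) / 2

domains = 33_000        # "nearly 33,000" unique domains, used here as an upper bound
loss_per_card = 250     # conservative figure inside Merrill's $100 to $500 range

estimated_loss = cards_per_domain * loss_per_card * domains

print(f"cards per domain: {cards_per_domain:,.0f}")
print(f"estimated annual loss: ${estimated_loss / 1e9:.1f} billion")
```

Plugging in the full 33,000 domains gives a figure just under $16 billion; because the actual domain count was somewhat lower, the result lands in the same neighborhood as the roughly $15 billion cited above.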

Merrill was reluctant to say whether he’d identified additional security vulnerabilities in any of the phishing kits sold by the Chinese groups, noting that the phishers quickly fixed the vulnerabilities that were detailed publicly by Resecurity and Smith.

Adoption of touchless payments took off in the United States after the Coronavirus pandemic emerged, and many financial institutions in the United States were eager to make it simple for customers to link payment cards to mobile wallets. Thus, the authentication requirement for doing so defaulted to sending the customer a one-time code via SMS.

Experts say the continued reliance on one-time codes for onboarding mobile wallets has fostered this new wave of carding. KrebsOnSecurity interviewed a security executive from a large European financial institution who spoke on condition of anonymity because they were not authorized to speak to the press.

That expert said the lag between the phishing of victim card data and its eventual use for fraud has left many financial institutions struggling to correlate the causes of their losses.

“That’s part of why the industry as a whole has been caught by surprise,” the expert said. “A lot of people are asking, how this is possible now that we’ve tokenized a plaintext process. We’ve never seen the volume of sending and people responding that we’re seeing with these phishers.”

To improve the security of digital wallet provisioning, some banks in Europe and Asia require customers to log in to the bank’s mobile app before they can link a digital wallet to their device.

Addressing the ghost tap threat may require updates to contactless payment terminals, to better identify NFC transactions that are being relayed from another device. But experts say it’s unrealistic to expect retailers will be eager to replace existing payment terminals before their expected lifespans expire.

And of course Apple and Google have an increased role to play as well, given that their accounts are being created en masse and used to blast out these smishing messages. Both companies could easily tell which of their devices suddenly have 7-10 different mobile wallets added from 7-10 different people around the world. They could also recommend that financial institutions use more secure authentication methods for mobile wallet provisioning.
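As a rough illustration of the kind of check described above, the following Python sketch flags devices that have had wallets provisioned for many different cardholders. Everything about the data is an assumption: the `(device_id, cardholder_id)` event shape, the function name, and the default threshold of seven (borrowed from the 7-10 figure above) are hypothetical, and neither Apple nor Google has described how their systems actually work.

```python
from collections import defaultdict


def flag_suspicious_devices(provisioning_events, threshold=7):
    """Return device IDs that hold wallets for at least `threshold` distinct cardholders.

    `provisioning_events` is an iterable of (device_id, cardholder_id) pairs,
    a hypothetical record format chosen for this example.
    """
    cardholders_by_device = defaultdict(set)
    for device_id, cardholder_id in provisioning_events:
        cardholders_by_device[device_id].add(cardholder_id)

    return sorted(
        device_id
        for device_id, holders in cardholders_by_device.items()
        if len(holders) >= threshold
    )


# Example: one phone loaded with seven strangers' cards, one ordinary family phone.
events = [("phone-A", f"victim-{i}") for i in range(7)]
events += [("phone-B", "alice"), ("phone-B", "bob"), ("phone-B", "alice")]

print(flag_suspicious_devices(events))
```

Counting distinct cardholders rather than raw provisioning events keeps a person who re-adds their own cards several times from being flagged. A real system would presumably also weigh factors like timing and geography, which this sketch ignores.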

Neither Apple nor Google responded to requests for comment on this story.

Microsoft today issued security updates to fix at least 56 vulnerabilities in its Windows operating systems and supported software, including two zero-day flaws that are being actively exploited.

All supported Windows operating systems will receive an update this month for a buffer overflow vulnerability in the Windows Ancillary Function Driver for WinSock that carries the catchy name CVE-2025-21418. This patch should be a priority for enterprises, as Microsoft says it is being exploited, has low attack complexity, and no requirements for user interaction.

Tenable senior staff research engineer Satnam Narang noted that since 2022, there have been nine elevation of privilege vulnerabilities in this same Windows component — three each year — including one in 2024 that was exploited in the wild as a zero day (CVE-2024-38193).

“CVE-2024-38193 was exploited by the North Korean APT group known as Lazarus Group to implant a new version of the FudModule rootkit in order to maintain persistence and stealth on compromised systems,” Narang said. “At this time, it is unclear if CVE-2025-21418 was also exploited by Lazarus Group.”

The other zero-day, CVE-2025-21391, is an elevation of privilege vulnerability in Windows Storage that could be used to delete files on a targeted system. Microsoft’s advisory on this bug references something called “CWE-59: Improper Link Resolution Before File Access,” says no user interaction is required, and that the attack complexity is low.

Adam Barnett, lead software engineer at Rapid7, said although the advisory provides scant detail, and even offers some vague reassurance that ‘an attacker would only be able to delete targeted files on a system,’ it would be a mistake to assume that the impact of deleting arbitrary files would be limited to data loss or denial of service.

“As long ago as 2022, ZDI researchers set out how a motivated attacker could parlay arbitrary file deletion into full SYSTEM access using techniques which also involve creative misuse of symbolic links,” Barnett wrote.

One vulnerability patched today that was publicly disclosed earlier is CVE-2025-21377, another weakness that could allow an attacker to elevate their privileges on a vulnerable Windows system. Specifically, this is yet another Windows flaw that can be used to steal NTLMv2 hashes — essentially allowing an attacker to authenticate as the targeted user without having to log in.

According to Microsoft, minimal user interaction with a malicious file is needed to exploit CVE-2025-21377, including selecting, inspecting or “performing an action other than opening or executing the file.”

“This trademark linguistic ducking and weaving may be Microsoft’s way of saying ‘if we told you any more, we’d give the game away,’” Barnett said. “Accordingly, Microsoft assesses exploitation as more likely.”

The SANS Internet Storm Center has a handy list of all the Microsoft patches released today, indexed by severity. Windows enterprise administrators would do well to keep an eye on askwoody.com, which often has the scoop on any patches causing problems.

It’s getting harder to buy Windows software that isn’t also bundled with Microsoft’s flagship Copilot artificial intelligence (AI) feature. Last month Microsoft started bundling Copilot with Microsoft Office 365, which Redmond has since rebranded as “Microsoft 365 Copilot.” Ostensibly to offset the costs of its substantial AI investments, Microsoft also raised prices by 22 to 30 percent for upcoming license renewals and new subscribers.

Office-watch.com writes that existing Office 365 users who are paying an annual cloud license do have the option of “Microsoft 365 Classic,” an AI-free subscription at a lower price, but that many customers are not offered the option until they attempt to cancel their existing Office subscription.

In other security patch news, Apple has shipped iOS 18.3.1, which fixes a zero day vulnerability (CVE-2025-24200) that is showing up in attacks.

Adobe has issued security updates that fix a total of 45 vulnerabilities across InDesign, Commerce, Substance 3D Stager, InCopy, Illustrator, Substance 3D Designer and Photoshop Elements.

Chris Goettl at Ivanti notes that Google Chrome is shipping an update today which will trigger updates for Chromium based browsers including Microsoft Edge, so be on the lookout for Chrome and Edge updates as we proceed through the week.

New mobile apps from the Chinese artificial intelligence (AI) company DeepSeek have remained among the top three “free” downloads for Apple and Google devices since their debut on Jan. 25, 2025. But experts caution that many of DeepSeek’s design choices — such as using hard-coded encryption keys, and sending unencrypted user and device data to Chinese companies — introduce a number of glaring security and privacy risks.

Public interest in the DeepSeek AI chat apps swelled following widespread media reports that the upstart Chinese AI firm had managed to match the abilities of cutting-edge chatbots while using a fraction of the specialized computer chips that leading AI companies rely on. As of this writing, DeepSeek is the third most-downloaded “free” app on the Apple store, and #1 on Google Play.

DeepSeek’s rapid rise caught the attention of the mobile security firm NowSecure, a Chicago-based company that helps clients screen mobile apps for security and privacy threats. In a teardown of the DeepSeek app published today, NowSecure urged organizations to remove the DeepSeek iOS mobile app from their environments, citing security concerns.

NowSecure founder Andrew Hoog said they haven’t yet concluded an in-depth analysis of the DeepSeek app for Android devices, but that there is little reason to believe its basic design would be functionally much different.

Hoog told KrebsOnSecurity there were a number of qualities about the DeepSeek iOS app that suggest the presence of deep-seated security and privacy risks. For starters, he said, the app collects an awful lot of data about the user’s device.

“They are doing some very interesting things that are on the edge of advanced device fingerprinting,” Hoog said, noting that one property of the app tracks the device’s name — which for many iOS devices defaults to the customer’s name followed by the type of iOS device.

The device information shared, combined with the user’s Internet address and data gathered from mobile advertising companies, could be used to deanonymize users of the DeepSeek iOS app, NowSecure warned. The report notes that DeepSeek communicates with Volcengine, a cloud platform developed by ByteDance (the makers of TikTok), although NowSecure said it wasn’t clear if the data is just leveraging ByteDance’s digital transformation cloud service or if the declared information share extends further between the two companies.

Perhaps more concerning, NowSecure said the iOS app transmits device information “in the clear,” without any encryption to encapsulate the data. This means the data being handled by the app could be intercepted, read, and even modified by anyone who has access to any of the networks that carry the app’s traffic.

“The DeepSeek iOS app globally disables App Transport Security (ATS) which is an iOS platform level protection that prevents sensitive data from being sent over unencrypted channels,” the report observed. “Since this protection is disabled, the app can (and does) send unencrypted data over the internet.”
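For readers who want to see what a globally disabled ATS setting looks like in practice, here is a minimal sketch. On iOS, ATS exceptions are declared in an app's `Info.plist` under the `NSAppTransportSecurity` dictionary, and setting `NSAllowsArbitraryLoads` to true turns the protection off for all connections. The plist fragment and the `ats_globally_disabled` helper below are hypothetical illustrations, not material from the NowSecure report or the DeepSeek app.

```python
import plistlib


def ats_globally_disabled(info_plist_bytes: bytes) -> bool:
    """Return True if the plist sets NSAllowsArbitraryLoads to true."""
    info = plistlib.loads(info_plist_bytes)
    # A missing NSAppTransportSecurity dictionary means ATS defaults apply.
    ats = info.get("NSAppTransportSecurity", {})
    return bool(ats.get("NSAllowsArbitraryLoads", False))


# Hypothetical Info.plist fragment written for this example only.
SAMPLE_PLIST = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
    <key>CFBundleName</key>
    <string>ExampleApp</string>
    <key>NSAppTransportSecurity</key>
    <dict>
        <key>NSAllowsArbitraryLoads</key>
        <true/>
    </dict>
</dict>
</plist>
"""

if __name__ == "__main__":
    print(ats_globally_disabled(SAMPLE_PLIST))
```

A check like this only flags the global switch. Per-domain exceptions live under other keys in the same dictionary and would need their own review.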

Hoog said the app does selectively encrypt portions of the responses coming from DeepSeek servers. But they also found it uses an insecure and now deprecated encryption algorithm called 3DES (aka Triple DES), and that the developers had hard-coded the encryption key. That means the cryptographic key needed to decipher those data fields can be extracted from the app itself.
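To illustrate why a hard-coded key offers little protection, the sketch below scans a blob of bytes for runs of printable ASCII, much as the Unix `strings` utility does. It assumes the key is stored as a plain ASCII literal, which may not match how any particular app stores it. The sample blob and the 24-character key inside it are invented for this example.

```python
import re


def printable_strings(blob: bytes, min_len: int = 16) -> list[str]:
    """Return runs of printable ASCII at least min_len bytes long."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(blob)]


# Hypothetical binary contents: a made-up 24-byte key (the length of a
# three-key Triple DES key) surrounded by non-printable bytes.
SAMPLE_BLOB = (
    b"\x00\x01\xfe"
    + b"hypothetical-3des-key-24"
    + b"\x00\xff\x10"
    + b"short"
    + b"\x00"
)

if __name__ == "__main__":
    for candidate in printable_strings(SAMPLE_BLOB):
        print(candidate)
```

Anyone holding a copy of the app can run the same kind of scan, which is why a key shipped inside the binary cannot be treated as secret.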

There were other, less alarming security and privacy issues highlighted in the report, but Hoog said he’s confident there are additional, unseen security concerns lurking within the app’s code.

“When we see people exhibit really simplistic coding errors, as you dig deeper there are usually a lot more issues,” Hoog said. “There is virtually no priority around security or privacy. Whether cultural, or mandated by China, or a witting choice, taken together they point to significant lapse in security and privacy controls, and that puts companies at risk.”

Apparently, plenty of others share this view. Axios reported on January 30 that U.S. congressional offices are being warned not to use the app.

“[T]hreat actors are already exploiting DeepSeek to deliver malicious software and infect devices,” read the notice from the chief administrative officer for the House of Representatives. “To mitigate these risks, the House has taken security measures to restrict DeepSeek’s functionality on all House-issued devices.”

TechCrunch reports that Italy and Taiwan have already moved to ban DeepSeek over security concerns. Bloomberg writes that the Pentagon has blocked access to DeepSeek. CNBC says NASA also banned employees from using the service, as did the U.S. Navy.

Beyond security concerns tied to the DeepSeek iOS app, there are indications the Chinese AI company may be playing fast and loose with the data that it collects from and about users. On January 29, researchers at Wiz said they discovered a publicly accessible database linked to DeepSeek that exposed “a significant volume of chat history, backend data and sensitive information, including log streams, API secrets, and operational details.”

“More critically, the exposure allowed for full database control and potential privilege escalation within the DeepSeek environment, without any authentication or defense mechanism to the outside world,” Wiz wrote. [Full disclosure: Wiz is currently an advertiser on this website.]

KrebsOnSecurity sought comment on the report from DeepSeek and from Apple. This story will be updated with any substantive replies.

Private tech companies gather tremendous amounts of user data. These companies can afford to let you use social media platforms free of charge because it’s paid for by your data, attention, and time.

Big tech derives most of its profits by selling your attention to advertisers — a well-known business model. Various documentaries (like Netflix’s “The Social Dilemma”) have tried to get to the bottom of the complex algorithms that big tech companies employ to mine and analyze user data for the benefit of third-party advertisers.

Tech companies benefit from personal info by being able to provide personalized ads. When you click “yes” at the end of a terms and conditions agreement found on some web pages, you might be allowing the companies to collect the following data:

For someone unfamiliar with privacy issues, it is important to understand the extent of big tech’s tracking and data collection. After these companies collect data, all this info can be supplied to third-party businesses or used to improve user experience.

The problem with this is that big tech has blurred the line between collecting customer data and violating user privacy in some cases. While tracking what content you interact with can be justified under the guise of personalizing the content you see, big tech platforms have been known to go too far. Prominent social networks like Facebook and LinkedIn have faced legal trouble for accessing personal user data like private messages and saved photos.

The info you provide helps build an accurate character profile and turns it into knowledge that gives actionable insights to businesses. Private data usage can be classified into three cases: selling it to data brokers, using it to improve marketing, or enhancing customer experience.

To sell your info to data brokers

Along with big data, another industry has seen rapid growth: data brokers. Data brokers buy, analyze, and package your data. Companies that collect large amounts of data on their users stand to profit from this service. Selling data to brokers is an important revenue stream for big tech companies.

Advertisers and businesses benefit from increased info on their consumers, creating a high demand for your info. The problem here is that companies like Facebook and Alphabet (Google’s parent company) have been known to mine massive amounts of user data for the sake of their advertisers.

To personalize marketing efforts

Marketing can be highly personalized thanks to the availability of large amounts of consumer data. Tracking your response to marketing campaigns can help businesses alter or improve certain aspects of their campaign to drive better results.

The problem is that most AI-based algorithms are incapable of assessing when they should stop collecting or using your info. Past a certain point, users risk being subjected to a constant stream of intrusive ads and marketing campaigns they never consented to.

To cater to the customer experience

Analyzing consumer behavior through reviews, feedback, and recommendations can help improve customer experience. Businesses have access to various facets of data that can be analyzed to show them how to meet consumer demands. This might help improve any part of a consumer’s interaction with the company, from designing special offers and discounts to improving customer relationships.

For most social media platforms, the goal is to curate a personalized feed that appeals to users and keeps them on the app longer. When left unmonitored, the powerful algorithms behind these social media platforms can repeatedly subject you to the same kind of content from different creators.

Here are the big tech companies that collect and mine the most user data.

Users need a comprehensive data privacy solution to tackle the rampant, large-scale data mining carried out by big tech platforms. While targeted advertisements and easily found items are beneficial, many of these companies collect and mine user data through several channels simultaneously and exploit that data in several ways.

It’s important to ensure your personal info is protected. Protection solutions like McAfee’s Personal Data Cleanup feature can help. It scours the web for traces of your personal info and helps remove them to protect your privacy online.

McAfee+ provides antivirus software for all your digital devices and a secure VPN connection to avoid exposure to malicious third parties while browsing the internet. Our Identity Monitoring and personal data removal solutions further remove gaps in your devices’ security systems.

With our data protection and custom guidance (complete with a protection score for each platform and tips to keep you safer), you can be sure that your internet identity is protected.

The post What Personal Data Do Companies Track? appeared first on McAfee Blog.

Not long ago, the ability to digitally track someone’s daily movements just by knowing their home address, employer, or place of worship was considered a dangerous power that should remain only within the purview of nation states. But a new lawsuit in a likely constitutional battle over a New Jersey privacy law shows that anyone can now access this capability, thanks to a proliferation of commercial services that hoover up the digital exhaust emitted by widely-used mobile apps and websites.

Image: Shutterstock, Arthimides.

Delaware-based Atlas Data Privacy Corp. helps its users remove their personal information from the clutches of consumer data brokers, and from people-search services online. Backed by millions of dollars in litigation financing, Atlas so far this year has sued 151 consumer data brokers on behalf of a class that includes more than 20,000 New Jersey law enforcement officers who are signed up for Atlas services.

Atlas alleges all of these data brokers have ignored repeated warnings that they are violating Daniel’s Law, a New Jersey statute allowing law enforcement, government personnel, judges and their families to have their information completely removed from commercial data brokers. Daniel’s Law was passed in 2020 after the death of 20-year-old Daniel Anderl, who was killed in a violent attack targeting a federal judge — his mother.

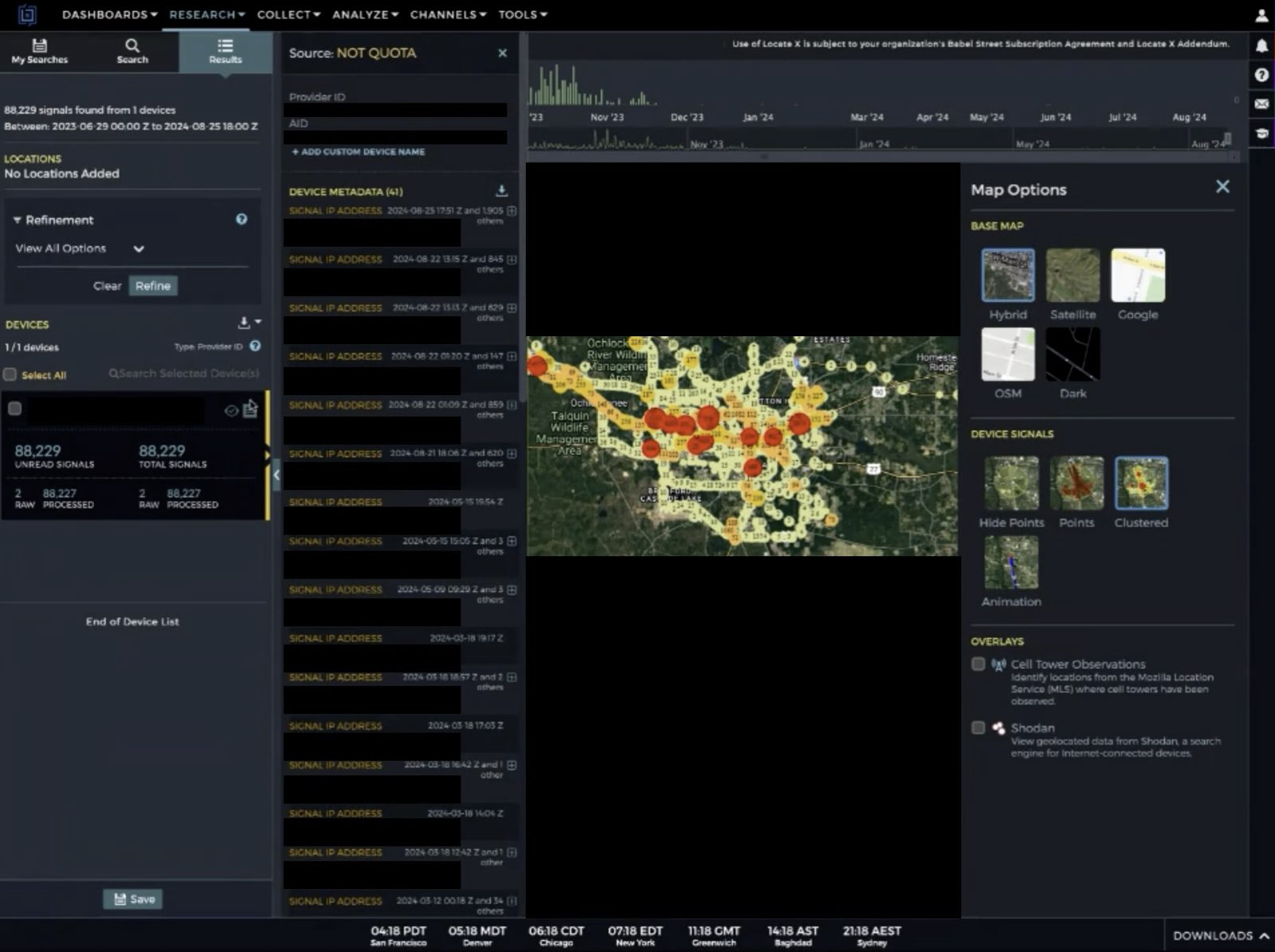

Last week, Atlas invoked Daniel’s Law in a lawsuit (PDF) against Babel Street, a little-known technology company incorporated in Reston, Va. Babel Street’s core product allows customers to draw a digital polygon around nearly any location on a map of the world, and view a slightly dated (by a few days) time-lapse history of the mobile devices seen coming in and out of the specified area.

Babel Street’s LocateX platform also allows customers to track individual mobile users by their Mobile Advertising ID or MAID, a unique, alphanumeric identifier built into all Google Android and Apple mobile devices.

Babel Street can offer this tracking capability by consuming location data and other identifying information that is collected by many websites and broadcast to dozens and sometimes hundreds of ad networks that may wish to bid on showing their ad to a particular user.

This image, taken from a video recording Atlas made of its private investigator using Babel Street, shows all of the unique mobile IDs seen over time at a mosque in Dearborn, Michigan. Each red dot represents one mobile device.

In an interview, Atlas said a private investigator they hired was offered a free trial of Babel Street, which the investigator was able to use to determine the home address and daily movements of mobile devices belonging to multiple New Jersey police officers whose families have already faced significant harassment and death threats.

Atlas said the investigator encountered Babel Street while testing hundreds of data broker tools and services to see if personal information on its users was being sold. They soon discovered Babel Street also bundles people-search services with its platform, to make it easier for customers to zero in on a specific device.

The investigator contacted Babel Street about possibly buying home addresses in certain areas of New Jersey. After listening to a sales pitch for Babel Street and expressing interest, the investigator was told Babel Street only offers their service to the government or to “contractors of the government.”

“The investigator (truthfully) mentioned that he was contemplating some government contract work in the future and was told by the Babel Street salesperson that ‘that’s good enough’ and that ‘they don’t actually check,’” Atlas shared in an email with reporters.

KrebsOnSecurity was one of five media outlets invited to review screen recordings that Atlas made while its investigator used a two-week trial version of Babel Street’s LocateX service. References and links to reporting by other publications, including 404 Media, Haaretz, NOTUS, and The New York Times, will appear throughout this story.